
- Strategy & Tactics ... Project Management ... Best Practices ... Checklists ... Project Check-in ... Evaluation ... Measures

- AI Solver ... Algorithms ... Administration ... Model Search ... Discriminative vs. Generative ... Train, Validate, and Test

- Risk, Compliance and Regulation ... Ethics ... Privacy ... Law ... AI Governance ... AI Verification and Validation

- Artificial General Intelligence (AGI) to Singularity ... Curious Reasoning ... Emergence ... Moonshots ... Explainable AI ... Automated Learning

- Cybersecurity: Evaluating & Selling

- Data Science ... Governance ... Preprocessing ... Exploration ... Interoperability ... Master Data Management (MDM) ... Bias and Variances ... Benchmarks ... Datasets

- Automated Scoring

- Development ... Notebooks ... AI Pair Programming ... Codeless ... Hugging Face ... AIOps/MLOps ... AIaaS/MLaaS

- Machine Learning: The High Interest Credit Card of Technical Debt | D. Sculley, G. Holt, D. Golovin, E. Davydov, T. Phillips, D. Ebner, V. Chaudhary, and M. Young - Google Research

- Hidden Technical Debt in Machine Learning Systems | D. Sculley, G. Holt, D. Golovin, E. Davydov, T. Phillips, D. Ebner, V. Chaudhary, M. Young, J. Crespo, and D. Dennison - Google Research

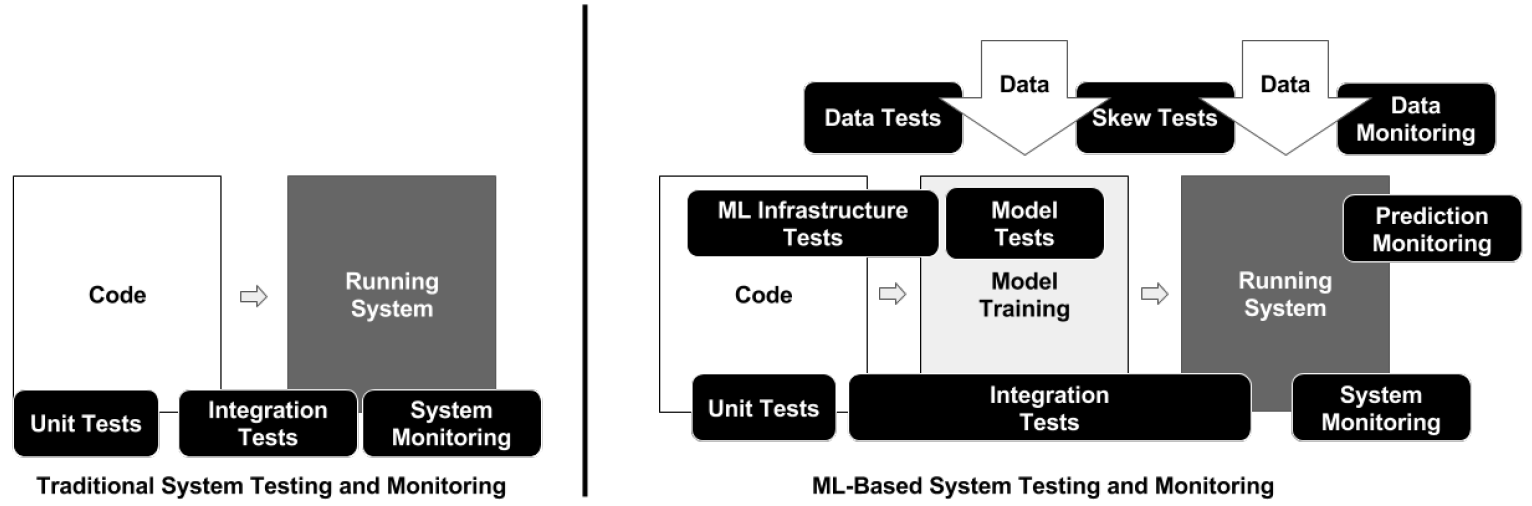

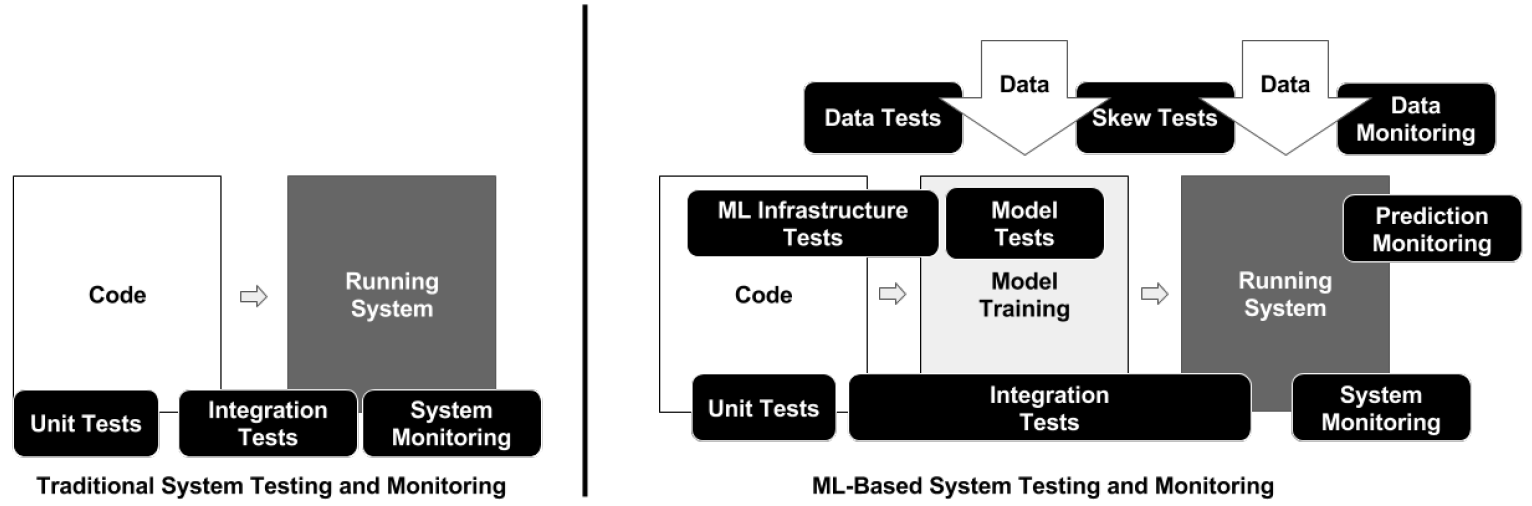

Creating reliable, production-level machine learning systems brings on a host of concerns not found in small toy examples or even large offline research experiments. Testing and monitoring are key considerations for ensuring the production-readiness of an ML system, and for reducing technical debt of ML systems. But it can be difficult to formulate specific tests, given that the actual prediction behavior of any given model is difficult to specify a priori. In this paper, we present 28 specific tests and monitoring needs, drawn from experience with a wide range of production ML systems to help quantify these issues and present an easy to follow road-map to improve production readiness and pay down ML technical debt.

The ML Test Score: A Rubric for ML Production Readiness and Technical Debt Reduction | E. Breck, S. Cai, E. Nielsen, M. Salib, and D. Sculley - Google Research

Full Stack Deep Learning

ML Test Score (2) - Testing & Deployment - Full Stack Deep Learning

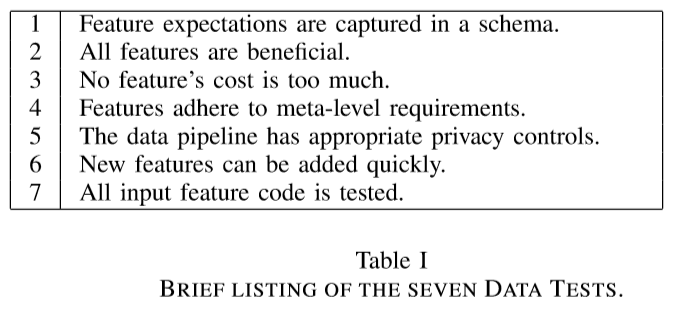

How can you test your machine learning system? A Rubric for Production Readiness and Technical Debt Reduction is an exhaustive framework/checklist from practitioners at Google.

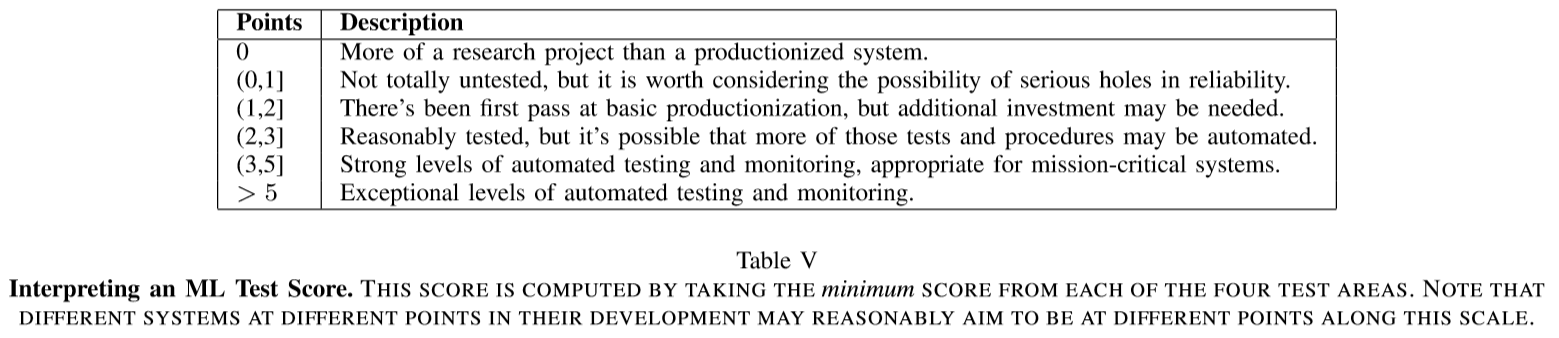

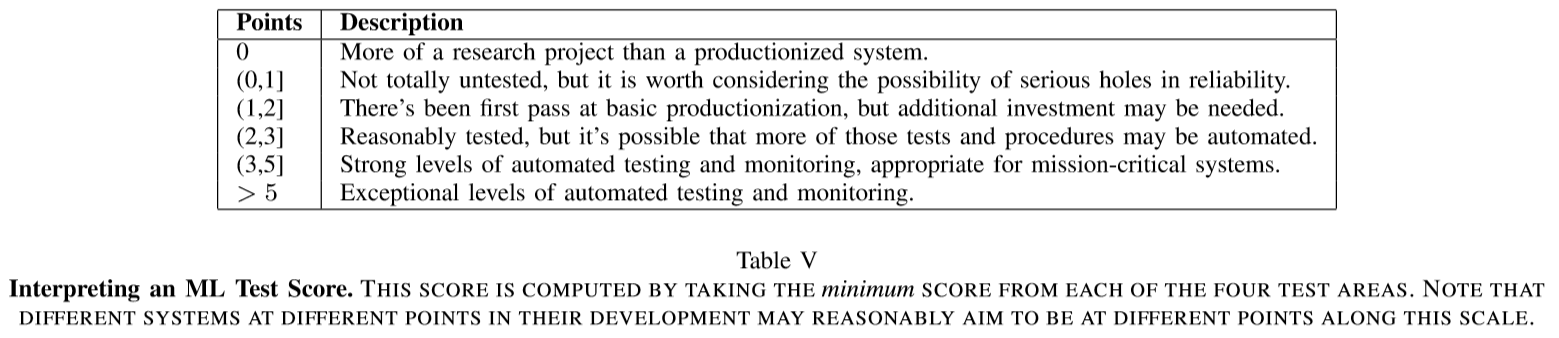

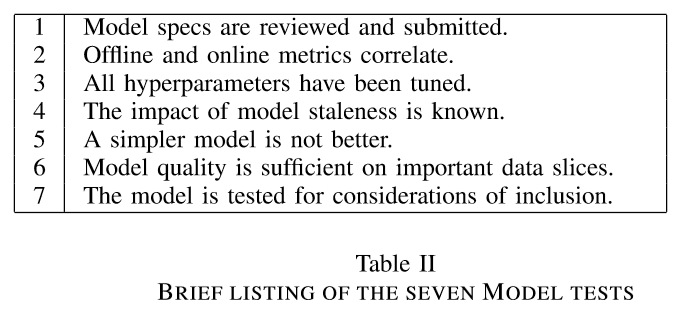

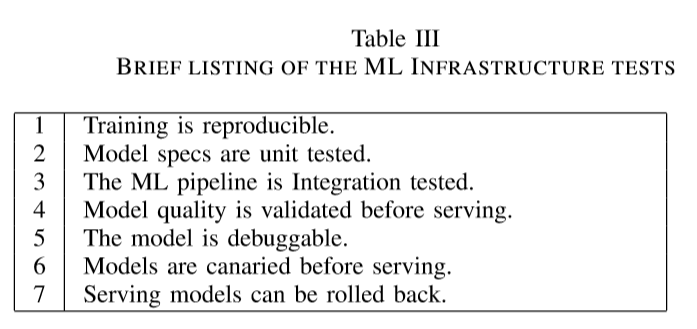

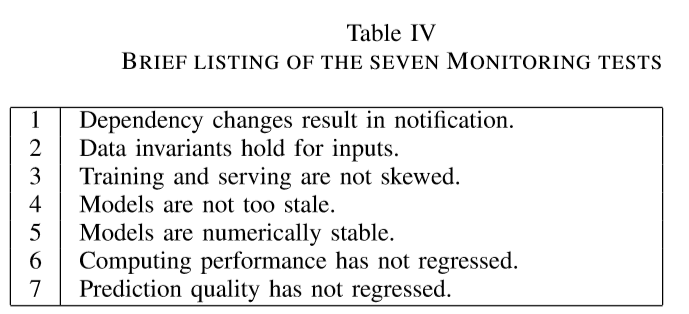

- The paper presents a rubric as a set of 28 actionable tests and offers a scoring system to measure how ready for production a given machine learning system is. These are categorized into 4 sections: (1) data tests, (2) model tests, (3) ML infrastructure tests, and (4) monitoring tests.
- The scoring system provides a vector for incentivizing ML system developers to achieve stable levels of reliability by providing a clear indicator of readiness and clear guidelines for how to improve.
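
A minimal sketch of how such a score could be tallied is shown below. It assumes the scoring rule as commonly summarized from the ML Test Score paper: half a point for a test that is run manually with documented results, a full point for a test that is automated and run repeatedly, points summed within each of the four sections, and the final score taken as the minimum across sections. The names `Status`, `section_scores`, and `ml_test_score`, and the sample results, are hypothetical and used only for illustration.

```python
from enum import Enum
from typing import Dict, List


class Status(Enum):
    """Per-test status; the point values are an assumed reading of the rubric."""
    NOT_DONE = 0.0
    MANUAL = 0.5      # run by hand, results documented
    AUTOMATED = 1.0   # run automatically on a repeated basis


# The four sections named in the rubric.
SECTIONS = ("data", "model", "infrastructure", "monitoring")


def section_scores(results: Dict[str, List[Status]]) -> Dict[str, float]:
    """Sum the points earned in each section (missing sections score 0)."""
    return {
        section: sum(status.value for status in results.get(section, []))
        for section in SECTIONS
    }


def ml_test_score(results: Dict[str, List[Status]]) -> float:
    """Final score: the minimum section score, so the weakest area dominates."""
    return min(section_scores(results).values())


# Hypothetical assessment of one system, 7 tests per section (28 total).
example = {
    "data": [Status.AUTOMATED] * 3 + [Status.MANUAL] * 2 + [Status.NOT_DONE] * 2,
    "model": [Status.MANUAL] * 4 + [Status.NOT_DONE] * 3,
    "infrastructure": [Status.AUTOMATED] * 5 + [Status.NOT_DONE] * 2,
    "monitoring": [Status.AUTOMATED] + [Status.MANUAL] + [Status.NOT_DONE] * 5,
}

scores = section_scores(example)
final = ml_test_score(example)
print(scores)
print(final)
```

Taking the minimum rather than the total means a system cannot compensate for neglected monitoring with extra data tests, which is how the score points at where to improve next.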


What is Your ML Score? - Tania Allard

Developer Advocate at Microsoft

Using machine learning in real-world applications and production systems is complex. Testing, monitoring, and logging are key considerations for assessing the decay, current status, and production-readiness of machine learning systems. Where do you get started? Who is responsible for testing and monitoring? I’ll discuss the most frequent issues encountered in real-life ML applications and how you can make systems more robust. I’ll also provide a rubric with actionable examples to ensure quality and adequacy of a model in production.
