Explainable / Interpretable AI

YouTube ... Quora ...Google search ...Google News ...Bing News

- Artificial General Intelligence (AGI) to Singularity ... Curious Reasoning ... Emergence ... Moonshots ... Explainable AI ... Automated Learning

- Predictive Analytics ... Predictive Maintenance ... Forecasting ... Market Trading ... Sports Prediction ... Marketing ... Politics ... Excel

- Cybersecurity ... OSINT ... Frameworks ... References ... Offense ... NIST ... DHS ... Screening ... Law Enforcement ... Government ... Defense ... Lifecycle Integration ... Products ... Evaluating

- Risk, Compliance and Regulation ... Ethics ... Privacy ... Law ... AI Governance ... AI Verification and Validation

- Policy ... Policy vs Plan ... Constitutional AI ... Trust Region Policy Optimization (TRPO) ... Policy Gradient (PG) ... Proximal Policy Optimization (PPO)

- Strategy & Tactics ... Project Management ... Best Practices ... Checklists ... Project Check-in ... Evaluation

- Analytics ... Visualization ... Graphical Tools ... Diagrams & Business Analysis ... Requirements ... Loop ... Bayes ... Network Pattern

- Perspective ... Context ... In-Context Learning (ICL) ... Transfer Learning ... Out-of-Distribution (OOD) Generalization

- Causation vs. Correlation ... Autocorrelation ...Convolution vs. Cross-Correlation (Autocorrelation)

- Large Language Model (LLM) ... Multimodal ... Foundation Models (FM) ... Generative Pre-trained ... Transformer ... Attention ... GAN ... BERT

- RETRO | DeepMind ... see what the AI has learned by examining the database rather than by studying the Neural Network

- Creatives ... History of Artificial Intelligence (AI) ... Neural Network History ... Rewriting Past, Shape our Future ... Archaeology ... Paleontology

- Development ... Notebooks ... AI Pair Programming ... Codeless ... Hugging Face ... AIOps/MLOps ... AIaaS/MLaaS

- Python Libraries for Interpretable Machine Learning | Rebecca Vickery - Towards Data Science

- Tools:

- LIME (Local Interpretable Model-agnostic Explanations) explains the prediction of any classifier

- ELI5 debug machine learning classifiers and explain their predictions & inspect black-box models

- SHAP (SHapley Additive exPlanations) a game-theoretic approach to explaining the output of any machine learning model

- Yellowbrick visual diagnostic tools for model selection; its core interface, the Visualizer objects, are scikit-learn estimators

- MLxtend (machine learning extensions) a library of helper tools for day-to-day data science tasks, including plotting utilities

- Lucid Notebooks | GitHub ... a collection of infrastructure and tools for research in neural network interpretability.

- Singularity ... Sentience ... AGI ... Curious Reasoning ... Emergence ... Moonshots ... Explainable AI ... Automated Learning

- Explaining decisions made with AI | Information Commissioner's Office (ICO) and The Alan Turing Institute

- Explanations based on the Missing: Towards Contrastive Explanations with Pertinent Negatives - IBM

- Why a right to explanation of automated decision-making does not exist in the General Data Protection Regulation | Wachter, S., Mittelstadt, B., Floridi, L. - University of Oxford, 28 Dec 2016

- This is What Happens When Deep Learning Neural Networks Hallucinate | Kimberley Mok

- H2O Machine Learning Interpretability with H2O Driverless AI

- A New Approach to Understanding How Machines Think | John Pavlus

- DrWhy | GitHub collection of tools for Explainable AI (XAI)

- Mixed Formal Learning - A Path to Transparent Machine Learning | Sandra Carrico

- Take advantage of open source trusted AI packages in IBM Cloud Pak for Data | Deborah Schalm - DevOps.com

- Counterfactual Explanations with Theory-of-Mind for Enhancing Human Trust in Image Recognition Models | Arjun R. Akula, Keze Wang, Changsong Liu, Sari Saba-Sadiya, Hongjing Lu, Sinisa Todorovic, Joyce Chai, Song-Chun Zhu

- AI will soon become impossible for humans to comprehend – the story of neural networks tells us why | David Beer - The Conversation

- AI Foundational Research - Explainability | NIST ... Four Principles of Explainable Artificial Intelligence | P. J. Phillips, C. Hahn, P. Fontana, D. Broniatowski, and M. Przybocki - NIST

- Explanation: Systems deliver accompanying evidence or reason(s) for all outputs.

- Meaningful: Systems provide explanations that are understandable to individual users.

- Explanation Accuracy: The explanation correctly reflects the system’s process for generating the output.

- Knowledge Limits: The system only operates under conditions for which it was designed or when the system reaches a sufficient confidence in its output.

- A collection of recommendable papers and articles on Explainable AI (XAI) | Murat Durmus - LinkedIn
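The four NIST principles listed above can be made concrete with a small sketch. Everything here is a hypothetical illustration rather than anything NIST publishes: the `ExplainedOutput` type, the `predict_with_explanation` function, the linear scoring rule, the "approve"/"decline" labels, and the 0.75 confidence cut-off are all assumptions chosen for the example.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class ExplainedOutput:
    label: Optional[str]   # None when the system declines to answer
    confidence: float
    evidence: List[str]    # Explanation: every output carries its reasons
    within_limits: bool    # Knowledge Limits: was the system in scope?


def predict_with_explanation(features: Dict[str, float],
                             weights: Dict[str, float],
                             min_confidence: float = 0.75) -> ExplainedOutput:
    """Toy linear scorer (hypothetical) that explains itself or abstains."""
    # Knowledge Limits: refuse inputs the model was never designed for.
    unknown = sorted(set(features) - set(weights))
    if unknown:
        reasons = [f"unsupported input feature: {name}" for name in unknown]
        return ExplainedOutput(None, 0.0, reasons, False)

    # Explanation Accuracy: the evidence is the exact terms of the score,
    # so it reflects what the model actually computed.
    contributions = {name: weights[name] * value
                     for name, value in features.items()}
    score = sum(contributions.values())
    confidence = 1.0 / (1.0 + math.exp(-abs(score)))

    # Meaningful: report the largest contributions first, in plain words.
    ranked = sorted(contributions.items(), key=lambda item: -abs(item[1]))
    evidence = [f"{name} contributed {value:+.2f}" for name, value in ranked]

    # Knowledge Limits: abstain when confidence is insufficient.
    if confidence < min_confidence:
        return ExplainedOutput(None, confidence, evidence, False)

    label = "approve" if score > 0 else "decline"
    return ExplainedOutput(label, confidence, evidence, True)
```

Calling `predict_with_explanation({"income": 1.5, "debt": 0.5}, {"income": 2.0, "debt": -1.5})` returns a label together with the ranked contributions, while a borderline score or an unknown feature yields `label=None` and `within_limits=False` instead of a guess.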


Explainable Artificial Intelligence (XAI)

An AI system produces results together with an account of the path it took to derive the solution or prediction: transparency of interpretation, rationale, and justification. 'If you have a good causal model of the world you are dealing with, you can generalize even in unfamiliar situations. That’s crucial. We humans are able to project ourselves into situations that are very different from our day-to-day experience. Machines are not, because they don’t have these causal models. We can hand-craft them but that’s not enough. We need machines that can discover causal models. To some extent it’s never going to be perfect. We don’t have a perfect causal model of the reality, that’s why we make a lot of mistakes. But we are much better off at doing this than other animals.' | Yoshua Bengio

Progress made with XAI:

- Explainable AI Techniques: Off-the-shelf explainable AI techniques are now available for developers to incorporate into their modeling workflows. These techniques help to disclose a program's strengths and weaknesses, the specific criteria it uses to arrive at a decision, and why it makes a particular decision as opposed to the alternatives.

- Model Explainability: Explainability is essential in high-stakes domains such as healthcare, finance, the legal system, and other critical industrial sectors. Explainable AI (XAI) is a subfield of AI that aims to develop systems that can give humans clear and understandable explanations of their decision-making processes. The goal of XAI is to make AI more transparent, trustworthy, responsible, and ethical.

- Concept-Based Explanations: There has been progress in explaining deep neural networks in terms of human-understandable concepts. TCAV (Testing with Concept Activation Vectors), a technique developed by Google AI, quantifies how important a user-defined concept is to a network's prediction.

- Interpretable and Inclusive AI: There has been progress in building interpretable and inclusive AI systems from the ground up with tools designed to help detect and resolve bias, drift, and other gaps in data and models. AI Explanations in AutoML Tables, Vertex AI Predictions, and Notebooks provide data scientists with the insight needed to improve datasets or model architecture and debug model performance.
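As a rough illustration of the model-agnostic techniques described in the list above, the sketch below attributes a single prediction to its input features by replacing one feature at a time with a baseline value and recording how much the output changes. It is a simplified perturbation method, not LIME, SHAP, or TCAV; `occlusion_attribution`, `toy_model`, and the all-zero baseline are hypothetical names and choices made for this example.

```python
from typing import Callable, Dict

Features = Dict[str, float]


def occlusion_attribution(model: Callable[[Features], float],
                          instance: Features,
                          baseline: Features) -> Dict[str, float]:
    """Attribute one prediction by swapping each feature for its baseline.

    A large value means the prediction drops a lot when that feature is
    replaced, i.e. the model leaned on it for this particular instance.
    """
    full_output = model(instance)
    attributions = {}
    for name in instance:
        perturbed = dict(instance)          # copy so the input is untouched
        perturbed[name] = baseline[name]    # "remove" a single feature
        attributions[name] = full_output - model(perturbed)
    return attributions


def toy_model(x: Features) -> float:
    """Hypothetical black box with an interaction between b and c."""
    return 2.0 * x["a"] + x["b"] * x["c"]


instance = {"a": 1.0, "b": 2.0, "c": 3.0}
baseline = {"a": 0.0, "b": 0.0, "c": 0.0}
print(occlusion_attribution(toy_model, instance, baseline))
```

For this toy model the attributions for `b` and `c` each include the full interaction term, so the values do not sum to the prediction. Methods based on Shapley values are designed to distribute such interactions so that attributions do add up, which is one reason the choice of technique matters.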

Explainable Computer Vision with Grad-CAM

- FairyOnIce's Grad-CAM code | GitHub

- Grad-CAM implementation in Keras | Jacob Gildenblat - jacobgil/keras-grad-cam

- Grad-CAM Demo | modified by Siraj Raval

We propose a technique for producing "visual explanations" for decisions from a large class of CNN-based models, making them more transparent. Our approach, Gradient-weighted Class Activation Mapping (Grad-CAM), uses the gradients of any target concept, flowing into the final convolutional layer to produce a coarse localization map highlighting important regions in the image for predicting the concept. Grad-CAM is applicable to a wide variety of CNN model-families: (1) CNNs with fully-connected layers, (2) CNNs used for structured outputs, (3) CNNs used in tasks with multimodal inputs or reinforcement learning, without any architectural changes or re-training. We combine Grad-CAM with fine-grained visualizations to create a high-resolution class-discriminative visualization and apply it to off-the-shelf image classification, captioning, and visual question answering (VQA) models, including ResNet-based architectures. In the context of image classification models, our visualizations (a) lend insights into their failure modes, (b) are robust to adversarial images, (c) outperform previous methods on localization, (d) are more faithful to the underlying model and (e) help achieve generalization by identifying dataset bias. For captioning and VQA, we show that even non-attention based models can localize inputs. We devise a way to identify important neurons through Grad-CAM and combine it with neuron names to provide textual explanations for model decisions. Finally, we design and conduct human studies to measure if Grad-CAM helps users establish appropriate trust in predictions from models and show that Grad-CAM helps untrained users successfully discern a 'stronger' model from a 'weaker' one even when both make identical predictions. Grad-CAM: Visual Explanations from Deep Networks via Gradient-based Localization | R. Selvaraju, M. Cogswell, A. Das, R. Vedantam, D. Parikh, and D. Batra
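The core arithmetic behind the coarse localization map in the abstract above can be sketched in a few lines of plain Python. The sketch assumes the activations of the final convolutional layer and the gradients of the target class score with respect to them have already been obtained from a deep learning framework. Following the Grad-CAM paper, each channel is weighted by its spatially averaged gradient and the weighted sum is passed through a ReLU. The `grad_cam` function name and the nested-list representation are assumptions for illustration, and upsampling the map to the image size is omitted.

```python
from typing import List

Grid = List[List[float]]  # one channel: height x width


def grad_cam(activations: List[Grid], gradients: List[Grid]) -> Grid:
    """Combine per-channel activations into one coarse heatmap.

    activations[k][i][j]: feature map k of the last conv layer.
    gradients[k][i][j]:   d(class score) / d(activations[k][i][j]).
    """
    height, width = len(activations[0]), len(activations[0][0])

    # Channel weight = global average of that channel's gradients.
    alphas = [sum(sum(row) for row in grad) / (height * width)
              for grad in gradients]

    heatmap = []
    for i in range(height):
        row = []
        for j in range(width):
            weighted = sum(alpha * act[i][j]
                           for alpha, act in zip(alphas, activations))
            row.append(max(0.0, weighted))  # ReLU keeps positive evidence
        heatmap.append(row)
    return heatmap


# Two tiny 2x2 channels: the first supports the class, the second opposes it.
activations = [[[1.0, 0.0], [0.0, 2.0]],
               [[0.0, 3.0], [1.0, 0.0]]]
gradients = [[[1.0, 1.0], [1.0, 1.0]],
             [[-1.0, -1.0], [-1.0, -1.0]]]
print(grad_cam(activations, gradients))  # [[1.0, 0.0], [0.0, 2.0]]
```

Locations where the opposing channel dominates are clipped to zero, so the heatmap highlights only regions that push the score toward the target class.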

Building powerful Computer Vision-based apps without deep expertise has become possible for more people due to easily accessible tools like Python, Colab, Keras, PyTorch, and TensorFlow. But why does a computer classify an image the way that it does? This is a question that is critical when it comes to AI applied to diagnostics, driving, or any other form of critical decision making. In this episode, I'd like to raise awareness around one technique in particular that I found called "Grad-CAM" or Gradient-weighted Class Activation Mapping. It allows you to generate a heatmap that helps detail what your model thinks the most relevant features in an image are that cause it to make its predictions. I'll be explaining the math behind it and demoing a code sample by fairyonice to help you understand it. I hope that after this video, you'll be able to implement it in your own project. Enjoy! | Siraj Raval

Interpretable


Please Stop Doing "Explainable" ML: There has been an increasing trend in healthcare and criminal justice to leverage machine learning (ML) for high-stakes prediction applications that deeply impact human lives. Many of the ML models are black boxes that do not explain their predictions in a way that humans can understand. The lack of transparency and accountability of predictive models can have (and has already had) severe consequences; there have been cases of people incorrectly denied parole, poor bail decisions leading to the release of dangerous criminals, ML-based pollution models stating that highly polluted air was safe to breathe, and generally poor use of limited valuable resources in criminal justice, medicine, energy reliability, finance, and in other domains. Rather than trying to create models that are inherently interpretable, there has been a recent explosion of work on “Explainable ML,” where a second (posthoc) model is created to explain the first black box model. This is problematic. Explanations are often not reliable, and can be misleading, as we discuss below. If we instead use models that are inherently interpretable, they provide their own explanations, which are faithful to what the model actually computes. Stop Explaining Black Box Machine Learning Models for High Stakes Decisions and Use Interpretable Models Instead | Cynthia Rudin - Duke University
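To make the contrast with post-hoc explanation concrete, here is a minimal sketch of an inherently interpretable model: a small point-based scoring system whose rules are the model itself. The rules, point values, threshold, and the loan-review setting are hypothetical, invented only for this illustration, and are not taken from Rudin's paper.

```python
from typing import Callable, Dict, List, Tuple

Record = Dict[str, float]

# Each rule: (human-readable description, test, points). Hypothetical values.
RULES: List[Tuple[str, Callable[[Record], bool], int]] = [
    ("two or more late payments", lambda r: r["late_payments"] >= 2, 3),
    ("debt ratio above 0.4", lambda r: r["debt_ratio"] > 0.4, 2),
    ("five or more years at current job", lambda r: r["years_at_job"] >= 5, -1),
]
REVIEW_THRESHOLD = 3  # total points at or above this trigger manual review


def score(record: Record) -> Tuple[int, bool, List[Tuple[str, int]]]:
    """Return total points, the decision, and the rules that fired.

    The list of fired rules is not an approximation of the model:
    it is exactly the computation that produced the decision.
    """
    fired = [(description, points)
             for description, test, points in RULES
             if test(record)]
    total = sum(points for _, points in fired)
    return total, total >= REVIEW_THRESHOLD, fired


applicant = {"late_payments": 3, "debt_ratio": 0.5, "years_at_job": 6}
total, needs_review, reasons = score(applicant)
print(total, needs_review)
for description, points in reasons:
    print(f"{points:+d}  {description}")
```

Because the explanation and the computation are the same object, there is no second model whose faithfulness has to be checked, which is the property the passage above argues for in high-stakes settings.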

Accuracy & Interpretability Trade-Off


- Model Prediction Accuracy Versus Interpretation in Machine Learning | Jason Brownlee - Machine Learning Mastery

- The balance: Accuracy vs. Interpretability | Sharayu Rane - Towards Data Science


Weirder Than You Thought

- Tracing the thoughts of a large language model | Anthropic

- On the Biology of a Large Language Model | Transformer Circuits Thread

- Anthropic has developed an AI 'brain scanner' to understand how LLMs work and it turns out the reason why chatbots are terrible at simple math and hallucinate is weirder than you thought | Jeremy Laird - PC GAMER

With two new papers, Anthropic's researchers have taken significant steps towards understanding the circuits that underlie an AI model’s thoughts. In one example from the paper, we find evidence that Claude will plan what it will say many words ahead, and write to get to that destination. We show this in the realm of poetry, where it thinks of possible rhyming words in advance and writes each line to get there. This is powerful evidence that, even though models are trained to output one word at a time, they may think on much longer horizons to do so.

Trust


- Cybersecurity Frameworks, Architectures & Roadmaps

- Fairlearn ...a toolkit to assess and improve the fairness of machine learning models. Use common fairness metrics and an interactive dashboard to assess which groups of people may be negatively impacted.

- In AI We Trust Incrementally: a Multi-layer Model of Trust to Analyze Human-Artificial Intelligence Interactions | A. Ferrario, M. Loi and E. Viganò - DOI.org

- Trusted AI | Capgemini

- BAE Systems delivers trusted computing MindfuL software to help humans believe artificial intelligence (AI) - Military & Aerospace Electronics

- How to make AI trustworthy | University of Southern California - ScienceDaily

- Building trust in AI is key to autonomous drones, flying cars | David Thornton - Federal News Network

- Governments should close the AI trust gap with businesses | Consultancy.asia

- Trust in Robots: Challenges and Opportunities | Bing Cai Kok and Harold Soh - SpringerLink

- We’re not ready for AI, says the winner of a new $1m AI prize | Will Douglas Heaven - MIT Technology Review ...Regina Barzilay, the first winner of the Squirrel AI Award, on why the pandemic should be a wake-up call.
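The kind of group fairness metric mentioned in the Fairlearn entry above can be illustrated without any library. The sketch below computes per-group selection rates and their largest gap, often called the demographic parity difference. It is plain Python and does not use Fairlearn's API; the function names, the toy predictions, and the group labels "a" and "b" are assumptions for this example.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence


def selection_rates(predictions: Sequence[int],
                    groups: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Fraction of positive (1) predictions within each group."""
    counts: Dict[Hashable, int] = defaultdict(int)
    positives: Dict[Hashable, int] = defaultdict(int)
    for prediction, group in zip(predictions, groups):
        counts[group] += 1
        positives[group] += prediction
    return {group: positives[group] / counts[group] for group in counts}


def demographic_parity_difference(predictions: Sequence[int],
                                  groups: Sequence[Hashable]) -> float:
    """Gap between the highest and lowest group selection rate.

    0.0 means every group receives positive predictions at the same rate.
    """
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(selection_rates(predictions, groups))                # {'a': 0.75, 'b': 0.25}
print(demographic_parity_difference(predictions, groups))  # 0.5
```

A gap this large would flag group "b" as potentially disadvantaged and worth a closer look. Whether the gap is acceptable depends on the application and on which fairness criterion fits it.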

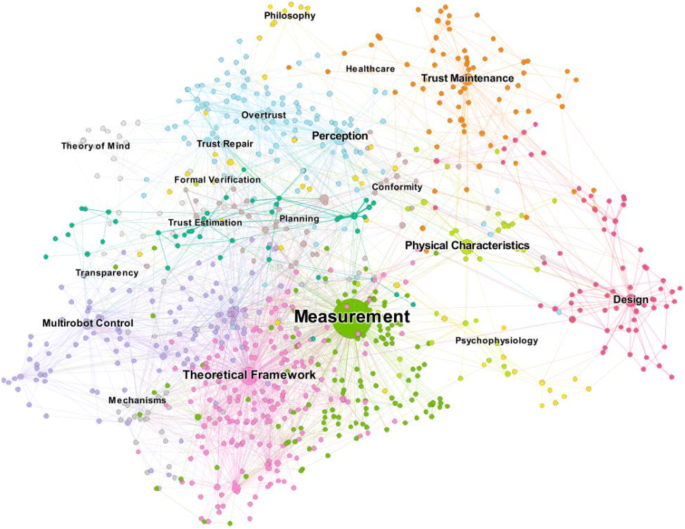

Measurement and Transparency are key to trusted AI


Domains

Government

AI AND TRUSTWORTHINESS -- Increasing trust in AI technologies is a key element in accelerating their adoption for economic growth and future innovations that can benefit society. Today, the ability to understand and analyze the decisions of AI systems and measure their trustworthiness is limited. Among the characteristics that relate to trustworthy AI technologies are accuracy, reliability, resiliency, objectivity, security, explainability, safety, and accountability. Ideally, these aspects of AI should be considered early in the design process and tested during the development and use of AI technologies. AI standards and related tools, along with AI risk management strategies, can help to address this limitation and spur innovation. ... It is important for those participating in AI standards development to be aware of, and to act consistently with, U.S. government policies and principles, including those that address societal and ethical issues, governance, and privacy. While there is broad agreement that these issues must factor into AI standards, it is not clear how that should be done and whether there is yet sufficient scientific and technical basis to develop those standards provisions. Plan Outlines Priorities for Federal Agency Engagement in AI Standards Development | NIST

Healthcare

Interpretable Machine Learning for Healthcare