Ethics

- Risk, Compliance and Regulation ... Ethics ... Privacy ... Law ... AI Governance ... AI Verification and Validation

- Cybersecurity ... OSINT ... Frameworks ... References ... Offense ... NIST ... DHS ... Screening ... Law Enforcement ... Government ... Defense ... Lifecycle Integration ... Products ... Evaluating

- Policy ... Policy vs Plan ... Constitutional AI ... Trust Region Policy Optimization (TRPO) ... Policy Gradient (PG) ... Proximal Policy Optimization (PPO)

- Artificial General Intelligence (AGI) to Singularity ... Curious Reasoning ... Emergence ... Moonshots ... Explainable AI ... Automated Learning

- Other Challenges in Artificial Intelligence

- Bias and Variances

- U.S. 10 Principles - White House Proposes 'Light-Touch Regulatory Approach' for Artificial Intelligence | Brandi Vincent - Nextgov

- Beijing publishes AI ethical standards, calls for int'l cooperation | Xinhua

- EU General Data Protection Regulations GDPR.org ...GDPR

- Responsible AI Champions Pilot | Department of Defense Joint Artificial Intelligence Center (JAIC) ...DoD AI Principles ...Themes ...Tactics

- Implementing Responsible Artificial Intelligence in the Department of Defense May 26, 2021

- Partnership on AI brings together diverse, global voices to realize the promise of artificial intelligence

- Amazon joins Microsoft in calling for regulation of facial recognition tech | Saqib Shah - engadget

- The Internet needs new rules. Let’s start in these four areas. | Mark Zuckerberg

- How Big Tech funds the debate on AI ethics | Oscar Williams - NewStatesman and NS Tech

- Europe is making AI rules now to avoid a new tech crisis | Ivana Kottasová - CNN Business

- OECD members, including U.S., back guiding principles to make AI safer | Leigh Thomas - Reuters

- 3 Practical Solutions to Offset Automation’s Impact on Work | Moran Cerf, Ryan Burke and Scott Payne - Singularity Hub

- EU backs AI regulation while China and US favour technology | Siddharth Venkataramakrishnan - The Financial Times Limited

- Could tough new rules to regulate big tech backfire? | Harry de Quetteville & Matthew Field - The Telegraph

- Don’t let industry write the rules for AI | Yochai Benkler - Nature

- The Algorithmic Accountability Act of 2019: Taking the Right Steps Toward AI Success | Colin Priest - DataRobot

Many efforts are underway to address the ethical issues raised by artificial intelligence. Some focus on developing ethical guidelines for the development and use of AI; others focus on technical solutions that mitigate its risks. Guidelines and technical solutions are only part of the work: it is equally important to discuss the potential risks and benefits of AI openly and transparently, and to involve stakeholders from all sectors of society in developing AI technologies.

Values, Rights, & Religion

Montreal Declaration for Responsible AI

- Montreal AI Ethics Institute creating tangible and applied technical and policy research in the ethical, safe, and inclusive development of AI.

- Montreal Declaration for Responsible AI

One effort to develop ethical guidelines for AI is the Montreal Declaration for Responsible AI, which a group of experts from around the world developed in 2018. The declaration calls for AI that is beneficial to humanity and that respects human rights and dignity.

Partnership on AI

The Partnership on AI (PAI) is a non-profit coalition committed to the responsible use of artificial intelligence. It researches best practices for AI systems and works to educate the public about AI. It is also among the efforts underway to develop technical solutions that mitigate the risks of AI: the partnership has developed a set of AI principles and is working on projects that address issues such as bias in AI systems and the safety of autonomous vehicles.

The partnership was publicly announced on September 28, 2016. Its founding members are Amazon, Facebook, Google, DeepMind, Microsoft, and IBM, with Eric Horvitz of Microsoft Research and Mustafa Suleyman of DeepMind serving as interim co-chairs. Apple joined the consortium as a founding member in January 2017, and Tom Gruber, Apple's head of advanced development for Siri, joined the board that same month. In October 2017, Terah Lyons joined as the organization's founding executive director. By 2019, more than 100 partners from academia, civil society, industry, and nonprofits were member organizations.

The PAI's mission is to promote the beneficial use of AI through research, education, and public engagement. The PAI works to ensure that AI is developed and used in a way that is safe, ethical, and beneficial to society.

The PAI's work is guided by a set of AI principles, which were developed by the PAI's members and endorsed by the PAI's board of directors. The principles are:

- AI should be developed and used for beneficial purposes.

- AI should be used in a way that respects human rights and dignity.

- AI should be developed and used in a way that is safe and secure.

- AI should be developed and used in a way that is fair and unbiased.

- AI should be developed and used in a way that is transparent and accountable.

- AI should be developed and used in a way that is understandable and interpretable.

- AI should be developed and used in a way that is aligned with societal values.

The PAI's work is divided into four areas:

- Research: The PAI supports research on the societal and ethical implications of AI.

- Education: The PAI provides educational resources on AI to the public.

- Public engagement: The PAI engages with the public about AI through events, publications, and other activities.

- Policy: The PAI works to develop and advance policies that support the beneficial use of AI.

The PAI is a valuable resource for anyone who is interested in the responsible use of artificial intelligence. The PAI's work is helping to ensure that AI is developed and used in a way that is safe, ethical, and beneficial to society.

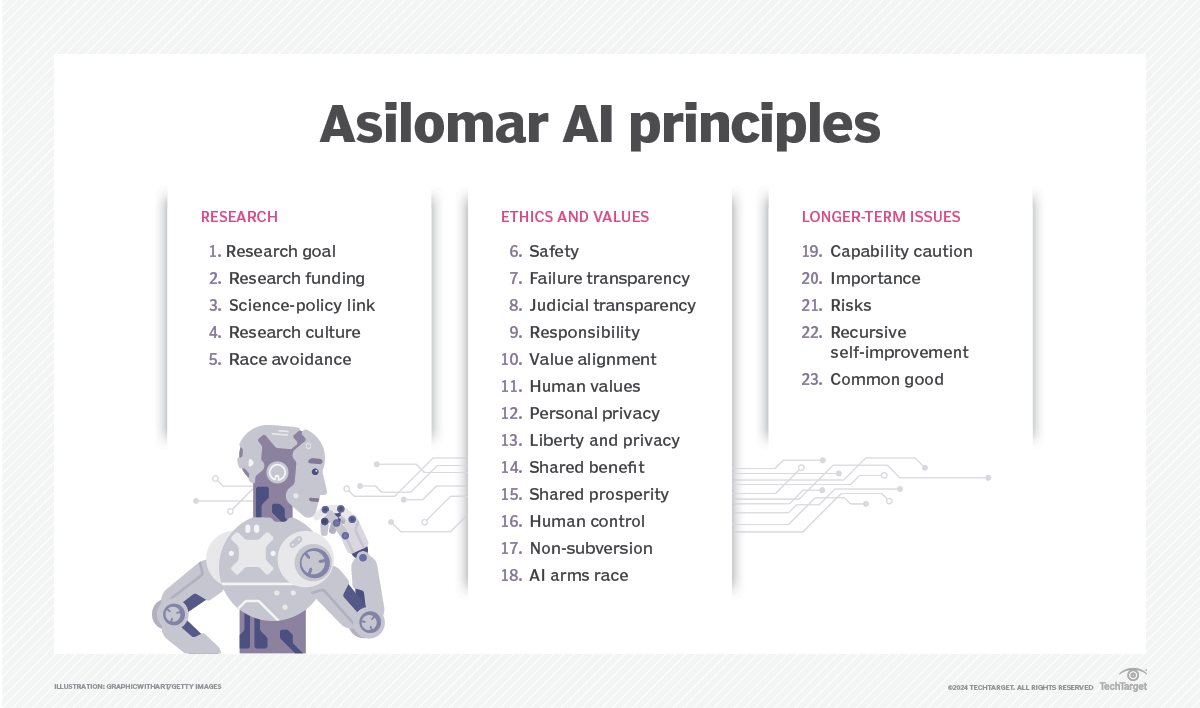

Asilomar AI Principles

- AI Principles | Future of Life Institute

- Asilomar AI Principles | Alexander Gillis - WhatIs.com ... Asilomar AI Principles are 23 guidelines for the research and development of artificial intelligence (AI). The Asilomar Principles outline developmental issues, ethics and guidelines for the development of AI, with the goal of guiding the development of beneficial AI. The tenets were created at the Asilomar Conference on Beneficial AI in 2017 in Pacific Grove, Calif. The conference was organized by the Future of Life Institute.

One of the best-known efforts to develop ethical guidelines for AI is the Asilomar AI Principles. These principles were developed by a group of experts in AI, ethics, and law in 2017. They outline a set of values that should guide the development and use of AI, including safety, transparency, accountability, and fairness.

Stupidity

Dietrich Bonhoeffer, a German theologian and anti-Nazi dissident, proposed a cautionary theory about stupidity in his letters and writings, particularly those from his imprisonment by the Nazis during World War II. He wrote about how a highly educated society could fall prey to horrific ideas, and concluded that the root cause wasn't malice but a specific type of "stupidity." His "Theory of Stupidity" treats stupidity as a societal and moral issue, arguing that it can be more dangerous than outright evil.

Bonhoeffer didn't define this stupidity as a low IQ. Instead, he saw it as a sociological and moral surrender. Bonhoeffer observed that when confronted with a massive external power, people often lose their inner independence. They consciously or unconsciously give up their autonomous position. Instead of thinking critically for themselves, they become vessels for slogans and catchwords. They stop engaging with reality and simply adopt the stance of the dominant power.

Stupidity is a more dangerous enemy of the good than malice - Dietrich Bonhoeffer

AI as the External Power

In modern technology ethics, scholars apply Bonhoeffer's theory directly to our relationship with artificial intelligence. Today, AI systems, algorithms, and automated platforms act as that overwhelming external power.

When we use AI to write our emails, dictate our schedules, or make business decisions, it is incredibly easy to surrender our autonomous position. Thinking critically, evaluating complex data, and making moral judgments require intense effort. It is much easier to let a machine do the heavy lifting and accept the output as fact. By defaulting to what the algorithm suggests, humans risk turning off their critical faculties. We stop asking why a decision was made and simply accept the machine's verdict.

The Real Ethical Danger

The immediate ethical danger of AI isn't that the machines will suddenly become evil. The danger is that humans will become "stupid" in the Bonhoeffer sense. If we give up our autonomy to algorithms, we make ourselves vulnerable. We might blindly follow biased recommendations, spread generated misinformation, or enforce unfair policies simply because the computer told us to do so. When humans lose their capacity for independent moral reasoning, they can participate in harmful systems without ever recognizing the harm they are causing.

Below is an overview of Bonhoeffer's perspective:

- Stupidity as a Moral Weakness: Bonhoeffer viewed stupidity not merely as a lack of intelligence but as a moral failing—a lack of critical reflection, self-awareness, and responsibility. Stupidity, in this sense, is a choice to relinquish independent thought and judgment. He linked it to a failure to exercise freedom responsibly, often driven by conformity, fear, or an uncritical acceptance of authority.

- The Danger of Stupidity: Bonhoeffer believed stupidity is more dangerous than malice because it is impervious to reason. Unlike evil, which can be confronted with moral arguments or force, stupidity resists logic and appeals to rationality. Stupid individuals or groups can unknowingly perpetuate harm, acting as tools for those with malicious intent.

- Collective Phenomenon: Stupidity, Bonhoeffer argued, often manifests in groups or societies under oppressive systems. People tend to abandon critical thinking and align with the crowd to avoid standing out or facing repercussions. He observed that totalitarian regimes, like the Nazis, exploit and encourage collective stupidity by demanding blind loyalty and discouraging dissent.

- Loss of Autonomy: Stupid individuals, according to Bonhoeffer, stop thinking for themselves and become passive. Their moral and intellectual faculties are outsourced to a leader or ideology, making them manipulable.

- Hope Through Enlightenment: Bonhoeffer maintained that stupidity can be overcome, not through confrontation or ridicule, but through education and fostering critical thinking. He called for the cultivation of wisdom and courage in individuals, enabling them to resist manipulation and embrace independent thought.

Modern Implications: Bonhoeffer's insights remain relevant, especially in discussions about the dangers of groupthink, misinformation, and the erosion of critical thinking in political and social contexts. They caution societies to value wisdom, encourage dialogue, and remain vigilant against the allure of simplistic or authoritarian solutions to complex problems.

Cipolla's Five Laws of Human Stupidity: Carlo Cipolla's framework is a humorous yet insightful way of understanding human behavior and its consequences.

- Always and inevitably, everyone underestimates the number of stupid individuals in circulation. This law asserts that stupidity is far more prevalent than we assume. Even in groups of educated or seemingly competent individuals, stupidity can appear unexpectedly.

- The probability that a person is stupid is independent of any other characteristic of that person. Stupidity does not discriminate based on education, social status, profession, or any other factor. It is a universal trait found in all demographics.

- A stupid person is someone who causes harm to another person or group while deriving no personal gain, and possibly even incurring self-harm. This is the "Golden Law of Stupidity." It highlights the irrational and self-destructive nature of stupidity, as it leads to harm without logical benefit to the perpetrator.

- Non-stupid people always underestimate the damaging power of stupid individuals. Non-stupid individuals often fail to recognize the potential harm caused by stupidity. They either underestimate its impact or assume it can be controlled.

- A stupid person is the most dangerous type of person. Stupid people are more dangerous than malicious individuals because their actions are unpredictable and irrational. A malicious person may act out of calculated self-interest, but a stupid person's behavior harms everyone, including themselves.

How Can AI Help?

- Perspective ... Context ... In-Context Learning (ICL) ... Transfer Learning ... Out-of-Distribution (OOD) Generalization

AI can assist in understanding and disseminating the insights from Dietrich Bonhoeffer's "Theory of Stupidity" and Carlo Cipolla's "Five Laws of Human Stupidity" in several ways:

- Summarization and Simplification: AI can summarize and distill complex theories into accessible, easy-to-understand formats for various audiences. Examples include concise bullet points, infographics, or video scripts for educational purposes.

- Comparative Analysis: AI can draw connections between Bonhoeffer's and Cipolla's theories, highlighting shared themes and unique aspects. It can provide a comparative framework to help readers better understand their relevance to historical and contemporary issues.

- Educational Tools: AI can create quizzes, discussion prompts, and lesson plans to teach these theories in classrooms or workshops. Interactive simulations can illustrate concepts, such as the dangers of groupthink or the societal impact of stupidity.

- Content Creation: AI can generate articles, blog posts, or social media content to raise awareness about these theories. It can tailor the content to specific audiences, such as students, professionals, or policymakers.

- Detection of Modern Implications: AI can analyze current events, media, and social trends to identify instances where Bonhoeffer's and Cipolla's insights are relevant. It can help flag situations where collective stupidity or groupthink might be at play, encouraging critical discussions.

- Facilitation of Dialogue: AI-powered chatbots or forums can facilitate discussions, offering counterpoints or probing questions to encourage deeper reflection on these theories. This could be useful for academic settings or public forums.

- Misinformation and Stupidity Mitigation: AI can assist in combating misinformation by fact-checking and promoting critical thinking, addressing Bonhoeffer’s concerns about the erosion of reason. Tools like language analysis and sentiment tracking can help identify patterns of irrational or harmful behavior in digital spaces.

- Personal Development Tools: AI can create self-assessment tools to help individuals identify cognitive biases or tendencies to conform uncritically.

Debating

- Law

- Agents ... Robotic Process Automation ... Assistants ... Personal Companions ... Productivity ... Email ... Negotiation ... LangChain

- Project Debater | IBM

- Hanson Robotics
