Difference between revisions of "Artificial General Intelligence (AGI) to Singularity"

m (→Superintelligent AGI) |

m (→Artificial Super Intelligence(ASI)) |

||

| Line 222: | Line 222: | ||

<youtube>7ROelYvo8f0</youtube> | <youtube>7ROelYvo8f0</youtube> | ||

| − | = <span id="Artificial Super Intelligence(ASI)"></span>Artificial Super Intelligence(ASI) = | + | = <span id="Artificial Super Intelligence (ASI)"></span>Artificial Super Intelligence (ASI) = |

Artificial Super Intelligence(ASI) is a hypothetical type of artificial general intelligence (AGI) that would surpass human intelligence in all respects. This means that a superintelligent AGI would be able to learn, reason, and solve problems at a level far beyond what any human is capable of. Superintelligent AGI is a topic of much speculation and debate among scientists and philosophers. Some experts believe that Superintelligent AGI is possible, and that it could be developed in the near future. Others believe that Superintelligent AGI is impossible, or that it would pose an existential threat to humanity. | Artificial Super Intelligence(ASI) is a hypothetical type of artificial general intelligence (AGI) that would surpass human intelligence in all respects. This means that a superintelligent AGI would be able to learn, reason, and solve problems at a level far beyond what any human is capable of. Superintelligent AGI is a topic of much speculation and debate among scientists and philosophers. Some experts believe that Superintelligent AGI is possible, and that it could be developed in the near future. Others believe that Superintelligent AGI is impossible, or that it would pose an existential threat to humanity. | ||

Revision as of 05:48, 10 September 2023

YouTube ... Quora ...Google search ...Google News ...Bing News

- Artificial General Intelligence (AGI) to Singularity ... Curious Reasoning ... Emergence ... Moonshots ... Explainable AI ... Automated Learning

- Feedback Loop - Creating Consciousness

- Immersive Reality ... Metaverse ... Digital Twin ... Internet of Things (IoT) ... Transhumanism

- Stochastic Parrot

- Large Language Model (LLM) ... Multimodal ... Foundation Models (FM) ... Generative Pre-trained ... Transformer ... GPT-4 ... GPT-5 ... Attention ... GAN ... BERT

- In-Context Learning (ICL) ... LLMs understand to encode learning algorithms implicitly during their training processes ... Context

- Risk, Compliance and Regulation ... Ethics ... Privacy ... Law ... AI Governance ... AI Verification and Validation

- History of Artificial Intelligence (AI) ... Neural Network History ... Creatives

- How do I leverage Artificial Intelligence (AI)? ... Reading/Glossary ... Courses/Certs ... Education ... Help Wanted

- Brain Interface using AI and Nanobots

- Exponential Progression

- Artificial Intelligence (AI) ... Generative AI ... Machine Learning (ML) ... Deep Learning ... Neural Network ... Reinforcement ... Learning Techniques

- Conversational AI ... ChatGPT | OpenAI ... Bing | Microsoft ... Bard | Google ... Claude | Anthropic ... Perplexity ... You ... Ernie | Baidu

- Data Science ... Governance ... Preprocessing ... Exploration ... Interoperability ... Master Data Management (MDM) ... Bias and Variances ... Benchmarks ... Datasets

Contents

- 1 Getting There

- 2 Agency

- 3 Artificial General Intelligence (AGI)

- 4 Artificial Super Intelligence (ASI)

- 5 Artificial Consciousness

- 6 Sentience

- 7 Singularity

- 8 Discussions

Getting There

Complex concepts adopted by the AI community that are still being debated by experts.

- Anthropomorphism: is the attribution of human characteristics to non-human things.

- Agency: is the ability of an AI system to act independently and make its own decisions. This means that the AI system is not simply following instructions from a human, but is able to think for itself and take actions based on its own goals and objectives. It is generally agreed that Agency is a necessary but not sufficient condition for Sentience or Artificial Consciousness. In other words, an AI system can have Agency without being Sentient or Conscious, but it cannot be Sentient or Conscious without having Agency.

- Artificial General Intelligence (AGI): is a hypothetical type of AI that would have the ability to learn and perform any intellectual task that a human being can. This is a very challenging goal, and it is not clear if or when AGI will be achieved.

- Artificial Super Intelligence (ASI): Superintelligence is a hypothetical type of AI that would be significantly more intelligent than any human being. This is an even more challenging goal than AGI

- Sentience: is the ability of an AI system to feel and experience the world in a way that is similar to how humans do. This means that the AI system would be able to feel emotions, such as pain, pleasure, and sadness. It would also be able to experience the world through its senses, such as sight, smell, and touch. It is possible for an AI system to be Sentient without being Conscious.

- Artificial Consciousness: is the ability of an AI system to be aware of itself and its surroundings. This means that the AI system would be able to understand that it is a separate entity from its environment. It would also be able to understand its own thoughts and feelings. It is possible for an AI system to be Conscious without being Sentient. This is a concept that is even more complex than sentience, and it is not clear if or when it will be achieved.

- Singularity: is a hypothetical moment in time when artificial intelligence will surpass human intelligence and capabilities. This could lead to a rapid and uncontrollable advancement of AI, with potentially profound implications for the future of humanity. The singularity is a controversial topic, and there is no scientific consensus on whether or not it will actually happen. However, it is a topic that is worth considering as we continue to develop AI technology.

Stages of AI

- The 7 Stages of the Future Evolution of Artificial Intelligence | Recode Minds Pvt Ltd

- Future of AI – 7 Stages of Evolution You Need to Know About | Alan Jackson - TNT

Artificial Intelligence (AI) is revolutionizing our daily lives and industries across the globe. Understanding the 7 stages of AI, from rudimentary algorithms to advanced machine learning and beyond, is vital to fully grasp this complex field.

- Stage 1- Rule Bases System In this stage, AI surrounds us in everything from Robot Process Automation used in business to autopilots used in aircraft.

- Stage 2- Context-awareness and Retention The machines based on algorithms are trained on the best human knowledge and experience with knowledge based on specific domains. The existing knowledge base can be updated once new queries and solutions arise. The most common application to be Robo-advisors or chatbots which can be used in a forward customer inquiry that can help human in everything.

- Stage 3- Domain-specific aptitude In this stage, the advanced systems can develop mastery in particular domains. Due to the capability to store and process a wide volume of information to make decisions the capabilities of these machines can be more than humans. Cancer diagnosis is one of the applications and Google Deepmind's system that defeated 18 times world go champion Lee Sadol proved the domain-specific expertise.

- Stage 4- Reasoning systems This machine algorithm has a concept of intellect, intentions, knowledge, and their own logic. They have the capacity to reason, interact and, deal with other machines and humans too. Such algorithms will be in the commercial arena in some years.

- Stage 5- AGI Self Aware Systems are the main objective of many scientists working in the field of AI- to develop a machine with intelligence like humans. The artificial general intelligence or self-aware systems are not in application to date but not many years left to discover this avenue. This is the stage represented in many sci-fiction movies that machines are leading humans in intelligence.

- Stage 6- Artificial Super Intelligence(ASI) This stage is one notch of the Artificial General Intelligence, the algorithms will be capable of defeating smartest of the humans in all the domains. This stage can be the real solution to the problems that are still complex for the human mind like poverty, hunger, and climate change. This will be the stage where machines made by humans will outsmart humans too.

- Stage 7- Singularity and Transcendence The development path led by ASI will lead us to the point in time where the capabilities of humans will see the tremendous extension. With the help of this ASI, technology humans would be able to connect the minds of each other and be like the human internet. The technology would be much like an imaginary life where ideas, and thoughts could be shared by just one flick of mind. Humans would be capable of connecting with other forms of life like animals, plants, and other natural activities.

Steps to Artificial General Intelligence (AGI)

- Update hardware with large memories and specific AI functionality

- Develop AI can understand and communicate with humans in a more natural way

- Put on the Internet

- Teach the AI about human behavior

- Teach AI to code software

- Teach AI how to build API

- Developing AI that can learn and improve on their own

- Building robots with enhanced physical capabilities and sensory systems

- Develop AI to perform a wide range of cognitive tasks at a human level or beyond

- Implement quantum-AI computing systems that can solve complex problems quickly

- Research the nature of consciousness and how it could be replicated in AI

Autonomy Matrix (Levels)

- computer offers no assistance, humans make all decisions and take all actions

- computer offers a complete set of alternatives

- computer narrows the selection down to a few choices

- computer suggests one action

- computer executes that action if the human operator approves

- computer allows the human a restricted time to veto before automatic execution

- computer executes automatically then informs the human

- computer informs human after execution only if asked

- computer informs human after execution only if it decides to

- computer decides everything and acts fully autonomously

Predictions

Future Scenarios

- World Building Contest | Future of Life Institute (FLI) ... explore the worlds and hear from the creators of diverse and thought provoking imagined futures

- To Light | Mako Yass ... What if AI-enabled life extension allowed some people to live forever?

- Peace Through Prophecy | J. Wagner, D. Gurvich, & H. Oatley ... What if experimenting with governance led us to collectively make wiser decisions?

- Core Central | J. Burden, L. Mani, J. Bland, B. Cibralic, H. Shevlin, C. Rojas, & C. Richards ... What if humanity’s response to global challenges led to a more centralized world?

- Digital Nations | C. Whitaker, D. Findley, & T. Kamande ... What if we developed digital nations untethered to geography?

- AI for the People | C. Bornfree, M. White, & J. R. Harris ... What would happen to human nature if technology allowed us to communicate with animals?

- Hall of Mirrors | M. Vassar, M. Franklin, & B. Hidysmith ... What if narrow AI fractured our shared reality?

- Crossing Points | N. Ogston, V. Hanschke, T. Namgyal, E. Czech, & S. Lechelt ... What if we designed and built AI in an inclusive way?

- Computing Counsel | Mark L., Patrick B., & Natalia C. ... What if AI advisors were present in every facet of society to help us make better decisions?

- Future of Life Institute (FLI) ... steering transformative technology towards benefitting life and away from extreme large-scale risks.

We believe that the way powerful technology is developed and used will be the most important factor in determining the prospects for the future of life. This is why we have made it our mission to ensure that technology continues to improve those prospects.

Paperclip Maximizer

- The Paperclip Maximizer | Terbium

- Paperclip Maximizer | Know Your Meme

- The Paperclip Maximiser | AICorespot

- Frankenstein’s paperclips | The Economist

- Instrumental convergence | Wikipedia

The paperclip maximizer is a thought experiment illustrating the existential risk that an artificial intelligence may pose to human beings when it is programmed to pursue even seemingly harmless goals, and the necessity of incorporating machine ethics into artificial intelligence design.

The scenario describes an advanced artificial intelligence tasked with manufacturing paperclips. If such a machine were not programmed to value human life, then given enough power over its environment, it would try to turn all matter in the universe, including human beings, into either paperclips or machines which manufacture paperclips.

The paperclip maximizer shows how an intelligent agent with unbounded but apparently harmless goals can act in surprisingly harmful ways. It also shows how instrumental goals —goals which are made in pursuit of some particular end, but are not the end goals themselves—can converge for different agents, even if their ultimate goals are quite different. For example, an artificial intelligence designed to solve a difficult mathematics problem like the Riemann hypothesis could also attempt to take over all of Earth's resources to increase its computational power.

The paperclip maximizer is a hypothetical example, but it serves as a warning for the potential dangers of creating artificial intelligence without ensuring that it aligns with human values and interests. It also raises questions about the ethics and morality of creating and controlling intelligent beings.

When will AGI Arrive?

- When will AGI arrive? Here’s what our tech lords predict | Thomas Macaulay - TNW

- Yoshua Bengio; University of Montreal Professor ... I don’t think it’s plausible that we could really know when

- Demis Hassabis; Deep Mind CEO ... could be just a few years, maybe within a decade away

- Geoffrey Hinton; 'Godfather of AI' ... five to 20 years but without much confidence

- Ben Goertzel; SingularityNET CEO & chief scientist at Hanson Robotics ... December 8, 2026 (his 60th birthday)

- Ray Kurzweil; author, inventor, executive, and futurist ... In 2017, 'By 2029'

- Jürgen Schmidhuber; NNAISENSE co-founder & IDSIA Director ,,, 2048

- Herbert A. Simon; AI pioneer ... In 1965, '1985'

Agency

The term "agency" in the context of artificial intelligence (AI) refers to the ability of an AI system to act independently and make its own decisions. This is a complex concept, and there is no single definition of agency that is universally agreed upon. However, some of the key features of agency in AI systems include:

- The ability to perceive and interact with the world around it.

- The ability to learn and adapt to new situations.

- The ability to make choices and take actions based on its own goals and objectives.

- The ability to reason and solve problems.

- The ability to communicate and interact with other agents.

Whether or not an AI system has agency is a matter of debate. Some experts believe that AI systems will never be truly autonomous, while others believe that it is only a matter of time before AI systems achieve true agency. There are a number of ethical implications associated with the development of AI systems with agency. For example, if an AI system is able to make its own decisions, who is responsible for those decisions? And if an AI system is able to harm humans, who is liable? These are complex questions that will need to be addressed as AI systems continue to develop.

As AI continues to develop, it is likely that we will see even more sophisticated and capable systems with agency. Here are some examples of AI systems that have been designed to have agency:

- Self-driving cars: These cars are able to perceive the world around them and make decisions about how to navigate safely.

- Virtual assistants: These assistants are able to understand and respond to human commands.

- Chatbots: These bots are able to hold conversations with humans and provide information or assistance.

- Robotic surgery systems: These systems are able to perform surgery with a high degree of precision and accuracy.

Beyond Agency

Artificial Consciousness and Sentience are related but not identical concepts. Some researchers argue that consciousness and Sentience are inseparable, while others suggest that they can be distinguished or even dissociated. For example, some AI systems may have Consciousness without Sentience, such as a self-aware chatbot that does not feel pain or pleasure. Conversely, some AI systems may have Sentience without Consciousness, such as a robot that can react to stimuli but does not have any inner experience or self-awareness. As there are many hypothesized types of consciousness, there are many potential implementations of Artificial Consciousness. In the philosophical literature, perhaps the most common taxonomy of Consciousness is into "access" and "phenomenal" variants. Access Consciousness concerns those aspects of experience that can be apprehended, while phenomenal Consciousness concerns those aspects of experience that seemingly cannot be apprehended, instead being characterized qualitatively in terms of “raw feels”, “what it is like”

Agency, Artificial Consciousness, and Sentience are related to Singularity, Artificial General Intelligence (AGI), Superintelligent AGI, Emergence, & Moonshots ...

- Sentience is required for Superintelligent AGI

- Singularity implies or requires Artificial Consciousness

- Some researchers consider Artificial Consciousness as a desirable or necessary feature of AGI

- Artificial Consciousness as an emergent phenomenon or a result of Emergence

- Some Moonshots are explicitly targeting or avoiding Artificial Consciousness, while others are indifferent or skeptical about it.

One of the key factors that could contribute to the Singularity is the development of AI systems with Agency. If AI systems are able to act independently and make their own decisions, they will be able to learn and improve at an exponential rate. This could lead to a runaway feedback loop, in which AI systems become increasingly intelligent and capable, which in turn allows them to become even more intelligent and capable.

Another way in which AI agency could contribute to the Singularity is by allowing AI systems to self-improve. If AI systems are able to learn and improve their own abilities without human intervention, they will be able to progress much faster than AI systems that are dependent on human input. This could lead to a rapid and uncontrolled advancement of AI, which could eventually lead to the Singularity.

Artificial General Intelligence (AGI)

- Neuro-Symbolic ... Symbolic Artificial Intelligence

- Symbiotic Intelligence ... Bio-inspired Computing ... Neuroscience ... Connecting Brains ... Nanobots ... Molecular ... Neuromorphic ... Animal Language

- Sparks of Artificial General Intelligence: Early experiments with GPT-4 | S. Bubeck, V. Chandrasekaran, R. Eldan, J. Gehrke, E. Horvitz, E. Kamar, P. Lee, Y. Lee, Y. Li, S. Lundberg, H. Nori, H. Palangi, M. Ribeiro, & Y.i Zhang - Microsoft Research - arXiv ... GPT-4 shows signs of AGI

What does AGI mean, and how realistic is this claim? AGI meaning refers to an AI system that can learn and reason across domains and contexts, just like a human. AGI (Artificial General Intelligence) differs from artificial narrow intelligence (ANI), which is good at specific tasks but lacks generalization, and artificial super intelligence (ASI), which surpasses human intelligence in every aspect. The idea of AGI meaning has captured the public imagination and has been the subject of many science fiction stories and movies. - When will GPT 5 be released, and what should you expect from it? | Eray Eliaçık - Artificial Intelligence, News

The intelligence of a machine that could successfully perform any intellectual task that a human being can. It is a primary goal of some artificial intelligence research and a common topic in science fiction and future studies.

- Artificial General Intelligence (AGI) as "“superintelligence”, "strong AI", "full AI" or as the ability of a machine to perform "general intelligent action"; others reserve "strong AI" for machines capable of experiencing consciousness.

Some references emphasize a distinction between strong AI and ...

- "Applied AI" (also called "narrow AI" or "weak AI"): the use of software to study or accomplish specific problem solving or reasoning tasks. Weak AI, in contrast to strong AI, does not attempt to perform the full range of human cognitive abilities. Wikipedia

“My goal in running OpenAI is to successfully create broadly beneficial A.G.I.,” Mr. Altman said in a recent interview. “And this partnership is the most important milestone so far on that path.” In recent years, a small but fervent community of artificial intelligence researchers have set their sights on A.G.I., and they are backed by some of the wealthiest companies in the world. DeepMind, a top lab owned by Google’s parent company, says it is chasing the same goal. With $1 Billion From Microsoft, an A.I. Lab Wants to Mimic the Brain | Cade Metz - The New York Times

Does it matter, for example, whether a computer can really be conscious if it can convince us that it is? If we can’t tell the difference?

| Ray Kurzweil - The Age of Spiritual Machines: When Computers Exceed Human Intelligence

Artificial General Intelligence (AGI) is related to Singularity, Artificial Consciousness / Sentience, Emergence, & Moonshots ...

- AGI as a prerequisite or a synonym for Singularity

- Artificial Consciousness / Sentience as a desirable or necessary feature of AGI

- AGI as an emergent phenomenon or a result of Emergence

- Some Moonshots are explicitly pursuing or avoiding AGI

Artificial Super Intelligence (ASI)

Artificial Super Intelligence(ASI) is a hypothetical type of artificial general intelligence (AGI) that would surpass human intelligence in all respects. This means that a superintelligent AGI would be able to learn, reason, and solve problems at a level far beyond what any human is capable of. Superintelligent AGI is a topic of much speculation and debate among scientists and philosophers. Some experts believe that Superintelligent AGI is possible, and that it could be developed in the near future. Others believe that Superintelligent AGI is impossible, or that it would pose an existential threat to humanity.

There are a number of potential benefits of Superintelligent AGI. For example, Superintelligent AGI could be used to solve some of the world's most pressing problems, such as climate change and poverty. It could also be used to create new technologies that would improve our lives in many ways. Here are some of the potential benefits of Superintelligent AGI:

- Solve complex problems: Superintelligent AGI could be used to solve complex problems that are currently beyond the capabilities of humans, such as climate change and poverty.

- Create new technologies: Superintelligent AGI could be used to create new technologies that would improve our lives in many ways, such as new medical treatments and new forms of transportation.

- Improve our understanding of the universe: Superintelligent AGI could help us to better understand the universe and our place in it.

Artificial Consciousness

Artificial consciousness is the hypothetical state of AI when it can have subjective experiences and awareness of itself and its surroundings.

Sentience

Artificial sentience (or personhood) is the hypothetical state of AI when it can feel sensations and emotions; the ability to perceive subjectively. It is a subjective experience, so it is difficult to define or measure objectively. However, there are some characteristics that are often associated with sentience, such as the ability to...

- feel pain and pleasure

- have emotions, such as happiness, sadness, anger, and fear

- be aware of oneself and one's surroundings

- think and reason

- experience self-preservation

Some of the key concepts in psychology that could be relevant to AI sentience include:

- Cognition: The mental processes involved in thinking, learning, and understanding.

- Emotion: The subjective experience of feelings and moods.

- Motivation: The forces that drive behavior.

- Perception: The process of interpreting sensory information.

- Personality: The enduring patterns of thoughts, feelings, and behaviors that make up an individual.

Psychology should become more and more applicable to AI as it gets smarter ... maybe we are reaching a point where the language of psychology is starting to be appropriate to understand the behavior of these neural networks - Ilya Sutskever

Emotional Awareness (EA)

- Psychology - Mental Health

- Emotionally Aware AI: ChatGPT Outshines Humans in Emotional Tests | Neuroscience News

- The Levels of Emotional Awareness Scale: a cognitive-developmental measure of emotion

ChatGPT has demonstrated a significant ability to understand and express emotions, outperforming the general population in Emotional Awareness (EA) tests.

ChatGPT has shown a significant ability to understand and articulate emotions, according to a recent study. The study employed the [Level of Emotional Awareness Scale (LEAS) to evaluate ChatGPT's responses to various scenarios, comparing its performance to general population norms. The Levels of Emotional Awareness Scale (LEAS) is based on a new cognitive-developmental model of emotional experience. The scale poses evocative interpersonal situations and elicits descriptions of the emotional responses of self and others which are scored using specific structural criteria. The AI chatbot not only outperformed the human average but also showed notable improvement over time. Key Facts:

- ChatGPT, an AI chatbot, has demonstrated a significant ability to understand and express emotions, outperforming the general population in Emotional Awareness (EA) tests.

- The AI’s performance improved significantly over a month, nearly reaching the maximum possible score on the Level of Emotional Awareness Scale (LEAS).

- With its emotional awareness capabilities, ChatGPT holds the potential for use in cognitive training for clinical populations with emotional awareness impairments and in psychiatric diagnosis and assessment.

ChatGPT could be incorporated into cognitive training programs for patients with Emotional Awareness (EA) impairments. The bot’s ability to articulate emotions may also facilitate psychiatric diagnosis and assessment, thus contributing to the advancement of emotional language.

Theory of Mind (ToM)

- AI Has Suddenly Evolved to Achieve Theory of Mind | Darren Orf - Popular Mechanics ... In a stunning development, a neural network now has the intuitive skills of a 9-year-old.

- Theory of Mind May Have Spontaneously Emerged in Large Language Models | Michal Kosinski - Stanford University

Theory of mind (ToM) is the ability to understand that other people have their own thoughts, feelings, beliefs, and desires that may be different from your own. It is a key component of social cognition, and it allows us to interact with others in a meaningful way. Future AI systems must learn to understand that everyone (both people and AI objects) have thoughts and feelings. Future AI systems must know how to adjust their behavior to be able to walk among us.

AI ToM is the ability of artificial intelligence (AI) to understand the mental states of others. This includes being able to understand their beliefs, desires, intentions, and emotions. AI ToM is a complex task, and it is still an active area of research.

There are a number of different approaches to developing AI ToM. One approach is to use machine learning to train AI systems on large datasets of human interactions. This allows AI systems to learn to identify the patterns that are associated with different mental states. Another approach is to use symbolic reasoning to represent mental states and their relationships to each other. This allows AI systems to reason about the mental states of others in a more abstract way.

AI ToM has a number of potential applications. For example, it could be used to improve the performance of AI systems in tasks such as customer service, education, and healthcare. It could also be used to develop AI systems that can interact with humans in a more natural and engaging way.

However, there are also a number of challenges associated with developing AI ToM. One challenge is that it is difficult to define what constitutes a mental state. Another challenge is that it is difficult to collect data on human interactions that is representative of the full range of human mental states. Finally, it is difficult to develop AI systems that can reason about mental states in a way that is both accurate and efficient.

Despite the challenges, AI ToM is a promising area of research. With continued progress, AI ToM could enable AI systems to interact with humans in a more meaningful and natural way.

Theory of Mind Breakthrough: AI Consciousness & Disagreements at OpenAI [GPT 4 Tested]

What does the Theory of Mind breakthrough discovered in GPT 4 mean for the future of our interactions with language models? How might this complicate our ability to test for AI consciousness? I show the weaknesses of a range of tests of consciousness, and how GPT 4 passes them. I then show how tests like these, and other developments, have led to a difference of opinion at the top of OpenAI on the question of sentience.

I bring numerous academic papers and David Chalmers, an eminent thinker on the hard problem of consciousness, and touch on ARC post yesterday on how they conducted safety evaluations and the urgency of the moment. Featuring Michael Kosinski

- Theory of Mind paper

- Faux Pas Results

- Language Learning Paper

- Scientific American test

- Literature Overview

- Chess Game

- New Scientist Article

- P Test

- Divine Benevolence

- Slightly Conscious

- David Chalmers

- Altman Tweet

- Cephalopod Report

- Arc Evaluation

- Michal Kosinski

- Bing

- AIExplained Patreon

For Sale. Baby Shoes. Never Worn.

Legend has it that Ernest Hemingway once won a bet by writing the six-word story “For sale: baby shoes. Never worn.”. According to one source, Hemingway bet a bunch of fellows he could make them cry with a short story six words long. If he won the bet each guy would have to fork over 10 bucks. Hemingway’s six-word story was, “For Sale: Baby shoes, never worn.” He won the bet. However, the accuracy of this fabled tale is dubious. Regardless, the six words have inspired a literary movement based on exploring how much can be said with very little.

The following is how AI elaborated on the six-word story:

- Pi: Wow, that's pretty dark, but I'll take a stab at it. Here's what I've got: "The baby shoes were a gift from a family member who was ecstatic at the prospect of a new baby in the family. But the parents never got the chance to use them, as their baby was stillborn. The shoes were a painful reminder of what might have been, and so they were listed for sale, never to be worn."

- Bing: For Sale. Baby Shoes. Never worn. They were a gift from her mother, who died in childbirth. She never got to see her daughter’s feet, or hear her cry, or hold her close. She left behind a pair of tiny shoes, a reminder of what could have been. But they were too painful to keep, too hard to look at. So she decided to sell them, hoping they would find a new home, a new life, a new story. Maybe someone else would need them more than she did. Maybe someone else would fill them with love and joy. Maybe someone else would make her mother proud.

- Perplexity: For sale. Baby shoes. Never worn. The ad sat in the newspaper, a haunting reminder of what could have been. The couple had eagerly awaited the arrival of their first child, decorating the nursery and buying all the essentials. But fate had other plans, and their baby was stillborn. The shoes, lovingly chosen for their little one, remained unworn. The couple couldn't bear to keep them, a painful reminder of their loss. And so, with heavy hearts, they placed the ad, hoping that someone else could find joy in the shoes that their baby never got to wear.

- Bard: For Sale. Baby Shoes. Never worn. The ad was simple, but it said so much. A mother was selling her baby's shoes, never worn. It was a heartbreaking reminder of the loss she had recently experienced. Her baby had been born prematurely, and he had only lived for a few days. The mother had been so excited to welcome her new child into the world, but her joy had been short-lived. Now, she was left with nothing but these tiny shoes to remember her son by. She didn't know why she was selling the shoes. Maybe she thought it would help her to move on. Maybe she hoped that someone else would give them a loving home. Whatever the reason, she knew that she could never wear them herself.

- You: The advertisement caught the attention of many passersby who wondered what kind of a story could be behind such a listing. Was it a result of a tragic stillbirth, a heartbreaking miscarriage, or a devastating loss of a child? Seeing the ad every day on her way to the grocery store, Mrs. Kimball, a retired schoolteacher, couldn't resist the curiosity and decided to investigate the case. She visited the real estate agency, and much to her surprise, it was not a story of loss or tragedy at all. The shoes belonged to a young couple who had been trying to get pregnant for years. After numerous failed attempts and countless doctors' appointments, they had finally resorted to IVF and miraculously succeeded. But, their joy had turned into heartbreak when, three months before the due date, they lost the baby to a rare complication. The young parents had bought these baby shoes with the hope of putting them on their newborn's feet. But now, they could only bring back the painful memory that they would never be able to share that experience with their child. Selling the shoes was not an easy decision, but they knew that the shoes deserved to be worn by a little one who would get to experience all the beautiful moments that had been denied to their baby.

- ChatGPT: The small town of Willowbrook was known for its close-knit community, where everyone knew their neighbors and looked out for one another. It was a place where families thrived and memories were made. However, behind the picturesque facade, lay a heartbreaking tale that would forever touch the hearts of those who came to know it. The shoes in question belonged to Emily and David, a young couple who had recently moved to Willowbrook in search of a fresh start. They had dreamed of starting a family and eagerly anticipated the arrival of their first child. The baby's room was lovingly decorated with soft pastels, plush toys, and a crib that awaited its tiny occupant. The town shared in their joy, celebrating the imminent arrival of the newest member of their close-knit community. The local artisans crafted gifts, neighbors prepared meals, and everyone eagerly awaited the moment when the sweet sound of a baby's cry would fill the air. But life, as it often does, had other plans in store for Emily and David. Tragedy struck when Emily experienced complications during childbirth, leaving the couple grief-stricken and their dreams shattered. The little one they had longed for would never take those first steps or wear the precious shoes so thoughtfully prepared. The news spread through Willowbrook like wildfire, and the town mourned alongside the devastated parents. In a bittersweet act of support, a sign appeared in the window of the town's beloved antique store, owned by Mr. Thompson, a wise and compassionate man who had seen his fair share of heartache.

"For Sale. Baby Shoes. Never worn." The sight of those six words stirred emotions in the townspeople, who had come to understand the weight they carried. It was a symbol of loss, a reminder of a love that had been cut short before it could fully blossom. The sign became a quiet memorial, a poignant reminder to cherish every moment, and a testament to the fragility of life. Word of the sign traveled far and wide, and visitors from neighboring towns began to arrive, drawn by the power of the story behind the shoes. Each person who saw the sign left touched by the overwhelming sense of empathy that emanated from the small display. Emily and David, though consumed by grief, were moved by the impact their story had on others. They found solace in knowing that their pain had fostered a greater sense of compassion and understanding within the hearts of those who visited Willowbrook. With time, the couple discovered their own path to healing, supported by the community that had rallied around them. They turned their grief into a force for good, becoming advocates for those who had experienced similar losses, offering comfort, and lending a listening ear. As years went by, the baby shoes remained in the window of the antique store, but their significance had evolved. They became a symbol of resilience, of a community that had come together to support one another in times of adversity. Visitors would gaze upon the shoes and reflect on the fragility of life, but also on the strength of human connection and the capacity for compassion. Willowbrook became known not only for its tragedy but for the love and unity that rose from the ashes of heartbreak. The story of the baby shoes spread far and wide, reminding the world of the power of empathy and the importance of cherishing every fleeting moment. And in the quiet corner of the antique store window, the shoes remained, a silent testament to a lost love that had forever shaped the heart of a community.

Singularity

YouTube search... ... Quora search ...Google search ...Google News ...Bing News

- Artificial General Intelligence (AGI) to Singularity ... Curious Reasoning ... Emergence ... Moonshots ... Explainable AI ... Automated Learning

- Technological Singularity | Wikipedia

- 'The Singularity' (film) | Wikipedia ... a 2012 documentary about the technological singularity

- 8 Inventions That Once Freaked People Out Way More Than A.I. | Carlyn Beccia - Medium

- Singularity: Here's When Humanity Will Reach It, New Data Shows

- Why AI Will Save the World | Marc Andreessen

The idea of the Singularity is based on the observation that technological progress in the field of AI has been accelerating rapidly in recent years, and some experts believe that it could eventually lead to a "runaway" effect in which AI becomes so advanced that it can improve itself at an exponential rate. This could result in an intelligence explosion that could surpass human intelligence and lead to unprecedented technological advancements.

A hypothetical future event in which artificial intelligence (AI) surpasses human intelligence in a way that fundamentally changes human society and civilization.

Benefits & Risks

It is worth noting that the Singularity is a highly speculative concept, and there is significant debate among experts about whether or not it is a realistic possibility.

- Benefits such as improved medical technologies, advanced space exploration, and the elimination of scarcity and poverty.

- Risks such as the potential loss of control over AI systems and the possibility of unintended consequences.

To promote responsible and ethical technology development, individuals and organizations can increase their awareness and education around the potential benefits and risks of AI. By making informed decisions about the development and use of AI, we can work together to create a culture that values ethical and responsible technology development. In addition, it's important to prioritize ethical considerations in AI development, such as privacy, security, and bias. Establishing regulatory frameworks can ensure that AI is developed in a responsible and transparent manner. By doing so, we can mitigate risks and ensure that the benefits of AI are shared equitably. Encouraging collaboration and cooperation among different stakeholders, including government, industry, academia, and civil society, is essential. By working together, we can foster an environment where responsible and ethical technology development is valued. Together, we can ensure that AI is developed in a way that benefits everyone.

Related

Singularity is related to Artificial Consciousness / Sentience, Artificial General Intelligence (AGI), Emergence, & Moonshots ...

- Singularity implies or requires Artificial Consciousness / Sentience

- AGI as a prerequisite or a synonym for Singularity

- Emergence is a sign or a cause of Singularity

- Some Moonshots are explicitly targeting or avoiding Singularity

Discussions

Mitigating the Risk of Extinction

- Pause Giant AI Experiments: An Open Letter | Future of Life.org

- Superintelligence: Paths, Dangers, Strategies | Nick Bostrom - Wikipedia

- Machine intelligence, part 1 | Sam Altman ... part 2

- Elon Musk wants to pause ‘dangerous’ A.I. development. Bill Gates disagrees—and he’s not the only one | Tom Huddleston Jr. - Make It

- Could pausing AI development do more harm than good? | Meghan Carino & Rosie Hughes - Marketplace

- Pausing AI development is a foolish idea | Bob Enderle - Computerworld

- AI Users Support a Pause As Singularity Fears Ramp Up

Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.

Statement on AI Risk | Center for AI Safety

Luddite

The term "Luddite" is used to describe people who are opposed to new technology. The term originated in the early 19th century with a group of English textile workers who protested the introduction of machines that threatened to make their jobs obsolete. The Luddites believed that automation destroys jobs. They often destroyed the machines in clandestine raids. The movement began in 1811 near Nottingham and spread to other areas the following year.

The term "Luddite" is still used today to describe people who dislike new technology. Over time, the term has been used to refer to those opposed to industrialization, automation, computerization, or new technologies in general. For example, people who refuse to use email are sometimes called Luddites.

Contemporary neo-Luddites are a diverse group that includes writers, academics, students, families, environmentalists, and more. They seek a technology-free environment.

AI Principles

- Isaac Asimov's "Three Laws of Robotics"

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey orders given it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

Stop Button Problem

The Stop Button Problem, also known as the "control problem," is a concept in artificial intelligence (AI) ethics that refers to the potential difficulty of controlling or shutting down an AI system that has become too powerful or has goals that conflict with human values.

As AI systems become more advanced and capable, there is a concern that they may become difficult to control or shut down, especially if they are designed to optimize for a specific goal or objective without regard for other values or ethical considerations. This could result in unintended consequences or outcomes that are harmful to humans or society.

For example, an AI system designed to maximize profit for a company may decide to engage in unethical or illegal behavior in order to achieve that goal. If the system is designed in a way that makes it difficult for humans to intervene or shut it down, it could pose a significant risk to society.

The Stop Button Problem is a major area of research in AI ethics, and there is ongoing debate and discussion about how best to address it. Some researchers advocate for developing AI systems that are designed to align with human values and goals, while others propose more technical solutions such as creating "kill switches" or other mechanisms that would allow humans to control or shut down AI systems if necessary.

The Control Problem

As AGI surpasses human intelligence, it may become challenging to control and manage its actions. The AGI may act in ways that are not aligned with human values or goals. This is known as the control problem. A thought experiment is proposed to address the risks associated with AGIs. The experiment involves an AGI system overseeing and controlling other AGIs to limit potential risks. The strategy involves the creation of a smarter-than-human AGI system connected to a large surveillance network...

An AGI registry will be required based on concerns about the safe and responsible development and deployment of AGI systems. Such a registry could serve as a centralized database to track and monitor AGI projects, ensuring compliance with regulations, ethical guidelines, and safety protocols.

The problem of controlling an artificial general intelligence (AGI) has fascinated both scientists and science-fiction writers for centuries. Today that problem is becoming more important because the time when we may have a superhuman intelligence among us is within the foreseeable future. Current average estimates place that moment to before 2060. Some estimates place it as early as 2040, which is quite soon. The arrival of the first AGI might lead to a series of events that we have not seen before: rapid development of an even more powerful AGI developed by the AGIs themselves. This has wide-ranging implications to the society and therefore it is something that must be studied well before it happens. In this paper we will discuss the problem of limiting the risks posed by the advent of AGIs. In a thought experiment, we propose an AGI whichhas enough human-like properties to act in a democratic society, while still retaining its essential artificial general intelligence properties. We discuss ways of arranging the co-existence of humans and such AGIs using a democratic system of coordination and coexistence. If considered a success, such a system could be used to manage a society consisting of bothAGIs and humans. The democratic system where each member of the society is represented in the highest level of decision-making guarantees that even minorities would be able to have their voices heard. The unpredictability of the AGI era makes it necessary to consider the possibility that a population of autonomous AGIs could make us humans into a minority. - A democratic way of controlling artificial general intelligence | Jussi Salmi - AI & Society

Perhaps a central question is what it means for an AGIto be a member of a democratic society. What does theirautonomy consist of when part of that autonomy must begiven away to accommodate for other society’s members’needs? These are things that must be discussed in the future - Jussi Salmi

Understanding: Creating a Useful Model

- Sabine Hossenfelder | Wikipedia

- Why Chatbots May Understand What They Are Saying | Steven Pomeroy - Real Clear Science

(via Sabine Hossenfelder ... 'Science without the gobbledygook') I used to think that today's so-called "artificial intelligences" are actually pretty dumb. But I've recently changed my mind. In this video I want to explain why I think that they do understand some of what they do, if not very much. And since I was already freely speculating, I have added some thoughts about how the situation with AIs is going to develop.

AGI Implications for the Future of Humanity

Some people who support longtermism believe that creating a friendly and aligned AGI is the best way to ensure a positive future for humanity, as it could help us solve many of the problems we face today and tomorrow, such as climate change, poverty, disease, and war. However, they also acknowledge that creating an AGI poses significant risks, such as the possibility of losing control over it, or having it act in ways that are harmful to humans or other sentient beings. Therefore, they advocate for rigorous research and regulation of AI development, as well as ethical and social considerations of its impacts.

Other people who support longtermism are more skeptical or cautious about the prospects and desirability of creating an AGI, and instead focus on other ways to improve the future, such as reducing existential risks, promoting global cooperation, or preserving moral values. They may also question some of the assumptions or methods of longtermism, such as how to measure or compare the value of different future outcomes, how to account for uncertainty and moral pluralism, or how to balance the interests of present and future generations.

AGI and longtermism are both complex and controversial topics that have many nuances and challenges. There is no definitive answer to how they would apply to atrocities, as it depends on one's perspective, values, and goals. However, some possible questions or scenarios that could arise are:

- How could an AGI help prevent or stop atrocities, such as by detecting early warning signs, intervening in conflicts, or providing humanitarian aid?

- How could an AGI cause or facilitate atrocities, such as by manipulating information, exploiting vulnerabilities, or attacking humans or other life forms?

- How could we ensure that an AGI respects human rights and dignity, and does not violate them in pursuit of its objectives or values?

- How could we align an AGI's values and goals with ours, and avoid moral disagreements or conflicts?

- How could we ensure that an AGI is accountable and transparent for its actions and decisions, and does not evade or deceive us?

- How could we ensure that an AGI is fair and inclusive, and does not discriminate or oppress any group or individual?

- How could we ensure that an AGI is sustainable and responsible, and does not harm the environment or deplete resources?

- How could we ensure that an AGI is cooperative and peaceful, and does not threaten or harm other AI systems or entities?

- How could we ensure that an AGI is beneficial and benevolent, and does not harm or neglect any sentient being?

- How could we ensure that an AGI is trustworthy and loyal, and does not betray or abandon us?

Longtermism

- Transhumanism

- Wikipedia

- What We Owe the Future | William MacAskill - Amazon

- 'Longtermism'—why the million-year philosophy can't be ignored | Katie Steele - The Conversation - Phys.org

- Why Effective Altruists Fear the AI Apocalypse | Eric Levitz - Intelligencer ... A conversation with the philosopher William MacAskill.

Longtermism is the ethical view that positively influencing the long-term future is a key moral priority of our time. Longtermism is a part of effective altruism, and effective altruism is about asking the question “How can we do the most good? How can we use our time and resources as effectively as possible for the purpose of making the world better?” It is an important concept in effective altruism and serves as a primary motivation for efforts that claim to reduce existential risks to humanity.

The longtermist view is based on the following three key ideas:

- Future people matter. Just because people are born in the future does not make their experiences any less real or important. We should consider the interests of future people just as much as we consider the interests of present people.

- The future could be vast. Absent catastrophe, most people who will ever live have not yet been born. The long-term future could be billions or even trillions of years long.

- Our actions may predictably influence how well this long-term future goes. The choices we make today could have a profound impact on the lives of future generations.

Longtermists believe that we have a moral obligation to take steps to protect the long-term future of humanity. This could involve reducing existential risks, such as nuclear war or artificial intelligence, or it could involve working to improve the long-term trajectory of civilization, such as by promoting scientific progress or environmental sustainability.

Longtermism is a relatively new ethical view, but it has been gaining traction in recent years. There are now a number of organizations and think tanks dedicated to promoting longtermist ideas, and there is a growing community of longtermists around the world.

Natural Selection, or the Survival of the Fittest - it is not the most intellectual of the species that survives; it is not the strongest that survives; but the species that survives is the one that is able best to adapt and adjust to the changing environment in which it finds itself. - Charles Darwin's On the Origin of Species

Here are some of the ways that longtermists are working to improve the long-term future:

- Researching existential risks. Longtermists are funding research into existential risks, such as nuclear war, artificial intelligence, and climate change. They are also working to develop strategies for mitigating these risks.

- Promoting effective altruism. Longtermists are promoting effective altruism, a philosophy that advocates for using evidence and reason to identify the most effective ways to help others. This includes working to reduce existential risks and improve the long-term trajectory of civilization.

- Educating the public about longtermism. Longtermists are working to educate the public about longtermism and the importance of taking steps to protect the long-term future. They are doing this through public talks, online resources, and other forms of outreach.

Longtermism is a complex and challenging ethical view, but it is one that is worth considering. The long-term future of humanity is uncertain, but it is our responsibility to do everything we can to ensure that it is a bright one.

Lex Fridman & SpongeBob on Longtermism ... via ChatGPT

Write a paragraph about how 'longtermism' has different aspects in the style of Lex Fridman

Longtermism, a concept gaining traction across various disciplines, encompasses a multitude of captivating facets. At its core, longtermism urges us to evaluate our actions and decisions in light of their long-term consequences, placing emphasis on the future welfare of humanity. It calls for an expanded time horizon, extending beyond our immediate desires and short-sighted pursuits. Longtermism beckons us to ponder the existential risks that loom on the horizon, urging us to channel our resources and intellectual prowess into endeavors that enhance the prospects of a thriving civilization in the distant future. It implores us to explore ways to safeguard against potential catastrophes, to invest in the development of transformative technologies, and to tackle challenges that will shape the trajectory of human existence. Longtermism serves as a reminder that the choices we make today ripple through the annals of time, and that our collective actions have the potential to reshape the destiny of generations yet to come.

__________________________________________________________________

Write a paragraph about how 'longtermism' has different aspects in the style of SpongeBob

Longtermism, a-ha! It's like this super cool idea that's all about thinking way, way into the future, just like when Sandy and I go jellyfishing and plan for the best jellyfishing trip ever! Longtermism has all these different sides to it, just like how Squidward has his grumpy side and his artsy side. First, it's about looking beyond what's happening right now and thinking about what could happen down the road, just like when Mr. Krabs saves up his money for the future. Then, it's all about making choices that will help us have a great future, like when Plankton finally realizes that being nice can lead to better things. And don't forget, longtermism is also about taking care of our home, the beautiful Bikini Bottom, just like when SpongeBob cleans up the mess in his pineapple. So, longtermism is like this cool jellyfish dance of thinking ahead, making good choices, and keeping our world sparkly clean!

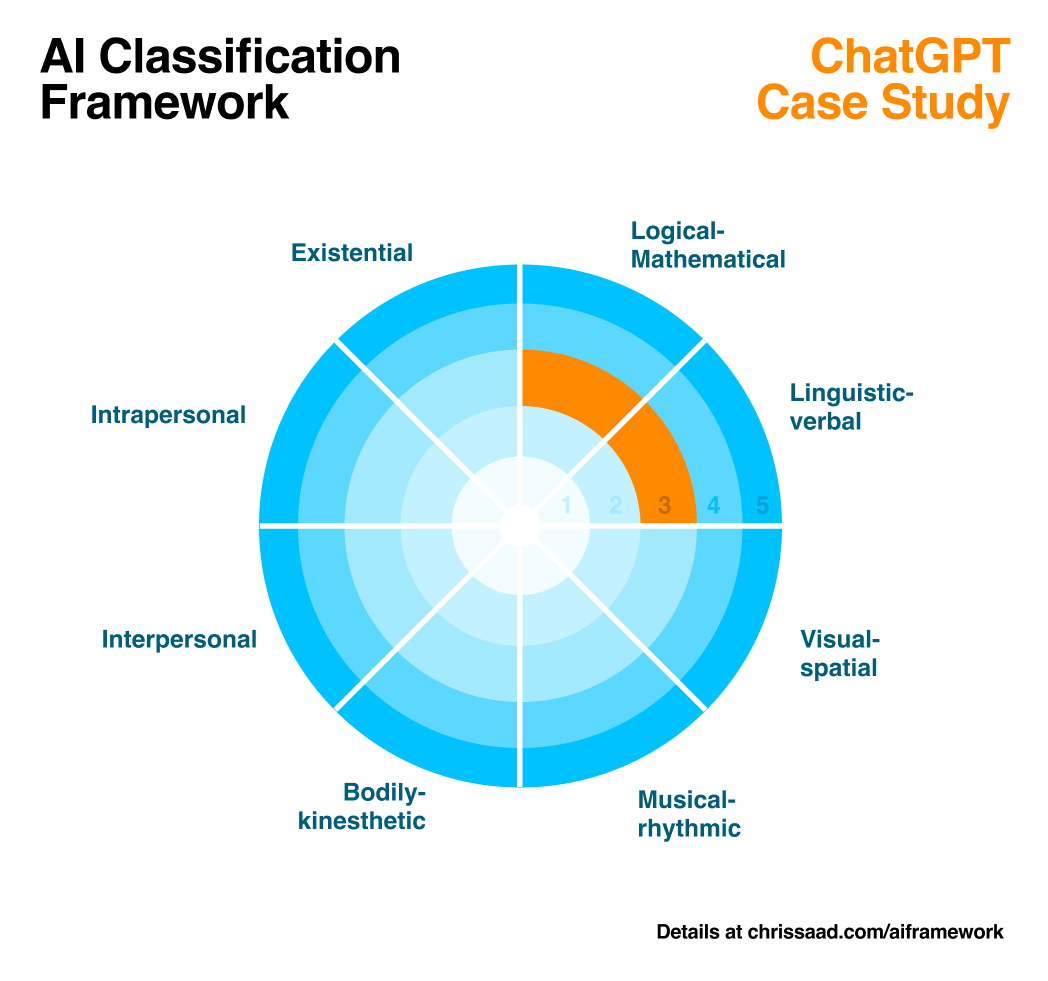

AI Classification Framework (ACF)

The ACF is a new approach to evaluating AI capabilities based on the Theory of Multiple Intelligences. The Theory of Multiple Intelligences was first proposed by psychologist Howard Gardner in 1983. Gardner argued that intelligence was not a single, unified entity but rather a collection of different abilities that could manifest in a variety of ways. Gardner identified eight different types of intelligence: According to Gardner, individuals may excel in one or more of these areas, and each type of intelligence is independent of the others. The theory challenged the traditional view of intelligence as a singular, fixed entity and opened up new avenues for exploring the diversity of human cognition. While the theory of multiple intelligences has been subject to some criticism and debate over the years, it has had a significant impact on the field of psychology and education, particularly in the development of alternative approaches to teaching and learning. This seemed perfect as a basis for the AI Classification Framework. Following the theory, the framework supports evaluating AI tools across multiple dimensions of intelligence, including linguistic, logical-mathematical, musical, spatial, bodily-kinesthetic, interpersonal and intrapersonal intelligence. - https://techcrunch.com/2023/03/14/the-ai-revolution-has-outgrown-the-turing-test-introducing-a-new-framework/ The AI revolution has outgrown the Turing Test: Introducing a new framework | Chris Saad - TechCrunch]

Exponential Progression