Bidirectional Long Short-Term Memory (BI-LSTM) with Attention Mechanism

Youtube search... ...Google search

- Recurrent Neural Network (RNN) Variants:

- Long Short-Term Memory (LSTM)

- Gated Recurrent Unit (GRU)

- Bidirectional Long Short-Term Memory (BI-LSTM)

- Bidirectional Long Short-Term Memory (BI-LSTM) with Attention Mechanism

- Average-Stochastic Gradient Descent (SGD) Weight-Dropped LSTM (AWD-LSTM)

- Hopfield Network (HN)

- Attention Mechanism ...Transformer ...Generative Pre-trained Transformer (GPT) ... GAN ... BERT

- Conversational AI ... ChatGPT | OpenAI ... Bing/Copilot | Microsoft ... Gemini | Google ... Claude | Anthropic ... Perplexity ... You ... phind ... Grok | xAI ... Groq ... Ernie | Baidu

- Risk, Compliance and Regulation ... Ethics ... Privacy ... Law ... AI Governance ... AI Verification and Validation

- Cybersecurity ... OSINT ... Frameworks ... References ... Offense ... NIST ... DHS ... Screening ... Law Enforcement ... Government ... Defense ... Lifecycle Integration ... Products ... Evaluating

- Recurrent Neural Network Language Models for Open Vocabulary Event-Level Cyber Anomaly Detection | Pacific Northwest National Laboratory (PNNL)

- Deep Learning for Unsupervised Insider Threat Detection in Structured Cybersecurity Data Streams | Pacific Northwest National Laboratory (PNNL)

- SafeKit | Pacific Northwest National Laboratory (PNNL) - GitHub

- Attention-Based Bidirectional Long Short-Term Memory Networks for Relation Classification | Peng Zhou, Wei Shi, Jun Tian, Zhenyu Qi, Bingchen Li, Hongwei Hao, Bo Xu

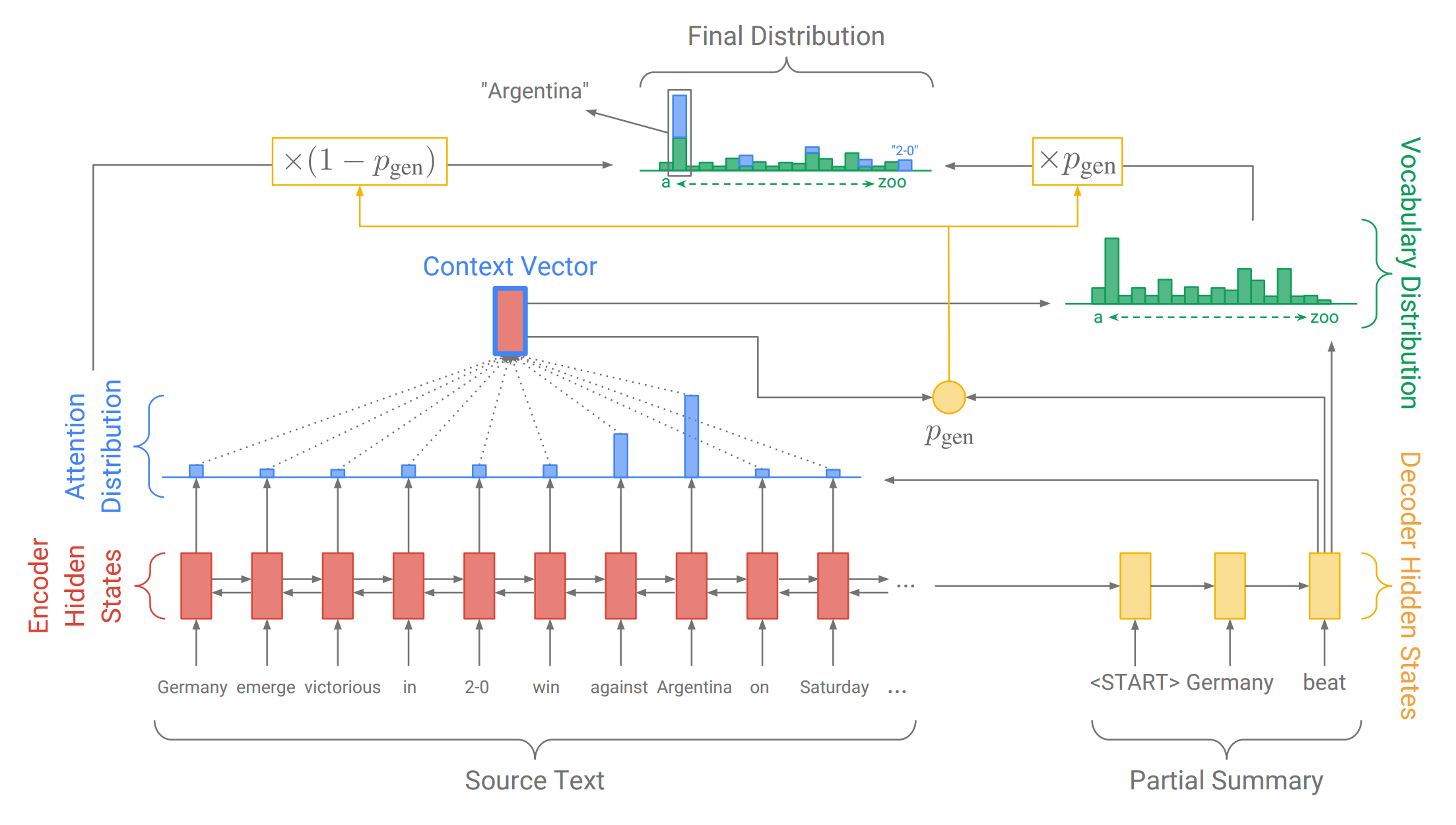

- Taming Recurrent Neural Networks for Better Summarization | Abigail See

By incorporating attention variants into an RNN (language models) opportunities are created for model introspection and analysis without sacrificing performance. Attention-equipped LSTM models have been used to improve performance on complex sequence modeling tasks. Attention provides a dynamic weighted average of values from different points in a calculation during the processing of a sequence to provide long term context for downstream discriminative or generative prediction. Recurrent Neural Network Attention Mechanisms for Interpretable System Log Anomaly Detection | Western Washington University and Pacific Northwest National Laboratory (PNNL