What is Artificial Intelligence (AI)?

- Artificial Intelligence (AI) ... Generative AI ... Machine Learning (ML) ... Deep Learning ... Neural Network ... Reinforcement ... Learning Techniques

- Conversational AI ... ChatGPT | OpenAI ... Bing/Copilot | Microsoft ... Gemini | Google ... Claude | Anthropic ... Perplexity ... You ... phind ... Ernie | Baidu

- Artificial General Intelligence (AGI) to Singularity ... Curious Reasoning ... Emergence ... Moonshots ... Explainable AI ... Automated Learning

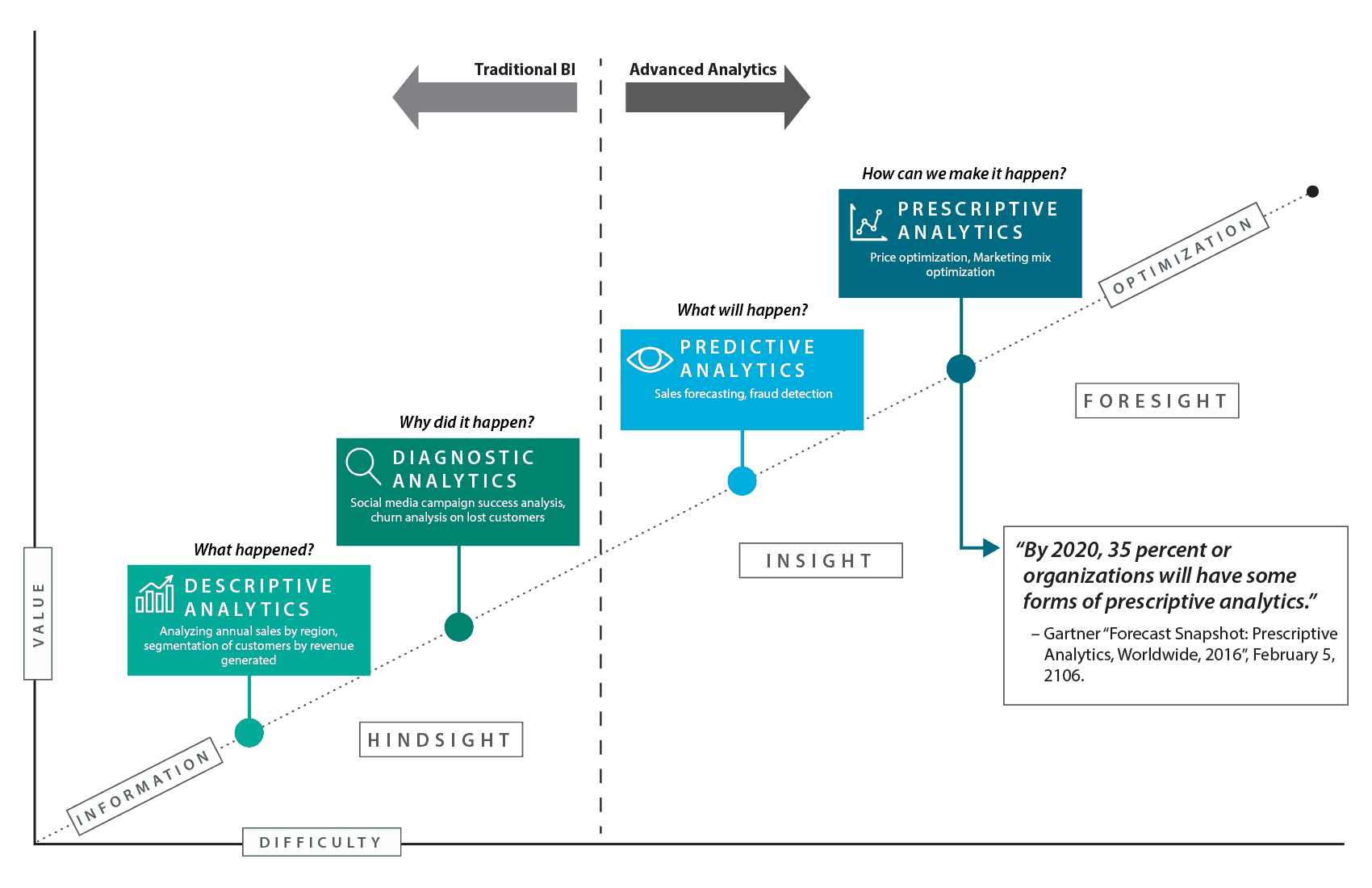

- Prescriptive Analytics ... Predictive Analytics ... Predictive Maintenance ... Forecasting ... Market Trading ... Sports Prediction ... Marketing ... Politics ... Excel

- Artificial Intelligence | Wikipedia

- Data Science ... Governance ... Preprocessing ... Exploration ... Interoperability ... Master Data Management (MDM) ... Bias and Variances ... Benchmarks ... Datasets

- Artificial Intelligence Law

- Machine Learning Roadmap 2020 | Whimsical (still 90% valid for 2023)

- Artificial Intelligence Technology Landscape | Callaghan Innovation

- What is AI? Everything you need to know about Artificial Intelligence | Nick Heath - ZDNet

- An AI Debated Its Own Potential for Good vs. Harm. Here’s What It Came up With | Vanessa Bates Ramirez | SingularityHub

- No. 3253: Machine Learning | Fitz Walker - Engines of Our Ingenuity: The University of Houston series

- Getting your head around some key AI concepts and terms | purposeandmeans.io

Artificial Intelligence (AI) is a broad field of computer science that focuses on creating intelligent machines that can perform tasks that typically require human-like intelligence, such as problem-solving, decision-making, and perception.

- Machine Learning (ML) is a subset of AI that focuses on creating algorithms that can automatically learn and improve from experience without being explicitly programmed. In other words, ML algorithms can learn from data and improve their performance over time without human intervention.

- Generative AI creates original content, including text, images, video, and computer code, by identifying patterns in large quantities of training data.

- Deep Learning is a type of machine learning that uses artificial neural networks to enable digital systems to learn and make decisions based on unstructured, unlabeled data. It is a subset of Machine Learning (ML) and is essentially a neural network with three or more layers. These neural networks attempt to simulate the behavior of the human brain, allowing them to “learn” from large amounts of data.
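
As a minimal sketch of what "learning from data" means in the Machine Learning entry above, the Python snippet below fits a straight line to five points by gradient descent. The data, the function names `fit_line` and `mean_squared_error`, the learning rate, and the step count are all illustrative assumptions, not taken from any source listed on this page.

```python
def mean_squared_error(w, b, xs, ys):
    """Average squared gap between the line w*x + b and the targets."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit_line(xs, ys, learning_rate=0.05, steps=2000):
    """Learn a slope w and intercept b from examples by gradient descent."""
    w, b = 0.0, 0.0          # start with no knowledge of the data
    n = len(xs)
    history = []             # error before each update, to show improvement
    for _ in range(steps):
        history.append(mean_squared_error(w, b, xs, ys))
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Move the parameters a small step against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b, history


# Toy data generated from y = 2x + 1 (an assumption made for this example).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b, history = fit_line(xs, ys)
print("learned slope and intercept:", round(w, 3), round(b, 3))
print("error at start vs. end:", history[0], history[-1])
```

Nothing in the code states the rule that produced the data. The parameters are adjusted only by measuring the error on examples, which is the sense in which the algorithm improves "without being explicitly programmed".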

About

Section 238(g) of the John S. McCain National Defense Authorization Act, 2019 (Pub. L. 115-232), August 30, 2018, defines AI to include the following:

- Any artificial system that performs tasks under varying and unpredictable circumstances without significant human oversight, or that can learn from experience and improve performance when exposed to data sets;

- An artificial system developed in computer software, physical hardware, or other context that solves tasks requiring human-like perception, cognition, planning, learning, communication, or physical action;

- An artificial system designed to think or act like a human, including cognitive architectures and neural networks;

- A set of techniques, including machine learning, that is designed to approximate a cognitive task; and

- An artificial system designed to act rationally, including an intelligent software agent or embodied robot that achieves goals using perception, planning, reasoning, learning, communicating, decision making, and acting.

Posted on December 27, 2017 by Swami Chandrasekaran

Terms

Age of AI: Everything you need to know about artificial intelligence | Devin Coldewey - TechCrunch

- Artificial General Intelligence (AGI), or strong AI: an intelligence that is powerful enough not just to do what people do, but learn and improve itself like we do.

- Diffusion: models are trained by showing them images that are gradually degraded by adding digital noise until there is nothing left of the original. By observing this, diffusion models learn to do the process in reverse as well, gradually adding detail to pure noise in order to form an arbitrarily defined image.

- Fine tuning: models can be fine tuned by giving them a bit of extra training using a specialized dataset.

- Foundation Model: foundation models are the big from-scratch models that need supercomputers to run, but they can be trimmed down to fit in smaller containers, usually by reducing the number of parameters.

- Generative AI: an AI model that produces an original output, like an image or text. Some AIs summarize, some reorganize, some identify, and so on; a generative AI is one that actually generates something (whether or not it “creates” is arguable).

- Hallucination: Originally this was a problem of certain imagery in training slipping into unrelated output, such as buildings that seemed to be made of dogs due to an over-prevalence of dogs in the training set. Now an AI is said to be hallucinating when, because it has insufficient or conflicting data in its training set, it just makes something up.

- Inference: stating a conclusion by reasoning about available evidence. Of course it is not exactly “reasoning,” but statistically connecting the dots in the data it has ingested and, in effect, predicting the next dot.

- Large Language Model (LLM): LLMs are trained on pretty much all the text making up the web and much of English literature. Ingesting all this results in a foundation model (see above) of enormous size. LLMs are able to converse and answer questions in natural language and imitate a variety of styles and types of written documents, as demonstrated by the likes of ChatGPT, Claude, and LLaMa.

- Model: the actual collection of code that accepts inputs and returns outputs. What a model does or produces depends on how it is trained.

- Neural network: they are just lots of dots and lines: the dots are data and the lines are statistical relationships between those values. As in the brain, this can create a versatile system that quickly takes an input, passes it through the network, and produces an output. This system is called a model.

- Training: the neural networks making up the base of the system are exposed to a bunch of information in what’s called a dataset or corpus. In doing so, these giant networks create a statistical representation of that data. The dataset consists of words or images that must be analyzed and given representation in the giant statistical model. Once the model is done cooking, on the other hand, it can be much smaller and less demanding when it’s being used, a process called inference.
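
The "Neural network", "Model", and "Inference" entries above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the weights are set by hand rather than learned through training, the task (the XOR function) is chosen for its small size, and the names `step`, `layer`, and `predict` are hypothetical.

```python
def step(z):
    """Simplest possible activation: fire (1.0) if the input is positive."""
    return 1.0 if z > 0 else 0.0


def layer(inputs, weights, biases):
    """One layer: each output is a weighted sum of all inputs, then step()."""
    return [
        step(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# The "lines" of the network: hand-picked weights (an assumption; a real
# model would obtain these numbers from training on a dataset).
HIDDEN_WEIGHTS = [[1.0, 1.0],   # hidden unit 1 behaves like OR
                  [1.0, 1.0]]   # hidden unit 2 behaves like AND
HIDDEN_BIASES = [-0.5, -1.5]
OUTPUT_WEIGHTS = [[1.0, -1.0]]  # output fires for "OR but not AND"
OUTPUT_BIASES = [-0.5]


def predict(x1, x2):
    """Inference: pass an input through the fixed network, read the output."""
    hidden = layer([x1, x2], HIDDEN_WEIGHTS, HIDDEN_BIASES)
    return layer(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)[0]


# Run inference on every possible pair of binary inputs.
results = {(a, b): predict(a, b) for a in (0, 1) for b in (0, 1)}
for inputs, output in results.items():
    print(inputs, "->", output)
```

Here the weights and biases together play the role of the model, and calling `predict` is inference. Training would be the separate, far more expensive process of finding those numbers from data.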