Difference between revisions of "Train, Validate, and Test"

m |

m |

||

| (26 intermediate revisions by the same user not shown) | |||

| Line 2: | Line 2: | ||

|title=PRIMO.ai | |title=PRIMO.ai | ||

|titlemode=append | |titlemode=append | ||

| − | |keywords=artificial, intelligence, machine, learning, models | + | |keywords=ChatGPT, artificial, intelligence, machine, learning, GPT-4, GPT-5, NLP, NLG, NLC, NLU, models, data, singularity, moonshot, Sentience, AGI, Emergence, Moonshot, Explainable, TensorFlow, Google, Nvidia, Microsoft, Azure, Amazon, AWS, Hugging Face, OpenAI, Tensorflow, OpenAI, Google, Nvidia, Microsoft, Azure, Amazon, AWS, Meta, LLM, metaverse, assistants, agents, digital twin, IoT, Transhumanism, Immersive Reality, Generative AI, Conversational AI, Perplexity, Bing, You, Bard, Ernie, prompt Engineering LangChain, Video/Image, Vision, End-to-End Speech, Synthesize Speech, Speech Recognition, Stanford, MIT |description=Helpful resources for your journey with artificial intelligence; videos, articles, techniques, courses, profiles, and tools |

| − | |description=Helpful resources for your journey with artificial intelligence; videos, articles, techniques, courses, profiles, and tools | + | |

| + | <!-- Google tag (gtag.js) --> | ||

| + | <script async src="https://www.googletagmanager.com/gtag/js?id=G-4GCWLBVJ7T"></script> | ||

| + | <script> | ||

| + | window.dataLayer = window.dataLayer || []; | ||

| + | function gtag(){dataLayer.push(arguments);} | ||

| + | gtag('js', new Date()); | ||

| + | |||

| + | gtag('config', 'G-4GCWLBVJ7T'); | ||

| + | </script> | ||

}} | }} | ||

| − | [ | + | [https://www.youtube.com/results?search_query=Train+Validate+Test+Neural+Network YouTube search...] |

| − | [ | + | [https://www.google.com/search?q=Train+Validate+Test+Neural+Network ...Google search] |

| + | * [[AI Solver]] ... [[Algorithms]] ... [[Algorithm Administration|Administration]] ... [[Model Search]] ... [[Discriminative vs. Generative]] ... [[Train, Validate, and Test]] | ||

| + | * [[Strategy & Tactics]] ... [[Project Management]] ... [[Best Practices]] ... [[Checklists]] ... [[Project Check-in]] ... [[Evaluation]] ... [[Evaluation - Measures|Measures]] | ||

| + | ** [[Evaluation - Measures#Accuracy|Accuracy]] | ||

| + | ** [[Evaluation - Measures#Precision & Recall (Sensitivity)|Precision & Recall (Sensitivity)]] | ||

| + | ** [[Evaluation - Measures#Specificity|Specificity]] | ||

| + | ** [[Benchmarks]] | ||

| + | ** [[Bias and Variances]] | ||

| + | ** [[Explainable / Interpretable AI]] | ||

| + | ** [[Algorithm Administration#Model Monitoring|Model Monitoring]] | ||

| + | ** [[ML Test Score]] | ||

| + | * [[AI Verification and Validation]] | ||

* [[Objective vs. Cost vs. Loss vs. Error Function]] | * [[Objective vs. Cost vs. Loss vs. Error Function]] | ||

| − | |||

* [[Overfitting Challenge]] | * [[Overfitting Challenge]] | ||

| − | * [ | + | * [https://medium.com/datadriveninvestor/data-science-essentials-why-train-validation-test-data-b7f7d472dc1f Data Science essentials: Why train-validation-test data? | Sagar Patel - Medium] |

| − | * [ | + | * [https://towardsdatascience.com/train-validation-and-test-sets-72cb40cba9e7 About Train, Validation and Test Sets in Machine Learning | Tarang Shah - Towards Data Science] |

| − | * [ | + | * [https://machinelearningmastery.com/difference-test-validation-datasets/?source=post_page--------------------------- What is the Difference Between Test and Validation Datasets? | Jason Brownlee - Machine Learning Mastery] |

* Training Dataset: The sample of data used to fit the model. | * Training Dataset: The sample of data used to fit the model. | ||

* Validation Dataset: The sample of data used to provide an unbiased evaluation of a model fit on the training dataset while tuning model hyperparameters. The evaluation becomes more biased as skill on the validation dataset is incorporated into the model configuration. | * Validation Dataset: The sample of data used to provide an unbiased evaluation of a model fit on the training dataset while tuning model hyperparameters. The evaluation becomes more biased as skill on the validation dataset is incorporated into the model configuration. | ||

* Test Dataset: The sample of data used to provide an unbiased evaluation of a final model fit on the training dataset. | * Test Dataset: The sample of data used to provide an unbiased evaluation of a final model fit on the training dataset. | ||

| + | * [https://www.calypsoai.com CalypsoAI] Validating vision ML models via tests: brightness, contrast, saturation, pixilation, compression, blurring, random noise | ||

| + | * [https://landing.ai/ Landing AI] ...LandingLens platform includes a wide array of features to help teams develop and deploy reliable and repeatable inspection systems | ||

| + | |||

| − | + | https://miro.medium.com/max/700/1*OJVhBtg5YgeW7rKXoxKQxg.png | |

| + | {|<!-- T --> | ||

| + | | valign="top" | | ||

| + | {| class="wikitable" style="width: 550px;" | ||

| + | || | ||

<youtube>Zi-0rlM4RDs</youtube> | <youtube>Zi-0rlM4RDs</youtube> | ||

| + | <b>Train, Test, & Validation Sets explained | ||

| + | </b><br>In this video, we explain the concept of the different data sets used for training and testing an artificial neural network, including the training set, testing set, and validation set. We also show how to create and specify these data sets in code with [[Keras]]. | ||

| + | |} | ||

| + | |<!-- M --> | ||

| + | | valign="top" | | ||

| + | {| class="wikitable" style="width: 550px;" | ||

| + | || | ||

<youtube>MyBSkmUeIEs</youtube> | <youtube>MyBSkmUeIEs</youtube> | ||

| + | <b>Lecture 0603 Model selection and training/validation/test sets | ||

| + | </b><br>Machine Learning by [[Creatives#Andrew Ng |Andrew Ng]] [Coursera] | ||

| + | |} | ||

| + | |}<!-- B --> | ||

| − | + | = If you have an training accuracy of 90%, and you are not happy with that, what do you do? Collect more data? Try other algorithms? Collect more diverse data? How to decide what to do? = | |

| − | = | ||

CONTENT FROM: | CONTENT FROM: | ||

| Line 31: | Line 67: | ||

<b>Structuring Machine Learning Projects; Course 3 | [[Creatives#Andrew Ng|Andrew Ng]]'s Deep Learning Series</b> | <b>Structuring Machine Learning Projects; Course 3 | [[Creatives#Andrew Ng|Andrew Ng]]'s Deep Learning Series</b> | ||

| − | * [ | + | * [https://www.coursera.org/specializations/deep-learning?utm_source=deeplearningai&utm_medium=institutions&utm_campaign=SocialYoutubeDLSC3W1L02 Orthogonalization (C3W1L02) |] [[Creatives#Andrew Ng|Andrew Ng]] |

== Chain of assumptions in Machine Learning (ML) == | == Chain of assumptions in Machine Learning (ML) == | ||

| − | # Fit training set well on [[Objective vs. Cost vs. Loss vs. Error Function|cost function]] If this is not happening, try bigger network, or different optimization algorithm. You should achieve human level performance here. | + | # Fit training set well on [[Objective vs. Cost vs. Loss vs. Error Function|cost function]] If this is not happening, try bigger network, or different optimization algorithm. You should achieve human level [[Evaluation - Measures|performance]] here. |

# Fit dev set well on [[Objective vs. Cost vs. Loss vs. Error Function|cost function]] If this is not happening, it means you are [[Overfitting Challenge|overfitting]] training set. Try [[Regularization]], or train on a bigger training set. Or a different neural network (NN) architecture. | # Fit dev set well on [[Objective vs. Cost vs. Loss vs. Error Function|cost function]] If this is not happening, it means you are [[Overfitting Challenge|overfitting]] training set. Try [[Regularization]], or train on a bigger training set. Or a different neural network (NN) architecture. | ||

# Fit test set well on [[Objective vs. Cost vs. Loss vs. Error Function|cost function]] If fit on test set is much worse than fit on dev set, it means you have [[Overfitting Challenge|overfit]] the dev set. You should get a bigger dev set. Or try a different neural network (NN) architecture. | # Fit test set well on [[Objective vs. Cost vs. Loss vs. Error Function|cost function]] If fit on test set is much worse than fit on dev set, it means you have [[Overfitting Challenge|overfit]] the dev set. You should get a bigger dev set. Or try a different neural network (NN) architecture. | ||

| − | # Perform well in the real world If performance on dev/test set is good, but performance in real world is bad, check if [[Objective vs. Cost vs. Loss vs. Error Function|cost function]] is really what you care about. | + | # Perform well in the real world If [[Evaluation - Measures|performance]] on dev/test set is good, but [[Evaluation - Measures|performance]] in real world is bad, check if [[Objective vs. Cost vs. Loss vs. Error Function|cost function]] is really what you care about. |

[[Creatives#Andrew Ng|Andrew Ng]] does not like early stopping because it affects fit on both training and dev sets, which leads to confusion. | [[Creatives#Andrew Ng|Andrew Ng]] does not like early stopping because it affects fit on both training and dev sets, which leads to confusion. | ||

| Line 51: | Line 87: | ||

Dev and test set should come from the same distribution and should reflect the data you want to do well on. | Dev and test set should come from the same distribution and should reflect the data you want to do well on. | ||

| − | When data was less abundant, <b>60/20/20</b> would be good training/dev/test was good split. In data abundant neural net scenario, <b>98/1/1</b> is good distribution. Test set should be just good enough to give high confidence in overall performance of system. Some people just omit test set too. | + | When data was less abundant, <b>60/20/20</b> would be good training/dev/test was good split. In data abundant neural net scenario, <b>98/1/1</b> is good distribution. Test set should be just good enough to give high confidence in overall [[Evaluation - Measures|performance]] of system. Some people just omit test set too. |

Sometimes you may wish to change metric mid way. While building cat vs no cat image, may be in a "better" classfier, pornographic images are classified as cat images. So, you need to change [[Objective vs. Cost vs. Loss vs. Error Function|cost function]] to penalizing this misclassification heavily. | Sometimes you may wish to change metric mid way. While building cat vs no cat image, may be in a "better" classfier, pornographic images are classified as cat images. So, you need to change [[Objective vs. Cost vs. Loss vs. Error Function|cost function]] to penalizing this misclassification heavily. | ||

== Human level performance == | == Human level performance == | ||

| − | For perception problems, human level performance is close to bayes' error. You should try to consider the best human level performance possible. Eg. in radiology an expert radiologist could be better than average radiologist and team of experts may better than a single expert. You should consider the way which gives lowest possible error. | + | For perception problems, human level performance is close to [[bayes]]' error. You should try to consider the best human level performance possible. Eg. in radiology an expert radiologist could be better than average radiologist and team of experts may better than a single expert. You should consider the way which gives lowest possible error. |

| − | Difference between 0 and human level performance is bayes' error | + | Difference between 0 and human level performance is [[bayes]]' error |

| − | Difference between human level performance and training error is avoidable bias | + | Difference between human level performance and training error is avoidable [[Bias and Variances|bias]] |

| − | Difference between training error and dev error is variance | + | Difference between training error and dev error is [[Bias and Variances|variance]] |

| − | Difference | + | Difference between dev error and test error is [[Overfitting Challenge|overfitting]] to dev set |

You should compute all these errors and that will help you decide how to improve your algorithm. | You should compute all these errors and that will help you decide how to improve your algorithm. | ||

Tasks where machines can outperform humans: online ads, loan approvals, product recommendations, logistics. (Structured data, not natural perception problems) | Tasks where machines can outperform humans: online ads, loan approvals, product recommendations, logistics. (Structured data, not natural perception problems) | ||

| − | Also, in some | + | Also, in some [[Speech Recognition]], image recognition and radiology tasks, computers surpass single human performance. |

== Error Analysis == | == Error Analysis == | ||

| − | When training error is not good enough, you manually examine mispredictions. You should examine a subset of mispredictions and examine manually the reason for errors. Is it that dogs are being mislabeled as cats? Or is it that lion/cheetah are | + | When training error is not good enough, you manually examine mispredictions. You should examine a subset of mispredictions and examine manually the reason for errors. Is it that dogs are being mislabeled as cats? Or is it that lion/cheetah are mislabeled as cats? Or is it that blurry images are mislabeled as cats? Figure out prominent reason and try to solve that. If lots of dogs are being mislabeled as cats, make sense to put more dog images in training set. |

| − | Sometimes data could have | + | Sometimes data could have mislabeled examples. Some mislabels in training set are okay, because NN algos are robust to that, as long as errors are random. In dev/test you should first estimate how much boost you would get by correcting the labels, and then correct the labels if you find that will give you a boost. If you fix dev set, fix test set too. You should ideally fix the examples that your algo got right because of misprediction. But it is not easy for accurate algos, as there would be large number of items to examine. |

== Build first, then iterate == | == Build first, then iterate == | ||

| − | You understand data and challenges only when you iterate. Build first system quickly and use [[ | + | You understand data and challenges only when you iterate. Build first system quickly and use [[Bias and Variances|bias/variance]] analysis to prioritize next steps. |

== Mismatched training and dev/test set == | == Mismatched training and dev/test set == | ||

DL algos are data hungry. Teams want to shove in as much as data as they can get hold of. For example, you can get images from internet, or you can purchase data. You can use data from various sources to train, but dev/test set data should only contain the examples which are representative of your use case. | DL algos are data hungry. Teams want to shove in as much as data as they can get hold of. For example, you can get images from internet, or you can purchase data. You can use data from various sources to train, but dev/test set data should only contain the examples which are representative of your use case. | ||

| − | When your training and dev set are from different distributions, training error and dev error difference may not reflect [[ | + | When your training and dev set are from different distributions, training error and dev error difference may not reflect [[Bias and Variances|variance]. It may just be that training test is easy. To catch this difference, you can have training dev set carved out of training set. Now: |

Difference between training and training dev is the variance. | Difference between training and training dev is the variance. | ||

Difference between training dev and dev is a measure of mismatch between training and test data. | Difference between training dev and dev is a measure of mismatch between training and test data. | ||

| − | What if you have data mismatch problem? Perform manual inspection. May be lot of dev/test are noisy (in a | + | What if you have data mismatch problem? Perform manual inspection. May be lot of dev/test are noisy (in a [[Speech Recognition]] system). In that case you can add noise in training set. But be careful: if you have 10K hour worth of training data, you should add 10K hour worth of noise too. If you just repeat 1 hour worth of noise, you will [[Overfitting Challenge|overfit]]. Note that to human ear all noise will appear the same, but machine will [[Overfitting Challenge|overfit]]. Similarly for computer vision, you can synthesize images with background cars etc. |

| + | {|<!-- T --> | ||

| + | | valign="top" | | ||

| + | {| class="wikitable" style="width: 550px;" | ||

| + | || | ||

<youtube>UEtvV1D6B3s</youtube> | <youtube>UEtvV1D6B3s</youtube> | ||

| + | <b>Orthogonalization (C3W1L02 ) | ||

| + | </b><br>[[Creatives#Andrew Ng|Andrew Ng]] Take the Deep Learning Specialization: https://bit.ly/2IohyTa Check out all our courses: https://www.deeplearning.ai | ||

| + | |} | ||

| + | |}<!-- B --> | ||

Latest revision as of 22:02, 5 March 2024

YouTube search... ...Google search

- AI Solver ... Algorithms ... Administration ... Model Search ... Discriminative vs. Generative ... Train, Validate, and Test

- Strategy & Tactics ... Project Management ... Best Practices ... Checklists ... Project Check-in ... Evaluation ... Measures

- AI Verification and Validation

- Objective vs. Cost vs. Loss vs. Error Function

- Overfitting Challenge

- Data Science essentials: Why train-validation-test data? | Sagar Patel - Medium

- About Train, Validation and Test Sets in Machine Learning | Tarang Shah - Towards Data Science

- What is the Difference Between Test and Validation Datasets? | Jason Brownlee - Machine Learning Mastery

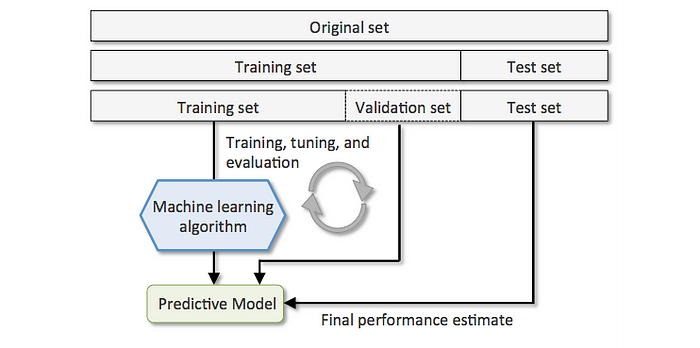

- Training Dataset: The sample of data used to fit the model.

- Validation Dataset: The sample of data used to provide an unbiased evaluation of a model fit on the training dataset while tuning model hyperparameters. The evaluation becomes more biased as skill on the validation dataset is incorporated into the model configuration.

- Test Dataset: The sample of data used to provide an unbiased evaluation of a final model fit on the training dataset.

- CalypsoAI Validating vision ML models via tests: brightness, contrast, saturation, pixilation, compression, blurring, random noise

- Landing AI ...LandingLens platform includes a wide array of features to help teams develop and deploy reliable and repeatable inspection systems

|

|

If you have an training accuracy of 90%, and you are not happy with that, what do you do? Collect more data? Try other algorithms? Collect more diverse data? How to decide what to do?

CONTENT FROM:

Structuring Machine Learning Projects; Course 3 | Andrew Ng's Deep Learning Series

Chain of assumptions in Machine Learning (ML)

- Fit training set well on cost function If this is not happening, try bigger network, or different optimization algorithm. You should achieve human level performance here.

- Fit dev set well on cost function If this is not happening, it means you are overfitting training set. Try Regularization, or train on a bigger training set. Or a different neural network (NN) architecture.

- Fit test set well on cost function If fit on test set is much worse than fit on dev set, it means you have overfit the dev set. You should get a bigger dev set. Or try a different neural network (NN) architecture.

- Perform well in the real world If performance on dev/test set is good, but performance in real world is bad, check if cost function is really what you care about.

Andrew Ng does not like early stopping because it affects fit on both training and dev sets, which leads to confusion.

Single real number evaluation metric

Have a single real number metric to compare various algorithms.

You may combined precision and recall (say by using harmonic mean of the two).

Some metrics could be 'satisficing', e.g. running time of classification should be within a threshold. Others would be optimizing.

Train/dev/test set distributions

Dev and test set should come from the same distribution and should reflect the data you want to do well on.

When data was less abundant, 60/20/20 would be good training/dev/test was good split. In data abundant neural net scenario, 98/1/1 is good distribution. Test set should be just good enough to give high confidence in overall performance of system. Some people just omit test set too.

Sometimes you may wish to change metric mid way. While building cat vs no cat image, may be in a "better" classfier, pornographic images are classified as cat images. So, you need to change cost function to penalizing this misclassification heavily.

Human level performance

For perception problems, human level performance is close to bayes' error. You should try to consider the best human level performance possible. Eg. in radiology an expert radiologist could be better than average radiologist and team of experts may better than a single expert. You should consider the way which gives lowest possible error.

Difference between 0 and human level performance is bayes' error Difference between human level performance and training error is avoidable bias Difference between training error and dev error is variance Difference between dev error and test error is overfitting to dev set You should compute all these errors and that will help you decide how to improve your algorithm.

Tasks where machines can outperform humans: online ads, loan approvals, product recommendations, logistics. (Structured data, not natural perception problems)

Also, in some Speech Recognition, image recognition and radiology tasks, computers surpass single human performance.

Error Analysis

When training error is not good enough, you manually examine mispredictions. You should examine a subset of mispredictions and examine manually the reason for errors. Is it that dogs are being mislabeled as cats? Or is it that lion/cheetah are mislabeled as cats? Or is it that blurry images are mislabeled as cats? Figure out prominent reason and try to solve that. If lots of dogs are being mislabeled as cats, make sense to put more dog images in training set.

Sometimes data could have mislabeled examples. Some mislabels in training set are okay, because NN algos are robust to that, as long as errors are random. In dev/test you should first estimate how much boost you would get by correcting the labels, and then correct the labels if you find that will give you a boost. If you fix dev set, fix test set too. You should ideally fix the examples that your algo got right because of misprediction. But it is not easy for accurate algos, as there would be large number of items to examine.

Build first, then iterate

You understand data and challenges only when you iterate. Build first system quickly and use bias/variance analysis to prioritize next steps.

Mismatched training and dev/test set

DL algos are data hungry. Teams want to shove in as much as data as they can get hold of. For example, you can get images from internet, or you can purchase data. You can use data from various sources to train, but dev/test set data should only contain the examples which are representative of your use case.

When your training and dev set are from different distributions, training error and dev error difference may not reflect [[Bias and Variances|variance]. It may just be that training test is easy. To catch this difference, you can have training dev set carved out of training set. Now:

Difference between training and training dev is the variance. Difference between training dev and dev is a measure of mismatch between training and test data. What if you have data mismatch problem? Perform manual inspection. May be lot of dev/test are noisy (in a Speech Recognition system). In that case you can add noise in training set. But be careful: if you have 10K hour worth of training data, you should add 10K hour worth of noise too. If you just repeat 1 hour worth of noise, you will overfit. Note that to human ear all noise will appear the same, but machine will overfit. Similarly for computer vision, you can synthesize images with background cars etc.

|