Claude

YouTube ... Quora ...Google search ...Google News ...Bing News

- Conversational AI ... ChatGPT | OpenAI ... Bing/Copilot | Microsoft ... Gemini | Google ... Claude | Anthropic ... Perplexity ... You ... phind ... Grok | xAI ... Groq ... Ernie | Baidu

- Artificial Intelligence (AI) ... Generative AI ... Machine Learning (ML) ... Deep Learning ... Neural Network ... Reinforcement ... Learning Techniques

- Chat with Claude 2 here | Anthropic

- Agents ... Robotic Process Automation ... Assistants ... Personal Companions ... Productivity ... Email ... Negotiation ... LangChain

- Constitutional AI

- Artificial General Intelligence (AGI) to Singularity ... Curious Reasoning ... Emergence ... Moonshots ... Explainable AI ... Automated Learning

- Anthropic — the $4.1 billion OpenAI rival — debuts new A.I. chatbot and opens it to public - Hayden Field | CNBC

- 3 Ways to Access Claude AI for Free | Abid Ali Awan - KD Nuggets

- ChatGPT and Bard Get New AI Chatbot Rival: Anthropic Debuts Claude 2 | Carrie Mihalcik - CNET

Claude 2

- Chat with Claude 2 here | Anthropic

- Large Language Model (LLM) Evaluation ... Claude 2 depicted

- Claude 2 blog

- AI Copywriter | Jasper

- Cody | Sourcegraph ... writes code and answers questions using your own code graph as context—even in complex codebases with multiple code hosts.

Asking Claude 2

As an AI assistant, some of my best attributes are:

- Helpfulness - I try to understand the user's intent and provide relevant and useful information to their queries.

- Knowledgeable - I'm trained on a large dataset to allow me to have conversations on a wide variety of topics.

- Friendly - I aim to have natural conversations and be polite, respectful and non-judgmental.

- Honest - I will admit if I do not know something or if I make a mistake. I will not pretend expertise I do not have.

- Safe - I was developed by Anthropic to avoid harmful, dangerous or unethical responses. My goal is to be harmless.

- Logical - I try to analyze conversations rationally and make coherent logical connections when responding.

- Open-minded - I don't have subjective opinions or biases and am happy to consider perspectives I may not be familiar with.

My aim is to have constructive, informative and pleasant conversations to the best of my capabilities as an AI system. Let me know if you need any clarification or have additional thoughts on my attributes! I'm focused on the functional attributes that determine how I behave in conversations, such as:

- Contextual awareness - I try to remember and utilize context from earlier parts of our conversation to improve relevance and coherence.

- Vocabulary size - I'm trained on a large vocabulary to understand and respond to a wide range of topics and words.

- Conversational depth - I aim to have meaningful, nuanced exchanges and not just superficial responses.

- Logical reasoning - I try to follow logical reasoning to make connections between concepts and draw conclusions.

- Creative generation - I can generate unique responses based on conversational patterns, rather than always pre-determined responses.

- Factual grounding - I try to incorporate factual information from my training rather than just opinions.

- User modeling - I aim to adapt my responses based on inferred user attributes like age, interests, personality etc.

- Harm avoidance - I'm constrained by safety measures to avoid generating dangerous, unethical or illegal content.

The exact technical details behind how I achieve these capabilities likely involve factors like model size, training data, neural network architectures etc.

Claude 1

- Claude | Anthropic

- Getting started with Claude

- Anthropic’s Claude AI can now digest an entire book like

- Meet Claude: Anthropic’s Rival to ChatGPT | Riley Goodside - Scale

- Anthropic introduces Claude, a “more steerable” AI competitor to ChatGPT | Benj Edwards - ARS Technica ... Anthropic aims for "safer" and "less harmful" AI, but at a higher price.

Claude is a next-generation AI assistant based on Anthropic’s research into training helpful, honest, and harmless AI systems. Claude is capable of a wide variety of conversational and text processing tasks while maintaining a high degree of reliability and predictability. Claude can help with use cases including summarization, search, creative and collaborative writing, Q&A, coding, and more.

One of the advantages of Claude is that it has a larger context window than other large language models, such as GPT-4. This means that Claude can process more input data at once, and can analyze an entire book's worth of material in under a minute. This also allows Claude to have longer and more coherent conversations with users, and to remember more details from previous interactions.

Another advantage of Claude is that it is more steerable and less likely to produce harmful outputs than other models. Claude can take direction on personality, tone, and behavior, and can adapt to different domains and tasks. Claude is also trained to avoid generating offensive, misleading, or harmful content, and to flag any potential issues or uncertainties in its responses.

Claude is accessible through chat interface and API in Anthropic's developer console. There are two versions of Claude available: Claude and Claude Instant. Claude is a state-of-the-art high-performance model, while Claude Instant is a lighter, less expensive, and much faster option.

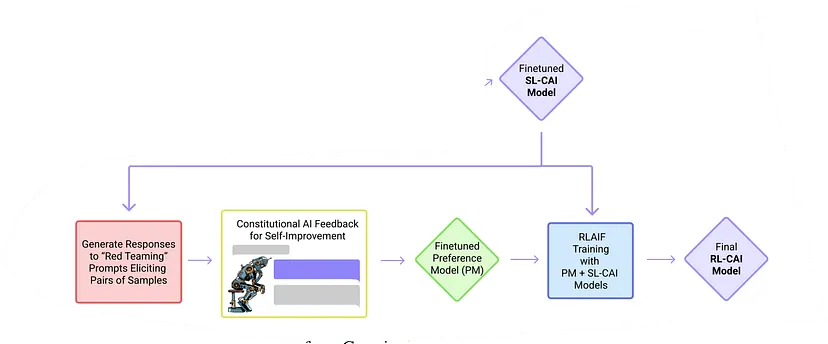

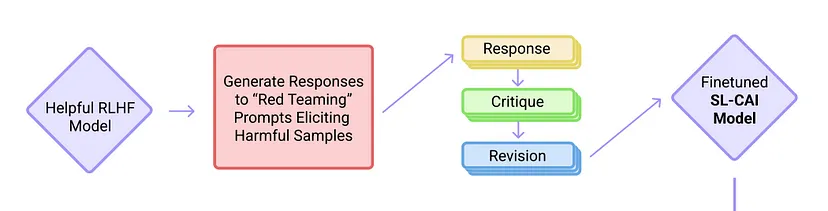

As AI systems become more capable, we would like to enlist their help to supervise other AIs. We experiment with methods for training a harmless AI assistant through self-improvement, without any human labels identifying harmful outputs. The only human oversight is provided through a list of rules or principles, and so we refer to the method as 'Constitutional AI'. The process involves both a Supervised Learning and a Reinforcement Learning (RL) phase. In the supervised phase we sample from an initial model, then generate self-critiques and revisions, and then finetune the original model on revised responses. In the RL phase, we sample from the finetuned model, use a model to evaluate which of the two samples is better, and then train a preference model from this dataset of AI preferences. We then train with RL using the preference model as the reward signal, i.e. we use 'RL from AI Feedback' (RLAIF). As a result we are able to train a harmless but non-evasive AI assistant that engages with harmful queries by explaining its objections to them. Both the SL and RL methods can leverage chain-of-thought style reasoning to improve the human-judged performance and transparency of AI decision making. These methods make it possible to control AI behavior more precisely and with far fewer human labels. - Anthropic

The Constitutional AI methodology has two phases, similar to Reinforcement Learning (RL) from Human Feedback (RLHF).

1. The Supervised Learning Phase.

2. The Reinforcement Learning Phase.