Ridge Regression

m |

|||

| (12 intermediate revisions by the same user not shown) | |||

| Line 2: | Line 2: | ||

|title=PRIMO.ai | |title=PRIMO.ai | ||

|titlemode=append | |titlemode=append | ||

| − | |keywords=artificial, intelligence, machine, learning, models | + | |keywords=ChatGPT, artificial, intelligence, machine, learning, GPT-4, GPT-5, NLP, NLG, NLC, NLU, models, data, singularity, moonshot, Sentience, AGI, Emergence, Moonshot, Explainable, TensorFlow, Google, Nvidia, Microsoft, Azure, Amazon, AWS, Hugging Face, OpenAI, Tensorflow, OpenAI, Google, Nvidia, Microsoft, Azure, Amazon, AWS, Meta, LLM, metaverse, assistants, agents, digital twin, IoT, Transhumanism, Immersive Reality, Generative AI, Conversational AI, Perplexity, Bing, You, Bard, Ernie, prompt Engineering LangChain, Video/Image, Vision, End-to-End Speech, Synthesize Speech, Speech Recognition, Stanford, MIT |description=Helpful resources for your journey with artificial intelligence; videos, articles, techniques, courses, profiles, and tools |

| − | |description=Helpful resources for your journey with artificial intelligence; videos, articles, techniques, courses, profiles, and tools | + | |

| + | <!-- Google tag (gtag.js) --> | ||

| + | <script async src="https://www.googletagmanager.com/gtag/js?id=G-4GCWLBVJ7T"></script> | ||

| + | <script> | ||

| + | window.dataLayer = window.dataLayer || []; | ||

| + | function gtag(){dataLayer.push(arguments);} | ||

| + | gtag('js', new Date()); | ||

| + | |||

| + | gtag('config', 'G-4GCWLBVJ7T'); | ||

| + | </script> | ||

}} | }} | ||

[http://www.youtube.com/results?search_query=Ridge+Regression+artificial+intelligence YouTube search...] | [http://www.youtube.com/results?search_query=Ridge+Regression+artificial+intelligence YouTube search...] | ||

[http://www.google.com/search?q=Ridge+Regression+machine+learning+ML ...Google search] | [http://www.google.com/search?q=Ridge+Regression+machine+learning+ML ...Google search] | ||

| − | * [[AI Solver]] | + | * [[AI Solver]] ... [[Algorithms]] ... [[Algorithm Administration|Administration]] ... [[Model Search]] ... [[Discriminative vs. Generative]] ... [[Train, Validate, and Test]] |

** [[...predict values]] | ** [[...predict values]] | ||

| − | * [[ | + | * [[Regression]] Analysis |

| − | |||

* [[Regularization]] | * [[Regularization]] | ||

| − | * [[ | + | ** [[Lasso Regression]] |

| + | *** [http://towardsdatascience.com/ridge-and-lasso-regression-a-complete-guide-with-python-scikit-learn-e20e34bcbf0b Ridge and Lasso Regression: A Complete Guide with Python Scikit-Learn | Saptashwa - Towards Data Science] | ||

| + | ** [[Elastic Net Regression]] | ||

| + | * [[Math for Intelligence]] ... [[Finding Paul Revere]] ... [[Social Network Analysis (SNA)]] ... [[Dot Product]] ... [[Kernel Trick]] | ||

| + | * [[Overfitting Challenge]] | ||

| + | * [[Boosting]] | ||

| + | * [http://www.analyticsvidhya.com/blog/2015/08/comprehensive-guide-regression/ 7 Types of Regression Techniques you should know! | Sunil Ray] | ||

| + | |||

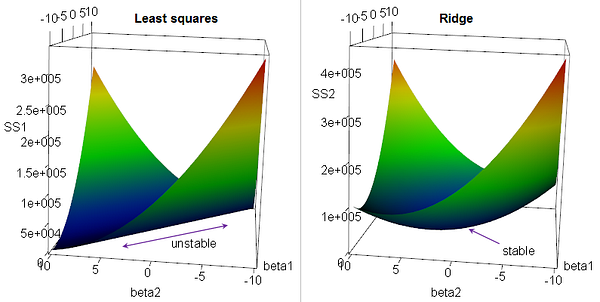

or Tikhonov Regularization, is the most commonly used regression algorithm to approximate an answer for an equation with no unique solution. This type of problem is very common in machine learning tasks, where the "best" solution must be chosen using limited data. Simply, [Regularization]] introduces additional information to an problem to choose the "best" solution for it. This algorithm is used for analyzing multiple regression data that suffer from multicollinearity. Multicollinearity, or collinearity, is the existence of near-linear relationships among the independent variables. When multicollinearity occurs, least squares estimates are unbiased, but their variances are large so they may be far from the true value. By adding a degree of bias to the regression estimates, ridge regression reduces the standard errors. | or Tikhonov Regularization, is the most commonly used regression algorithm to approximate an answer for an equation with no unique solution. This type of problem is very common in machine learning tasks, where the "best" solution must be chosen using limited data. Simply, [Regularization]] introduces additional information to an problem to choose the "best" solution for it. This algorithm is used for analyzing multiple regression data that suffer from multicollinearity. Multicollinearity, or collinearity, is the existence of near-linear relationships among the independent variables. When multicollinearity occurs, least squares estimates are unbiased, but their variances are large so they may be far from the true value. By adding a degree of bias to the regression estimates, ridge regression reduces the standard errors. | ||

Latest revision as of 21:58, 5 March 2024

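[YouTube search...](http://www.youtube.com/results?search_query=Ridge+Regression+artificial+intelligence) [...Google search](http://www.google.com/search?q=Ridge+Regression+machine+learning+ML)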

- AI Solver ... Algorithms ... Administration ... Model Search ... Discriminative vs. Generative ... Train, Validate, and Test

- Regression Analysis

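- Regularization
  - Lasso Regression
    - Ridge and Lasso Regression: A Complete Guide with Python Scikit-Learn | Saptashwa - Towards Data Science
  - Elastic Net Regression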

- Math for Intelligence ... Finding Paul Revere ... Social Network Analysis (SNA) ... Dot Product ... Kernel Trick

- Overfitting Challenge

- Boosting

- 7 Types of Regression Techniques you should know! | Sunil Ray

Ridge Regression, or Tikhonov Regularization, is one of the most widely used methods for approximating a solution to an equation that has no unique solution. Such problems are very common in machine learning, where the "best" solution must be chosen using limited data. Put simply, Regularization introduces additional information into a problem so that a single "best" solution can be chosen. Ridge regression does this by penalizing the squared magnitude (L2 norm) of the coefficients. It is used to analyze multiple regression data that suffer from multicollinearity (also called collinearity), which is the existence of near-linear relationships among the independent variables. When multicollinearity occurs, least squares estimates are unbiased, but their variances are large, so they may be far from the true values. By adding a degree of bias to the regression estimates, ridge regression reduces their standard errors.
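The bias-for-variance trade described above can be illustrated with a minimal NumPy sketch. It assumes centered data and no intercept, and uses the closed-form ridge estimate $\hat\beta = (X^\top X + \lambda I)^{-1} X^\top y$, which minimizes $\lVert y - X\beta\rVert^2 + \lambda\lVert\beta\rVert^2$. The helper names `ridge_fit` and `coefficient_spread`, the penalty values, and the simulated data are illustrative assumptions and do not come from the source. The sketch repeatedly simulates two nearly collinear predictors and compares how much the fitted coefficients vary with λ = 0 (ordinary least squares) against λ = 1. It also shows that for any λ > 0, the method returns a unique answer even when one column exactly duplicates another.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Closed-form ridge estimate: solve (X^T X + lam * I) beta = X^T y.

    Assumes X and y are already centered, so no intercept is fitted.
    With lam = 0 this reduces to ordinary least squares.
    """
    n_features = X.shape[1]
    A = X.T @ X + lam * np.eye(n_features)
    return np.linalg.solve(A, X.T @ y)

def coefficient_spread(lam, n_trials=200, n_samples=50, seed=0):
    """Refit on many simulated datasets (hypothetical setup) with two nearly
    collinear predictors; return the std. dev. of each fitted coefficient."""
    rng = np.random.default_rng(seed)
    true_beta = np.array([1.0, 1.0])
    estimates = []
    for _ in range(n_trials):
        x1 = rng.normal(size=n_samples)
        # x2 is almost a copy of x1: a near-linear relationship (multicollinearity)
        x2 = x1 + rng.normal(scale=0.01, size=n_samples)
        X = np.column_stack([x1, x2])
        y = X @ true_beta + rng.normal(size=n_samples)
        # Center so the no-intercept closed form applies
        X = X - X.mean(axis=0)
        y = y - y.mean()
        estimates.append(ridge_fit(X, y, lam))
    return np.std(estimates, axis=0)

ols_spread = coefficient_spread(lam=0.0)    # ordinary least squares
ridge_spread = coefficient_spread(lam=1.0)  # ridge with an illustrative penalty
print("OLS coefficient std:  ", ols_spread)
print("Ridge coefficient std:", ridge_spread)

# Exactly duplicated column: X^T X is singular (no unique OLS solution),
# but X^T X + lam * I is invertible for any lam > 0.
x = np.array([-1.5, -0.5, 0.5, 1.5])        # already centered
X_dup = np.column_stack([x, x])
dup_beta = ridge_fit(X_dup, x, lam=1.0)
print("Ridge on duplicated column:", dup_beta)  # both coefficients equal 5/11
```

In the simulation, the ridge coefficients should vary far less across datasets than the least-squares ones. The price is slight shrinkage toward zero, which is the "degree of bias" mentioned above. In practice, λ would usually be chosen by cross-validation rather than fixed at 1.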