Autoencoder (AE) / Encoder-Decoder



| + | [https://www.youtube.com/results?search_query=deep+learning+encoder+decoder+autoencoder YouTube search...] | ||

| + | [https://www.google.com/search?q=encoder+decoder+autoencoder+deep+machine+learning+ML ...Google search] | ||

| + | |||

| + | * [https://www.asimovinstitute.org/author/fjodorvanveen/ Neural Network Zoo | Fjodor Van Veen] | ||

| + | * [[Sequence to Sequence (Seq2Seq)]] | ||

| + | * [[Attention]] Mechanism ...[[Transformer]] ...[[Generative Pre-trained Transformer (GPT)]] ... [[Generative Adversarial Network (GAN)|GAN]] ... [[Bidirectional Encoder Representations from Transformers (BERT)|BERT]] | ||

| + | ** [[Transformer-XL]] | ||

| + | ** [[Large Language Model (LLM)]] ... [[Large Language Model (LLM)#Multimodal|Multimodal]] ... [[Foundation Models (FM)]] ... [[Generative Pre-trained Transformer (GPT)|Generative Pre-trained]] ... [[Transformer]] ... [[Attention]] ... [[Generative Adversarial Network (GAN)|GAN]] ... [[Bidirectional Encoder Representations from Transformers (BERT)|BERT]] | ||

| + | * [[Natural Language Processing (NLP)]] ...[[Natural Language Generation (NLG)|Generation]] ... [[Natural Language Classification (NLC)|Classification]] ... [[Natural Language Processing (NLP)#Natural Language Understanding (NLU)|Understanding]] ... [[Language Translation|Translation]] ... [[Natural Language Tools & Services|Tools & Services]] | ||

| + | * [[Variational Autoencoder (VAE)]] | ||

| + | * [[Autoregressive]] | ||

| + | * [[Autocorrelation]] | ||

| + | * [[What is Artificial Intelligence (AI)? | Artificial Intelligence (AI)]] ... [[Generative AI]] ... [[Machine Learning (ML)]] ... [[Deep Learning]] ... [[Neural Network]] ... [[Reinforcement Learning (RL)|Reinforcement]] ... [[Learning Techniques]] | ||

| + | * [[Conversational AI]] ... [[ChatGPT]] | [[OpenAI]] ... [[Bing/Copilot]] | [[Microsoft]] ... [[Gemini]] | [[Google]] ... [[Claude]] | [[Anthropic]] ... [[Perplexity]] ... [[You]] ... [[phind]] ... [[Ernie]] | [[Baidu]] | ||

| + | * [https://betanews.com/2019/04/13/autoencoder/ In praise of the autoencoder | Rosaria Silipo] | ||

| + | * [[End-to-End Speech]] | ||

| + | * [https://www.cs.toronto.edu/~fritz/absps/transauto6.pdf Transforming Auto-encoders | G. E. Hinton, A. Krizhevsky & S. D. Wang - University of Toronto] | ||

| + | * [https://towardsdatascience.com/understanding-encoder-decoder-sequence-to-sequence-model-679e04af4346 Understanding Encoder-Decoder Sequence to Sequence Model | Simeon Kostadinov - Towards Data Science] | ||

| + | * [https://towardsdatascience.com/nlp-sequence-to-sequence-networks-part-2-seq2seq-model-encoderdecoder-model-6c22e29fd7e1? NLP - Sequence to Sequence Networks - Part 2 - Seq2seq Model (EncoderDecoder Model) | Mohammed Ma'amari - Towards Data Science] | ||

| + | * [[Self-Supervised]] Learning | ||

| + | * [https://pathmind.com/wiki/deep-autoencoder Deep Autoencoders | Chris Nicholson - A.I. Wiki pathmind] | ||

| + | * [https://pathmind.com/wiki/denoising-autoencoder Denoising Autoencoders | Chris Nicholson - A.I. Wiki pathmind] | ||

| + | |||

| + | <b>Convert words to numbers, then convert numbers to words</b> | ||

| + | |||

| + | Autoencoders (AE) (or auto-associator, as it was classically known as) are somewhat similar to [[Feed Forward Neural Network (FF or FFNN)]] as AEs are more like a different use of FFNNs than a fundamentally different architecture. The basic idea behind autoencoders is to encode information (as in compress, not encrypt) automatically, hence the name. The entire network always resembles an hourglass like shape, with smaller hidden layers than the input and output layers. AEs are also always symmetrical around the middle layer(s) (one or two depending on an even or odd amount of layers). The smallest layer(s) is|are almost always in the middle, the place where the information is most compressed (the chokepoint of the network). Everything up to the middle is called the [[Data Quality#Data Encoding|encoding]] part, everything after the middle the decoding and the middle (surprise) the code. One can train them using backpropagation by feeding input and setting the error to be the difference between the input and what came out. AEs can be built symmetrically when it comes to weights as well, so the [[Data Quality#Data Encoding|encoding]] weights are the same as the decoding weights. Bourlard, Hervé, and Yves Kamp. “Auto-association by multilayer perceptrons and singular value decomposition.” Biological cybernetics 59.4-5 (1988): 291-294. | ||

| + | |||

| + | A general example of self-supervised learning algorithms are autoencoders. These are a type of neural network that is used to create a compact or compressed representation of an input sample. They achieve this via a model that has an encoder and a decoder element separated by a bottleneck that represents the internal compact representation of the input. These autoencoder models are trained by providing the input to the model as both input and the target output, requiring that the model reproduce the input by first [[Data Quality#Data Encoding|encoding]] it to a compressed representation then decoding it back to the original. Once trained, the decoder is discarded and the encoder is used as needed to create compact representations of input. Although autoencoders are trained using a supervised learning method, they solve an unsupervised learning problem, namely, they are a type of [[Dimensional Reduction#Projection |Projection]] method for reducing the dimensionality of input data. [https://machinelearningmastery.com/types-of-learning-in-machine-learning/ 14 Different Types of Learning in Machine Learning | Jason Brownlee - Machine Learning Mastery] | ||

| + | |||

| + | Autoencoders are useful for some things, but turned out not to be nearly as necessary as we once thought. Around 10 years ago, we thought that deep nets would not learn correctly if trained with only backprop of the supervised cost. We thought that deep nets would also need an unsupervised cost, like the autoencoder cost, to regularize them. When Google Brain built their first very large neural network to recognize objects in images, it was an autoencoder (and it didn’t work very well at recognizing objects compared to later approaches). Today, we know we are able to recognize images just by using backprop on the supervised cost as long as there is enough labeled data. There are other tasks where we do still use autoencoders, but they’re not the fundamental solution to training deep nets that people once thought they were going to be. PS. just to be clear, I’m not endorsing the view that “autoencoders are a failure.” I’m explaining why autoencoders are not as prominent a part of the deep learning landscape as they were in 2006–2012. Autoencoders are successful at some things, just not as many as they were expected to be. [https://en.wikipedia.org/wiki/Ian_Goodfellow Ian Goodfellow - Wikipedia] | ||

| + | |||

| + | <img src="https://www.asimovinstitute.org/wp-content/uploads/2016/09/ae.png" width="200" height="200"> | ||

| + | |||

| + | <img src="https://miro.medium.com/max/799/0*BpmYIit1tmLKlpDm.png" width="700" height="300"> | ||

| + | |||

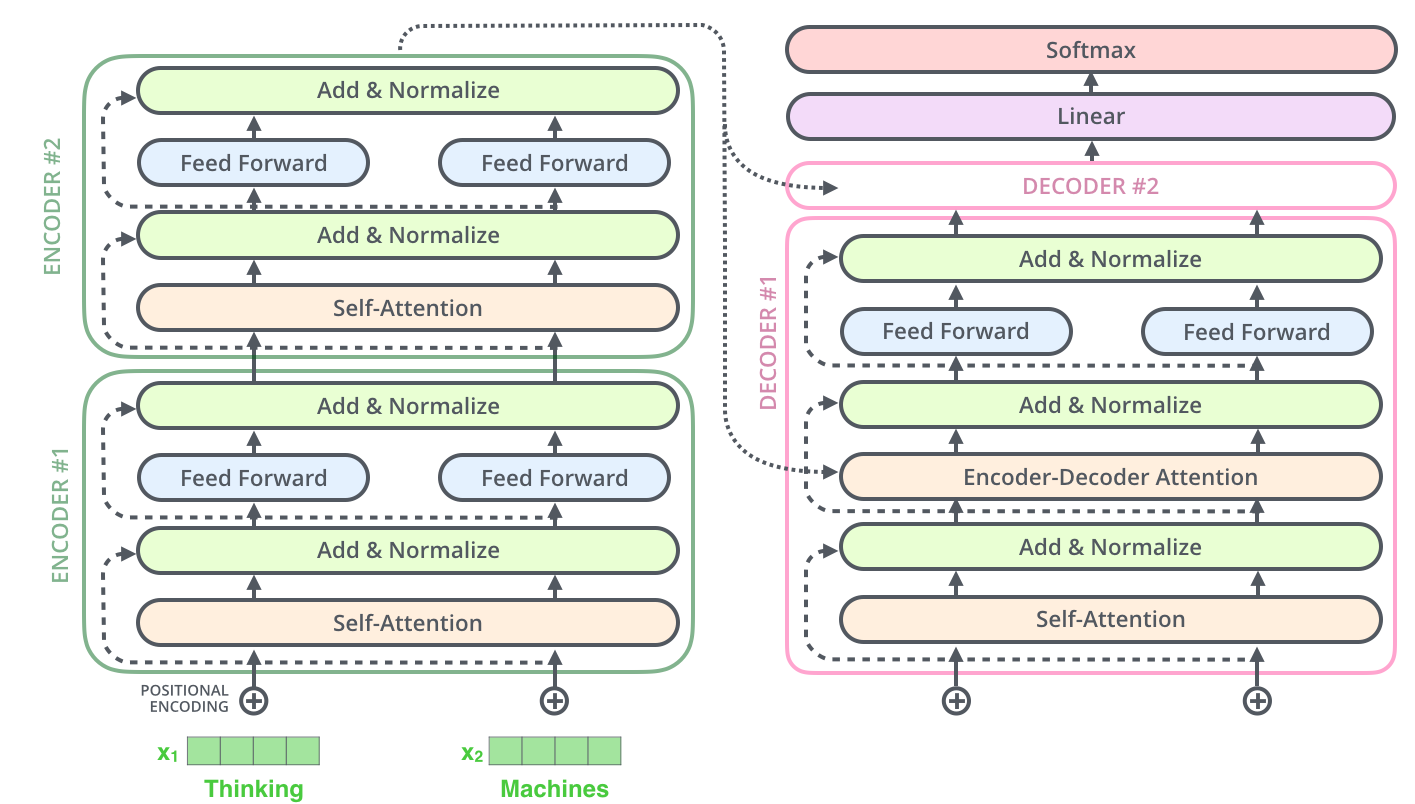

| + | <img src="https://jalammar.github.io/images/t/transformer_resideual_layer_norm_3.png" width="800" height="500"> | ||

| + | |||

| − | |||

| − | |||

<youtube>s96mYcicbpE</youtube> | <youtube>s96mYcicbpE</youtube> | ||

<youtube>GWn7vD2Ud3M</youtube> | <youtube>GWn7vD2Ud3M</youtube> | ||

<youtube>ar4Fm1V65Fw</youtube> | <youtube>ar4Fm1V65Fw</youtube> | ||

| − | <youtube> | + | <youtube>Rdpbnd0pCiI</youtube> |

| + | <youtube>FsAvo0E5Pmw</youtube> | ||

| + | <youtube>D-96CM4chHc</youtube> | ||

| + | <youtube>7XchCsYtYMQ</youtube> | ||

| + | <youtube>6KHSPiYlZ-U</youtube> | ||

| + | <youtube>H1AllrJ-_30</youtube> | ||

| + | |||

| + | == Is there a difference between autoencoders and encoder-decoder == | ||

| + | [https://www.quora.com/Is-there-a-difference-between-autoencoders-and-encoder-decoder-in-deep-learning Provided by Alexander Ororbia] | ||

| + | |||

| + | Here is how I would view these two terms (informally). Think of the encoder-decoder as a very general framework/architecture design. In this design, you have some function that maps an input space, whatever it may be, to a different/[[latent]] space (the “encoder”). The decoder is simply the complementary function that creates a map from the (encoder’s) [[latent]] space to another target space (what is it we want to decode from the [[latent]] space). Note by simply mapping spaces, and linking them through a shared [[latent]] space, you could do something like map a sequence of tokens in English (i.e., an English sentence) to a sequence of tokens in French (i.e., the translation of that English sentence to French). In some neural translation models, you map an English sequence to a fixed vector (say the last state, found upon reaching a punctuation mark, of the recurrent network you use to process the sentence iteratively), from which you will decode to a French sequence. | ||

| + | |||

| + | An autoencoder (or auto-associator, as it was classically known as) is a special case of an encoder-decoder architecture — first, the target space is the same as the input space (i.e., English inputs to English targets) and second, the target is to be equal to the input. So we would be mapping something like vectors to vectors (note that this could still be a sequence, as they are recurrent autoencoders, but you are now in this case, not predicting the future but simply reconstructing the present given a state/[[memory]] and the present). Now, an autoencoder is really meant to do auto-association, so we are essentially trying to build a model to “recall” the input, which allows the autoencoder to do things like pattern completion so if we give our autoencoder a partially corrupted input, it would be able to “retrieve” the correct pattern from [[memory]]. | ||

| + | |||

| + | Also, generally, we build autoencoders because we are more interested in getting a representation rather than learning a predictive model (though one could argue we get pretty useful representations from predictive models as well…). | ||

| + | |||

| + | But the short story is simple: an autoencoder is really a special instance of an encoder-decoder. This is especially useful when we want to decouple the encoder and decoder to create something like a [[Variational Autoencoder (VAE)]], which also frees us from having to make the decoder symmetrical in design to the encoder (i.e., the encoder could be a 2-layer convolutional network while the decoder could be a 3-layer deconvolutional network). In a variational autoencoder, the idea of [[latent]] space becomes more clear, because now we truly map the input (such as an image or document vector) to a [[latent]] variable, from which we will reconstruct the original/same input (such as the image or document vector). | ||

| + | |||

| + | I also think a great deal of confusion comes from misuse of terminology. Nowadays, ML folk especially tend to mix and match words (some do so to make things sound cooler or find buzzwords that will attract readers/funders/fame/glory/etc.), but this might be partly due to the re-branding of artificial [[Neural Network]]s as “deep learning” ;-) [since, in the end, everyone wants the money to keep working] | ||

| + | |||

| + | == Masked Autoencoder == | ||

| + | * [[Bidirectional Encoder Representations from Transformers (BERT)]] | ||

| + | <youtube>lNW8T0W-xeE</youtube> | ||

Latest revision as of 09:23, 28 May 2025


- Neural Network Zoo | Fjodor Van Veen

- Sequence to Sequence (Seq2Seq)

- Attention Mechanism ... Transformer ... Generative Pre-trained Transformer (GPT) ... GAN ... BERT

- Transformer-XL

- Large Language Model (LLM) ... Multimodal ... Foundation Models (FM) ... Generative Pre-trained ... Transformer ... Attention ... GAN ... BERT

- Natural Language Processing (NLP) ... Generation ... Classification ... Understanding ... Translation ... Tools & Services

- Variational Autoencoder (VAE)

- Autoregressive

- Autocorrelation

- Artificial Intelligence (AI) ... Generative AI ... Machine Learning (ML) ... Deep Learning ... Neural Network ... Reinforcement ... Learning Techniques

- Conversational AI ... ChatGPT | OpenAI ... Bing/Copilot | Microsoft ... Gemini | Google ... Claude | Anthropic ... Perplexity ... You ... phind ... Ernie | Baidu

- In praise of the autoencoder | Rosaria Silipo

- End-to-End Speech

- Transforming Auto-encoders | G. E. Hinton, A. Krizhevsky & S. D. Wang - University of Toronto

- Understanding Encoder-Decoder Sequence to Sequence Model | Simeon Kostadinov - Towards Data Science

- NLP - Sequence to Sequence Networks - Part 2 - Seq2seq Model (EncoderDecoder Model) | Mohammed Ma'amari - Towards Data Science

- Self-Supervised Learning

- Deep Autoencoders | Chris Nicholson - A.I. Wiki pathmind

- Denoising Autoencoders | Chris Nicholson - A.I. Wiki pathmind

Convert words to numbers, then convert numbers to words

Autoencoders (AE), classically known as auto-associators, are similar to Feed Forward Neural Networks (FF or FFNN): an AE is less a fundamentally different architecture than a different use of an FFNN. The basic idea behind autoencoders is to encode information (as in compress, not encrypt) automatically, hence the name. The network typically resembles an hourglass, with hidden layers smaller than the input and output layers. Classic AEs are also symmetrical around the middle layer(s): one middle layer if the number of layers is odd, two if it is even. The smallest layer(s) almost always sit in the middle, where the information is most compressed (the chokepoint of the network). Everything up to the middle is called the encoding part, everything after the middle the decoding part, and the middle itself (surprise) the code. One can train them with backpropagation by feeding in an input and setting the error to the difference between that input and what came out (the reconstruction error). AEs can also be built symmetrically with respect to their weights ("tied weights"), so that each decoding weight matrix is the transpose of the corresponding encoding weight matrix. Bourlard, Hervé, and Yves Kamp. “Auto-association by multilayer perceptrons and singular value decomposition.” Biological Cybernetics 59.4-5 (1988): 291-294.
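A minimal sketch of the paragraph above, assuming a purely linear 4-2-4 autoencoder with tied weights, full-batch gradient descent, and a made-up toy dataset; the names `train`, `reconstruct`, and `loss` are hypothetical. It is plain Python rather than a deep learning framework so that the backpropagation step through the tied weights stays visible.

```python
import random

def matvec(W, v):
    # W v for a row-major matrix W
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def matvec_t(W, v):
    # W^T v: the tied decoder reuses the encoder matrix, transposed
    return [sum(W[i][j] * v[i] for i in range(len(W))) for j in range(len(W[0]))]

def reconstruct(W, x):
    h = matvec(W, x)          # encoder: input (4) -> code (2), the chokepoint
    return h, matvec_t(W, h)  # decoder: code (2) -> reconstruction (4)

def loss(W, data):
    # mean of 0.5 * squared reconstruction error
    total = 0.0
    for x in data:
        _, y = reconstruct(W, x)
        total += 0.5 * sum((yi - xi) ** 2 for yi, xi in zip(y, x))
    return total / len(data)

def train(data, code_size=2, lr=0.1, epochs=2000, seed=0):
    rng = random.Random(seed)
    n = len(data[0])
    W = [[rng.uniform(-0.1, 0.1) for _ in range(n)] for _ in range(code_size)]
    for _ in range(epochs):
        grad = [[0.0] * n for _ in range(code_size)]
        for x in data:
            h, y = reconstruct(W, x)
            r = [yi - xi for yi, xi in zip(y, x)]  # error = output - input
            Wr = matvec(W, r)                       # error backpropagated to the code
            for i in range(code_size):
                for j in range(n):
                    # tied weights receive gradient from both paths:
                    # decoder (h_i * r_j) and encoder ((W r)_i * x_j)
                    grad[i][j] += h[i] * r[j] + Wr[i] * x[j]
        for i in range(code_size):
            for j in range(n):
                W[i][j] -= lr * grad[i][j] / len(data)
    return W

# Toy 4-D data that actually lies in a 2-D subspace, so a 2-unit code suffices.
data = [[0.5, 0.5, 0.0, 0.0], [0.0, 0.0, 0.5, 0.5],
        [0.5, 0.5, 0.5, 0.5], [0.5, 0.5, -0.5, -0.5]]
W = train(data)
print(f"reconstruction loss after training: {loss(W, data):.6f}")
```

A linear autoencoder like this one connects to the SVD view in the Bourlard and Kamp paper cited above: its best possible solution projects inputs onto the subspace spanned by the top singular vectors of the data (up to a rotation of the code). Nonlinear activations and deeper stacks are what let practical AEs go beyond that.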

Autoencoders are a general example of self-supervised learning algorithms. They are a type of neural network used to create a compact or compressed representation of an input sample. They achieve this via a model with an encoder and a decoder element separated by a bottleneck that holds the internal compact representation of the input. Autoencoder models are trained by providing each input to the model as both the input and the target output, requiring the model to reproduce the input by first encoding it to a compressed representation and then decoding it back to the original. Once trained, the decoder is discarded and the encoder is used as needed to create compact representations of inputs. Although autoencoders are trained using a supervised learning method, they solve an unsupervised learning problem: they are a type of projection method for reducing the dimensionality of input data. 14 Different Types of Learning in Machine Learning | Jason Brownlee - Machine Learning Mastery
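To make the self-supervised framing concrete, here is a hedged sketch in which every sample serves as its own target and the decoder is used only to measure reconstruction error. The encoder weights are hypothetical, fixed by hand to an orthonormal 2 x 4 projection (a solution a well-trained linear 4-to-2 autoencoder can reach for this data, up to a rotation) instead of being learned, to keep the example short.

```python
import math
from typing import List, Tuple

Vector = List[float]

def training_pairs(samples: List[Vector]) -> List[Tuple[Vector, Vector]]:
    # Self-supervision: the target output is simply a copy of the input.
    return [(x, list(x)) for x in samples]

# Hypothetical encoder weights: an orthonormal 2 x 4 projection, fixed by hand
# to stand in for a trained linear 4 -> 2 encoder.
S = 1 / math.sqrt(2)
ENCODER = [[S, S, 0.0, 0.0],
           [0.0, 0.0, S, S]]

def encode(x: Vector) -> Vector:
    # 4-D input -> 2-D bottleneck code
    return [sum(w * xi for w, xi in zip(row, x)) for row in ENCODER]

def decode(h: Vector) -> Vector:
    # tied decoder (transpose of ENCODER), needed only while training
    return [sum(ENCODER[i][j] * h[i] for i in range(len(h)))
            for j in range(len(ENCODER[0]))]

def reconstruction_error(pairs: List[Tuple[Vector, Vector]]) -> float:
    # mean squared error between decode(encode(input)) and the target (= input)
    total = 0.0
    for x, target in pairs:
        y = decode(encode(x))
        total += sum((yi - ti) ** 2 for yi, ti in zip(y, target))
    return total / len(pairs)

samples = [[1.0, 1.0, 0.0, 0.0], [0.0, 0.0, 2.0, 2.0], [3.0, 3.0, -1.0, -1.0]]
pairs = training_pairs(samples)
print(reconstruction_error(pairs))

# After training, drop the decoder: the encoder alone maps 4-D inputs to 2-D codes.
codes = [encode(x) for x in samples]
print(codes)
```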

Autoencoders are useful for some things, but turned out not to be nearly as necessary as we once thought. Around 10 years ago, we thought that deep nets would not learn correctly if trained with only backprop of the supervised cost. We thought that deep nets would also need an unsupervised cost, like the autoencoder cost, to regularize them. When Google Brain built their first very large neural network to recognize objects in images, it was an autoencoder (and it didn’t work very well at recognizing objects compared to later approaches). Today, we know we are able to recognize images just by using backprop on the supervised cost as long as there is enough labeled data. There are other tasks where we do still use autoencoders, but they’re not the fundamental solution to training deep nets that people once thought they were going to be. P.S. Just to be clear, I’m not endorsing the view that “autoencoders are a failure.” I’m explaining why autoencoders are not as prominent a part of the deep learning landscape as they were in 2006–2012. Autoencoders are successful at some things, just not as many as they were expected to be. Ian Goodfellow - Wikipedia

Is there a difference between autoencoders and encoder-decoders?

Provided by Alexander Ororbia (Quora)

Here is how I would (informally) view these two terms. Think of the encoder-decoder as a very general framework/architecture design. In this design, you have some function that maps an input space, whatever it may be, to a different/latent space (the “encoder”). The decoder is simply the complementary function that maps the (encoder’s) latent space to another target space (whatever it is we want to decode from the latent space). Note that by simply mapping spaces, and linking them through a shared latent space, you could do something like map a sequence of tokens in English (i.e., an English sentence) to a sequence of tokens in French (i.e., the translation of that English sentence into French). In some neural translation models, you map an English sequence to a fixed vector (say, the last state of the recurrent network you use to process the sentence iteratively, reached upon hitting a punctuation mark), from which you then decode a French sequence.
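A structural sketch of the encoder-decoder idea described above, assuming a tiny recurrent encoder whose last state is the fixed-size latent vector and a greedy decoder that emits target-space tokens. The vocabularies, sizes, and weights are hypothetical and untrained (random), so the emitted “French” tokens are meaningless; only the shape of the computation is illustrated. Unlike a real sequence-to-sequence model, this decoder also does not feed its previously emitted token back in.

```python
import math
import random
from typing import List

random.seed(0)
HIDDEN = 4
SRC_VOCAB = ["the", "cat", "sleeps", "."]
TGT_VOCAB = ["le", "chat", "dort", ".", "<eos>"]

def rand_matrix(rows: int, cols: int) -> List[List[float]]:
    return [[random.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

# Hypothetical, untrained parameters: only the data flow is meaningful here.
EMBED = {tok: [random.uniform(-1, 1) for _ in range(HIDDEN)] for tok in SRC_VOCAB}
W_ENC = rand_matrix(HIDDEN, HIDDEN)
W_DEC = rand_matrix(HIDDEN, HIDDEN)
W_OUT = rand_matrix(len(TGT_VOCAB), HIDDEN)

def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def encode(tokens: List[str]) -> List[float]:
    """Fold a variable-length source sequence into one fixed-size latent vector."""
    h = [0.0] * HIDDEN
    for tok in tokens:
        h = [math.tanh(a + b) for a, b in zip(matvec(W_ENC, h), EMBED[tok])]
    return h  # the last recurrent state is the latent code

def decode(z: List[float], max_len: int = 6) -> List[str]:
    """Greedily emit target-space tokens, starting from the latent vector."""
    h, out = z, []
    for _ in range(max_len):
        scores = matvec(W_OUT, h)
        tok = TGT_VOCAB[scores.index(max(scores))]
        if tok == "<eos>":
            break
        out.append(tok)
        h = [math.tanh(a) for a in matvec(W_DEC, h)]
    return out

z = encode(["the", "cat", "sleeps", "."])
print(len(z), decode(z))
```

Whatever the source length, `encode` returns a vector of the same size, which is the "fixed vector" bottleneck the answer describes.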

An autoencoder (or auto-associator, as it was classically known) is a special case of an encoder-decoder architecture: first, the target space is the same as the input space (e.g., English inputs to English targets), and second, the target is to be equal to the input. So we would be mapping something like vectors to vectors (note that this could still be a sequence, as there are recurrent autoencoders, but in this case you are not predicting the future, simply reconstructing the present given a state/memory and the present). Now, an autoencoder is really meant to do auto-association, so we are essentially trying to build a model to “recall” the input. This allows the autoencoder to do things like pattern completion: if we give our autoencoder a partially corrupted input, it would be able to “retrieve” the correct pattern from memory.
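Pattern completion can be sketched with a linear auto-associator under explicit assumptions: the weights are hypothetical and fixed by hand so that the stored patterns live in the span of `[1, 1, 0, 0]` and `[0, 0, 1, 1]`, and the missing entry is filled by one simple scheme among several (repeatedly reconstruct, then reset the observed entries to their known values). It illustrates “recalling” a stored pattern rather than any particular published model.

```python
import math
from typing import List, Optional, Set

S = 1 / math.sqrt(2)
# Hypothetical "trained" tied-weight linear auto-associator (encoder W, decoder W^T).
W = [[S, S, 0.0, 0.0],
     [0.0, 0.0, S, S]]

def autoencode(x: List[float]) -> List[float]:
    h = [sum(w * xi for w, xi in zip(row, x)) for row in W]  # encode
    return [sum(W[i][j] * h[i] for i in range(len(W)))        # decode
            for j in range(len(x))]

def complete(partial: List[Optional[float]], missing: Set[int],
             steps: int = 50) -> List[float]:
    """Fill the entries indexed by `missing`, keeping observed entries clamped."""
    x = [0.0 if j in missing else v for j, v in enumerate(partial)]
    for _ in range(steps):
        y = autoencode(x)
        # keep the reconstruction only where the input was missing/corrupted
        x = [y[j] if j in missing else partial[j] for j in range(len(x))]
    return x

# The stored pattern [1, 1, 0, 0] with its second entry lost:
print(complete([1.0, None, 0.0, 0.0], missing={1}))
```

Each pass moves the missing entry halfway toward the stored pattern, so after a few dozen iterations it is recovered to within floating-point precision.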

Also, generally, we build autoencoders because we are more interested in getting a representation rather than learning a predictive model (though one could argue we get pretty useful representations from predictive models as well…).

But the short story is simple: an autoencoder is really a special instance of an encoder-decoder. This view is especially useful when we want to decouple the encoder and decoder to create something like a Variational Autoencoder (VAE), which also frees us from having to make the decoder symmetrical in design to the encoder (e.g., the encoder could be a 2-layer convolutional network while the decoder could be a 3-layer deconvolutional network). In a variational autoencoder, the idea of latent space becomes clearer, because now we truly map the input (such as an image or document vector) to a latent variable, from which we reconstruct the original (same) input (such as the image or document vector).
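A hedged sketch of what changes when the encoder and decoder are decoupled into a VAE, under common textbook assumptions: the encoder outputs a mean and a log-variance per latent dimension, a latent sample is drawn with the reparameterization trick, and the loss adds a KL term pulling the latent distribution toward a standard normal prior. The function names and the squared-error reconstruction term are illustrative choices, and the encoder and decoder networks themselves are omitted.

```python
import math
import random
from typing import List

def reparameterize(mu: List[float], logvar: List[float],
                   rng: random.Random) -> List[float]:
    # z = mu + sigma * eps with eps ~ N(0, 1); the randomness sits in eps,
    # so z stays differentiable with respect to mu and logvar.
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, logvar)]

def kl_to_standard_normal(mu: List[float], logvar: List[float]) -> float:
    # Closed-form KL( N(mu, diag(exp(logvar))) || N(0, I) )
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv)
                      for m, lv in zip(mu, logvar))

def vae_loss(x: List[float], x_hat: List[float],
             mu: List[float], logvar: List[float]) -> float:
    # Assumed reconstruction term: squared error, which matches a Gaussian
    # likelihood up to scaling and additive constants.
    recon = sum((a - b) ** 2 for a, b in zip(x, x_hat))
    return recon + kl_to_standard_normal(mu, logvar)

rng = random.Random(0)
mu, logvar = [0.5, -0.2], [0.0, -1.0]  # hypothetical encoder outputs for one input
z = reparameterize(mu, logvar, rng)    # latent sample that would be fed to the decoder
print(z, kl_to_standard_normal(mu, logvar))
```

Because the decoder only ever sees `z`, nothing here requires it to mirror the encoder's layers, which is the decoupling described above.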

I also think a great deal of confusion comes from misuse of terminology. Nowadays, ML folk especially tend to mix and match words (some do so to make things sound cooler or find buzzwords that will attract readers/funders/fame/glory/etc.), but this might be partly due to the re-branding of artificial Neural Networks as “deep learning” ;-) [since, in the end, everyone wants the money to keep working]

Masked Autoencoder

- Bidirectional Encoder Representations from Transformers (BERT)