Difference between revisions of "Graph"

m |

|||

| (176 intermediate revisions by the same user not shown) | |||

| Line 2: | Line 2: | ||

|title=PRIMO.ai | |title=PRIMO.ai | ||

|titlemode=append | |titlemode=append | ||

| − | |keywords=artificial, intelligence, machine, learning, models | + | |keywords=ChatGPT, artificial, intelligence, machine, learning, GPT-4, GPT-5, NLP, NLG, NLC, NLU, models, data, singularity, moonshot, Sentience, AGI, Emergence, Moonshot, Explainable, TensorFlow, Google, Nvidia, Microsoft, Azure, Amazon, AWS, Hugging Face, OpenAI, Tensorflow, OpenAI, Google, Nvidia, Microsoft, Azure, Amazon, AWS, Meta, LLM, metaverse, assistants, agents, digital twin, IoT, Transhumanism, Immersive Reality, Generative AI, Conversational AI, Perplexity, Bing, You, Bard, Ernie, prompt Engineering LangChain, Video/Image, Vision, End-to-End Speech, Synthesize Speech, Speech Recognition, Stanford, MIT |description=Helpful resources for your journey with artificial intelligence; videos, articles, techniques, courses, profiles, and tools |

| − | |description=Helpful resources for your journey with artificial intelligence; videos, articles, techniques, courses, profiles, and tools | + | |

| + | <!-- Google tag (gtag.js) --> | ||

| + | <script async src="https://www.googletagmanager.com/gtag/js?id=G-4GCWLBVJ7T"></script> | ||

| + | <script> | ||

| + | window.dataLayer = window.dataLayer || []; | ||

| + | function gtag(){dataLayer.push(arguments);} | ||

| + | gtag('js', new Date()); | ||

| + | |||

| + | gtag('config', 'G-4GCWLBVJ7T'); | ||

| + | </script> | ||

}} | }} | ||

| − | [ | + | [https://www.youtube.com/results?search_query=Knowledge+Graph+AI Youtube search...] |

| − | [ | + | [https://www.google.com/search?q=Knowledge+Graph+AI ...Google search] |

| + | |||

| + | * [[Excel]] ... [[LangChain#Documents|Documents]] ... [[Database|Database; Vector & Relational]] ... [[Graph]] ... [[LlamaIndex]] | ||

| + | * [[Analytics]] ... [[Visualization]] ... [[Graphical Tools for Modeling AI Components|Graphical Tools]] ... [[Diagrams for Business Analysis|Diagrams]] & [[Generative AI for Business Analysis|Business Analysis]] ... [[Requirements Management|Requirements]] ... [[Loop]] ... [[Bayes]] ... [[Network Pattern]] | ||

| + | * [[Agents]] ... [[Robotic Process Automation (RPA)|Robotic Process Automation]] ... [[Assistants]] ... [[Personal Companions]] ... [[Personal Productivity|Productivity]] ... [[Email]] ... [[Negotiation]] ... [[LangChain]] | ||

| + | * [[Perspective]] ... [[Context]] ... [[In-Context Learning (ICL)]] ... [[Transfer Learning]] ... [[Out-of-Distribution (OOD) Generalization]] | ||

| + | * [[Causation vs. Correlation]] ... [[Autocorrelation]] ...[[Convolution vs. Cross-Correlation (Autocorrelation)]] | ||

| + | * [[Data Governance#Implementing Data Governance with Knowledge Graphs|Implementing Data Governance with Knowledge Graphs]] | ||

| + | * [[Architectures]] for AI ... [[Generative AI Stack]] ... [[Enterprise Architecture (EA)]] ... [[Enterprise Portfolio Management (EPM)]] ... [[Architecture and Interior Design]] | ||

| + | * [[Artificial General Intelligence (AGI) to Singularity]] ... [[Inside Out - Curious Optimistic Reasoning| Curious Reasoning]] ... [[Emergence]] ... [[Moonshots]] ... [[Explainable / Interpretable AI|Explainable AI]] ... [[Algorithm Administration#Automated Learning|Automated Learning]] | ||

| + | * [[Telecommunications]] ... [[Computer Networks]] ... [[Telecommunications#5G|5G]] ... [[Satellite#Satellite Communications|Satellite Communications]] ... [[Quantum Communications]] ... [[Agents#Communication | Agents]] ... [[AI Generated Broadcast Content|AI Broadcast; Radio, Stream, TV]] | ||

| + | * [[Graph Convolutional Network (GCN), Graph Neural Networks (Graph Nets), Geometric Deep Learning]] | ||

| + | * [[Embedding]] ... [[Fine-tuning]] ... [[Retrieval-Augmented Generation (RAG)|RAG]] ... [[Agents#AI-Powered Search|Search]] ... [[Clustering]] ... [[Recommendation]] ... [[Anomaly Detection]] ... [[Classification]] ... [[Dimensional Reduction]]. [[...find outliers]] | ||

| + | * [[Data Science]] ... [[Data Governance|Governance]] ... [[Data Preprocessing|Preprocessing]] ... [[Feature Exploration/Learning|Exploration]] ... [[Data Interoperability|Interoperability]] ... [[Algorithm Administration#Master Data Management (MDM)|Master Data Management (MDM)]] ... [[Bias and Variances]] ... [[Benchmarks]] ... [[Datasets]] | ||

| + | * [[Papers Search#Connected Papers | Connected Papers]] using [[Papers Search#Semantic Scholar| Semantic Scholar]] to explore connected papers in a visual graph | ||

| + | * [https://en.wikipedia.org/wiki/Knowledge_graph Knowledge graph | Wikipedia] | ||

| + | * [https://ai.stanford.edu/blog/introduction-to-knowledge-graphs An Introduction to Knowledge Graphs | SAIL Blog] | ||

| + | * [https://pathmind.com/wiki/graph-analysis A Beginner's Guide to Graph Analytics and Deep Learning | Chris Nicholson - A.I. Wiki pathmind] | ||

| + | * [https://neo4j.com/blog/7-ways-data-is-graph/ 7 Ways Your Data Is Telling You It’s a Graph | Karen Lopez - InfoAdvisors - Neo4j] | ||

| + | * [https://patterns.dataincubator.org/book/linked-data-patterns.pdf Linked Data Patterns book | leigh Dodds and Ian Davis] | ||

| + | * [https://www.topbots.com/guide-to-knowledge-graphs/ A Guide To Knowledge Graphs | Mohit Mayank - TOPBOTS] | ||

| + | * [https://blog.resolute.ai/graph-databases-and-knowledge-graphs Graph databases and knowledge graphs with examples | Resolute] | ||

| + | * [https://www.bcs.org/articles-opinion-and-research/what-is-a-knowledge-graph-and-how-are-they-changing-data-analytics What is a knowledge graph and how are they changing data analytics?] | ||

| + | * [https://stackoverflow.com/questions/68398040/when-to-use-graph-databases-ontologies-and-knowledge-graphs When to use graph databases, ontologies, and knowledge graphs] | ||

| + | |||

| + | = Graph Use Cases = | ||

| + | * [https://medium.com/@dmccreary/a-taxonomy-of-graph-use-cases-2ba34618cf78 A Taxonomy of Graph Use Cases | Dan McCreary - Medium] | ||

| + | * [https://www.slideshare.net/maxdemarzi/graph-database-use-cases Graph database Use Cases | Max De Marzi - Slideshare] | ||

| + | |||

| + | |||

| + | https://miro.medium.com/max/759/1*6k0TELu2ewH7KIFERhj-DA.png | ||

| + | |||

| + | * Machine Learning | ||

| + | * Portfolio Analytics (Asset Management) | ||

| + | * Master Data Management | ||

| + | * Data Integration | ||

| + | * Social Networks | ||

| + | * Genomics (Gene Sequencing) BioInformatics | ||

| + | * Epidemiology | ||

| + | * Web Browsing | ||

| + | * Semantic Web | ||

| + | * [[Agents#Communication | communication]] Networks (Network Cell Analysis) | ||

| + | * Internet of Things (Sensor Networks) | ||

| + | * Recommendations | ||

| + | * Fraud Detection (Money Laundering) | ||

| + | * Geo Routing (Public Transport) | ||

| + | * Customer 360 | ||

| + | * Insurance Risk Analysis | ||

| + | * Content Management & Access Control | ||

| + | * [[Privacy]], Risk and Compliance | ||

| + | |||

| + | [https://www.comparethecloud.net/articles/adding-context-will-take-ai-to-the-next-level/ Adding Context Will Take AI to the Next Level | Neo4j] | ||

| + | |||

| + | <img src="https://www.comparethecloud.net/wp-content/uploads/2019/09/AIGraphic.jpg" width="800"> | ||

| + | |||

| + | |||

| + | |||

| + | <img src="https://1.bp.blogspot.com/-nmL6-ewUCDY/YKbGXaZSYUI/AAAAAAAAHoY/nViZMBlmECA5SkkO1nPpa2Mxl6m7wW-QQCLcBGAsYHQ/w640-h382/image1.gif" width="800"> | ||

| + | |||

| + | |||

| + | = Knowledge Graph with Large Language Models (LLM) = | ||

| + | * [[Generative AI]] | ||

| + | * [[Large Language Model (LLM)]] | ||

| + | * [https://medium.com/@peter.lawrence_47665/large-language-model-knowledge-graph-store-yes-by-fine-tuning-llm-with-kg-f88b556959e6 Large Language Model = Knowledge Graph Store? Yes, by fine-tuning LLM with KG | Peter Lawrence - Medium] | ||

| + | * [https://thecaglereport.com/2023/03/24/chatgpt-llms-vs-knowledge-graphs/ ChatGPT (LLMs) vs. Knowledge Graphs | Kurt Cagle - The Cagle Report] | ||

| + | * [https://www.linkedin.com/pulse/using-knowledge-graphs-reduce-output-errors-large-language-doug-dunn Using Knowledge Graphs to Reduce Output Errors in Large Language Models | Doug Dunn] | ||

| + | |||

| + | {|<!-- T --> | ||

| + | | valign="top" | | ||

| + | {| class="wikitable" style="width: 550px;" | ||

| + | || | ||

| + | <youtube>WqYBx2gB6vA</youtube> | ||

| + | <b>The Future of Knowledge Graphs in a World of [[Large Language Model (LLM)]] | ||

| + | </b><br>Post-conference recording of the keynote for May 11 at the Knowledge Graph Conference 2023 in New York, NY. How do Knowledge Graphs fit into the quickly changing world that large language models are shaping? | ||

| + | |} | ||

| + | |<!-- M --> | ||

| + | | valign="top" | | ||

| + | {| class="wikitable" style="width: 550px;" | ||

| + | || | ||

| + | <youtube>FRFqSDs1-3s</youtube> | ||

| + | <b>Train GPT 3 on Any Corpus of Data with [[ChatGPT]] and Knowledge Graphs SCOTUS Opinions | ||

| + | </b><br>Train GPT 3 on Any Corpus of Data with [[ChatGPT]] and Knowledge Graphs SCOTUS Opinions #ariyelacademy #chatgpt #chatgpt3 Ariyel Academy is an Institute of Higher Education on a Mission to Empower Individuals | ||

| + | |} | ||

| + | |}<!-- B --> | ||

| + | {|<!-- T --> | ||

| + | | valign="top" | | ||

| + | {| class="wikitable" style="width: 550px;" | ||

| + | || | ||

| + | <youtube>RHA0-djaiA8</youtube> | ||

| + | <b>The Cagle Report. Issue #4. Interview With [[ChatGPT]] | ||

| + | </b><br>In which editor Kurt Cagle interviews [[ChatGPT]] about large language models and knowledge graphs. [https://thecaglereport.com/2023/04/02/an-interview-with-chatgpt-about-knowledge-graphs/ Posted on April 2, 2023 by Kurt Cagle] | ||

| + | |} | ||

| + | |<!-- M --> | ||

| + | | valign="top" | | ||

| + | {| class="wikitable" style="width: 550px;" | ||

| + | || | ||

| + | <youtube>mYCIRcobukI</youtube> | ||

| + | <b>GraphGPT: Transform Text into Knowledge Graphs with GPT-3 | ||

| + | </b><br>GraphGPT converts unstructured natural language into a knowledge graph. Pass in the synopsis of your favorite movie, a passage from a confusing Wikipedia page, or a transcript from a video to generate a graph visualization of entities and their relationships. A classic use case of prompt engineering. | ||

| + | |||

| + | * Github: https://github.com/varunshenoy/GraphGPT | ||

| + | * Demo: https://graphgpt.vercel.app/ | ||

| + | |||

| + | Enjoy reading articles? then consider subscribing to Medium membership, it just 5$ a month for unlimited access to all free/paid content. | ||

| + | Subscribe now - https://prakhar-mishra.medium.com/membership | ||

| + | |} | ||

| + | |}<!-- B --> | ||

| + | |||

| + | = <span id="Knowledge Graph"></span>Knowledge Graph = | ||

| + | * [[Wiki]] | ||

| + | * [https://www.manning.com/books/ai-powered-search AI Powered Search | Trey Grainger] | ||

| + | * [https://lucene.apache.org/solr/ Apache Solr] ...open source enterprise search platform built on [https://lucene.apache.org/[https://www.w3schools.com/js/js_json_http.asp Apache Lucene™]. Solr is highly scalable, providing fully fault tolerant distributed indexing, search and analytics. It exposes Lucene's features through easy to use [https://www.w3schools.com/js/js_json_http.asp JSON/HTTP] interfaces or native clients for [https://www.java.com/en/ Java] and other languages. The [https://lucene.apache.org/pylucene/ PyLucene] sub project provides [[Python]] bindings for Lucene. | ||

| + | * [https://www.manning.com/books/relevant-search Relevant Search: With applications for Solr and Elasticsearch | Doug Turnbull and John Berryman] | ||

| + | * [https://blog.mikemccandless.com/ Changing Bits] and [https://www.manning.com/books/lucene-in-action-second-edition Lucene in Action | Michael McCandless] | ||

| + | * [https://ceur-ws.org/Vol-2873/paper12.pdf Knowledge Graph Lifecycle: Building and Maintaining Knowledge Graphs] | ||

| + | * [https://arxiv.org/abs/2303.13948 2303.13948 Knowledge Graphs: Opportunities and Challenges | arXiv.org] | ||

| + | * [https://dl.acm.org/doi/pdf/10.1145/3331166 Industry-scale knowledge graphs: lessons and challenges] | ||

| + | * [https://arxiv.org/abs/2206.07472 2206.07472 Collaborative Knowledge Graph Fusion by Exploiting the | arXiv.org] | ||

| + | * [https://openreview.net/pdf?id=GumxKk-3fV- Knowledge Graph Lifecycle: Building and maintaining Knowledge Graphs] | ||

| + | |||

| + | A knowledge graph is a directed labeled graph in which we have associated domain specific meanings with nodes and edges. A knowledge graph formally represents semantics by describing entities and their relationships. Knowledge graphs may make use of ontologies as a schema layer. By doing this, they allow logical inference for retrieving implicit knowledge rather than only allowing queries requesting explicit knowledge. Knowledge graphs give you the tools to analyze and visualize the information in a graph database. | ||

| + | |||

| + | <youtube>XYoGb5MbDsc</youtube> | ||

| + | <youtube>7qIBex7a0kE</youtube> | ||

| + | |||

| + | Some of the challenges of building and maintaining knowledge graphs are: data acquisition, data representation, data quality, data integration, data alignment, data evolution, data scalability, data security, and data usability. | ||

| + | |||

| + | Here are some more details about the challenges of building and maintaining knowledge graphs: | ||

| + | |||

| + | * <b>Data acquisition</b>: is the process of collecting and extracting data from various sources to populate a knowledge graph. Data acquisition can be challenging because the sources may be heterogeneous, incomplete, inconsistent, noisy, or unstructured. Data acquisition may also involve different methods, such as crawling, scraping, parsing, annotating, or linking data. | ||

| + | * <b>Data representation</b>: is the process of modeling and encoding data in a suitable format for a knowledge graph. Data representation can be challenging because the format may vary depending on the type of knowledge graph (e.g., RDF-based or LPG-based), the schema or ontology used, the level of granularity or abstraction, and the trade-offs between expressiveness and efficiency. | ||

| + | * <b>Data quality</b>: is the process of ensuring that the data in a knowledge graph is accurate, consistent, complete, and up-to-date. Data quality can be challenging because the data may contain errors, ambiguities, redundancies, or conflicts that need to be detected and resolved. Data quality may also involve different techniques, such as validation, verification, cleaning, deduplication, or reconciliation. | ||

| + | * <b>Data integration</b>: is the process of combining and aligning data from multiple sources into a unified knowledge graph. Data integration can be challenging because the sources may have different schemas, vocabularies, formats, or semantics that need to be harmonized and mapped. Data integration may also involve different approaches, such as schema-based, instance-based, or hybrid-based integration. | ||

| + | * <b>Data alignment</b>: is the process of identifying and linking equivalent or related entities or concepts across different sources or knowledge graphs. Data alignment can be challenging because the entities or concepts may have different names, identifiers, descriptions, or attributes that need to be matched and aligned. Data alignment may also involve different methods, such as rule-based, similarity-based, learning-based, or crowdsourcing-based alignment. | ||

| + | * <b>Data evolution</b>: is the process of updating and maintaining the data in a knowledge graph over time. Data evolution can be challenging because the data may change due to new facts, events, sources, or requirements that need to be incorporated and reflected in the knowledge graph. Data evolution may also involve different aspects, such as versioning, provenance, change detection, change propagation, or change notification. | ||

| + | * <b>Data scalability</b>: is the process of ensuring that the knowledge graph can handle large volumes of data and queries without compromising performance or quality. Data scalability can be challenging because the data may grow rapidly and exceed the capacity or resources of the system that stores and processes it. Data scalability may also involve different solutions, such as distributed computing, parallel processing, caching, indexing, or compression. | ||

| + | * <b>Data security</b>: is the process of protecting the data in a knowledge graph from unauthorized access or misuse. Data security can be challenging because the data may contain sensitive or confidential information that needs to be safeguarded and controlled. Data security may also involve different measures, such as encryption, authentication, authorization, auditing, or anonymization. | ||

| + | * <b>Data usability</b>: is the process of ensuring that the data in a knowledge graph is accessible and understandable by users and applications. Data usability can be challenging because the data may be complex or abstract that needs to be simplified and explained. Data usability may also involve different features, such as query languages, APIs, interfaces, visualizations, or explanations. | ||

| + | |||

| + | == Applications of Knowledge Graphs == | ||

| + | Knowledge graphs have started to play a central role in representing the information extracted using natural language processing and computer vision. Knowledge graphs can also be used for data integration, data quality, data governance, data discovery, data analytics, and machine learning. | ||

| − | * [ | + | * A semantic knowledge graph can make use of an ontology to describe entities and their relationships, but it also contains factual knowledge and definitions based on business needs, focused on the data integration and analysis of the domain |

| + | * An ontology is a formal and standardized representation of knowledge that defines the concepts and relationships in a domain. An ontology can be used as a schema layer for a knowledge graph. | ||

| + | |||

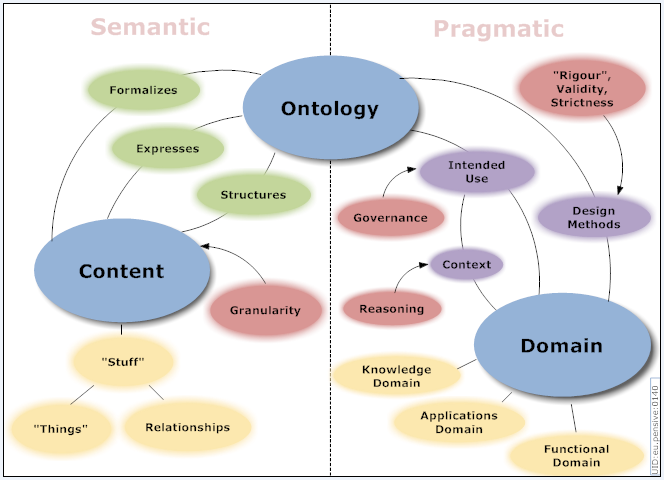

| + | === <span id="Ontology"></span>Ontology === | ||

| + | * [https://en.wikipedia.org/wiki/Ontology Ontology | Wikipedia] | ||

| + | * [https://research-methodology.net/research-philosophy/ontology Ontology - Research-Methodology] | ||

| + | * [https://protegewiki.stanford.edu/wiki/Protege_Ontology_Library Protege Ontology Library] | ||

| + | * [https://protege.stanford.edu/publications/ontology_development/ontology101-noy-mcguinness.html What is an ontology and why we need it - Protégé] | ||

| + | * [https://protege.stanford.edu/ Protégé | Stanford] plug-in architecture can be adapted to build both simple and complex ontology-based applications. Developers can integrate the output of Protégé with rule systems or other problem solvers to construct a wide range of intelligent systems. | ||

| + | |||

| + | An ontology is a formal and standardized representation of knowledge that defines the concepts and relationships in a domain. An ontology can be used to break down data silos and enable interoperability among heterogeneous data sources. An ontology can also provide a common vocabulary and a shared understanding for a domain. An ontology can enable interoperability and inference among heterogeneous data sources, supporting a semantic knowledge graph to enable data quality, governance, discovery, analytics, and machine learning. Can incorporate computable descriptions that can bring insight in a wide set of compelling applications including more precise knowledge capture, semantic data integration, sophisticated query answering, and powerful association mining - thereby delivering key value for health care and the life sciences. Ontology learning (ontology extraction, ontology generation, or ontology acquisition) is the automatic or semi-automatic creation of ontologies, including extracting the corresponding domain's terms and the relationships between the concepts that these terms represent from a corpus of natural language text, and [[Data Quality#Data Encoding|encoding]] them with an ontology language for easy retrieval. As building ontologies manually is extremely labor-intensive and time-consuming, there is great motivation to automate the process. Typically, the process starts by extracting terms and concepts or noun phrases from plain text using linguistic processors such as part-of-speech tagging and phrase chunking. Then statistical or symbolic techniques are used to extract relation signatures, often based on pattern-based or definition-based [https://en.m.wikipedia.org/wiki/Hyponymy_and_hypernymy hypernym] extraction techniques. [https://en.wikipedia.org/wiki/Ontology_learning Ontology learning | Wikipedia] | ||

| + | |||

| + | https://ontolog.cim3.net/file/work/OntologySummit2007/workshop/ontology-dimensions-map_20070423b.png | ||

| + | |||

| + | <youtube>WayalrOkCkY</youtube> | ||

| + | <youtube>k5X12mdEvb8</youtube> | ||

| + | <youtube>dVZFlg1pN8A</youtube> | ||

| + | |||

| + | |||

| + | ==== Components of Ontology ==== | ||

| + | * [https://en.wikipedia.org/wiki/Ontology_components Ontology components | Wikipedia] | ||

| + | An ontology typically consists of the following components: classes, instances, properties, axioms, and annotations. Classes are the concepts or categories in the domain. Instances are the individual members of the classes. Properties are the attributes or relations that describe the classes or instances. Axioms are the logical rules or constraints that define the semantics of the ontology. Annotations are the metadata or comments that provide additional information about the ontology elements. | ||

| + | |||

| + | * <b>Classes</b>: are the concepts or categories in the domain. Classes represent sets, collections, types, or kinds of things. Classes can be organized into a hierarchy using subsumption relations, such as subclass-of or superclass-of. Classes can also have multiple inheritance, meaning that a class can have more than one superclass. For example, in an ontology of movies, some classes could be: Movie, Director, Actor, Genre, etc. | ||

| + | * <b>Instances</b>: are the individual members of the classes. Instances are also known as objects or particulars. Instances represent the basic or "ground level" components of an ontology. Instances can have properties and relations that describe them. For example, in an ontology of movies, some instances could be: Titanic (an instance of Movie), James Cameron (an instance of Director), Leonardo DiCaprio (an instance of Actor), Romance (an instance of Genre), etc. | ||

| + | * <b>Properties</b>: are the attributes or relations that describe the classes or instances. Properties are also known as features, characteristics, parameters, or slots. Properties can have values that are either data types (such as numbers or strings) or other instances. Properties can also have cardinality constraints that specify how many values a property can have for a given class or instance. For example, in an ontology of movies, some properties could be: title (a property of Movie with a string value), directedBy (a property of Movie with an instance value of Director), hasGenre (a property of Movie with an instance value of Genre), etc. | ||

| + | * <b>Axioms</b>: are the logical rules or constraints that define the semantics of the ontology. Axioms are also known as statements or assertions. Axioms can be used to express the necessary and sufficient conditions for class membership, the equivalence or disjointness of classes, the transitivity or symmetry of properties, the domain and range restrictions of properties, and other logical inferences that can be drawn from the ontology. For example, in an ontology of movies, some axioms could be: Movie is equivalent to Film, Director is disjoint from Actor, directedBy is inverse of directs, hasGenre has domain Movie and range Genre, etc. | ||

| + | * <b>Annotations</b>: are the metadata or comments that provide additional information about the ontology elements. Annotations are also known as labels or descriptions. Annotations can be used to document the source, provenance, purpose, meaning, usage, or history of the ontology elements. Annotations can also be used to attach natural language labels or definitions to the ontology elements for human readability. For example, in an ontology of movies, some annotations could be: Movie has label "Movie" and definition "A motion picture", directedBy has label "Directed by" and comment "Indicates who directed a movie", Titanic has label "Titanic" and comment "A movie released in 1997", etc. | ||

| + | |||

| + | ==== Types of Ontology ==== | ||

| + | There are different types of ontologies depending on their scope and level of abstraction. Some examples are: top-level ontology, domain ontology, task ontology, application ontology, and foundational ontology. | ||

| + | |||

| + | * <b>Top-level ontology</b>: is a general and abstract ontology that provides a common foundation for more specific ontologies. A top-level ontology defines the most basic and universal concepts and relations that are applicable to any domain, such as time, space, causality, identity, etc. Examples of top-level ontologies are: Basic Formal Ontology (BFO), Descriptive Ontology for Linguistic and Cognitive Engineering (DOLCE), General Formal Ontology (GFO), and Suggested Upper Merged Ontology (SUMO). | ||

| + | * <b>Domain ontology</b>: is a specific and concrete ontology that describes the concepts and relations that are relevant to a particular domain or area of interest. A domain ontology can be derived from or aligned with a top-level ontology, but it also introduces new terms and definitions that are specific to the domain. Examples of domain ontologies are: Gene Ontology (GO) for biology, Financial Industry Business Ontology (FIBO) for finance, Foundational Model of Anatomy (FMA) for medicine, and Cyc for common sense knowledge. | ||

| + | * <b>Task ontology</b>: is a specialized ontology that defines the concepts and relations that are needed to perform a certain task or activity. A task ontology can be independent of or dependent on a domain ontology, depending on whether the task is generic or domain-specific. Examples of task ontologies are: Process Specification Language (PSL) for manufacturing processes, Simple Knowledge Organization System (SKOS) for knowledge organization, and Dublin Core Metadata Initiative (DCMI) for resource description. | ||

| + | * <b>Application ontology</b>: is a customized ontology that combines a domain ontology and a task ontology to support a specific application or system. An application ontology can be tailored to the needs and requirements of the application, such as the data sources, the user interface, the functionality, etc. Examples of application ontologies are: Friend of a Friend (FOAF) for social networking, Schema.org for web markup, and Music Ontology for music information retrieval. | ||

| + | * <b>Foundational ontology</b>: is a meta-level ontology that provides a formal and rigorous framework for developing and evaluating other ontologies. A foundational ontology aims to clarify the ontological commitments and assumptions of other ontologies, as well as to resolve inconsistencies and ambiguities among them. Examples of foundational ontologies are: OntoClean for ontological analysis, OntoUML for ontological modeling, and OntoGraph for ontological visualization. | ||

| + | |||

| + | = Knowledge Graph Computing = | ||

| + | {|<!-- T --> | ||

| + | | valign="top" | | ||

| + | {| class="wikitable" style="width: 550px;" | ||

| + | || | ||

| + | <youtube>Np768VAe_7I</youtube> | ||

| + | <b>Knowledge Graphs and Deep Learning 102 | ||

| + | </b><br>In this video, we are going to look into not so exciting developments that connect Deep Learning with Knowledge Graph and GANs… let’s just hope it’s more fun than “Machine Learning Memes for Convolutional Teens”. [https://www.youtube.com/watch?v=hQv8FNaJHEA GAN Explained Link] | ||

| + | The bot in the video is R2D2, which comes after OB1's 2nd gen in Star Wars. Audio change was a bit tricky. Topics Covered in the video 1. Graph Convolutional Networks 2. Semi-supervised Learning 3. Knowledge Graphs and Ontology 4. [[Embedding]] in Knowledge Graphs 5. Adversarial Learning in Knowledge Graphs (KBGANs) Please contribute to the initiative by donating to us via Patreon because we need the money to scale up our efforts and bring creative weirdos and nerdy dreamers together. Patreon Link: https://www.patreon.com/crazymuse Even something as small as 1$ per creation can make a collective difference. Join us on slack if you want to contribute to the scripts that we write for the video. Slack Link : https://goo.gl/GFW2My | ||

| + | Contributors for the Video 1. Script Writer : Jaley Dholakiya 2. Reviewers : Arjun Shetty, Sidharth Aiyar, Saikat Paul 3. Animator and Moderator : Jaley Dholakiya Trans-D [[embedding]] : https://www.aclweb.org/anthology/P15-1067 KBGANs : https://arxiv.org/pdf/1711.04071.pdf | ||

| + | |} | ||

| + | |<!-- M --> | ||

| + | | valign="top" | | ||

| + | {| class="wikitable" style="width: 550px;" | ||

| + | || | ||

| + | <youtube>D-bTGefJj0A</youtube> | ||

| + | <b>Knowledge Graphs & Deep Learning at YouTube | ||

| + | </b><br>Aurelien explains how you can combine Knowledge Graphs and Deep Learning to dramatically improve Search & Discovery systems. By using a combination of signals (audiovisual content, title & description and context), it is possible to find the main topics of a video. These topics can then be used to improve recommendations, search, structured browsing, ads, and much more. EVENT: dotAI 2018 SPEAKER: Aurelien Geron PERMISSIONS: dotconference Organizer provider Coding Tech with the permission to republish this video. | ||

| + | |} | ||

| + | |}<!-- B --> | ||

| + | {|<!-- T --> | ||

| + | | valign="top" | | ||

| + | {| class="wikitable" style="width: 550px;" | ||

| + | || | ||

| + | <youtube>_9CdTRxVRSc</youtube> | ||

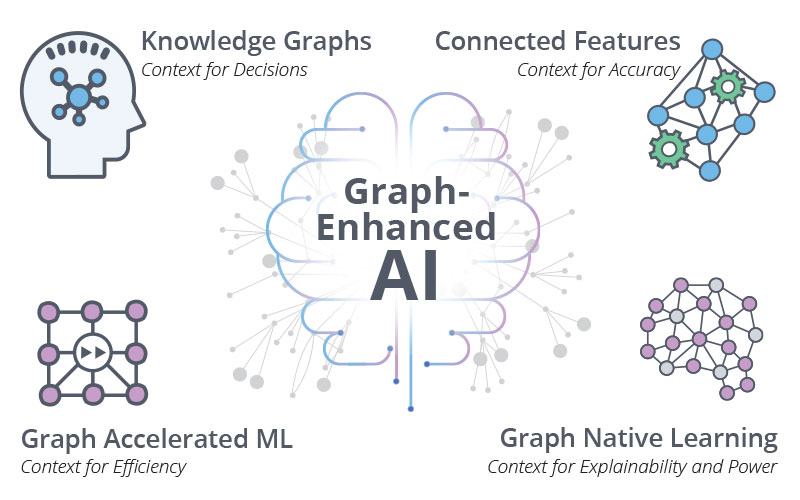

| + | <b>How Graphs are Changing AI | ||

| + | </b><br>Speaker: Amy Hodler, Neo4j | ||

| + | Abstract: Graph enhancements to Artificial Intelligence and Machine Learning are changing the landscape of intelligent applications. Beyond improving accuracy and modeling speed, graph technologies make building AI solutions more accessible and explainable. Join us to hear about the areas at the forefront of graph enhanced AI and ML, and find out which techniques are commonly used today and which hold the potential for disrupting industries. We'll look at the phases of graph enhanced AI as well as future-looking trends | ||

| + | |} | ||

| + | |<!-- M --> | ||

| + | | valign="top" | | ||

| + | {| class="wikitable" style="width: 550px;" | ||

| + | || | ||

| + | <youtube>2ZzGMzitNgo</youtube> | ||

| + | <b>Graph databases: The best kept secret for effective AI | ||

| + | </b><br>Emil Eifrem, Neo4j Co-Founder and CEO explains why connected data is the key to more accurate, efficient and credible learning systems. Using real world use cases ranging from space engineering to investigative journalism, he will outline how a relationships-first approach adds context to data - the key to explainable, well-informed predictions. Wish you were here? Sign up for 2 for 1 discount code for #WebSummit 2019 now: https://news.websummit.com/live-stream | ||

| + | |} | ||

| + | |}<!-- B --> | ||

| + | {|<!-- T --> | ||

| + | | valign="top" | | ||

| + | {| class="wikitable" style="width: 550px;" | ||

| + | || | ||

| + | <youtube>vZi-Ca9QBME</youtube> | ||

| + | <b>How Graph Technology Is Changing Artificial Intelligence and Machine Learning | ||

| + | </b><br>Graph enhancements to Artificial Intelligence and Machine Learning are changing the landscape of intelligent applications. Beyond improving accuracy and modeling speed, graph technologies make building AI solutions more accessible. Join us to hear about 6 areas at the forefront of graph enhanced AI and ML, and find out which techniques are commonly used today and which hold the potential for disrupting industries. Amy Hodler and Jake Graham, Neo4j #ArtificialIntelligence #GraphTechnology #GraphConnect | ||

| + | |} | ||

| + | |<!-- M --> | ||

| + | | valign="top" | | ||

| + | {| class="wikitable" style="width: 550px;" | ||

| + | || | ||

| + | <youtube>PlFcOJkKSLA</youtube> | ||

| + | <b>Graphs for AI and ML | ||

| + | </b><br>Presented by Jim Webber, Chief Scientist at Neo4j. Graph enhancements to Artificial Intelligence and Machine Learning are changing the landscape of intelligent applications. Beyond improving accuracy and modeling speed, graph technologies make building AI solutions more accessible. | ||

| + | |} | ||

| + | |}<!-- B --> | ||

| + | {|<!-- T --> | ||

| + | | valign="top" | | ||

| + | {| class="wikitable" style="width: 550px;" | ||

| + | || | ||

| + | <youtube>GekQqFZm7mA</youtube> | ||

| + | <b>Graph Databases Will Change Your Freakin' Life (Best Intro Into Graph Databases) | ||

| + | </b><br>Ed Finkler https://nodevember.org/talk/Ed%20Finkler ## WTF is a graph database - Euler and Graph Theory - Math -- it's hard, let's skip it - It's about data -- lots of it - But let's zoom in and look at the basics ## Relational model vs graph model - How do we represent THINGS in DBs - Relational vs Graph - Nodes and Relationships ## Why use a graph over a relational DB or other NoSQL? | ||

| + | - Very simple compared to RDBMS, and much more flexible - The real power is in relationship-focused data (most NoSQL dbs don't treat relationships as first-order) | ||

| + | * As related-ness and amount of data increases, so does advantage of Graph DBs - Much closer to our whiteboard model - Answering questions you didn't expect ## Let's look at some examples | ||

| + | * A bit o' live code | ||

| + | * Based on OSMI mental health in tech survey graph ## How do we use this from a programming lang? | ||

| + | * Neo4j 3.x uses a RESTful API and a native protocol (BOLT) * All client libraries are wrappers for this * Show a couple code examples with popular wrappers ## Resources * Graph Story | ||

| + | * OSMI Graph Blog | ||

| + | * Neo4j docs # [https://neo4j.com/blog/7-ways-data-is-graph/ More Resources] | ||

| + | |} | ||

| + | |<!-- M --> | ||

| + | | valign="top" | | ||

| + | {| class="wikitable" style="width: 550px;" | ||

| + | || | ||

| + | <youtube>zCEYiCxrL_0</youtube> | ||

| + | <b>An Introduction to Graph Neural Networks: Models and Applications | ||

| + | </b><br>MSR Cambridge, AI Residency Advanced Lecture Series An Introduction to Graph Neural Networks: Models and Applications Got it now: "Graph Neural Networks (GNN) are a general class of networks that work over graphs. By representing a problem as a graph — encoding the information of individual elements as nodes and their relationships as edges — GNNs learn to capture patterns within the graph. These networks have been successfully used in applications such as chemistry and program analysis. In this introductory talk, I will do a deep dive in the neural message-passing GNNs, and show how to create a simple GNN implementation. Finally, I will illustrate how GNNs have been used in applications. [https://www.microsoft.com/en-us/research/video/msr-cambridge-lecture-series-an-introduction-to-graph-neural-networks-models-and-applications/ More info] | ||

| + | |} | ||

| + | |}<!-- B --> | ||

| + | {|<!-- T --> | ||

| + | | valign="top" | | ||

| + | {| class="wikitable" style="width: 550px;" | ||

| + | || | ||

| + | <youtube>GNAKDBy_K-U</youtube> | ||

| + | <b>GraphConnect SF 2015 / Karen Lopez, InfoAdvisors - 7 Ways Your Data Is Telling You It’s a Graph | ||

| + | </b><br>Karen Lopez, Sr. Project Manager, InfoAdvisors | ||

| + | |} | ||

| + | |<!-- M --> | ||

| + | | valign="top" | | ||

| + | {| class="wikitable" style="width: 550px;" | ||

| + | || | ||

| + | <youtube>YrhBZUtgG4E</youtube> | ||

| + | <b>Graph Representation Learning (Stanford University) | ||

| + | </b><br>Machine Learning TV [https://snap.stanford.edu/class/cs224w-2018/handouts/09-node2vec.pdf Slide link] | ||

| + | |} | ||

| + | |}<!-- B --> | ||

| + | {|<!-- T --> | ||

| + | | valign="top" | | ||

| + | {| class="wikitable" style="width: 550px;" | ||

| + | || | ||

| + | <youtube>UGPT64wo7lU</youtube> | ||

| + | <b>[[Creatives#Yann LeCun|Yann LeCun]] - Graph [[Embedding]], Content Understanding, and Self-Supervised Learning | ||

| + | </b><br>Institut des Hautes Études Scientifiques (IHÉS) | ||

| + | |} | ||

| + | |<!-- M --> | ||

| + | | valign="top" | | ||

| + | {| class="wikitable" style="width: 550px;" | ||

| + | || | ||

| + | <youtube>yOYodfN84N4</youtube> | ||

| + | <b>A Skeptics Guide to Graph Databases - David Bechberger | ||

| + | </b><br>Graph databases are one of the hottest trends in tech, but is it hype or can they actually solve real problems? Well, the answer is both. In this talk, Dave will pull back the covers and show you the good, the bad, and the ugly of solving real problems with graph databases. He will demonstrate how you can leverage the power of graph databases to solve difficult problems or existing problems differently. He will then discuss when to avoid them and just use your favorite RDBMS. We will then examine a few of his failures so that we can all learn from his mistakes. By the end of this talk, you will either be excited to use a graph database or run away screaming, either way, you will be armed with the information you need to cut through the hype and know when to use one and when to avoid them. Check out more of our talks in the following links! NDC Conferences https://ndcoslo.com https://ndcconferences.com | ||

| + | |} | ||

| + | |}<!-- B --> | ||

| + | |||

| + | {|<!-- T --> | ||

| + | | valign="top" | | ||

| + | {| class="wikitable" style="width: 550px;" | ||

| + | || | ||

| + | <youtube>8XJes6XFjxM</youtube> | ||

| + | <b>The Unreasonable Effectiveness of Spectral Graph Theory: A Confluence of Algorithms, Geometry, and Physics | ||

| + | </b><br>James R. Lee, University of Washington [https://simons.berkeley.edu/events/openlectures2014-fall-4 Simons Institute Open Lectures] Spectral geometry has long been a powerful tool in many areas of mathematics and physics. In a similar way, spectral graph theory has played an important role in algorithms. But the last decade has seen a revolution of sorts: Fueled by fundamental computational questions, spectral methods have been challenged to address new kinds of problems, and have proved their worth by providing a remarkable set of new ideas and solutions. Starting with a basic physical process (heat diffusion), we will recall how the evolution of the system can be understood in terms of eigenmodes and their associated eigenvalues. The physical view has immediate computational import; for instance, Google models its users as agents diffusing over the web graph. This family of ideas describes the "reasonable" effectiveness of spectral graph theory. But then we will see that spectral objects can also precisely describe other phenomena in a surprisingly unreasonable way. This will lead us to confront some of the most fundamental problems in algorithms and complexity theory from a spectral [[perspective]]. | ||

| + | |} | ||

| + | |}<!-- B --> | ||

| + | |||

| + | == Graph Databases == | ||

| + | * [https://en.wikipedia.org/wiki/Graph_database Graph Databases (GDB) | Wikipedia] | ||

| + | * [https://www.linkedin.com/pulse/sql-now-gql-alastair-green/?trackingId=JHusYOenSoa23wu2xoAOvw%3D%3D SQL ... and now GQL | Alastair Green] | ||

| + | * [https://aws.amazon.com/nosql/graph/ What Is a Graph Database? | Amazon AWS] | ||

| + | * [https://www.revolvy.com/folder/Graph-databases/197279 Graph databases] | ||

| + | |||

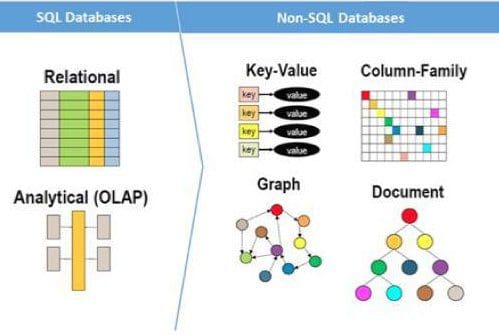

| + | A graph database is a database that can store graph data, which primarily has three types of elements: nodes, edges, and properties. A graph database is easier to understand and manage than a relational database when relationships are complex, inherited, inferred with varying degrees of confidence, and likely to change. A graph database is also more performant for certain tasks that involve traversing or querying the graph structure. | ||

| + | |||

| + | ...offer a more efficient way to model relationships and networks than relational (SQL) databases or other kinds of NoSQL databases (document, wide column, and so on). | ||

| + | |||

| + | === Types of Graph Databases === | ||

| + | Two popular types of graph databases are: Resource Description Framework (RDF)-based graph databases and Label Propagation Graph (LPG)-based graph databases. RDF represents knowledge in the form of subject-verb-object triplets. LPG represents knowledge in the form of edges, nodes, and attributes where nodes and edges can hold properties in the form of key:value pairs. | ||

| + | |||

| + | Offering: | ||

| + | |||

| + | === [https://neo4j.com/ Neo4J] === | ||

| + | <youtube>oRtVdXvtD3o</youtube> | ||

| + | === [https://aws.amazon.com/neptune/ Amazon Neptune] === | ||

| + | ...from [https://www.blazegraph.com/ Blazegraph] | ||

| + | |||

| + | <youtube>f7FSpT7jrX4</youtube> | ||

| + | |||

| + | === [https://janusgraph.org/ JanusGraph] === | ||

| + | <youtube>_3YP3QI_cYk</youtube> | ||

| + | === [https://titan.thinkaurelius.com/ TitanDB] === | ||

| + | <youtube>CvO9i3D1di8</youtube> | ||

| + | === [https://orientdb.com/ OrientDB] === | ||

| + | <youtube>kpLqfFGubKM</youtube> | ||

| + | === [https://giraph.apache.org/ Giraph] === | ||

| + | <youtube>y5WxwVZXvs4</youtube> | ||

| + | === [https://www.tigergraph.com/ TigerGraph] === | ||

| + | <youtube>ylqO-lOP9pE</youtube> | ||

| + | === [https://docs.cambridgesemantics.com/home.htm AnzoGraph] === | ||

| + | <youtube>YDI-Xb0VDrE</youtube> | ||

| + | === [https://dgraph.io/ Dgraph] === | ||

| + | - written in Go | ||

| + | |||

| + | <youtube>CjkKRbtwWXA</youtube> | ||

| + | |||

| + | === [https://www.analyticsvidhya.com/blog/2015/12/started-graphlab-python/ Dato GraphLab] === | ||

| + | |||

| + | <youtube>oRIn2vOK3Tw</youtube> | ||

| + | |||

| + | |||

| + | === Advantages and Disadvantages of Graph Databases === | ||

| + | * [https://neo4j.com/why-graph-databases Why Graph Databases? The Advantages of Using a Graph Database] | ||

| + | * [https://dzone.com/articles/what-are-the-pros-and-cons-of-using-a-graph-databa What Are the Major Advantages of Using a Graph Database?] | ||

| + | * [https://www.techtarget.com/searchdatamanagement/feature/The-top-5-graph-database-advantages-for-enterprises The top 5 graph database advantages for enterprises] | ||

| + | * [https://www.techtarget.com/searchdatamanagement/feature/Advantages-of-graph-databases-Easier-data-modeling-analytics Advantages of graph databases: Easier data modeling, analytics] | ||

| + | * [https://www.techtarget.com/searchdatamanagement/feature/Graph-database-vs-relational-database-Key-differences Graph database vs. relational database: Key differences.] | ||

| + | |||

| + | Some of the advantages of graph databases are: flexibility, expressiveness, scalability, performance, and schema evolution. | ||

| + | |||

| + | * <b>Flexibility</b>: Graph databases are flexible because they do not require a predefined schema or structure to store data. Graph databases can accommodate heterogeneous and dynamic data, as well as complex and evolving relationships². Graph databases can also handle semi-structured or unstructured data, such as text, images, or videos. | ||

| + | * <b>Expressiveness</b>: Graph databases are expressive because they can model data in a natural and intuitive way that reflects the real world. Graph databases can capture the richness and diversity of data, as well as the semantics and context of relationships. Graph databases can also support various types of queries, such as pattern matching, path finding, subgraph extraction, traversal strategies, etc. | ||

| + | * <b>Scalability</b>: Graph databases are scalable because they can handle large volumes of data and relationships without compromising performance or quality. Graph databases can distribute data across multiple nodes or clusters, and use parallel processing and caching techniques to optimize query execution². Graph databases can also scale horizontally or vertically, depending on the needs and resources. | ||

| + | * <b>Performance</b>: Graph databases are performant because they can provide fast and efficient query responses for complex and interconnected data. Graph databases use index-free adjacency, which means that each node stores a pointer to its adjacent nodes, eliminating the need for global indexes or joins. Graph databases can also leverage in-[[memory]] computing and graph algorithms to speed up query processing. | ||

| + | * <b>Schema evolution</b>: Graph databases support schema evolution because they can adapt to changing data and requirements without affecting existing data or queries. Graph databases allow adding, removing, or modifying nodes, edges, or properties without altering the whole database schema. Graph databases can also provide schema validation and migration tools to ensure data consistency and quality. | ||

| + | |||

| + | Some of the disadvantages of graph databases are: complexity, lack of standardization, maturity, and support. | ||

| + | |||

| + | * <b>Complexity</b>: Graph databases are complex because they require a different mindset and skillset from relational databases. Graph databases involve learning new concepts, such as nodes, edges, properties, labels, etc., as well as new query languages or APIs, such as SPARQL, Cypher, Gremlin, GraphQL, etc. Graph databases also require understanding the trade-offs and challenges of graph modeling and analysis. | ||

| + | * <b>Lack of standardization</b>: Graph databases lack standardization because there is no universal agreement or consensus on how to design, implement, or query graph data. Graph databases have different types (RDF-based or LPG-based), different formats (triples or key-value pairs), different languages (declarative or imperative), and different features (schema-less or schema-full). Graph databases also have different vendors and products that may not be compatible or interoperable with each other. | ||

| + | * <b>Maturity</b>: Graph databases are relatively immature compared to relational databases that have been around for decades. Graph databases are still evolving and developing new features and functionalities to meet the growing demands and expectations of users. Graph databases may also have some limitations or drawbacks that need to be addressed or resolved in the future. | ||

| + | * <b>Support</b>: Graph databases have less support than relational databases that have a large and established community of users and developers. Graph databases may have fewer resources or documentation available to help users learn or troubleshoot graph data issues. Graph databases may also have fewer tools or integrations available to work with other systems or applications. | ||

| + | |||

| + | == Graph Data Models == | ||

| + | * [https://medium.com/terminusdb/graph-fundamentals-part-2-labelled-property-graphs-ba9a8edb5dfe Graph Fundamentals | Kevin Feeney TerminusDB - Medium] | ||

| + | * [https://neo4j.com/blog/rdf-triple-store-vs-labeled-property-graph-difference/ RDF Triple Stores vs. Labeled Property Graphs: What’s the Difference? | Jesús Barrasa - Neo4j] | ||

| + | ** <i>Labeled</i> Property Graph (LPG) | ||

| + | ** [https://en.wikipedia.org/wiki/Resource_Description_Framework Resource Description Framework (RDF)] Graph | ||

| + | ** Others | ||

| + | |||

| + | https://www.kdnuggets.com/wp-content/uploads/sql-nosql-dbs.jpg | ||

| + | |||

| + | <img src="https://miro.medium.com/max/1920/1*FAK8MU1sYf6yrVpVmNQDzA.png" width="700" height="400"> | ||

| + | |||

| + | == Transformers - positional encodings specifically designed for directed graphs == | ||

| + | * [[Attention]] Mechanism ... [[Transformer]] ... [[Generative Pre-trained Transformer (GPT)]] ... [[Generative Adversarial Network (GAN)|GAN]] ... [[Bidirectional Encoder Representations from Transformers (BERT)|BERT]] | ||

| + | * [[Few Shot Learning#One-Shot Learning|One-Shot Learning]] | ||

| + | * [https://arxiv.org/abs/2302.00049 Transformers Meet Directed Graphs | S. Geisler, Y. Li, D. Mankowitz, A. Cemgil, S. Günnemann, & C. Paduraru] | ||

| + | |||

| + | A team of researchers has proposed two direction- and structure-aware positional encodings specifically designed for directed graphs. [[Embedding#AI Encoding & AI Embedding |Click here for AI encodings and AI embedding]] | ||

| + | |||

| + | The Magnetic Laplacian, a direction-aware extension of the Combinatorial Laplacian, provides the foundation for the first positional encoding that has been proposed. The provided eigenvectors capture crucial structural information while taking into consideration the directionality of edges in a graph. The transformer model becomes more cognizant of the directionality of the graph by including these eigenvectors in the positional encoding method, which enables it to successfully represent the semantics and dependencies found in directed graphs. Directional random walk encodings are the second positional encoding technique that has been suggested. Random walks are a popular method for exploring and analyzing graphs in which the model learns more about the directional structure of a directed graph by taking random walks in the graph and incorporating the walk information into the positional encodings. Given that it aids the model’s comprehension of the links and information flow inside the graph, this knowledge is used in a variety of downstream activities. The team has shared that the empirical analysis has shown how the direction- and structure-aware positional encodings have performed well in a number of downstream tasks. The correctness testing of sorting networks which is one of these tasks, entails figuring out whether a particular set of operations truly constitutes a sorting network. The suggested model outperforms the previous state-of-the-art method by 14.7%, as measured by the Open Graph Benchmark Code2, by utilizing the directionality information in the graph representation of sorting networks. - [https://www.marktechpost.com/2023/07/16/a-new-ai-research-from-deepmind-proposes-two-direction-and-structure-aware-positional-encodings-for-directed-graphs/ A New AI Research From DeepMind Proposes Two Direction And Structure-Aware Positional Encodings For Directed Graphs | Tanya Malhotra - MarkTechPost] | ||

| + | |||

| + | |||

| + | <hr><center><b><i> | ||

| + | |||

| + | Transformer models have shown great versatility in modalities, such as images, audio, video, and undirected graphs, but transformers for directed graphs still lack attention. | ||

| + | |||

| + | </i></b></center><hr> | ||

| + | |||

| + | |||

| + | Propose two direction- and structure-aware positional encodings for directed graphs: | ||

| + | # the eigenvectors of the Magnetic Laplacian - a direction-aware generalization of the combinatorial Laplacian; | ||

| + | # directional random walk encodings. | ||

| + | |||

| + | Empirically, the extra directionality information is useful in various downstream tasks, including correctness testing of sorting networks and source code understanding. | ||

| + | |||

| + | |||

| + | === Magnetic Laplacian === | ||

| + | The magnetic Laplacian is a non-negative matrix, which means that it can be used to define a graph kernel. This makes the magnetic Laplacian a more efficient tool for machine learning tasks on graphs. The term "magnetic" in the term "magnetic Laplacian" refers to the fact that the matrix captures the directional information in a graph. This is similar to the way that a magnetic field can exert a force on a charged particle, the magnetic Laplacian can exert a force on the flow of information in a graph. The Laplacian is a differential operator that is used to measure the "curvature" of a function. In the context of graphs, the Laplacian can be used to measure the connectivity of a graph. | ||

| + | |||

| + | The Laplacian is named after Pierre-Simon Laplace, a French mathematician and physicist who lived from 1749 to 1827. Laplace was one of the most important mathematicians of the 18th century, and his work had a profound impact on the development of mathematics and physics. | ||

| + | |||

| + | The magnetic potential in the definition of the magnetic Laplacian can be thought of as a magnetic field. The strength of the magnetic field is determined by the parameter q. If q is positive, then the magnetic field will encourage the flow of information from the first node to the second node. If q is negative, then the magnetic field will discourage the flow of information from the first node to the second node. | ||

| + | |||

| + | <hr><center><b><i> | ||

| + | |||

| + | A graph kernel is a function that measures the similarity between two graphs. The magnetic Laplacian can be used to define a graph kernel that takes into account the directional information in the graphs. | ||

| + | |||

| + | </i></b></center><hr> | ||

| + | |||

| + | |||

| + | The magnetic Laplacian has been used in a number of AI applications on directed graphs, including: | ||

| + | |||

| + | * <b>Node classification</b>: captures the directional information in a graph, which is important for tasks such as node classification. | ||

| + | * <b>Link prediction</b>: is the task of predicting whether or not two nodes in a graph will be connected by an edge in the future. The magnetic Laplacian can be used for link prediction by first computing the eigenvalues and eigenvectors of the magnetic Laplacian. The eigenvalues of the magnetic Laplacian can be used to measure the similarity between pairs of nodes, and the eigenvectors of the magnetic Laplacian can be used to represent the features of each node. Once the eigenvalues and eigenvectors of the magnetic Laplacian have been computed, they can be used to train a machine learning model to predict whether or not two nodes will be connected by an edge in the future. The machine learning model can be trained using a variety of algorithms, such as support vector machines, logistic regression, or neural networks. | ||

| + | * <b>Recommender systems</b>: use it to define a graph kernel. The magnetic Laplacian can be used to define a graph kernel that takes into account the directional information in a graph. This makes the magnetic Laplacian a more powerful tool for recommender systems that need to take into account the order in which users interact with items. Another way to use the magnetic Laplacian with recommender systems is to use it to learn a representation of the users and items in the system. The magnetic Laplacian can be used to learn a low-dimensional representation of the users and items that captures the directional information in the graph. This representation can then be used to make recommendations. Finally, the magnetic Laplacian can be used to improve the performance of other recommender systems. For example, the magnetic Laplacian can be used to regularize a collaborative filtering algorithm. This can help to improve the performance of the algorithm by preventing it from overfitting the data. | ||

| + | * <b>Community detection</b>: The Laplacian can be used to detect communities in a graph. | ||

| + | * <b>Forecasting</b>: the graph kernel more powerful for tasks such as forecasting, where the direction of the edges in the graph is important. Another way to use the magnetic Laplacian with forecasting is to use it to train a machine learning model. The magnetic Laplacian can be used to represent the structure of the graph, and the machine learning model can be trained to predict the future state of the graph. This approach has been shown to be effective for forecasting a variety of phenomena, such as traffic flow and social network dynamics. | ||

| + | * <b>Diffusion of information</b>: The Laplacian can be used to analyze how information spreads through a graph. | ||

| + | * <b>Social network analysis</b>: can be used to identify influential nodes, detect communities, predict links, and analyze the diffusion of information in social networks. | ||

| + | ** Identify influential nodes: The magnetic Laplacian can be used to identify nodes that are important in a social network. This can be done by finding the nodes that have the highest eigenvalues of the magnetic Laplacian. These nodes are likely to be influential because they have a lot of connections and they are able to exert a lot of influence over other nodes in the network. | ||

| + | ** Detect communities: The magnetic Laplacian can be used to detect communities in a social network. This can be done by finding the eigenvectors of the magnetic Laplacian that correspond to the smallest eigenvalues. These eigenvectors will represent the different communities in the network. | ||

| + | ** Predict links: The magnetic Laplacian can be used to predict which nodes are likely to be connected in a social network. This can be done by finding the nodes that have the highest correlation with each other in the magnetic Laplacian. These nodes are likely to be connected because they have similar properties and they are likely to interact with each other in the future. | ||

| + | ** In addition to these specific applications, the magnetic Laplacian can also be used for a variety of other tasks in social network analysis. For example, it can be used to: | ||

| + | *** Analyze the diffusion of information: The magnetic Laplacian can be used to analyze how information spreads through a social network. This can be done by tracking the flow of information through the network using the magnetic Laplacian. | ||

| + | *** Study the evolution of social networks: The magnetic Laplacian can be used to study how social networks evolve over time. This can be done by tracking the changes in the eigenvalues and eigenvectors of the magnetic Laplacian over time. | ||

| + | |||

| + | The magnetic Laplacian is a powerful tool for AI on directed graphs. It is able to capture the directional information in a graph, which is important for a wide variety of tasks. As a result, the magnetic Laplacian is becoming increasingly popular in AI research. In addition, the magnetic Laplacian is a non-negative matrix, which means that it can be used to define a graph kernel. This makes the magnetic Laplacian a more efficient tool for machine learning tasks on graphs. | ||

| + | |||

| + | <center><img src="https://www.researchgate.net/publication/343807278/figure/fig1/AS:927145446633472@1598060108357/The-framework-of-GAGCN.png" width="600"></center> | ||

| + | |||

| + | == Graph Query Programming == | ||

| + | Graph query programming is a way of writing queries to retrieve or manipulate data from a graph database. Graph query programming languages can be declarative or imperative. Declarative languages specify what data to retrieve without specifying how to retrieve it. Imperative languages specify both what and how to retrieve or manipulate data. Some features of graph query programming languages are: pattern matching, filtering, projection, aggregation, ordering, grouping, path finding, subgraph extraction, traversal strategies. Some challenges of graph query programming languages are: expressiveness, efficiency, optimization, standardization. | ||

| + | |||

| + | <youtube>4fMZnunTRF8</youtube> | ||

| + | <youtube>5noi2VM9F-g</youtube> | ||

| + | |||

| + | === Examples of Graph Query Programming Languages === | ||

| + | * [https://www.w3.org/standards/semanticweb/ Semantic Web] technologies: | ||

| + | * [https://www.w3.org/OWL/ Web Ontology Language (OWL) | W3C] designed to represent rich and complex knowledge about things, groups of things, and relations between things | ||

| + | * [https://www.w3.org/RDF/ Resource Description Framework (RDF) | W3C] a standard model for data interchange on the Web | ||

| + | * [https://en.wikipedia.org/wiki/SPARQL SPARQL Protocol and RDF Query Language] queries against what can loosely be called "key-value" data or, more specifically, data that follow the RDF specification | ||

| + | |||

| + | Some examples of declarative graph query programming languages are: SPARQL (for RDF-based graph databases), Cypher (for LPG-based graph databases), Gremlin (for both RDF-based and LPG-based graph databases), GraphQL (for any type of graph database). Some examples of imperative graph query programming languages are: Java (for any type of graph database), Python (for any type of graph database), Neo4j (for LPG-based graph databases). | ||

| + | |||

| + | ===== <span id="GraphQL"></span>GraphQL ===== | ||

| + | [https://www.youtube.com/results?search_query=GraphQL+Graph+Query+Language Youtube search...] | ||

| + | [https://www.google.com/search?q=GraphQL+Graph+Query+Language ...Google search] | ||

| + | |||

| + | * [https://graphql.org/ GraphQL] | ||

* [https://github.com/graphql/graphiql GraphiQL] is the reference implementation of GraphQL IDE, an official project under the GraphQL Foundation | * [https://github.com/graphql/graphiql GraphiQL] is the reference implementation of GraphQL IDE, an official project under the GraphQL Foundation | ||

* [[Git - GitHub and GitLab#GitHub GraphQL API| GitHub GraphQL API]] | * [[Git - GitHub and GitLab#GitHub GraphQL API| GitHub GraphQL API]] | ||

| + | * [[Graph Convolutional Network (GCN), Graph Neural Networks (Graph Nets), Geometric Deep Learning]] | ||

GraphQL is a query language for APIs and a runtime for fulfilling those queries with your existing data. GraphQL provides a complete and understandable description of the data in your API, gives clients the power to ask for exactly what they need and nothing more, makes it easier to evolve APIs over time, and enables powerful developer tools. GraphQL queries access not just the properties of one resource but also smoothly follow references between them. While typical REST APIs require loading from multiple URLs, GraphQL APIs get all the data your app needs in a single request. Apps using GraphQL can be quick even on slow mobile network connections. | GraphQL is a query language for APIs and a runtime for fulfilling those queries with your existing data. GraphQL provides a complete and understandable description of the data in your API, gives clients the power to ask for exactly what they need and nothing more, makes it easier to evolve APIs over time, and enables powerful developer tools. GraphQL queries access not just the properties of one resource but also smoothly follow references between them. While typical REST APIs require loading from multiple URLs, GraphQL APIs get all the data your app needs in a single request. Apps using GraphQL can be quick even on slow mobile network connections. | ||

| Line 19: | Line 484: | ||

<youtube>ed8SzALpx1Q</youtube> | <youtube>ed8SzALpx1Q</youtube> | ||

| − | == With Python == | + | ====== With Python ====== |

<youtube>DWgD5iloSHs</youtube> | <youtube>DWgD5iloSHs</youtube> | ||

<youtube>LxoKPGvMXf0</youtube> | <youtube>LxoKPGvMXf0</youtube> | ||

<youtube>cKTbHph-wlk</youtube> | <youtube>cKTbHph-wlk</youtube> | ||

<youtube>i7HVyEJbvxo</youtube> | <youtube>i7HVyEJbvxo</youtube> | ||

| + | <youtube>nPQE5B51DQ8</youtube> | ||

| + | <youtube>MNHc0j8PDnE</youtube> | ||

| + | |||

| + | ===== Cipher ===== | ||

| + | [https://www.youtube.com/results?search_query=Cypher+Cipher+Neo4j+Graph+Query+Language Youtube search...] | ||

| + | [https://www.google.com/search?q=Cypher+Cipher+Neo4j+Graph+Query+Language ...Google search] | ||

| + | |||

| + | * [https://www.opencypher.org openCypher.org] - Originally contributed by Neo4j; transition planning from openCypher implementations to the developing graph query language standard, GQL | ||

| + | |||

| + | <youtube>l76udM3wB4U</youtube> | ||

| + | <youtube>pMjwgKqMzi8</youtube> | ||

| + | |||

| + | ===== Graph Query Language (GQL) ===== | ||

| + | [https://www.youtube.com/results?search_query=GQL+Graph+Query+Language Youtube search...] | ||

| + | [https://www.google.com/search?q=GQL+Graph+Query+Language ...Google search] | ||

| + | |||

| + | * [https://graphdatamodeling.com/Graph%20Data%20Modeling/GraphDataModeling/page/PropertyGraphs.html Property Graphs Explained | Thomas Frisendal] | ||

| + | |||

| + | Will enable SQL users to use property graph style queries on top of SQL tables. GQL draws heavily on existing languages. The main inspirations have been Cypher (now with over ten implementations, including six commercial products), Oracle's PGQL and SQL itself, as well as new extensions for read-only property graph querying to SQL. [https://www.linkedin.com/pulse/sql-now-gql-alastair-green/?trackingId=JHusYOenSoa23wu2xoAOvw%3D%3D SQL ... and now GQL | Alastair Green] | ||

| + | |||

| + | <youtube>Iq518iZXxA4</youtube> | ||

| + | |||

| + | ===== Gremlin ===== | ||

| + | [https://www.youtube.com/results?search_query=Gremlin+Graph+TinkerPop Youtube search...] | ||

| + | [https://www.google.com/search?q=Gremlin+Graph+TinkerPop ...Google search] | ||

| + | |||

| + | * [https://en.wikipedia.org/wiki/Gremlin_(programming_language) Wikipedia] | ||

| + | * [https://www.slideshare.net/calebwjones/intro-to-graph-databases-using-tinkerpops-titandb-and-gremlin Intro to Graph Databases Using Tinkerpop, TitanDB, and Gremlin | Caleb Jones] | ||

| + | * [https://tinkerpop.apache.org/gremlin.html Gremlin] Graph Traversal Machine and Language | ||

| + | * [https://tinkerpop.apache.org/ TinkerPop] | ||

| + | |||

| + | is a graph traversal language and virtual machine developed by Apache TinkerPop of the Apache Software Foundation. Gremlin works for both OLTP-based graph databases as well as OLAP-based graph processors. As an explanatory analogy, Apache TinkerPop and Gremlin are to graph databases what the JDBC and SQL are to relational databases. Likewise, the Gremlin traversal machine is to graph computing as what the Java virtual machine is to general purpose computing. | ||

| + | |||

| + | <youtube>mZmVnEzsDnY</youtube> | ||

| + | |||

| + | ===== Oracle Property Graph (PGQL) ===== | ||

| + | [https://www.youtube.com/results?search_query=Oracle+Property+Graph+PGQL Youtube search...] | ||

| + | [https://www.google.com/search?q=Oracle+Property+Graph+PGQL ...Google search] | ||

| + | |||

| + | * [https://pgql-lang.org/ Property Graph Query Language] is a query language built on top of SQL, bringing graph pattern matching capabilities to existing SQL users as well as to new users who are interested in graph technology but who do not have an SQL background. | ||

| + | |||

| + | <youtube>HDuLYiTimMo</youtube> | ||

| + | |||

| + | ===== SPARQL ===== | ||

| + | [https://www.youtube.com/results?search_query=SPARQL+Graph+RDF Youtube search...] | ||

| + | [https://www.google.com/search?q=SPARQL+Graph+RDF ...Google search] | ||

| + | |||

| + | * [https://en.wikipedia.org/wiki/SPARQL SPARQL] a query language for [https://en.wikipedia.org/wiki/Resource_Description_Framework RDF] databases | ||

| + | |||

| + | <youtube>RoogS47Fp8o</youtube> | ||

| + | <youtube>A2kkR1-qn5k</youtube> | ||

| + | |||

| + | === Graph Algorithms === | ||

| + | Graph algorithms are algorithms that operate on graphs or use graphs as data structures. Graph algorithms can be used for various purposes such as finding shortest paths, detecting communities or clusters, measuring centrality or importance, identifying patterns or anomalies. | ||

| + | |||

| + | <youtube>09_LlHjoEiY</youtube> | ||

| + | <youtube>RqQBh_Wbcu4</youtube> | ||

| + | <youtube>fPH-WJ-kEpY</youtube> | ||

| + | <youtube>_nHAa3j8xTE</youtube> | ||

| + | |||

| + | ==== Categories of Graph Algorithms ==== | ||

| + | Graph algorithms can be categorized into different types based on their functionality or complexity. Some examples are: traversal algorithms (e.g., breadth-first search), path | ||

| + | |||

| + | ===== Breadth First Search (BFS) ===== | ||

| + | <youtube>E_V71Ejz3f4</youtube> | ||

| + | ===== Depth-First Search Algorithm (DFS) ===== | ||

| + | <youtube>tlPuVe5Otio</youtube> | ||

| + | ===== Dijkstras Algorithm for Single-Source Shortest Path ===== | ||

| + | <youtube>U9Raj6rAqqs</youtube> | ||

| + | <youtube>k1kLCB7AZbM</youtube> | ||

| + | ===== Prims Algorithm for Minimum Spanning Trees ===== | ||

| + | <youtube>MaaSoZUEoos</youtube> | ||

| + | ===== Kruskals Algorithm for Minimum Spanning Trees ===== | ||

| + | <youtube>Rc6SIG2Q4y0</youtube> | ||

| + | ===== Bellman-Ford Single-Source Shortest-Path Algorithm ===== | ||

| + | <youtube>dp-Ortfx1f4</youtube> | ||

| + | <youtube>vzBtJOdoRy8</youtube> | ||

| + | ===== Floyd Warshall Algorithm ===== | ||

| + | <youtube>KQ9zlKZ5Rzc</youtube> | ||

| + | <youtube>B06q2yjr-Cc</youtube> | ||

| + | |||

| + | = <span id="Cybersecurity - Visualization"></span>Cybersecurity - Visualization = | ||

| + | [https://www.youtube.com/results?search_query=Cyber+Cybersecurity+Graph+Visualization+~tool+ai YouTube search...] | ||

| + | [https://www.google.com/search?q=Cyber+Cybersecurity+Graph+Visualization+~tool+ai ...Google search] | ||

| + | |||

| + | * [[Cybersecurity]] | ||

| + | * [https://www.graphistry.com/use-cases/threat-hunting Threat Hunting | Graphistry] | ||

| + | |||

| + | {|<!-- T --> | ||

| + | | valign="top" | | ||

| + | {| class="wikitable" style="width: 550px;" | ||

| + | || | ||

| + | <youtube>Dhh1Dfm9Eao</youtube> | ||

| + | <b>Supercharged graph visualization for cyber security | ||

| + | </b><br>Cyber security analysts face data overload. They work with information on a massive scale, generated at millisecond levels of resolution detailing increasingly complex attacks. To make sense of this data, analysts need an intuitive and engaging way to explore it: that’s where graph visualization plays a role. Using KeyLines 3.0 to visualize your cyber data at scale During this session, Corey will show examples of how graph visualization can help users explore, understand and derive insight from real-world cyber security datasets. You will learn: - How graph visualization can help you extract insight from cyber data - How to visualize your cyber security graph data at scale using WebGL - Why KeyLines 3.0 is the go-to tool for large-scale cyber graph visualization. This session is suitable for a non-technical audience. | ||

| + | |} | ||

| + | |<!-- M --> | ||

| + | | valign="top" | | ||

| + | {| class="wikitable" style="width: 550px;" | ||

| + | || | ||

| + | <youtube>narU7ej4yjE</youtube> | ||

| + | <b>Finding Needles in a Needlestack with Graph Analytics and [[Predictive Analytics|Predictive Models]] | ||

| + | </b><br>RSA Conference Kevin Mahaffey, Chief Technology Officer, Lookout Tim Wyatt, Director, Security Engineering, Lookout Good or bad? Security systems answer this question daily: good code vs. malware, legit clients vs. API abuse, etc. In the past, preset rules and heuristics have often been the first (and only) line of defense. In this talk, we'll share learnings you can take home from our experience using big datasets, graph analytics, and [[Predictive Analytics|predictive models]] to secure millions of mobile devices around the world. | ||

| + | |} | ||

| + | |}<!-- B --> | ||

| + | {|<!-- T --> | ||

| + | | valign="top" | | ||

| + | {| class="wikitable" style="width: 550px;" | ||

| + | || | ||

| + | <youtube>Z2tuadJQMow</youtube> | ||

| + | <b>Applying graph visualization to cyber-security analysis | ||

| + | </b><br>Security information and event management/log management (SIEM/LM) evolve continuously to match new security threats. Nevertheless, these solutions often lack appropriate forensics tools to investigate the massive volumes of data they generate. This makes it difficult for security analysts to quickly and efficiently extract the information they need. Modeling this data into a graph database and adding a graph visualization solution like Linkurious on top of the company’s security dashboard can solve this problem. In this webinar, based on a real-world example, you will learn how Linkurious can help: | ||

| + | detect and investigate visually suspicious patterns using the power of graph; perform advanced post attack forensics analysis and locate vulnerabilities; | ||

| + | work collaboratively and locate suspicious IP’s using the geospatial localization feature. | ||

| + | |} | ||

| + | |<!-- M --> | ||

| + | | valign="top" | | ||

| + | {| class="wikitable" style="width: 550px;" | ||

| + | || | ||

| + | <youtube>LBfRnVVdKWo</youtube> | ||

| + | <b>RAPIDS Academy | ||

| + | </b><br>Graphistry Home | ||

| + | |} | ||

| + | |}<!-- B --> | ||

| + | |||

| + | == The Trick That Solves Rubik’s Cubes and Breaks Ciphers (Meet in the Middle) == | ||

| + | [https://www.youtube.com/results?search_query=Cipher+Rubik+Cube+Graph Youtube search...] | ||

| + | [https://www.google.com/search?q=Cipher+Rubik+Cube+Graph ...Google search] | ||

| + | |||

| + | <youtube>wL3uWO-KLUE</youtube> | ||

Latest revision as of 15:58, 28 April 2024

Youtube search... ...Google search

- Excel ... Documents ... Database; Vector & Relational ... Graph ... LlamaIndex

- Analytics ... Visualization ... Graphical Tools ... Diagrams & Business Analysis ... Requirements ... Loop ... Bayes ... Network Pattern

- Agents ... Robotic Process Automation ... Assistants ... Personal Companions ... Productivity ... Email ... Negotiation ... LangChain

- Perspective ... Context ... In-Context Learning (ICL) ... Transfer Learning ... Out-of-Distribution (OOD) Generalization

- Causation vs. Correlation ... Autocorrelation ...Convolution vs. Cross-Correlation (Autocorrelation)

- Implementing Data Governance with Knowledge Graphs

- Architectures for AI ... Generative AI Stack ... Enterprise Architecture (EA) ... Enterprise Portfolio Management (EPM) ... Architecture and Interior Design

- Artificial General Intelligence (AGI) to Singularity ... Curious Reasoning ... Emergence ... Moonshots ... Explainable AI ... Automated Learning

- Telecommunications ... Computer Networks ... 5G ... Satellite Communications ... Quantum Communications ... Agents ... AI Broadcast; Radio, Stream, TV

- Graph Convolutional Network (GCN), Graph Neural Networks (Graph Nets), Geometric Deep Learning

- Embedding ... Fine-tuning ... RAG ... Search ... Clustering ... Recommendation ... Anomaly Detection ... Classification ... Dimensional Reduction. ...find outliers

- Data Science ... Governance ... Preprocessing ... Exploration ... Interoperability ... Master Data Management (MDM) ... Bias and Variances ... Benchmarks ... Datasets

- Connected Papers using Semantic Scholar to explore connected papers in a visual graph

- Knowledge graph | Wikipedia

- An Introduction to Knowledge Graphs | SAIL Blog

- A Beginner's Guide to Graph Analytics and Deep Learning | Chris Nicholson - A.I. Wiki pathmind

- 7 Ways Your Data Is Telling You It’s a Graph | Karen Lopez - InfoAdvisors - Neo4j

- Linked Data Patterns book | leigh Dodds and Ian Davis

- A Guide To Knowledge Graphs | Mohit Mayank - TOPBOTS

- Graph databases and knowledge graphs with examples | Resolute

- What is a knowledge graph and how are they changing data analytics?

- When to use graph databases, ontologies, and knowledge graphs

Contents

- 1 Graph Use Cases

- 2 Knowledge Graph with Large Language Models (LLM)

- 3 Knowledge Graph

- 4 Knowledge Graph Computing

- 4.1 Graph Databases

- 4.2 Graph Data Models

- 4.3 Transformers - positional encodings specifically designed for directed graphs

- 4.4 Graph Query Programming

- 4.4.1 Examples of Graph Query Programming Languages

- 4.4.2 Graph Algorithms

- 4.4.2.1 Categories of Graph Algorithms

- 4.4.2.1.1 Breadth First Search (BFS)

- 4.4.2.1.2 Depth-First Search Algorithm (DFS)

- 4.4.2.1.3 Dijkstras Algorithm for Single-Source Shortest Path

- 4.4.2.1.4 Prims Algorithm for Minimum Spanning Trees

- 4.4.2.1.5 Kruskals Algorithm for Minimum Spanning Trees

- 4.4.2.1.6 Bellman-Ford Single-Source Shortest-Path Algorithm