Difference between revisions of "Ethics"

*** [https://www.nextgov.com/emerging-tech/2020/01/white-house-proposes-light-touch-regulatory-approach-artificial-intelligence/162276/ U.S. 10 Principles - White House Proposes 'Light-Touch Regulatory Approach' for Artificial Intelligence | Brandi Vincent - Nextgov]

*** [https://www.xinhuanet.com/english/2019-05/26/c_138091724.htm Beijing publishes AI ethical standards, calls for int'l cooperation | Xinhua]

Revision as of 15:11, 20 April 2023

- Case Studies

- Policy ... Policy vs Plan ... Constitutional AI ... Trust Region Policy Optimization (TRPO) ... Policy Gradient (PG) ... Proximal Policy Optimization (PPO)

- Risk, Compliance and Regulation ... Ethics ... Privacy ... Law ... AI Governance ... AI Verification and Validation

- Defense

- Responsible AI Champions Pilot | Department of Defense Joint Artificial Intelligence Center (JAIC) ...DoD AI Principles ...Themes ...Tactics

- Implementing Responsible Artificial Intelligence in the Department of Defense May 26, 2021

- Singularity ... Moonshots ... Emergence ... Explainable / Interpretable AI ... AGI ... Inside Out - Curious Optimistic Reasoning ... Automated Learning

- Other Challenges in Artificial Intelligence

- Bias and Variances

- Partnership on AI brings together diverse, global voices to realize the promise of artificial intelligence

- Amazon joins Microsoft in calling for regulation of facial recognition tech | Saqib Shah - engadget

- The Internet needs new rules. Let’s start in these four areas. | Mark Zuckerberg

- How Big Tech funds the debate on AI ethics | Oscar Williams - NewStatesman and NS Tech

- Europe is making AI rules now to avoid a new tech crisis | Ivana Kottasová - CNN Business

- OECD members, including U.S., back guiding principles to make AI safer | Leigh Thomas - Reuters

- 3 Practical Solutions to Offset Automation’s Impact on Work | Moran Cerf, Ryan Burke and Scott Payne - Singularity Hub

- EU backs AI regulation while China and US favour technology | Siddharth Venkataramakrishnan - The Financial Times Limited

- Could tough new rules to regulate big tech backfire? | Harry de Quetteville & Matthew Field - The Telegraph

- Don’t let industry write the rules for AI | Yochai Benkler - Nature

- The Algorithmic Accountability Act of 2019: Taking the Right Steps Toward AI Success | Colin Priest - DataRobot

Many efforts are underway to address the ethical issues raised by artificial intelligence. Some focus on developing ethical guidelines for the development and use of AI, while others focus on technical solutions to mitigate its risks. Guidelines and technical solutions are only part of the effort: it is also important to hold open and transparent discussions about the potential risks and benefits of AI, and to involve stakeholders from all sectors of society in the development of AI technologies.

Values, Rights, & Religion

Montreal Declaration for Responsible AI

- Montreal AI Ethics Institute creating tangible and applied technical and policy research in the ethical, safe, and inclusive development of AI.

- Montreal Declaration for Responsible AI

One effort to develop ethical guidelines for AI is the Montreal Declaration for Responsible AI. The declaration was developed by a group of experts from around the world in 2018. It calls for the development of AI that is beneficial to humanity and that respects human rights and dignity.

Partnership on AI

The Partnership on AI (PAI) is a non-profit coalition committed to the responsible use of artificial intelligence. It researches best practices for artificial intelligence systems and educates the public about AI. It is also one of the efforts underway to develop technical solutions to mitigate the risks of AI. The partnership has developed a set of AI principles, and is working on projects to address issues such as bias in AI systems and the safety of autonomous vehicles.

Publicly announced September 28, 2016, its founding members are Amazon, Facebook, Google, DeepMind, Microsoft, and IBM, with interim co-chairs Eric Horvitz of Microsoft Research and Mustafa Suleyman of DeepMind. Apple joined the consortium as a founding member in January 2017, and in the same month Tom Gruber, Apple's head of advanced development for Siri, joined the Partnership on AI's board. In October 2017, Terah Lyons joined the Partnership on AI as the organization's founding executive director. By 2019, more than 100 partners from academia, civil society, industry, and nonprofits were member organizations.

The PAI's mission is to promote the beneficial use of AI through research, education, and public engagement. The PAI works to ensure that AI is developed and used in a way that is safe, ethical, and beneficial to society.

The PAI's work is guided by a set of AI principles, which were developed by the PAI's members and endorsed by the PAI's board of directors. The principles are:

- AI should be developed and used for beneficial purposes.

- AI should be used in a way that respects human rights and dignity.

- AI should be developed and used in a way that is safe and secure.

- AI should be developed and used in a way that is fair and unbiased.

- AI should be developed and used in a way that is transparent and accountable.

- AI should be developed and used in a way that is understandable and interpretable.

- AI should be developed and used in a way that is aligned with societal values.

The PAI's work is divided into four areas:

- Research: The PAI supports research on the societal and ethical implications of AI.

- Education: The PAI provides educational resources on AI to the public.

- Public engagement: The PAI engages with the public about AI through events, publications, and other activities.

- Policy: The PAI works to develop and promote policies that promote the beneficial use of AI.

The PAI is a valuable resource for anyone who is interested in the responsible use of artificial intelligence. The PAI's work is helping to ensure that AI is developed and used in a way that is safe, ethical, and beneficial to society.

Asilomar AI Principles

- AI Principles | Future of Life Institute

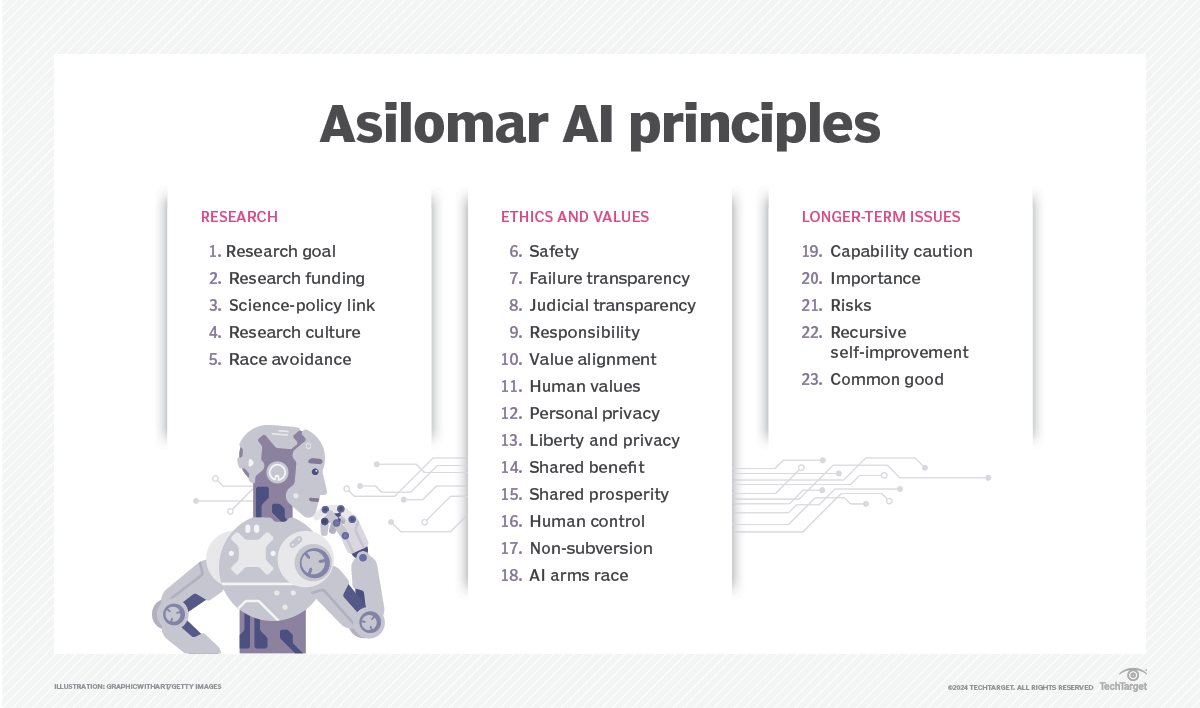

- Asilomar AI Principles | Alexander Gillis - WhatIs.com ... Asilomar AI Principles are 23 guidelines for the research and development of artificial intelligence (AI). The Asilomar Principles outline developmental issues, ethics and guidelines for the development of AI, with the goal of guiding the development of beneficial AI. The tenets were created at the Asilomar Conference on Beneficial AI in 2017 in Pacific Grove, Calif. The conference was organized by the Future of Life Institute.

One of the most well-known efforts to develop ethical guidelines for AI is the Asilomar AI Principles. These principles were developed by a group of experts in AI, ethics, and law in 2017. The principles outline a set of values that should guide the development and use of AI, including safety, transparency, accountability, and fairness.

Debating
