Difference between revisions of "Bidirectional Encoder Representations from Transformers (BERT)"

m |

m |

||

| Line 24: | Line 24: | ||

* [[Attention]] Mechanism/[[Transformer]] Model | * [[Attention]] Mechanism/[[Transformer]] Model | ||

** [[Generative Pre-trained Transformer (GPT)]]2/3 | ** [[Generative Pre-trained Transformer (GPT)]]2/3 | ||

| − | * [[Generative AI]] ... [[OpenAI]]'s [[ChatGPT]] ... [[Perplexity]] ... [[Microsoft]]'s [[Bing]] ... [[You]] ...[[Google]]'s [[Bard]] ... [[Baidu]]'s [[Ernie]] | + | * [[Generative AI]] ... [[Conversational AI]] ... [[OpenAI]]'s [[ChatGPT]] ... [[Perplexity]] ... [[Microsoft]]'s [[Bing]] ... [[You]] ...[[Google]]'s [[Bard]] ... [[Baidu]]'s [[Ernie]] |

** [https://www.technologyreview.com/2023/02/08/1068068/chatgpt-is-everywhere-heres-where-it-came-from/ ChatGPT is everywhere. Here’s where it came from | Will Douglas Heaven - MIT Technology Review] | ** [https://www.technologyreview.com/2023/02/08/1068068/chatgpt-is-everywhere-heres-where-it-came-from/ ChatGPT is everywhere. Here’s where it came from | Will Douglas Heaven - MIT Technology Review] | ||

* [[Transformer-XL]] | * [[Transformer-XL]] | ||

Revision as of 12:11, 15 April 2023

Youtube search... ...Google search

- Assistants ... Hybrid Assistants ... Agents ... Negotiation ... HuggingGPT ... LangChain

- Natural Language Processing (NLP) ...Generation ...LLM ...Tools & Services

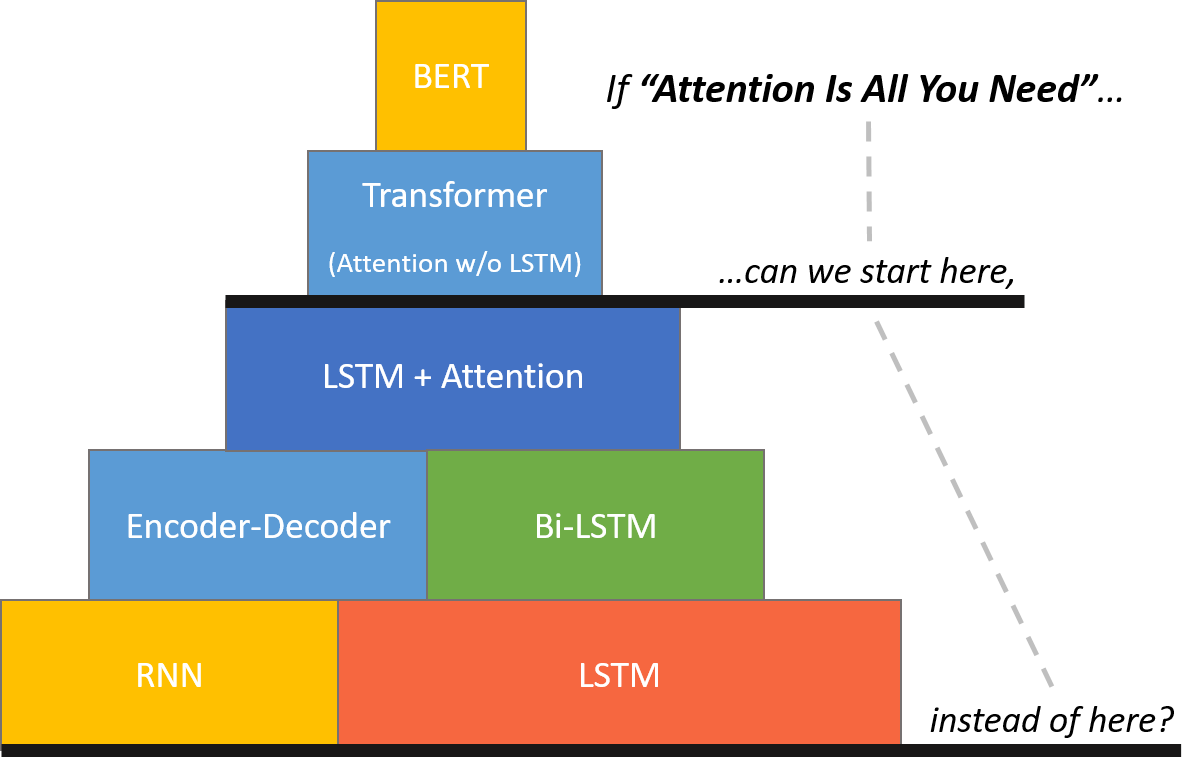

- Attention Mechanism ...Transformer Model ...Generative Pre-trained Transformer (GPT)

- SMART - Multi-Task Deep Neural Networks (MT-DNN)

- Deep Distributed Q Network Partial Observability

- TaBERT

- Google is improving 10 percent of searches by understanding language context - Say hello to BERT | Dieter Bohn - The Verge ...the old Google search algorithm treated that sentence as a “Bag-of-Words (BoW)”

- Google AI’s ALBERT claims top spot in multiple NLP performance benchmarks | Khari Johnson - VentureBeat

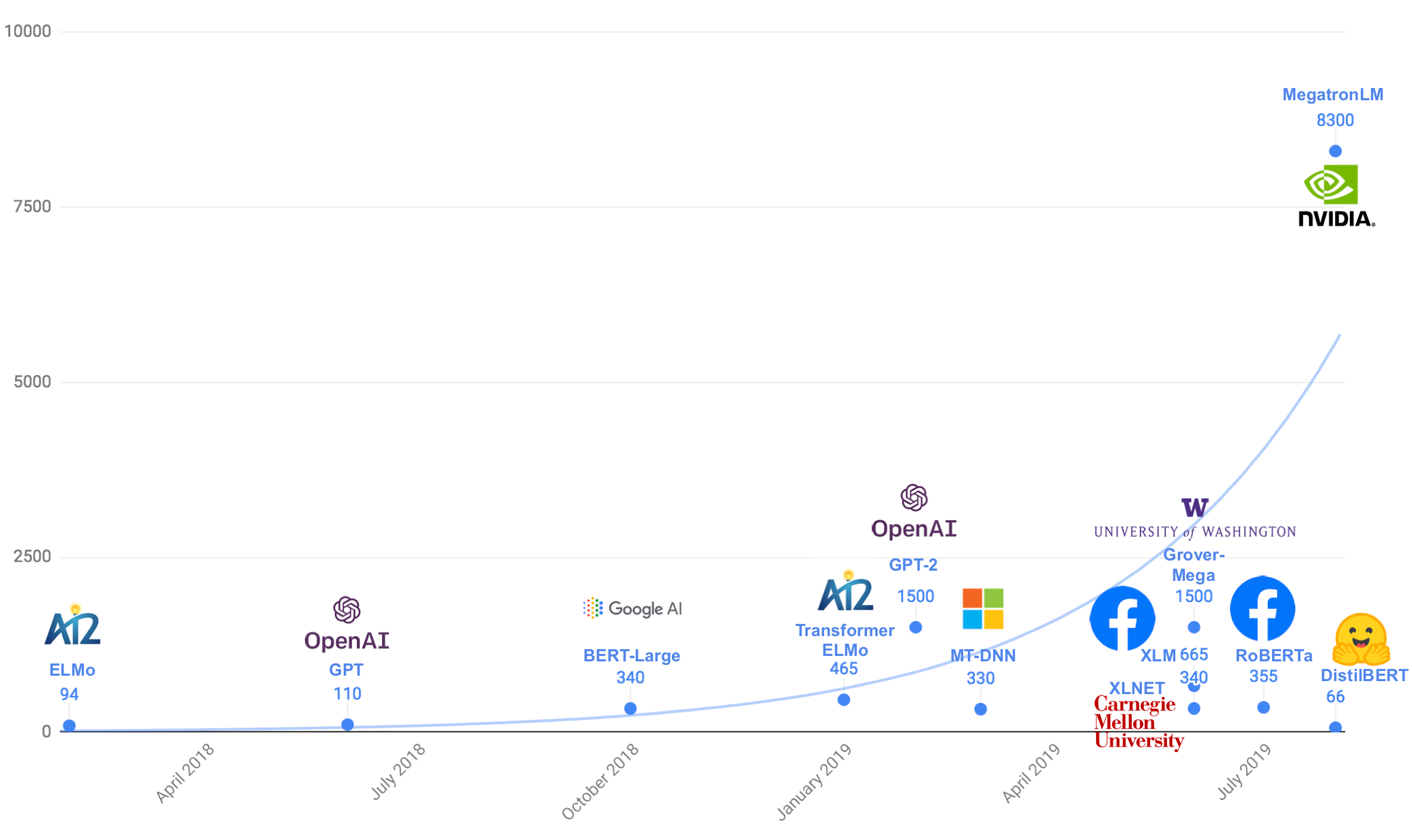

- RoBERTa:

- RoBERTa: A Robustly Optimized BERT Pretraining Approach | Y. Li, M. Ott, N. Goyal, J. Du, M. Joshi, D. Chen, O. Levy, M. Lewis, L. Zettlemoyer, and V. Stoyanov

- RoBERTa: A Robustly Optimized BERT Pretraining Approach | GitHub - iterates on BERT's pretraining procedure, including training the model longer, with bigger batches over more data; removing the next sentence prediction objective; training on longer sequences; and dynamically changing the masking pattern applied to the training data.

- Facebook AI’s RoBERTa improves Google’s BERT pretraining methods | Khari Johnson - VentureBeat

- Google's BERT - built on ideas from ULMFiT, ELMo, and OpenAI

- Attention Mechanism/Transformer Model

- Generative AI ... Conversational AI ... OpenAI's ChatGPT ... Perplexity ... Microsoft's Bing ... You ...Google's Bard ... Baidu's Ernie

- Transformer-XL

- Microsoft makes Google’s BERT NLP model better | Khari Johnson - VentureBeat

- Watch me Build a Finance Startup | Siraj Raval

- Smaller, faster, cheaper, lighter: Introducing DistilBERT, a distilled version of BERT | Victor Sanh - Medium

- TinyBERT: Distilling BERT for Natural Language Understanding | X. Jiao, Y. Yin, L. Shang, X. Jiang, X. Chen, L. Li, F. Wang, and Q. Liu researchers at Huawei produces a model called TinyBERT that is 7.5 times smaller and nearly 10 times faster than the original. It also reaches nearly the same language understanding performance as the original.

- Understanding BERT: Is it a Game Changer in NLP? | Bharat S Raj - Towards Data Science

- Allen Institute for Artificial Intelligence, or AI2’s Aristo AI system finally passes an eighth-grade science test | Alan Boyle - GeekWire

- 7 Leading Language Models for NLP in 2020 | Mariya Yao - TOPBOTS

- BERT Inner Workings | George Mihaila - TOPBOTS

BERT Research | Chris McCormick

- BERT Research | Chris McCormick

- ChrisMcCormickAI online education