Neural Architecture

- Artificial Intelligence (AI) ... Machine Learning (ML) ... Deep Learning ... Neural Network ... Reinforcement ... Learning Techniques

- Hierarchical Temporal Memory (HTM)

- Codeless Options, Code Generators, Drag n' Drop

- Artificial General Intelligence (AGI) to Singularity ... Curious Reasoning ... Emergence ... Moonshots ... Explainable AI ... Automated Learning

- Auto Keras

- Symbiotic Intelligence ... Bio-inspired Computing ... Neuroscience ... Connecting Brains ... Nanobots ... Molecular ... Neuromorphic ... Evolutionary/Genetic

- Hyperparameters Optimization

- Model Search

- Google AutoML

- MIT’s AI can train neural networks faster than ever before | Christine Fisher - Engadget

Neural Architecture Search (NAS)

- Literature on Neural Architecture Search | AutoML.org

- Awesome NAS; a curated list

- Neural Architecture Search (NAS) with Reinforcement Learning | Wikipedia

- Neural Architecture Search (NAS) with Evolution | Wikipedia

- Multi-objective Neural architecture search | Wikipedia

- Neural Architecture Search for Deep Face Recognition | Ning Zhu

An alternative to manual design is “neural architecture search” (NAS), a series of machine learning techniques that can help discover optimal neural networks for a given problem. Neural architecture search is a big area of research and holds a lot of promise for future applications of deep learning. Need to find the best AI model for your problem? Try neural architecture search | Ben Dickson - TDW

NAS algorithms are efficient problem solvers ... What is neural architecture search (NAS)? | Ben Dickson - TechTalks

Various approaches to Neural Architecture Search (NAS) have designed networks that are on par with, or even outperform, hand-designed architectures. Methods for NAS can be categorized according to the search space, search strategy, and performance estimation strategy used:

- The search space defines which types of artificial neural networks (ANNs) can, in principle, be designed and optimized.

- The search strategy defines which strategy is used to find optimal ANNs within the search space.

- Obtaining the performance of an ANN is costly, because it requires training the ANN first. Therefore, performance estimation strategies are used to obtain less costly estimates of a model's performance. Neural Architecture Search | Wikipedia
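The three components above can be sketched in a few lines of Python. This is a minimal, hypothetical example, not a real NAS system: the search space is a toy grid of MLP settings, the search strategy is plain random search (a simple baseline, rather than the reinforcement learning or evolutionary strategies linked above), and `toy_proxy_score` is an invented stand-in for a real performance estimate such as a short training run. All names (`SEARCH_SPACE`, `sample_architecture`, `count_parameters`, `toy_proxy_score`, `random_search`) are illustrative.

```python
import math
import random
from typing import Any, Callable, Dict, List, Optional, Tuple

# Hypothetical architecture encoding: a dict of design choices.
Architecture = Dict[str, Any]

# 1. Search space: every architecture the search is allowed to propose.
#    These choices (a plain MLP's depth, width and activation) are illustrative.
SEARCH_SPACE: Dict[str, List[Any]] = {
    "num_layers": [1, 2, 3, 4],
    "width": [16, 32, 64, 128],
    "activation": ["relu", "tanh"],
}


def sample_architecture(rng: random.Random) -> Architecture:
    """2. Search strategy (plain random search): pick each choice uniformly."""
    return {name: rng.choice(choices) for name, choices in SEARCH_SPACE.items()}


def count_parameters(arch: Architecture, in_dim: int = 10, out_dim: int = 1) -> int:
    """Weights plus biases of a fully connected stack in_dim -> hidden layers -> out_dim."""
    dims = [in_dim] + [arch["width"]] * arch["num_layers"] + [out_dim]
    return sum(a * b + b for a, b in zip(dims[:-1], dims[1:]))


def toy_proxy_score(arch: Architecture) -> float:
    """3. Performance estimation: a HYPOTHETICAL cheap proxy.

    In practice this would be, e.g., a short training run scored on validation
    data. Here capacity (log of the parameter count) is rewarded and size is
    penalized so the example runs instantly; it says nothing about real accuracy.
    """
    params = count_parameters(arch)
    bonus = 0.1 if arch["activation"] == "relu" else 0.0
    return math.log(params) + bonus - params / 20000


def random_search(
    score_fn: Callable[[Architecture], float], num_trials: int, seed: int = 0
) -> Tuple[Optional[Architecture], float]:
    """Sample num_trials candidates and keep the one with the best estimated score."""
    rng = random.Random(seed)
    best_arch: Optional[Architecture] = None
    best_score = float("-inf")
    for _ in range(num_trials):
        arch = sample_architecture(rng)
        score = score_fn(arch)
        if score > best_score:
            best_arch, best_score = arch, score
    return best_arch, best_score


if __name__ == "__main__":
    best, score = random_search(toy_proxy_score, num_trials=20)
    print(best, round(score, 3))
```

Each component can be swapped independently: replacing `sample_architecture` with a learned controller or a mutation step changes only the search strategy, and replacing `toy_proxy_score` with an actual (possibly truncated) training run changes only the performance estimate.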

Differentiable Neural Computer (DNC)

Neural Operator

- Geology: Mining, Oil & Gas

- Neural Operator: Learning Maps Between Function Spaces | N. Kovachki, Z. Li, B. Liu, K. Azizzadenesheli, K. Bhattacharya, A. Stuart, Animashree (Anima) Anandkumar

- Neural Operator – Solving PDEs; Partial Differential Equations | Animashree (Anima) Anandkumar, Andrew Stuart, & Kaushik Bhattacharya

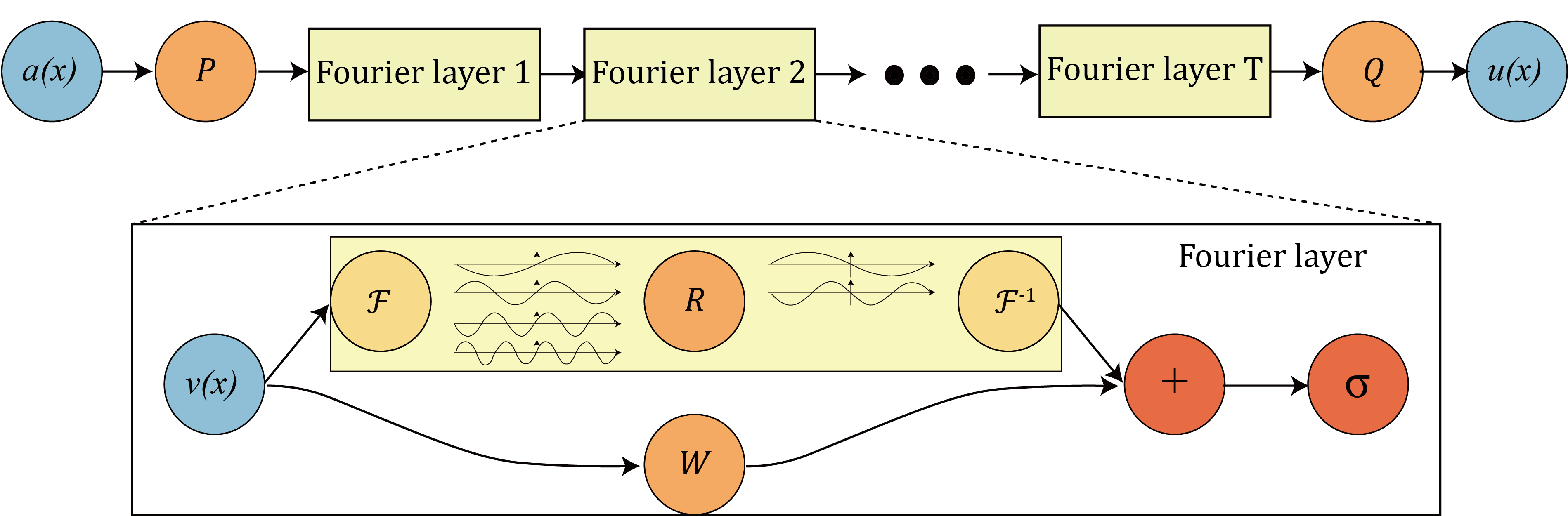

Neural operators generalize neural networks to learn operators that map between infinite-dimensional function spaces; a neural operator is a universal approximator in that function space. The Fourier neural operator model has shown state-of-the-art performance, with a 1000x speedup in learning the turbulent Navier-Stokes equation, as well as promising applications in weather forecasting and CO2 migration, as shown in the figure above. Neural Operator: Machine learning for scientific computing | Zongyi Li
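To make the Fourier neural operator more concrete, here is a minimal NumPy sketch of a single Fourier layer, assuming the layer form described in the FNO literature: transform the input to Fourier space, keep only the lowest frequencies, multiply each retained mode by a complex weight matrix, transform back, and add a pointwise linear term before the nonlinearity. The class name `SpectralConv1d`, the helper `sample`, the channel counts, the number of modes and the random (untrained) weights are illustrative assumptions; the lifting and projection layers and all training are omitted.

```python
import numpy as np


class SpectralConv1d:
    """One Fourier layer in the style of the Fourier neural operator (1D sketch).

    Computes ReLU(K v + W v), where K multiplies the lowest `modes` Fourier
    coefficients of v by complex matrices R_k and W is a pointwise linear map.
    Weights here are random and untrained (illustrative only).
    """

    def __init__(self, in_channels: int, out_channels: int, modes: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        scale = 1.0 / (in_channels * out_channels)
        shape = (modes, in_channels, out_channels)
        # One complex (in x out) matrix per retained Fourier mode.
        self.R = scale * (rng.standard_normal(shape) + 1j * rng.standard_normal(shape))
        # Pointwise linear map applied in physical space at every grid point.
        self.W = scale * rng.standard_normal((in_channels, out_channels))
        self.modes = modes

    def __call__(self, v: np.ndarray) -> np.ndarray:
        """v: (n_points, in_channels) samples of a periodic function on a uniform grid."""
        n = v.shape[0]
        v_hat = np.fft.rfft(v, axis=0)  # (n // 2 + 1, in_channels)
        out_hat = np.zeros((v_hat.shape[0], self.W.shape[1]), dtype=complex)
        k = min(self.modes, v_hat.shape[0])  # truncate to the low frequencies
        out_hat[:k] = np.einsum("ki,kio->ko", v_hat[:k], self.R[:k])
        spectral = np.fft.irfft(out_hat, n=n, axis=0)  # back to the grid
        return np.maximum(spectral + v @ self.W, 0.0)


def sample(n: int) -> np.ndarray:
    """Two input channels, sin and cos of 2*pi*x, on an n-point grid over [0, 1)."""
    x = np.arange(n) / n
    return np.stack([np.sin(2 * np.pi * x), np.cos(2 * np.pi * x)], axis=1)


if __name__ == "__main__":
    layer = SpectralConv1d(in_channels=2, out_channels=3, modes=8)
    coarse, fine = layer(sample(64)), layer(sample(256))
    # Same weights, two resolutions: for this band-limited input the outputs
    # agree (up to rounding) at the grid points the two resolutions share.
    print(np.abs(coarse - fine[::4]).max())
```

Because the weights are attached to Fourier modes rather than to grid points, the same layer accepts a function sampled at 64 or at 256 points. For a band-limited input like the one above, the two outputs agree at the shared points, which illustrates why the model is described as learning a map between function spaces rather than between fixed-size vectors.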