Perceptron (P)

Revision as of 09:13, 6 August 2023



- Neural Network Zoo | Fjodor Van Veen

- Wikipedia

___________________________________________________

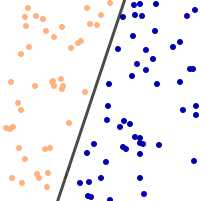

A binary linear classifier separates the input data into two classes using a linear decision boundary.

The Perceptron is a fundamental concept in the field of artificial intelligence (AI) and machine learning. It is a type of neural network that models the behavior of a single artificial neuron, and it played a significant role in the development of AI algorithms and neural networks.

The history of the Perceptron dates back to the late 1950s when it was introduced by Frank Rosenblatt, an American psychologist and computer scientist. Rosenblatt was inspired by the functioning of the human brain and aimed to develop a computational model that emulates the behavior of biological neurons.

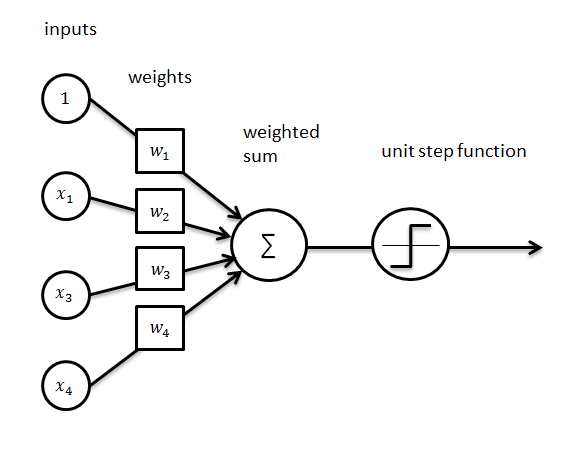

The Perceptron is based on the concept of a threshold logic unit (TLU), which mimics the behavior of a biological neuron. It takes a set of input values, applies weights to them, and produces an output based on a threshold activation function. The activation function determines whether the neuron fires or not based on the weighted sum of the inputs.
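The computation described above can be written compactly. The symbols here are a conventional choice, not notation from the original text: inputs $x_1, \dots, x_n$, weights $w_1, \dots, w_n$, and threshold $\theta$.

```latex
y =
\begin{cases}
1 & \text{if } \displaystyle\sum_{i=1}^{n} w_i x_i > \theta, \\
0 & \text{otherwise.}
\end{cases}
```

Substituting a bias $b = -\theta$ gives the equivalent form often used in code: $y = 1$ when $\sum_{i} w_i x_i + b > 0$, and $y = 0$ otherwise.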

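Rosenblatt's Perceptron was designed as a single-layer neural network with adjustable weights, capable of learning from training examples through supervised learning. Whenever the predicted output for a training example differs from the desired output, each weight is nudged in the direction that reduces that error; correctly classified examples leave the weights unchanged. This procedure is known as the perceptron learning rule. It is closely related to, but distinct from, the delta rule (Widrow-Hoff rule), which adjusts the weights by gradient descent on the squared error of the unthresholded output.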
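A minimal Python sketch of the perceptron learning rule follows. It assumes 0/1 labels, a bias term kept separately from the weights, and a learning rate `eta`; the function names `predict` and `train_perceptron` are illustrative, not taken from any library. The update $w_i \leftarrow w_i + \eta\,(t - y)\,x_i$ changes the weights only when the prediction $y$ differs from the target $t$.

```python
def predict(weights, bias, x):
    """Threshold activation: 1 if the weighted sum plus bias is positive, else 0."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, labels, eta=1.0, max_epochs=100):
    """Train with the perceptron learning rule; labels are assumed to be 0 or 1.

    Returns (weights, bias, epochs_run). Training stops early after the first
    epoch in which every sample is classified correctly.
    """
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for epoch in range(1, max_epochs + 1):
        errors = 0
        for x, target in zip(samples, labels):
            error = target - predict(weights, bias, x)  # -1, 0, or +1
            if error != 0:
                errors += 1
                # Move the decision boundary toward the correct side for this sample.
                weights = [w + eta * error * xi for w, xi in zip(weights, x)]
                bias += eta * error
        if errors == 0:
            return weights, bias, epoch
    return weights, bias, max_epochs


inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
w, b, epochs = train_perceptron(inputs, [0, 0, 0, 1])  # logical AND
print([predict(w, b, x) for x in inputs])  # → [0, 0, 0, 1]
```

Because the loop stops at the first error-free epoch, the returned weights are simply the first ones found that classify every training example correctly; they are not unique.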

The Perceptron gained attention in the AI community because it demonstrated the capability of learning and making decisions based on training data. Rosenblatt and his colleagues conducted experiments with Perceptrons to show their ability to classify simple patterns, such as distinguishing between different shapes. These early successes raised hopes that the Perceptron could solve more complex problems and even replicate human-like intelligence.

However, the initial excitement surrounding the Perceptron was short-lived. In 1969, Marvin Minsky and Seymour Papert published a book called "Perceptrons," in which they analyzed the Perceptron's limitations. They showed that a single-layer Perceptron cannot learn to classify patterns that are not linearly separable, that is, patterns whose classes cannot be divided by a single straight line (or, in higher dimensions, a hyperplane). The XOR function is the classic example.
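The limitation can be observed directly. The sketch below uses a hypothetical helper, `perceptron_converges`, that applies the same update rule with 0/1 labels and reports whether training ever reaches an epoch with zero errors. For linearly separable data such as AND, the perceptron convergence theorem guarantees that it eventually does; for XOR no line separates the two classes, so an error-free epoch is impossible however long training runs.

```python
def perceptron_converges(samples, labels, eta=1.0, max_epochs=1000):
    """Return True if perceptron training reaches an epoch with zero errors."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(max_epochs):
        errors = 0
        for x, target in zip(samples, labels):
            total = sum(w * xi for w, xi in zip(weights, x)) + bias
            error = target - (1 if total > 0 else 0)
            if error != 0:
                errors += 1
                weights = [w + eta * error * xi for w, xi in zip(weights, x)]
                bias += eta * error
        if errors == 0:
            return True  # every sample classified correctly: a separating line was found
    return False  # no error-free epoch within max_epochs


inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
print("AND:", perceptron_converges(inputs, [0, 0, 0, 1]))  # → AND: True
print("XOR:", perceptron_converges(inputs, [0, 1, 1, 0]))  # → XOR: False
```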

This criticism contributed to a decline in interest in Perceptrons, and for a time neural network research received far less attention and funding. Even so, the Perceptron remained an important milestone in the development of AI and machine learning, and it paved the way for later advances in neural network architectures and learning algorithms.

In the 1980s, multi-layer neural networks and more sophisticated learning algorithms overcame these limitations. Backpropagation, popularized in 1986 by Rumelhart, Hinton, and Williams, made it practical to train networks with hidden layers, and such multi-layer perceptrons can learn problems that are not linearly separable.
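Why a hidden layer helps can be shown without any training. In the sketch below, the weights and thresholds are hand-picked for illustration (assumptions, not learned values): two hidden threshold units compute OR and NAND, and an output unit combines them with AND, which yields XOR. Backpropagation's contribution was to learn weights like these automatically; it requires replacing the step function with a differentiable activation such as the sigmoid, because the step function's gradient is zero almost everywhere.

```python
def tlu(weights, threshold, inputs):
    """Threshold logic unit: 1 if the weighted sum exceeds the threshold, else 0."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) > threshold else 0


def xor_net(x1, x2):
    """Two-layer network of TLUs computing XOR with hand-picked (not learned) weights."""
    h_or = tlu([1, 1], 0.5, [x1, x2])        # hidden unit 1: OR
    h_nand = tlu([-1, -1], -1.5, [x1, x2])   # hidden unit 2: NAND
    return tlu([1, 1], 1.5, [h_or, h_nand])  # output unit: AND of the hidden units


print([xor_net(a, b) for a in (0, 1) for b in (0, 1)])  # → [0, 1, 1, 0]
```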

Since then, the Perceptron has been a foundational concept in neural networks and machine learning. It has served as the basis for more advanced models, such as deep neural networks, which are capable of solving complex problems and achieving state-of-the-art results in various AI applications, including image recognition, natural language processing, and reinforcement learning.

Today, the Perceptron remains an important concept in AI education and provides a fundamental understanding of neural networks and their learning capabilities. Although the term "Perceptron" is often used more broadly to refer to a single artificial neuron, it originally referred to a specific type of neural network.

Threshold Logic Unit (TLU)

A Threshold Logic Unit (TLU) is a simple processing unit with multiple real-valued inputs and a single output. The unit has a threshold, and each input is associated with a weight. The TLU computes the weighted sum of its inputs, compares it to the threshold, and outputs 1 if the threshold is exceeded and 0 otherwise. It is the most basic form of artificial neuron, and a single TLU with learnable weights is what is commonly called a perceptron.

The history of Deep Learning is often traced back to 1943, when Walter Pitts and Warren McCulloch created a computational model based on the neural networks of the human brain. They used a combination of algorithms and mathematics they called “threshold logic” to mimic the thought process.
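Threshold logic can be illustrated with a single TLU whose behavior is set entirely by its weights and threshold. The Python sketch below uses a hypothetical `tlu` helper; the weight and threshold values chosen for AND, OR, and NOT are one valid choice among many.

```python
def tlu(weights, threshold, inputs):
    """Return 1 if the weighted sum of the inputs exceeds the threshold, else 0."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return 1 if weighted_sum > threshold else 0


# Two-input Boolean gates as (weights, threshold) pairs; illustrative values.
GATES = {
    "AND": ([1, 1], 1.5),  # fires only when both inputs are 1
    "OR": ([1, 1], 0.5),   # fires when at least one input is 1
}

for name, (weights, threshold) in GATES.items():
    truth_table = [tlu(weights, threshold, [a, b]) for a in (0, 1) for b in (0, 1)]
    print(name, truth_table)

# NOT needs only one input with a negative weight.
print("NOT", [tlu([-1], -0.5, [a]) for a in (0, 1)])

# Real-valued inputs are handled the same way: weighted sum is about 0.8 > 0.6.
print(tlu([0.5, 0.5], 0.6, [0.9, 0.7]))
```

This prints `AND [0, 0, 0, 1]`, `OR [0, 1, 1, 1]`, `NOT [1, 0]`, and `1`. Only the parameters differ between gates; the unit itself never changes.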

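Two-Class Averaged Perceptron

- [Two-Class Averaged Perceptron | Microsoft](http://docs.microsoft.com/en-us/azure/machine-learning/studio-module-reference/two-class-averaged-perceptron)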

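The averaged perceptron method is an early and very simple version of a neural network. Inputs are classified with a linear function of the feature vector: each feature is multiplied by a learned weight, the products are summed, and the result determines which of the two classes is predicted. The "averaged" variant trains like the standard perceptron but makes predictions with the average of all the weight vectors produced during training, which tends to generalize better than using only the final weight vector.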
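A minimal sketch of a two-class averaged perceptron follows, with labels in {-1, +1}. It is a generic textbook formulation, not the Microsoft module's implementation; the names `train_averaged_perceptron`, `predict_sign`, and the `epochs` parameter are illustrative. The updates are the ordinary perceptron's; the difference is that the current weights and bias are added to running sums after every training example, and the averages are returned for prediction.

```python
def train_averaged_perceptron(samples, labels, epochs=10):
    """Averaged perceptron for labels in {-1, +1}; returns (avg_weights, avg_bias)."""
    n = len(samples[0])
    weights, bias = [0.0] * n, 0.0
    weight_sums, bias_sum = [0.0] * n, 0.0
    steps = 0
    for _ in range(epochs):
        for x, label in zip(samples, labels):
            score = sum(w * xi for w, xi in zip(weights, x)) + bias
            if label * score <= 0:  # misclassified or on the boundary: standard update
                weights = [w + label * xi for w, xi in zip(weights, x)]
                bias += label
            # Accumulate the current parameters after every example, not only after updates.
            weight_sums = [s + w for s, w in zip(weight_sums, weights)]
            bias_sum += bias
            steps += 1
    return [s / steps for s in weight_sums], bias_sum / steps


def predict_sign(weights, bias, x):
    """Return +1 or -1 depending on which side of the decision boundary x falls."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else -1


X = [(2, 1), (3, 2), (-1, -2), (-2, -1)]
y = [1, 1, -1, -1]
w_avg, b_avg = train_averaged_perceptron(X, y)
print([predict_sign(w_avg, b_avg, x) for x in X])  # → [1, 1, -1, -1]
```

Because the sums are updated after every example, a weight vector that survives many examples without error contributes more to the average than one that is replaced immediately.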