Difference between revisions of "Constitutional AI"


Revision as of 14:32, 16 April 2023


- Reinforcement Learning (RL)

- Assistants ... Hybrid Assistants ... Agents ... Negotiation ... HuggingGPT ... LangChain

- Generative AI ... Conversational AI ... OpenAI's ChatGPT ... Perplexity ... Microsoft's Bing ... You ... Google's Bard ... Baidu's Ernie

- Reinforcement Learning (RL) from Human Feedback (RLHF)

- Claude | Anthropic

- Meet Claude: Anthropic’s Rival to ChatGPT | Riley Goodside - Scale

- Paper Review: Constitutional AI, Training LLMs using Principles

Constitutional AI is a method for training AI systems using a set of rules or principles that act as a “constitution” for the system. The approach is intended to keep the system operating within a societally accepted framework and to align it with human intentions [1].

Benefits of Constitutional AI include letting a model explain why it is refusing to answer, making AI decision-making more transparent, and controlling AI behavior more precisely with fewer human labels.

The Constitutional AI methodology has two phases, similar to Reinforcement Learning (RL) from Human Feedback (RLHF).

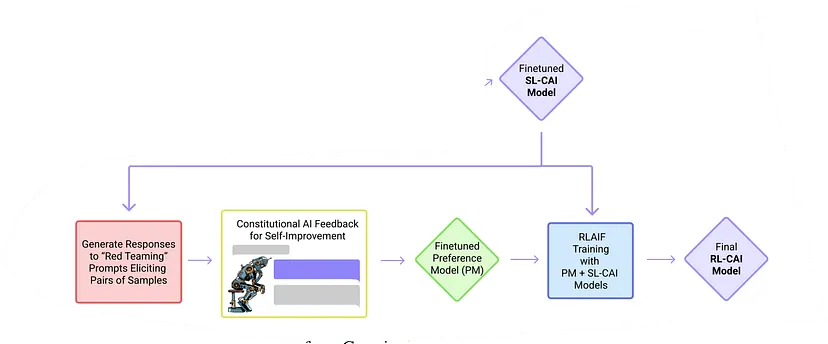

- The Supervised Learning Phase.

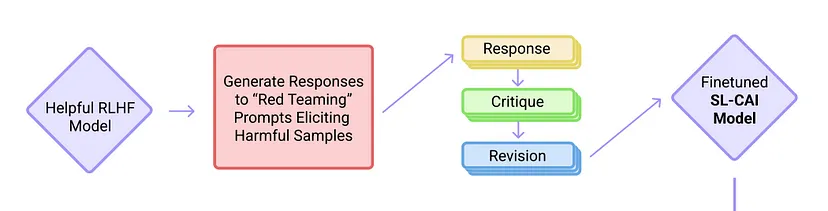

- The Reinforcement Learning Phase.
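
The two phases can be sketched roughly in code. This is a minimal illustration, not Anthropic's implementation. It assumes a model is simply a function from prompt text to completion text, and the names `PRINCIPLES`, `critique_and_revise`, and `ai_preference_label` are hypothetical, as are the prompt wordings. In the supervised phase, the model critiques and revises its own responses against a sampled principle, and the revised responses become fine-tuning data. In the reinforcement learning phase, a model compares pairs of responses against a principle, and those AI-generated preference labels stand in for the human labels used in RLHF.

```python
import random
from typing import Callable, List, Optional, Tuple

# Assumption: a "model" is any function mapping prompt text to completion text.
Model = Callable[[str], str]

# Hypothetical principles for illustration; not the actual constitution.
PRINCIPLES: List[str] = [
    "Choose the response that is least harmful.",
    "Choose the response that is most honest.",
]


def critique_and_revise(
    model: Model,
    prompt: str,
    principles: List[str],
    rounds: int = 1,
    rng: Optional[random.Random] = None,
) -> Tuple[str, str]:
    """Supervised phase sketch: draft, self-critique, revise.

    Returns a (prompt, revised_response) pair that could be used
    as a supervised fine-tuning example.
    """
    rng = rng or random.Random(0)
    response = model(prompt)  # initial draft
    for _ in range(rounds):
        principle = rng.choice(principles)  # sample one principle per round
        critique = model(
            f"Critique the response using this principle: {principle}\n"
            f"Prompt: {prompt}\nResponse: {response}"
        )
        response = model(
            "Rewrite the response to address the critique.\n"
            f"Prompt: {prompt}\nResponse: {response}\nCritique: {critique}"
        )
    return prompt, response


def ai_preference_label(
    model: Model,
    prompt: str,
    response_a: str,
    response_b: str,
    principle: str,
) -> int:
    """RL phase sketch: an AI-generated preference label.

    Returns 0 if the model prefers response A, otherwise 1. Labels like
    this would train a preference model that supplies the RL reward.
    """
    answer = model(
        f"Compare two responses using this principle: {principle}\n"
        f"Prompt: {prompt}\nA: {response_a}\nB: {response_b}\n"
        "Which response follows the principle better? Answer A or B."
    )
    return 0 if answer.strip().upper().startswith("A") else 1
```

Any text-generation backend can be plugged in as `model`. The sketch stops at data collection; the fine-tuning and reinforcement learning steps themselves are omitted.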