Difference between revisions of "Reinforcement Learning (RL) from Human Feedback (RLHF)"


<youtube>bSvTVREwSNw</youtube>

Revision as of 11:04, 13 June 2023


- Reinforcement Learning (RL)

- Human-in-the-Loop (HITL) Learning

- Assistants ... Agents ... Negotiation ... LangChain

- Generative AI ... Conversational AI ... OpenAI's ChatGPT ... Perplexity ... Microsoft's Bing ... You ... Google's Bard ... Baidu's Ernie

- Policy ... Policy vs Plan ... Constitutional AI ... Trust Region Policy Optimization (TRPO) ... Policy Gradient (PG) ... Proximal Policy Optimization (PPO)

- Introduction to Reinforcement Learning with Human Feedback | Edwin Chen - Surge

- What is Reinforcement Learning with Human Feedback (RLHF)? | Michael Spencer

- Compendium of problems with RLHF | Raphael S - LessWrong

- Reinforcement Learning from Human Feedback(RLHF)-ChatGPT | Sthanikam Santhosh - Medium

- Learning through human feedback | Google DeepMind

- Paper Review: Summarization using Reinforcement Learning From Human Feedback | Towards AI ... AI Alignment, Reinforcement Learning from Human Feedback, Proximal Policy Optimization (PPO)

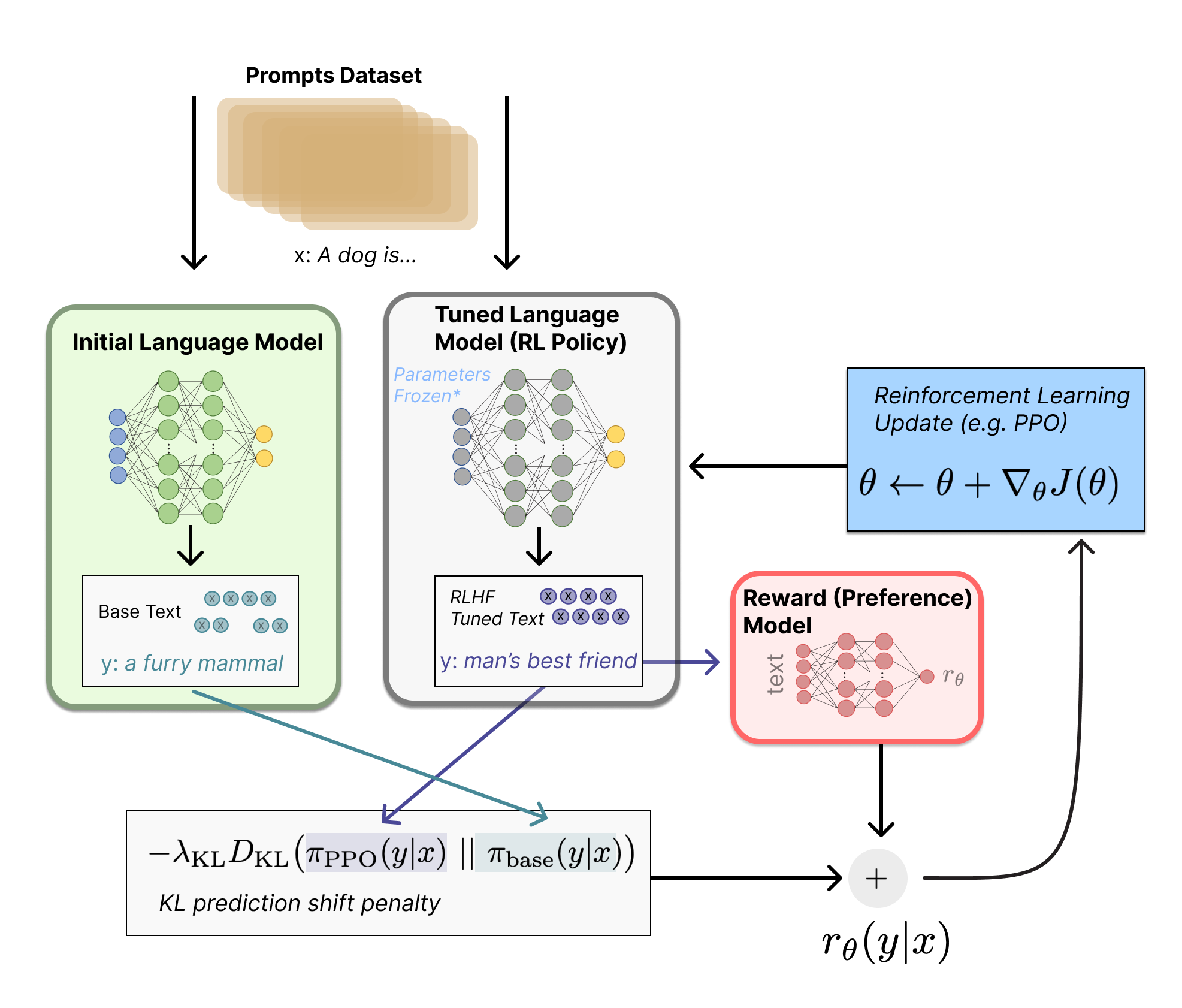

Reinforcement Learning from Human Feedback (RLHF) - a simplified explanation | Joao Lages

Illustrating Reinforcement Learning from Human Feedback (RLHF) | N. Lambert, L. Castricato, L. von Werra, and A. Havrilla - Hugging Face
