Reinforcement Learning (RL) from Human Feedback (RLHF)


- Thomas Twitter: https://twitter.com/thomassimonini

Nathan Lambert is a Research Scientist at Hugging Face. He received his PhD from the University of California, Berkeley, working at the intersection of machine learning and robotics. He was advised by Professor Kristofer Pister in the Berkeley Autonomous Microsystems Lab and by Roberto Calandra at Meta AI Research. He was lucky to intern at Facebook AI and DeepMind during his PhD. Nathan was awarded the UC Berkeley EECS Demetri Angelakos Memorial Achievement Award for Altruism for his efforts to improve community norms.

- 0:00 Introduction

- 3:09 Embedding Space

- 15:35 Overall Transformer Architecture

- 36:06 Transformer (Details)

https://www.youtube.com/watch?v=bSvTVREwSNw

Latest revision as of 20:18, 9 April 2024

YouTube ... Quora ... Google search ... Google News ... Bing News

- Reinforcement Learning (RL)

- Human-in-the-Loop (HITL) Learning

- Agents ... Robotic Process Automation ... Assistants ... Personal Companions ... Productivity ... Email ... Negotiation ... LangChain

- Artificial Intelligence (AI) ... Generative AI ... Machine Learning (ML) ... Deep Learning ... Neural Network ... Reinforcement ... Learning Techniques

- Conversational AI ... ChatGPT | OpenAI ... Bing/Copilot | Microsoft ... Gemini | Google ... Claude | Anthropic ... Perplexity ... You ... phind ... Grok | xAI ... Groq ... Ernie | Baidu

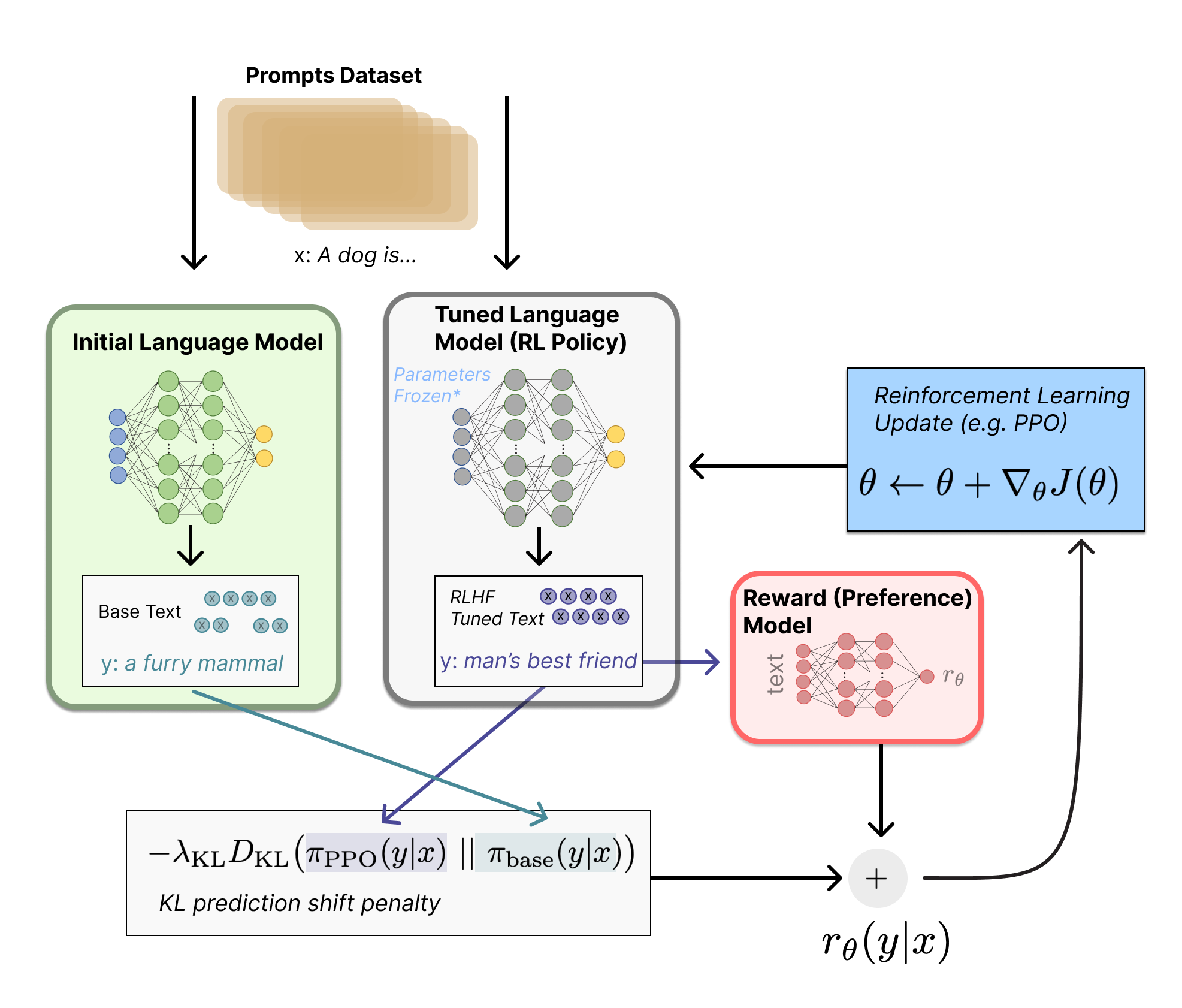

- Policy ... Policy vs Plan ... Constitutional AI ... Trust Region Policy Optimization (TRPO) ... Policy Gradient (PG) ... Proximal Policy Optimization (PPO)

- Introduction to Reinforcement Learning with Human Feedback | Edwin Chen - Surge

- What is Reinforcement Learning with Human Feedback (RLHF)? | Michael Spencer

- Compendium of problems with RLHF | Raphael S - LessWrong

- Reinforcement Learning from Human Feedback(RLHF)-ChatGPT | Sthanikam Santhosh - Medium

- Learning through human feedback | Google DeepMind

- Paper Review: Summarization using Reinforcement Learning From Human Feedback | - Towards AI ... AI Alignment, Reinforcement Learning from Human Feedback, Proximal Policy Optimization (PPO)

Reinforcement Learning from Human Feedback (RLHF) - a simplified explanation | Joao Lages

Illustrating Reinforcement Learning from Human Feedback (RLHF) | N. Lambert, L. Castricato, L. von Werra, and A. Havrilla - Hugging Face
