Difference between revisions of "Math for Intelligence"

| Line 2: | Line 2: | ||

* [http://www.3blue1brown.com/ Animated Math | Grant Sanderson @ 3blue1brown.com] | * [http://www.3blue1brown.com/ Animated Math | Grant Sanderson @ 3blue1brown.com] | ||

| − | * http://machinelearningmastery.com/introduction-matrices-machine-learning/ Introduction to Matrices and Matrix Arithmetic for Machine Learning | Jason Brownlee] | + | * [http://machinelearningmastery.com/introduction-matrices-machine-learning/ Introduction to Matrices and Matrix Arithmetic for Machine Learning | Jason Brownlee] |

| − | * [ | + | * [http://brilliant.org/courses/artificial-neural-networks/ Brilliant.org] |

* [http://triseum.com/variant-limits/ Varient: Limits] | * [http://triseum.com/variant-limits/ Varient: Limits] | ||

* [http://static1.squarespace.com/static/54bf3241e4b0f0d81bf7ff36/t/55e9494fe4b011aed10e48e5/1441352015658/probability_cheatsheet.pdf Probability Cheatsheet] | * [http://static1.squarespace.com/static/54bf3241e4b0f0d81bf7ff36/t/55e9494fe4b011aed10e48e5/1441352015658/probability_cheatsheet.pdf Probability Cheatsheet] | ||

| Line 22: | Line 22: | ||

== Explained == | == Explained == | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

<youtube>ml4NSzCQobk</youtube> | <youtube>ml4NSzCQobk</youtube> | ||

<youtube>f5liqUk0ZTw</youtube> | <youtube>f5liqUk0ZTw</youtube> | ||

| Line 65: | Line 56: | ||

== Quantum Algorithm == | == Quantum Algorithm == | ||

<youtube>LhtnECml-KI</youtube> | <youtube>LhtnECml-KI</youtube> | ||

| + | |||

| + | == Dot Product Explained == | ||

| + | |||

| + | * [http://en.wikipedia.org/wiki/Dot_product Dot Product | Wikipedia] | ||

| + | |||

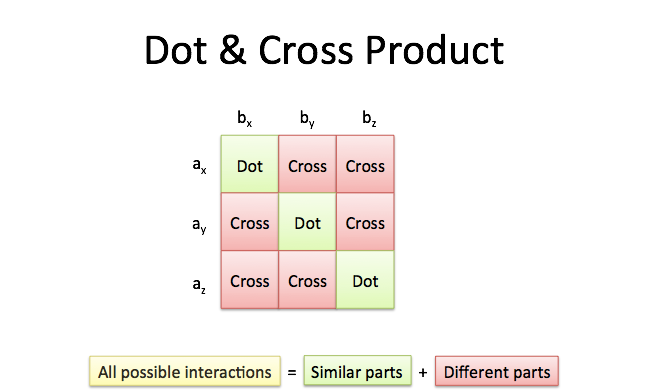

| + | Dot Product = | ||

| + | * Algebraically, the dot product is the sum of the products of the corresponding entries of the two sequences of numbers. | ||

| + | * Geometrically, it is the product of the Euclidean magnitudes of the two vectors and the cosine of the angle between them. | ||

| + | |||

| + | http://ujwlkarn.files.wordpress.com/2016/07/convolution_schematic.gif?w=268&h=196 | ||

| + | |||

| + | [http://ujjwalkarn.me/2016/08/11/intuitive-explanation-convnets/ Take a moment to understand how the computation above is being done. We slide the orange matrix over our original image (green) by 1 pixel (also called ‘stride’) and, for every position, we compute element-wise multiplication (between the two matrices) and add the multiplication outputs to get the final integer, which forms a single element of the output matrix (pink). Note that the 3×3 matrix “sees” only a part of the input image in each stride. In CNN terminology, the 3×3 matrix is called a ‘filter’ or ‘kernel’ or ‘feature detector’ and the matrix formed by sliding the filter over the image and computing the dot product is called the ‘Convolved Feature’ or ‘Activation Map’ or the ‘Feature Map’. It is important to note that filters act as feature detectors from the original input image. | ujjwalkarn] | ||

| + | |||

| + | http://3qeqpr26caki16dnhd19sv6by6v-wpengine.netdna-ssl.com/wp-content/uploads/2017/12/Depection-of-Matrix-Multiplication.png | ||

| + | http://betterexplained.com/wp-content/uploads/crossproduct/cross-product-grid.png | ||

Revision as of 07:42, 9 December 2018

- Animated Math | Grant Sanderson @ 3blue1brown.com

- Introduction to Matrices and Matrix Arithmetic for Machine Learning | Jason Brownlee

- Brilliant.org

- Variant: Limits

- Probability Cheatsheet

Contents

3blue1brown

Explained

Siraj Raval

Josh Starmer - StatQuest

Quantum Algorithm

Dot Product Explained

- [http://en.wikipedia.org/wiki/Dot_product Dot Product | Wikipedia]

Dot Product =

- Algebraically, the dot product is the sum of the products of the corresponding entries of the two sequences of numbers.

- Geometrically, it is the product of the Euclidean magnitudes of the two vectors and the cosine of the angle between them.
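The two definitions above give the same number. A minimal Python sketch of both follows; the helper names (`dot`, `norm`, `dot_geometric`) and the example vectors are illustrative assumptions, not part of the original page.

```python
import math

def dot(a, b):
    """Algebraic definition: sum of the products of corresponding entries."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum(x * y for x, y in zip(a, b))

def norm(a):
    """Euclidean magnitude of a vector."""
    return math.sqrt(dot(a, a))

def dot_geometric(a, b, angle):
    """Geometric definition: |a| * |b| * cos(angle between a and b)."""
    return norm(a) * norm(b) * math.cos(angle)

# Illustrative vectors: a lies on the x-axis, b points 45 degrees above it.
a = [3.0, 0.0]
b = [2.0, 2.0]
angle = math.pi / 4  # known by construction for these two vectors

algebraic = dot(a, b)                   # 3*2 + 0*2
geometric = dot_geometric(a, b, angle)  # 3 * sqrt(8) * cos(45 degrees)

print(algebraic, geometric)  # both are 6, up to floating-point rounding
```

The geometric form needs the angle as an input, so in practice the algebraic form is usually used to compute the value and the geometric form to interpret it.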

http://ujwlkarn.files.wordpress.com/2016/07/convolution_schematic.gif?w=268&h=196
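The schematic linked above slides a small filter over an image and, at each position, takes the dot product of the filter with the patch it covers. The sketch below is one way to write that in plain Python, assuming a stride of 1 and no padding; the function name `convolve2d_valid` and the 5×5 image and 3×3 filter values are chosen here for illustration. As is common in CNN code, the filter is not flipped, so strictly speaking this computes a cross-correlation.

```python
def convolve2d_valid(image, kernel, stride=1):
    """Slide `kernel` over `image`; each output entry is the sum of the
    element-wise products between the kernel and the patch under it."""
    kh, kw = len(kernel), len(kernel[0])
    ih, iw = len(image), len(image[0])
    feature_map = []
    for r in range(0, ih - kh + 1, stride):
        row = []
        for c in range(0, iw - kw + 1, stride):
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        feature_map.append(row)
    return feature_map

# Illustrative 5x5 binary image and 3x3 filter (assumed values).
image = [
    [1, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 0],
    [0, 1, 1, 0, 0],
]
kernel = [
    [1, 0, 1],
    [0, 1, 0],
    [1, 0, 1],
]

feature_map = convolve2d_valid(image, kernel)
for row in feature_map:
    print(row)
# [4, 3, 4]
# [2, 4, 3]
# [2, 3, 4]
```

A 5×5 input with a 3×3 filter and stride 1 gives a 3×3 feature map, because the filter fits in three positions along each axis.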