Difference between revisions of "Deep Belief Network (DBN)"



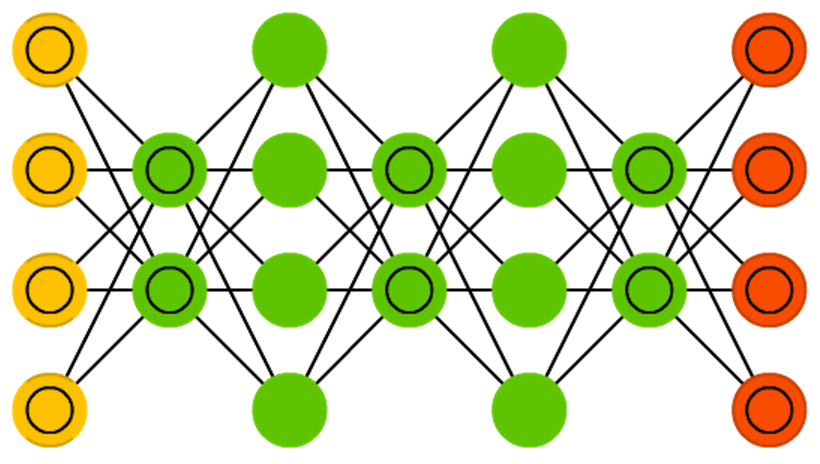



<youtube>GJdWESd543Y</youtube>

Latest revision as of 08:05, 28 March 2023

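[YouTube search...](https://www.youtube.com/results?search_query=deep+belief+network) [...Google search](https://www.google.com/search?q=deep+belief+network+deep+machine+learning+ML+artificial+intelligence)

- Deep Learning

- [Neural Network Zoo | Fjodor Van Veen](https://www.asimovinstitute.org/author/fjodorvanveen/)

- Feature Exploration/Learning

- [A Gentle Introduction to Bayesian Belief Networks | Jason Brownlee - Machine Learning Mastery](https://machinelearningmastery.com/introduction-to-bayesian-belief-networks/)

- [Deep-Belief Networks | Chris Nicholson - A.I. Wiki pathmind](https://pathmind.com/wiki/deep-belief-network)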

Deep belief networks (DBNs) are stacked architectures built mostly from Restricted Boltzmann Machines (RBMs) or Variational Autoencoders (VAEs). These networks have been shown to be trainable effectively one stacked layer at a time, where each Autoencoder (AE) or RBM only has to learn to encode the representation produced by the layer below it. This technique is also known as greedy training, where "greedy" means making a locally optimal choice at each step, which yields a decent but not necessarily optimal overall solution. DBNs can be trained with contrastive divergence or back-propagation and learn to represent the data as a probabilistic model, just like standalone RBMs or VAEs. Once trained, or converged to a (more) stable state through unsupervised learning, the model can be used to generate new data. If trained with contrastive divergence, it can even classify existing data, because the neurons have learned to detect different features. Bengio, Yoshua, et al. “Greedy layer-wise training of deep networks.” Advances in Neural Information Processing Systems 19 (2007): 153.
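The greedy, layer-by-layer procedure can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the method of Bengio et al. or any library's API. It assumes binary (Bernoulli) units and trains each RBM with one-step contrastive divergence (CD-1): every weight is nudged by the learning rate times the difference between the data-driven correlation `v_i * p(h_j | v)` and the same correlation measured on a one-step reconstruction. Each frozen layer's hidden probabilities become the next RBM's training data, and new data is generated by Gibbs sampling in the top RBM followed by one top-down pass. The names `RBM`, `train_dbn`, `generate` and `bernoulli`, the hyperparameters, and the toy data are all hypothetical; back-propagation fine-tuning and classification are not shown.

```python
import math
import random


def sigmoid(x):
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def bernoulli(probs, rng):
    # Draw binary (0.0 / 1.0) samples from independent Bernoulli units.
    return [1.0 if rng.random() < p else 0.0 for p in probs]


class RBM:
    """Bernoulli-Bernoulli RBM (hypothetical minimal class, not a library API)."""

    def __init__(self, n_visible, n_hidden, rng):
        # Small random weights, zero biases.
        self.W = [[rng.gauss(0.0, 0.01) for _ in range(n_hidden)]
                  for _ in range(n_visible)]
        self.b = [0.0] * n_visible  # visible biases
        self.c = [0.0] * n_hidden   # hidden biases

    def hidden_probs(self, v):
        # p(h_j = 1 | v) = sigmoid(c_j + sum_i v_i * W_ij)
        return [sigmoid(self.c[j] + sum(v[i] * self.W[i][j] for i in range(len(v))))
                for j in range(len(self.c))]

    def visible_probs(self, h):
        # p(v_i = 1 | h) = sigmoid(b_i + sum_j W_ij * h_j)
        return [sigmoid(self.b[i] + sum(self.W[i][j] * h[j] for j in range(len(h))))
                for i in range(len(self.b))]

    def cd1_update(self, v0, lr, rng):
        # Positive phase: hidden probabilities driven by the data.
        ph0 = self.hidden_probs(v0)
        h0 = bernoulli(ph0, rng)
        # Negative phase: one Gibbs step back to a reconstruction
        # (probabilities rather than samples, a common noise-reducing choice).
        pv1 = self.visible_probs(h0)
        ph1 = self.hidden_probs(pv1)
        for i in range(len(self.b)):
            for j in range(len(self.c)):
                self.W[i][j] += lr * (v0[i] * ph0[j] - pv1[i] * ph1[j])
            self.b[i] += lr * (v0[i] - pv1[i])
        for j in range(len(self.c)):
            self.c[j] += lr * (ph0[j] - ph1[j])


def train_dbn(data, layer_sizes, epochs=30, lr=0.1, seed=0):
    # Greedy layer-wise pretraining: train one RBM, freeze it, and feed its
    # hidden probabilities to the next RBM as that layer's training data.
    rng = random.Random(seed)
    rbms, inputs = [], [list(map(float, row)) for row in data]
    for n_hidden in layer_sizes:
        rbm = RBM(len(inputs[0]), n_hidden, rng)
        for _ in range(epochs):
            for v in inputs:
                rbm.cd1_update(v, lr, rng)
        inputs = [rbm.hidden_probs(v) for v in inputs]
        rbms.append(rbm)
    return rbms


def generate(rbms, gibbs_steps=20, seed=1):
    # Gibbs sampling in the top RBM, then one top-down pass through the
    # lower layers' generative (hidden-to-visible) weights.
    rng = random.Random(seed)
    top = rbms[-1]
    v = bernoulli([0.5] * len(top.b), rng)
    for _ in range(gibbs_steps):
        h = bernoulli(top.hidden_probs(v), rng)
        v = bernoulli(top.visible_probs(h), rng)
    for rbm in reversed(rbms[:-1]):
        v = bernoulli(rbm.visible_probs(v), rng)
    return v


if __name__ == "__main__":
    # Hypothetical toy data: two repeating 6-bit patterns.
    data = [[1, 1, 1, 0, 0, 0], [0, 0, 0, 1, 1, 1]] * 10
    dbn = train_dbn(data, layer_sizes=[4, 3])
    print(generate(dbn))
```

Because each RBM only ever sees the output of the frozen layer below it, no two layers are optimized jointly; that is the greedy part, and it is why a later fine-tuning pass (for example with back-propagation) is commonly added in practice.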