Hugging Face


Revision as of 19:08, 19 April 2023

YouTube ... Quora ... Google search ... Google News ... Bing News

- Hugging Face

- Platforms: AI/Machine Learning as a Service (AIaaS/MLaaS)

- Hugging Face course

- Reinforcement Learning (RL) from Human Feedback (RLHF)

- Pretrain Transformers Models in PyTorch Using Hugging Face Transformers | George Mihaila - TOPBOTS

- OpenChatKit | TogetherCompute ... The first open-source ChatGPT alternative: a 20B-parameter GPT-based chat model released under the Apache-2.0 license and available for free on Hugging Face.
  - LAION
  - Ontocord

- Wolfram

Hugging Face is an American company that develops tools for building applications using machine learning. It is best known for its Transformers library, built for natural language processing applications, and for its platform that allows users to share machine learning models and datasets. Hugging Face is also a community and platform for artificial intelligence and data science that aims to democratize AI knowledge and the assets used to build AI models. The platform allows users to build, train, and deploy state-of-the-art models powered by open-source machine learning, and it gives a broad community of data scientists, researchers, and ML engineers a place to share ideas, get support, and contribute to open-source projects. - Wikipedia
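Shared models, datasets, and Spaces on the Hub are addressed by a repository id of the form `namespace/name` (some older models have no namespace, such as `gpt2`). The helper below is a minimal, hand-rolled sketch of how those ids map to web pages: model repos sit at the site root, while datasets and Spaces use `datasets/` and `spaces/` prefixes. The function name `hub_url` and its validation pattern are assumptions for illustration, not part of any official library.

```python
import re

HUB_ENDPOINT = "https://huggingface.co"

# URL path prefix per repository type; model repos sit at the root.
REPO_TYPE_PREFIX = {"model": "", "dataset": "datasets/", "space": "spaces/"}

# Optional "namespace/" (a user or organization) followed by a repo name.
# Hypothetical simplification, not the Hub's official validation rule.
REPO_ID_PATTERN = re.compile(r"(?:[\w.-]+/)?[\w.-]+")


def hub_url(repo_id: str, repo_type: str = "model") -> str:
    """Return the web page URL of a Hub repository (hypothetical helper)."""
    if repo_type not in REPO_TYPE_PREFIX:
        raise ValueError(f"unknown repo type: {repo_type!r}")
    if not REPO_ID_PATTERN.fullmatch(repo_id):
        raise ValueError(f"not a valid repo id: {repo_id!r}")
    return f"{HUB_ENDPOINT}/{REPO_TYPE_PREFIX[repo_type]}{repo_id}"
```

For example, `hub_url("Helsinki-NLP/opus-mt-en-de")` gives the page of one of the Helsinki-NLP translation models. To actually download files from a repository, the official `huggingface_hub` client library provides functions such as `hf_hub_download`.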

Source: Conversation with Bing, 4/14/2023

(1) What is Hugging Face - A Beginner's Guide - ByteXD. https://bytexd.com/what-is-hugging-face-beginners-guide/.

(2) Hugging Face – The AI community building the future. https://huggingface.co/.

(3) What's Hugging Face? An AI community for sharing ML models and datasets ... https://towardsdatascience.com/whats-hugging-face-122f4e7eb11a.

(4) Private Hub - Hugging Face. https://huggingface.co/platform.

(5) Hugging Face Hub documentation. https://huggingface.co/docs/hub/main.


HuggingGPT

YouTube ... Quora ... Google search ... Google News ... Bing News

- HuggingGPT: Solving AI Tasks with ChatGPT and its Friends in HuggingFace | Y. Shen, K. Song, X. Tan, D. Li, W. Lu, & Y. Zhuang

- Assistants ... Hybrid Assistants ... Agents ... Negotiation ... HuggingGPT ... LangChain

- HuggingGPT ... A Framework That Leverages LLMs to Connect Various AI Models in Machine Learning Communities (Hugging Face) to Solve AI Tasks

- JARVIS | Microsoft

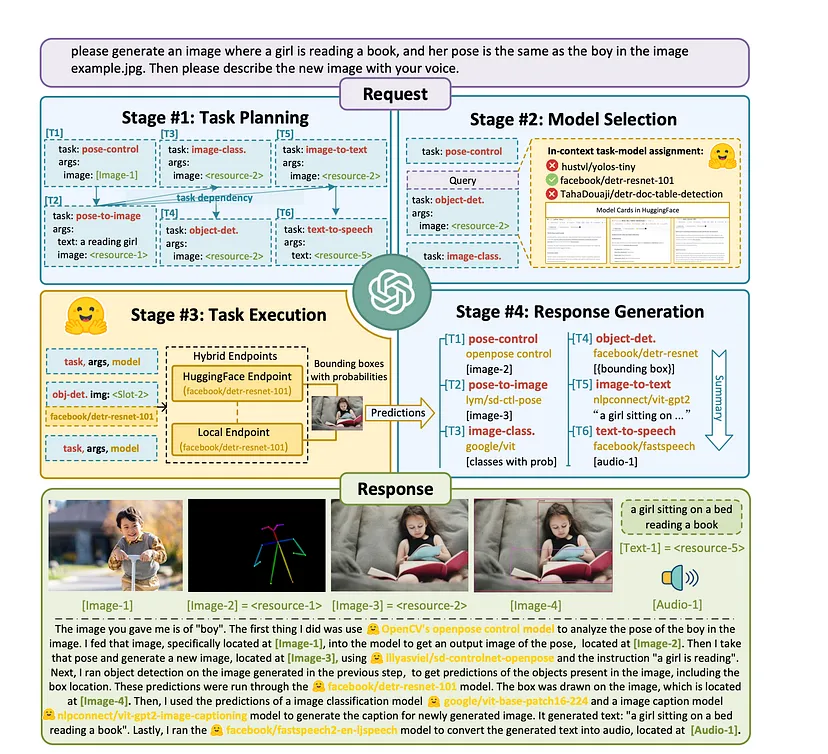

HuggingGPT is a framework that uses a large language model (LLM) such as ChatGPT to connect the many AI models available in machine learning communities such as Hugging Face in order to solve AI tasks. Solving complicated AI tasks that span different domains and modalities is a key step toward advanced artificial intelligence. Although abundant AI models are available for individual domains and modalities, they cannot handle such complicated tasks on their own. Because LLMs have shown exceptional ability in language understanding, generation, interaction, and reasoning, the authors argue that an LLM can act as a controller that manages existing AI models, with language serving as a generic interface between them. Concretely, HuggingGPT uses ChatGPT to plan tasks when it receives a user request, selects models according to their function descriptions on Hugging Face, executes each subtask with the selected model, and summarizes the response from the execution results. The workflow consists of four stages:

- Task Planning: ChatGPT analyzes the user's request to understand its intent and, through prompting, breaks it down into solvable tasks.

- Model Selection: For each planned task, ChatGPT selects an expert model hosted on Hugging Face based on the model descriptions.

- Task Execution: Each selected model is invoked and executed, and its results are returned to ChatGPT.

- Response Generation: Finally, ChatGPT integrates the predictions of all models and generates an answer for the user.
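The four stages above can be sketched as a toy pipeline. This is not HuggingGPT's code: the LLM calls for planning, model selection, and response generation are replaced with hard-coded stubs, and the registry, the model ids (`example/...`), and the `<dep>-N` placeholder for a dependency's output are all hypothetical. Only the control flow (plan, select, execute, respond) mirrors the description.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    id: int
    type: str                                  # task type, e.g. "image-to-text"
    args: dict                                 # "<dep>-N" means "output of task N"
    deps: list = field(default_factory=list)   # ids of prerequisite tasks


# Hypothetical stand-in for model descriptions hosted on Hugging Face:
# task type -> candidates as (model_id, description, stub handler).
REGISTRY = {
    "image-to-text": [
        ("example/captioner", "describes an image",
         lambda a: f"a dog on a beach ({a['image']})"),
    ],
    "translation": [
        ("example/en-fr", "translates English to French",
         lambda a: f"[fr] {a['text']}"),
    ],
}


def plan_tasks(request: str) -> list:
    """Stage 1 stub: an LLM would decompose `request`; here the plan is fixed."""
    return [
        Task(0, "image-to-text", {"image": "photo.jpg"}),
        Task(1, "translation", {"text": "<dep>-0"}, deps=[0]),
    ]


def select_model(task: Task) -> tuple:
    """Stage 2 stub: an LLM would choose by description; here, the first candidate."""
    model_id, _description, handler = REGISTRY[task.type][0]
    return model_id, handler


def resolve(value, results: dict):
    """Replace a "<dep>-N" placeholder with the output of task N."""
    if isinstance(value, str) and value.startswith("<dep>-"):
        return results[int(value.split("-", 1)[1])][1]
    return value


def execute(tasks: list) -> dict:
    """Stage 3: run each task sequentially with its selected model."""
    results = {}
    for task in tasks:
        missing = [d for d in task.deps if d not in results]
        if missing:  # this sketch expects tasks in dependency order
            raise ValueError(f"task {task.id} depends on unfinished {missing}")
        args = {k: resolve(v, results) for k, v in task.args.items()}
        model_id, handler = select_model(task)
        results[task.id] = (model_id, handler(args))
    return results


def respond(request: str, results: dict) -> str:
    """Stage 4 stub: an LLM would write a summary; here results are listed."""
    lines = [f"Request: {request}"]
    for task_id, (model_id, output) in sorted(results.items()):
        lines.append(f"task {task_id} via {model_id}: {output}")
    return "\n".join(lines)


def run_pipeline(request: str) -> str:
    return respond(request, execute(plan_tasks(request)))
```

Calling `run_pipeline("Describe photo.jpg in French")` shows how the caption produced by task 0 flows into the translation task through the dependency placeholder. Swapping the stubs for real LLM prompts and real model calls is where the actual system's complexity lies.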

Hugging Face NLP Library - Open Parallel Corpus (OPUS)

- Hugging Face dives into machine translation with release of 1,000 models | Khari Johnson - VentureBeat

- Build Your Own Machine Translation Service with Transformers: Using the latest Helsinki NLP models available in the Transformers library to create a standardized machine translation service | Kyle Gallatin - Towards Data Science
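The Helsinki-NLP models referenced above are OPUS-MT (Marian) translation models published on the Hub under ids of the form `Helsinki-NLP/opus-mt-{src}-{tgt}`. A minimal sketch of loading one with the Transformers `MarianMTModel` and `MarianTokenizer` classes follows, assuming `transformers`, `sentencepiece`, and PyTorch are installed. The helper names `opus_mt_model_id` and `translate` are hypothetical, and not every language pair exists as a published model.

```python
def opus_mt_model_id(src: str, tgt: str) -> str:
    """Hub id of a Helsinki-NLP OPUS-MT model for one language pair.

    Not every pair is published; check the Hub before relying on an id.
    """
    return f"Helsinki-NLP/opus-mt-{src}-{tgt}"


def translate(texts: list, src: str, tgt: str) -> list:
    """Translate a batch of sentences with an OPUS-MT (Marian) model."""
    # Imported lazily so opus_mt_model_id() works without these installed.
    # Requires transformers, sentencepiece, and PyTorch (return_tensors="pt").
    from transformers import MarianMTModel, MarianTokenizer

    name = opus_mt_model_id(src, tgt)
    tokenizer = MarianTokenizer.from_pretrained(name)  # downloads on first use
    model = MarianMTModel.from_pretrained(name)
    batch = tokenizer(texts, return_tensors="pt", padding=True)
    generated = model.generate(**batch)
    return tokenizer.batch_decode(generated, skip_special_tokens=True)
```

For example, `translate(["Hello, world!"], "en", "de")` would fetch `Helsinki-NLP/opus-mt-en-de` and return a one-element list containing the German translation. Wrapping this function in a web endpoint is one way to build the kind of standardized translation service the linked article describes.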

OPUS is a growing collection of translated texts from the web. The OPUS project converts and aligns freely available online data, adds linguistic annotation, and provides the community with a publicly available parallel corpus. It is undertaken by the University of Helsinki and global partners to gather and open-source a wide variety of language datasets. OPUS is built on open-source products, and the corpus itself is delivered as an open-content package. Several tools were used to compile the current collection; all pre-processing is done automatically, and no manual corrections have been made. - OPUS, the open parallel corpus
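A parallel corpus pairs each sentence with its translation. OPUS offers several download formats; in the line-aligned plain-text ("Moses") format, line i of the source-language file corresponds to line i of the target-language file. Assuming that format, the sketch below reads two such files into sentence pairs. The function name `aligned_pairs` is hypothetical.

```python
from itertools import zip_longest
from typing import Iterable, Iterator, Tuple


def aligned_pairs(src_lines: Iterable[str],
                  tgt_lines: Iterable[str]) -> Iterator[Tuple[str, str]]:
    """Yield (source, target) sentence pairs from two line-aligned texts."""
    sentinel = object()  # marks the end of the shorter input
    pairs = zip_longest(src_lines, tgt_lines, fillvalue=sentinel)
    for n, (src, tgt) in enumerate(pairs, start=1):
        if src is sentinel or tgt is sentinel:
            raise ValueError(f"files are not aligned: length mismatch at line {n}")
        src, tgt = src.rstrip("\n"), tgt.rstrip("\n")
        if src.strip() and tgt.strip():  # skip pairs where either side is blank
            yield src, tgt
```

With hypothetical file names, usage looks like `with open("corpus.en-de.en", encoding="utf-8") as s, open("corpus.en-de.de", encoding="utf-8") as t: pairs = list(aligned_pairs(s, t))`. `zip_longest` is used instead of `zip` so that misaligned files raise an error rather than being silently truncated.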