Difference between revisions of "Backpropagation"

m |

m |

||

| Line 19: | Line 19: | ||

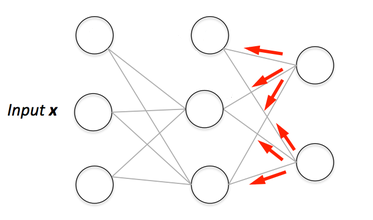

| − | + | The primary algorithm for performing gradient descent on neural networks. First, the output values of each node are calculated (and cached) in a forward pass. Then, the partial derivative of the error with respect to each parameter is calculated in a backward pass through the graph. [http://developers.google.com/machine-learning/glossary/ Machine Learning Glossary | Google] | |

Revision as of 06:45, 25 September 2020


- Gradient Descent Optimization & Challenges

- Objective vs. Cost vs. Loss vs. Error Function

- Wikipedia

- Manifold Hypothesis

- How the backpropagation algorithm works

- Backpropagation Step by Step

- What is Backpropagation? | Daniel Nelson - Unite.ai

- Other Challenges in Artificial Intelligence

- A Beginner's Guide to Backpropagation in Neural Networks | Chris Nicholson - A.I. Wiki pathmind

Backpropagation is the primary algorithm for computing the gradients used to perform gradient descent on neural networks. First, the output values of each node are calculated (and cached) in a forward pass. Then, the partial derivative of the error with respect to each parameter is calculated in a backward pass through the graph, reusing the cached values. Source: [http://developers.google.com/machine-learning/glossary/ Machine Learning Glossary | Google]
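
The two passes described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the tiny network (one input, one sigmoid hidden unit, one linear output), the squared-error loss, the parameter names `w1`, `b1`, `w2`, `b2`, the learning rate, and the helper names `forward`, `backward`, and `sgd_step` are all assumptions chosen for the example.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x, target):
    """Forward pass: compute each node's output and cache what the backward pass needs."""
    z = params["w1"] * x + params["b1"]   # hidden pre-activation
    h = sigmoid(z)                        # hidden activation
    y = params["w2"] * h + params["b2"]   # network output (linear)
    loss = 0.5 * (y - target) ** 2        # squared error (assumed loss)
    cache = {"x": x, "h": h, "y": y, "target": target}
    return loss, cache


def backward(params, cache):
    """Backward pass: apply the chain rule from the loss back to every parameter."""
    dy = cache["y"] - cache["target"]           # dL/dy
    grads = {"w2": dy * cache["h"], "b2": dy}   # output-layer partials
    dh = dy * params["w2"]                      # dL/dh
    dz = dh * cache["h"] * (1.0 - cache["h"])   # sigmoid'(z) = h * (1 - h)
    grads["w1"] = dz * cache["x"]
    grads["b1"] = dz
    return grads


def sgd_step(params, grads, lr=0.1):
    """One gradient descent update using the gradients from backpropagation."""
    return {name: params[name] - lr * grads[name] for name in params}


# Hypothetical starting parameters and a single training example.
params = {"w1": 0.5, "b1": 0.0, "w2": -0.3, "b2": 0.1}
loss_before, cache = forward(params, x=1.0, target=1.0)
grads = backward(params, cache)
params_after = sgd_step(params, grads)
loss_after, _ = forward(params_after, x=1.0, target=1.0)
```

Note the division of labor: `backward` only produces the partial derivatives, and `sgd_step` is the part that actually performs gradient descent. Comparing each entry of `grads` against a finite-difference estimate of the loss is a common way to check a hand-written backward pass.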