Difference between revisions of "Representation Learning"


(3 intermediate revisions by the same user not shown)

| Line 11: | Line 11: | ||

* [[Feature Exploration/Learning]] | * [[Feature Exploration/Learning]] | ||

| − | The success of machine learning algorithms generally depends on data representation, and we hypothesize that this is because different representations can entangle and hide more or less the different explanatory factors of variation behind the data. Although specific domain knowledge can be used to help design representations, learning with generic priors can also be used, and the quest for AI is motivating the design of more powerful representation-learning algorithms implementing such priors. This paper reviews recent work in the area of unsupervised feature learning and deep learning, covering advances in probabilistic models, auto-encoders, manifold learning, and deep networks. This motivates longer-term unanswered questions about the appropriate objectives for learning good representations, for computing representations (i.e., inference), and the geometrical connections between representation learning, density estimation and manifold learning. [http://arxiv.org/abs/1206.5538 Representation Learning: A Review and New Perspectives | Y. Bengio, A. Courville, and P. Vincent] | + | The success of machine learning algorithms generally depends on data representation, and we hypothesize that this is because different representations can entangle and hide more or less the different explanatory factors of variation behind the data. Although specific domain knowledge can be used to help design representations, learning with generic priors can also be used, and the quest for AI is motivating the design of more powerful representation-learning algorithms implementing such priors. This paper reviews recent work in the area of unsupervised feature learning and deep learning, covering advances in probabilistic models, [[Autoencoder (AE) / Encoder-Decoder | auto-encoders]], manifold learning, and deep networks. 
This motivates longer-term unanswered questions about the appropriate objectives for learning good representations, for computing representations (i.e., inference), and the geometrical connections between representation learning, density estimation and manifold learning. [http://arxiv.org/abs/1206.5538 Representation Learning: A Review and New Perspectives | Y. Bengio, A. Courville, and P. Vincent] |

| − | * <b>Feature | + | * <b>Feature Learning</b> or representation learning is a set of techniques that allows a system to automatically discover the representations needed for feature detection or classification from raw data. This replaces manual feature engineering and allows a machine to both learn the features and use them to perform a specific task. Feature learning is motivated by the fact that machine learning tasks such as classification often require input that is mathematically and computationally convenient to process. However, real-world data such as images, [[Video|video]], and sensor data has not yielded to attempts to algorithmically define specific features. An alternative is to discover such features or representations through examination, without relying on explicit algorithms. [http://en.wikipedia.org/wiki/Feature_learning Wikipedia] |

| − | * <b>Manifold | + | * <b>[[Manifold Hypothesis#Manifold Learning|Manifold Learning]]</b> is an approach to non-linear dimensionality reduction. Algorithms for this task are based on the idea that the dimensionality of many data sets is only artificially high. [http://scikit-learn.org/stable/modules/manifold.html#:~:text=Manifold%20learning%20is%20an%20approach,sets%20is%20only%20artificially%20high. Manifold learning | SciKitLearn] |

<youtube>e3GaXeqrG9I</youtube> | <youtube>e3GaXeqrG9I</youtube> | ||

| Line 32: | Line 32: | ||

= Self-Supervised = | = Self-Supervised = | ||

| + | * [[Self-Supervised]] | ||

<youtube>aGhYitrOJRc</youtube> | <youtube>aGhYitrOJRc</youtube> | ||

= Semi-Supervised = | = Semi-Supervised = | ||

| + | * [[Semi-Supervised]] | ||

<youtube>1tB7lALJ3ew</youtube> | <youtube>1tB7lALJ3ew</youtube> | ||

= Unsupervised = | = Unsupervised = | ||

| + | * [[Unsupervised]] | ||

<youtube>y-SrsyckRbo</youtube> | <youtube>y-SrsyckRbo</youtube> | ||

<youtube>ceD736_Fknc</youtube> | <youtube>ceD736_Fknc</youtube> | ||

Latest revision as of 14:18, 16 September 2023


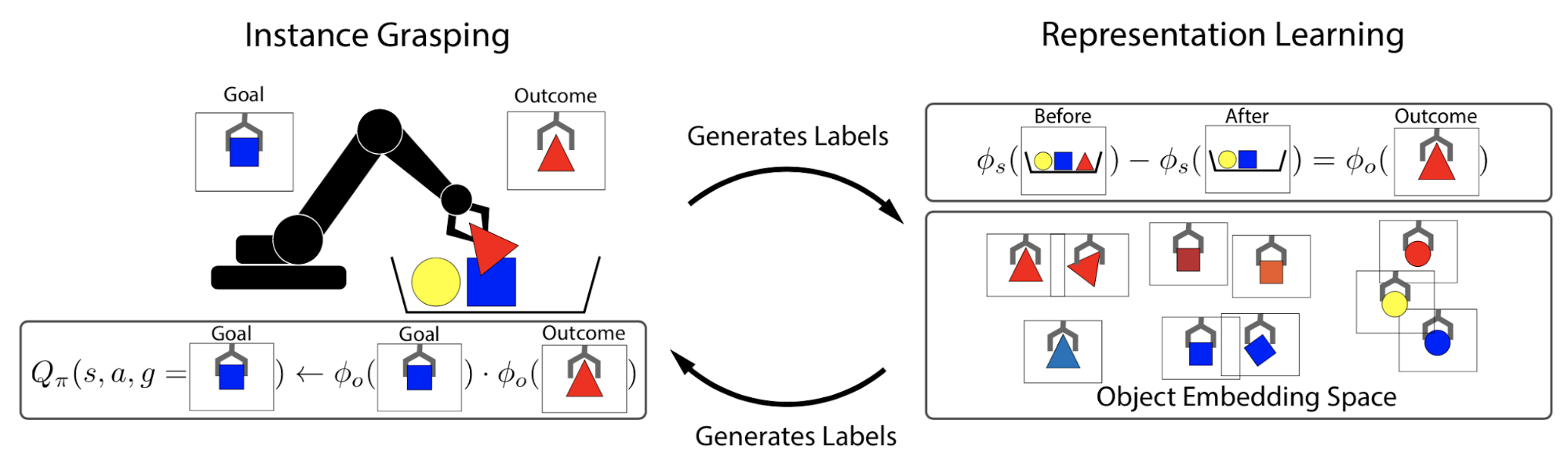

The success of machine learning algorithms generally depends on data representation, and we hypothesize that this is because different representations can entangle and hide more or less the different explanatory factors of variation behind the data. Although specific domain knowledge can be used to help design representations, learning with generic priors can also be used, and the quest for AI is motivating the design of more powerful representation-learning algorithms implementing such priors. This paper reviews recent work in the area of unsupervised feature learning and deep learning, covering advances in probabilistic models, auto-encoders, manifold learning, and deep networks. This motivates longer-term unanswered questions about the appropriate objectives for learning good representations, for computing representations (i.e., inference), and the geometrical connections between representation learning, density estimation and manifold learning. [Representation Learning: A Review and New Perspectives | Y. Bengio, A. Courville, and P. Vincent](http://arxiv.org/abs/1206.5538)

- Feature Learning or representation learning is a set of techniques that allows a system to automatically discover the representations needed for feature detection or classification from raw data. This replaces manual feature engineering and allows a machine to both learn the features and use them to perform a specific task. Feature learning is motivated by the fact that machine learning tasks such as classification often require input that is mathematically and computationally convenient to process. However, real-world data such as images, video, and sensor data has not yielded to attempts to algorithmically define specific features. An alternative is to discover such features or representations through examination, without relying on explicit algorithms. [Wikipedia](http://en.wikipedia.org/wiki/Feature_learning)

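- Manifold Learning is an approach to non-linear dimensionality reduction. Algorithms for this task are based on the idea that the dimensionality of many data sets is only artificially high. [Manifold learning | scikit-learn](http://scikit-learn.org/stable/modules/manifold.html#:~:text=Manifold%20learning%20is%20an%20approach,sets%20is%20only%20artificially%20high.)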
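
As a rough illustration of "artificially high" dimensionality, the sketch below samples points along a 2-D spiral whose only real degree of freedom is the position along the curve. It then recovers a 1-D coordinate for each point from shortest-path (geodesic) distances over a nearest-neighbour graph, which is the first stage of Isomap-style methods. This is a minimal standard-library sketch and not scikit-learn's implementation; the helper names (`spiral_points`, `knn_graph`, `geodesic_from`) and the sample sizes are assumptions made for the example.

```python
import heapq
import math


def spiral_points(n=60, turns=1.5):
    """Sample n points along a 2-D spiral; t is the single underlying factor."""
    pts = []
    for i in range(n):
        t = turns * 2 * math.pi * i / (n - 1)
        r = 1.0 + 0.5 * t
        pts.append((r * math.cos(t), r * math.sin(t)))
    return pts


def knn_graph(points, k=2):
    """Build a symmetric k-nearest-neighbour graph with Euclidean edge weights."""
    n = len(points)
    graph = {i: {} for i in range(n)}
    for i in range(n):
        dists = sorted(
            (math.dist(points[i], points[j]), j) for j in range(n) if j != i
        )
        for d, j in dists[:k]:
            graph[i][j] = d
            graph[j][i] = d
    return graph


def geodesic_from(graph, source):
    """Dijkstra shortest-path distances from source to every node."""
    dist = {source: 0.0}
    heap = [(0.0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist.get(u, math.inf):
            continue  # stale heap entry
        for v, w in graph[u].items():
            nd = d + w
            if nd < dist.get(v, math.inf):
                dist[v] = nd
                heapq.heappush(heap, (nd, v))
    return [dist.get(i, math.inf) for i in range(len(graph))]


points = spiral_points()
graph = knn_graph(points, k=2)

# For a 1-D manifold, the geodesic distance from one end of the curve
# already serves as a 1-D coordinate for every point.
coords_1d = geodesic_from(graph, 0)

straight_line = math.dist(points[0], points[-1])
print(f"Euclidean distance between the ends: {straight_line:.2f}")
print(f"Geodesic distance along the spiral:  {coords_1d[-1]:.2f}")
```

The learned coordinate increases monotonically along the spiral, while the straight-line distance between the two ends badly underestimates how far apart they are on the curve. A full Isomap would follow this step with multidimensional scaling on all pairwise geodesic distances; for real data, a library implementation such as the one described in the scikit-learn manifold learning guide is the more practical choice.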


Representation Learning and Deep Learning

Yoshua Bengio | Institute for Pure & Applied Mathematics (IPAM)

Self-Supervised

Semi-Supervised

Unsupervised

Supervised Learning of Rules for Unsupervised

Large-Scale Graph