Difference between revisions of "Cross-Entropy Loss"

m |

|||

| (4 intermediate revisions by the same user not shown) | |||

| Line 2: | Line 2: | ||

|title=PRIMO.ai | |title=PRIMO.ai | ||

|titlemode=append | |titlemode=append | ||

| − | |keywords=artificial, intelligence, machine, learning, models | + | |keywords=ChatGPT, artificial, intelligence, machine, learning, GPT-4, GPT-5, NLP, NLG, NLC, NLU, models, data, singularity, moonshot, Sentience, AGI, Emergence, Moonshot, Explainable, TensorFlow, Google, Nvidia, Microsoft, Azure, Amazon, AWS, Hugging Face, OpenAI, Tensorflow, OpenAI, Google, Nvidia, Microsoft, Azure, Amazon, AWS, Meta, LLM, metaverse, assistants, agents, digital twin, IoT, Transhumanism, Immersive Reality, Generative AI, Conversational AI, Perplexity, Bing, You, Bard, Ernie, prompt Engineering LangChain, Video/Image, Vision, End-to-End Speech, Synthesize Speech, Speech Recognition, Stanford, MIT |description=Helpful resources for your journey with artificial intelligence; videos, articles, techniques, courses, profiles, and tools |

| − | |description=Helpful resources for your journey with artificial intelligence; videos, articles, techniques, courses, profiles, and tools | + | |


}} | }} | ||

| − | [ | + | [https://www.youtube.com/results?search_query=Cross+Entropy+Loss+deep+learning+hyperparameter YouTube search...] |

| − | [ | + | [https://www.google.com/search?q=Cross+Entropy+Loss+deep+learning+hyperparameter ...Google search] |

| − | * [[ | + | * [[Loss]] |

| + | * [[Backpropagation]] ... [[Feed Forward Neural Network (FF or FFNN)|FFNN]] ... [[Forward-Forward]] ... [[Activation Functions]] ...[[Softmax]] ... [[Loss]] ... [[Boosting]] ... [[Gradient Descent Optimization & Challenges|Gradient Descent]] ... [[Algorithm Administration#Hyperparameter|Hyperparameter]] ... [[Manifold Hypothesis]] ... [[Principal Component Analysis (PCA)|PCA]] | ||

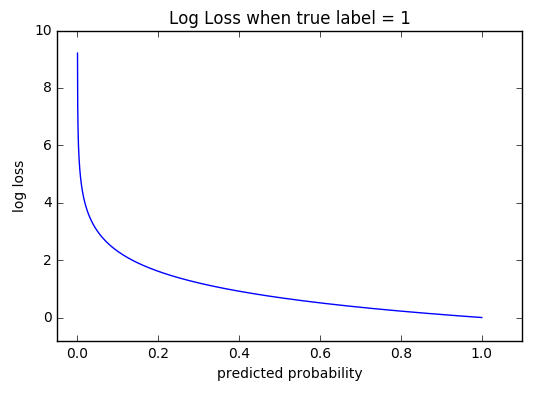

| − | Cross-entropy [[loss]], or log [[loss]], measures the performance of a classification model whose output is a probability value between 0 and 1. Cross-entropy loss increases as the predicted probability diverges from the actual label. [ | + | Cross-entropy [[loss]], or log [[loss]], measures the performance of a classification model whose output is a probability value between 0 and 1. Cross-entropy loss increases as the predicted probability diverges from the actual label. [https://ml-cheatsheet.readthedocs.io/en/latest/loss_functions.html |

| − | Cross-entropy loss is one of the most widely used loss functions in classification scenarios. In face recognition tasks, the cross-entropy loss is an effective method to eliminate outliers. [ | + | Cross-entropy [[loss]] is one of the most widely used loss functions in classification scenarios. In face recognition tasks, the cross-entropy loss is an effective method to eliminate outliers. [https://arxiv.org/pdf/1904.09523.pdf Neural Architecture Search for Deep Face Recognition | Ning Zhu] |

| − | + | https://ml-cheatsheet.readthedocs.io/en/latest/_images/cross_entropy.png | |

Latest revision as of 19:58, 2 September 2023

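[https://www.youtube.com/results?search_query=Cross+Entropy+Loss+deep+learning+hyperparameter YouTube search...] [https://www.google.com/search?q=Cross+Entropy+Loss+deep+learning+hyperparameter ...Google search]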

- Loss

- Backpropagation ... FFNN ... Forward-Forward ... Activation Functions ... Softmax ... Loss ... Boosting ... Gradient Descent ... Hyperparameter ... Manifold Hypothesis ... PCA

Cross-entropy loss, or log loss, measures the performance of a classification model whose output is a probability value between 0 and 1. The loss increases as the predicted probability diverges from the actual label, and a perfect model has a log loss of 0. [https://ml-cheatsheet.readthedocs.io/en/latest/loss_functions.html]
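To make the "diverges from the actual label" behaviour concrete, here is a minimal sketch of the binary (two-class) form of log loss for a single example. The function name `binary_cross_entropy` and the clipping constant `eps` are illustrative assumptions, not something the article or any particular library prescribes.

```python
import math

def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """Log loss for one example.

    y_true: actual label, 0 or 1.
    p_pred: predicted probability that the label is 1.
    eps:    assumed clipping constant that keeps log() away from 0.
    """
    # Clip the prediction so log(0) can never be evaluated.
    p = min(max(p_pred, eps), 1.0 - eps)
    return -(y_true * math.log(p) + (1 - y_true) * math.log(1.0 - p))

# The actual label is 1 in all three cases; only the prediction changes.
confident_right = binary_cross_entropy(1, 0.95)   # small loss
unsure = binary_cross_entropy(1, 0.5)             # moderate loss, equal to ln(2)
confident_wrong = binary_cross_entropy(1, 0.012)  # large loss

print(confident_right, unsure, confident_wrong)
```

The loss grows slowly while the prediction is close to the label and very quickly as a confident prediction turns out to be wrong, which is why log loss penalizes overconfident mistakes so heavily.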

Cross-entropy loss is one of the most widely used loss functions in classification. In face recognition tasks, it is reported to be an effective method for eliminating outliers. [https://arxiv.org/pdf/1904.09523.pdf Neural Architecture Search for Deep Face Recognition | Ning Zhu]
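For classification with more than two classes, cross-entropy is commonly paired with a softmax over the model's raw scores. The following is a rough sketch of that pairing in plain Python; the helper names `softmax` and `categorical_cross_entropy` and the example scores are assumptions made for illustration, and this is not the method used in the cited paper.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def categorical_cross_entropy(logits, true_class):
    """Negative log of the probability assigned to the true class."""
    probs = softmax(logits)
    return -math.log(probs[true_class])

# Hypothetical raw scores for a 3-class problem; class 0 scores highest.
logits = [2.0, 1.0, 0.1]
loss_correct = categorical_cross_entropy(logits, 0)  # true class is the favoured one
loss_wrong = categorical_cross_entropy(logits, 2)    # true class is the least favoured

print(loss_correct, loss_wrong)
```

Only the probability assigned to the true class enters the loss, so an example whose true class receives a low probability contributes a much larger value than one the model already classifies well.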