Difference between revisions of "Deep Reinforcement Learning (DRL)"

| Line 14: | Line 14: | ||

==== OTHER: Learning; MDP, Q, and SARSA ==== | ==== OTHER: Learning; MDP, Q, and SARSA ==== | ||

* [[Markov Decision Process (MDP)]] | * [[Markov Decision Process (MDP)]] | ||

| − | * [[Deep Q | + | * [[Deep Q Network (DQN)]] |

* [[Neural Coreference]] | * [[Neural Coreference]] | ||

* [[State-Action-Reward-State-Action (SARSA)]] | * [[State-Action-Reward-State-Action (SARSA)]] | ||

Revision as of 14:14, 1 September 2019

Youtube search... ...Google search

OTHER: Learning; MDP, Q, and SARSA

- Markov Decision Process (MDP)

- Deep Q Network (DQN)

- Neural Coreference

- State-Action-Reward-State-Action (SARSA)

OTHER: Policy Gradient Methods

_______________________________________________________________________________________

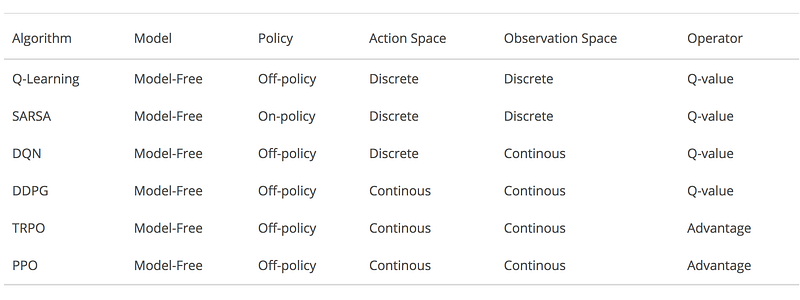

- Introduction to Various Reinforcement Learning Algorithms. Part I (Q-Learning, SARSA, DQN, DDPG) | Steeve Huang

- Introduction to Various Reinforcement Learning Algorithms. Part II (TRPO, PPO) | Steeve Huang

- Guide

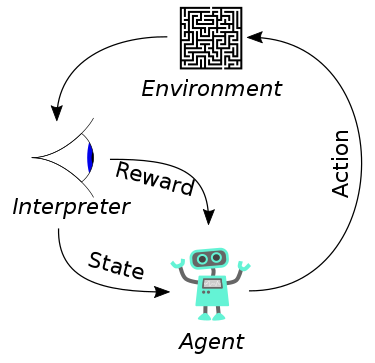

Goal-oriented algorithms, which learn how to attain a complex objective (goal) or maximize along a particular dimension over many steps; for example, maximize the points won in a game over many moves. Reinforcement learning solves the difficult problem of correlating immediate actions with the delayed returns they produce. Like humans, reinforcement learning algorithms sometimes have to wait a while to see the fruit of their decisions. They operate in a delayed return environment, where it can be difficult to understand which action leads to which outcome over many time steps.