Difference between revisions of "Backpropagation"

Latest revision as of 09:30, 6 August 2023

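[YouTube search...](https://www.youtube.com/results?search_query=backpropagation) [...Google search](https://www.google.com/search?q=Backpropagation+deep+machine+learning+ML)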

- Backpropagation ... FFNN ... Forward-Forward ... Activation Functions ...Softmax ... Loss ... Boosting ... Gradient Descent ... Hyperparameter ... Manifold Hypothesis ... PCA

- Objective vs. Cost vs. Loss vs. Error Function

- Optimization Methods

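- [Wikipedia](https://en.wikipedia.org/wiki/Backpropagation)

- [How the backpropagation algorithm works](https://neuralnetworksanddeeplearning.com/chap2.html)

- [Backpropagation Step by Step](https://hmkcode.github.io/ai/backpropagation-step-by-step/)

- [What is Backpropagation? | Daniel Nelson - Unite.ai](https://www.unite.ai/what-is-backpropagation/)

- Other Challenges in Artificial Intelligence

- [A Beginner's Guide to Backpropagation in Neural Networks | Chris Nicholson - A.I. Wiki pathmind](https://pathmind.com/wiki/backpropagation)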

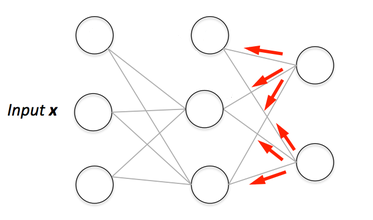

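Backpropagation is the primary algorithm for performing gradient descent on neural networks. First, the output value of each node is calculated (and cached) in a forward pass. Then, the partial derivative of the error with respect to each parameter is calculated in a backward pass through the graph, applying the chain rule from the output back toward the inputs. Gradient descent then uses these partial derivatives to update the parameters. ([Machine Learning Glossary | Google](https://developers.google.com/machine-learning/glossary/))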
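The two passes can be written compactly. This is a sketch assuming a fully connected network with layers $l = 1, \dots, L$, weights $W^{(l)}$, biases $b^{(l)}$, an elementwise activation $\sigma$, input $a^{(0)} = x$, and error $E$. The notation (including $\delta^{(l)} = \partial E / \partial z^{(l)}$) follows the style of the "How the backpropagation algorithm works" chapter listed above and is an assumption, not part of the glossary definition:

$$
\begin{aligned}
z^{(l)} &= W^{(l)} a^{(l-1)} + b^{(l)}, \qquad a^{(l)} = \sigma\big(z^{(l)}\big) && \text{(forward pass, values cached)}\\
\delta^{(L)} &= \nabla_{a^{(L)}} E \odot \sigma'\big(z^{(L)}\big) && \text{(error at the output layer)}\\
\delta^{(l)} &= \Big(\big(W^{(l+1)}\big)^{\top} \delta^{(l+1)}\Big) \odot \sigma'\big(z^{(l)}\big) && \text{(backward pass, } l = L-1, \dots, 1\text{)}\\
\frac{\partial E}{\partial W^{(l)}} &= \delta^{(l)} \big(a^{(l-1)}\big)^{\top}, \qquad \frac{\partial E}{\partial b^{(l)}} = \delta^{(l)} && \text{(parameter gradients)}
\end{aligned}
$$

Each $\delta^{(l)}$ reuses $\delta^{(l+1)}$ from the layer above, which is why a single backward sweep is enough to obtain every parameter's gradient.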
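The forward-then-backward procedure can be sketched in plain Python. This is a minimal illustration under assumptions not stated on this page: a tiny network with 2 inputs, 2 sigmoid hidden units and 1 sigmoid output, a squared-error loss $E = \tfrac{1}{2}(y - t)^2$, and hypothetical helper names (`forward`, `backward`, `numerical_gradient`, `sgd_step`). A finite-difference check is included to confirm the backward pass, followed by one gradient-descent step.

```python
import copy
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """Forward pass: compute and cache each node's pre-activation z and output a."""
    W1, b1, W2, b2 = params["W1"], params["b1"], params["W2"], params["b2"]
    z1 = [sum(W1[j][i] * x[i] for i in range(len(x))) + b1[j] for j in range(len(b1))]
    a1 = [sigmoid(z) for z in z1]
    z2 = sum(W2[j] * a1[j] for j in range(len(a1))) + b2
    a2 = sigmoid(z2)
    return {"x": x, "z1": z1, "a1": a1, "z2": z2, "a2": a2}


def loss(y, target):
    """Assumed squared-error loss E = 0.5 * (y - target)^2."""
    return 0.5 * (y - target) ** 2


def backward(params, cache, target):
    """Backward pass: apply the chain rule from the output back to every parameter."""
    x, a1, a2 = cache["x"], cache["a1"], cache["a2"]
    # dE/dz2 = dE/da2 * da2/dz2, using sigmoid'(z) = a * (1 - a)
    delta2 = (a2 - target) * a2 * (1.0 - a2)
    grad_W2 = [delta2 * a for a in a1]
    grad_b2 = delta2
    # Propagate the output error to each hidden unit through its weight in W2
    delta1 = [params["W2"][j] * delta2 * a1[j] * (1.0 - a1[j]) for j in range(len(a1))]
    grad_W1 = [[delta1[j] * x[i] for i in range(len(x))] for j in range(len(a1))]
    grad_b1 = delta1
    return {"W1": grad_W1, "b1": grad_b1, "W2": grad_W2, "b2": grad_b2}


def numerical_gradient(params, x, target, key, idx=(), eps=1e-6):
    """Central-difference estimate of dE/d(param); used only to check backward()."""
    def shifted_loss(delta):
        p = copy.deepcopy(params)
        if not idx:
            p[key] += delta
        elif len(idx) == 1:
            p[key][idx[0]] += delta
        else:
            p[key][idx[0]][idx[1]] += delta
        return loss(forward(p, x)["a2"], target)
    return (shifted_loss(eps) - shifted_loss(-eps)) / (2 * eps)


def sgd_step(params, grads, lr=0.5):
    """One plain gradient-descent update: param <- param - lr * gradient."""
    return {
        "W1": [[w - lr * g for w, g in zip(row, grow)]
               for row, grow in zip(params["W1"], grads["W1"])],
        "b1": [b - lr * g for b, g in zip(params["b1"], grads["b1"])],
        "W2": [w - lr * g for w, g in zip(params["W2"], grads["W2"])],
        "b2": params["b2"] - lr * grads["b2"],
    }


if __name__ == "__main__":
    random.seed(0)
    params = {
        "W1": [[random.uniform(-1, 1) for _ in range(2)] for _ in range(2)],
        "b1": [0.0, 0.0],
        "W2": [random.uniform(-1, 1) for _ in range(2)],
        "b2": 0.0,
    }
    x, target = [0.5, -1.0], 1.0
    cache = forward(params, x)
    grads = backward(params, cache, target)
    # Analytic and numerical gradients should agree to many decimal places
    print(grads["W1"][0][1], numerical_gradient(params, x, target, "W1", (0, 1)))
    new_params = sgd_step(params, grads)
    print(loss(cache["a2"], target), loss(forward(new_params, x)["a2"], target))
```

The explicit loops keep every chain-rule factor visible; a practical implementation would use matrix operations (as in the equations for $\delta^{(l)}$) or an automatic-differentiation library rather than hand-written per-weight loops.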