Difference between revisions of "Reinforcement Learning (RL) from Human Feedback (RLHF)"

Line 18:

* [https://www.deepmind.com/blog/learning-through-human-feedback Learning through human feedback |] [[Google]] DeepMind

* [https://pub.towardsai.net/paper-review-summarization-using-reinforcement-learning-from-human-feedback-e000a66404ff Paper Review: Summarization using Reinforcement Learning From Human Feedback | - Towards AI] ... AI Alignment, Reinforcement Learning from Human Feedback, [https://huggingface.co/blog/deep-rl-ppo Proximal Policy Optimization (PPO)]

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/rlhf/rlhf.png" width="800">

+ <hr>

+ [https://arxiv.org/abs/1706.03741 Deep reinforcement learning from human preferences | P. Christiano, J. Leike, T. B. Brown, M. Martic, S. Legg, and D. Amodei]

+ <hr>

{|<!-- T -->

Revision as of 11:23, 29 January 2023


- ChatGPT

- Reinforcement Learning (RL)

- Generative Pre-trained Transformer (GPT)

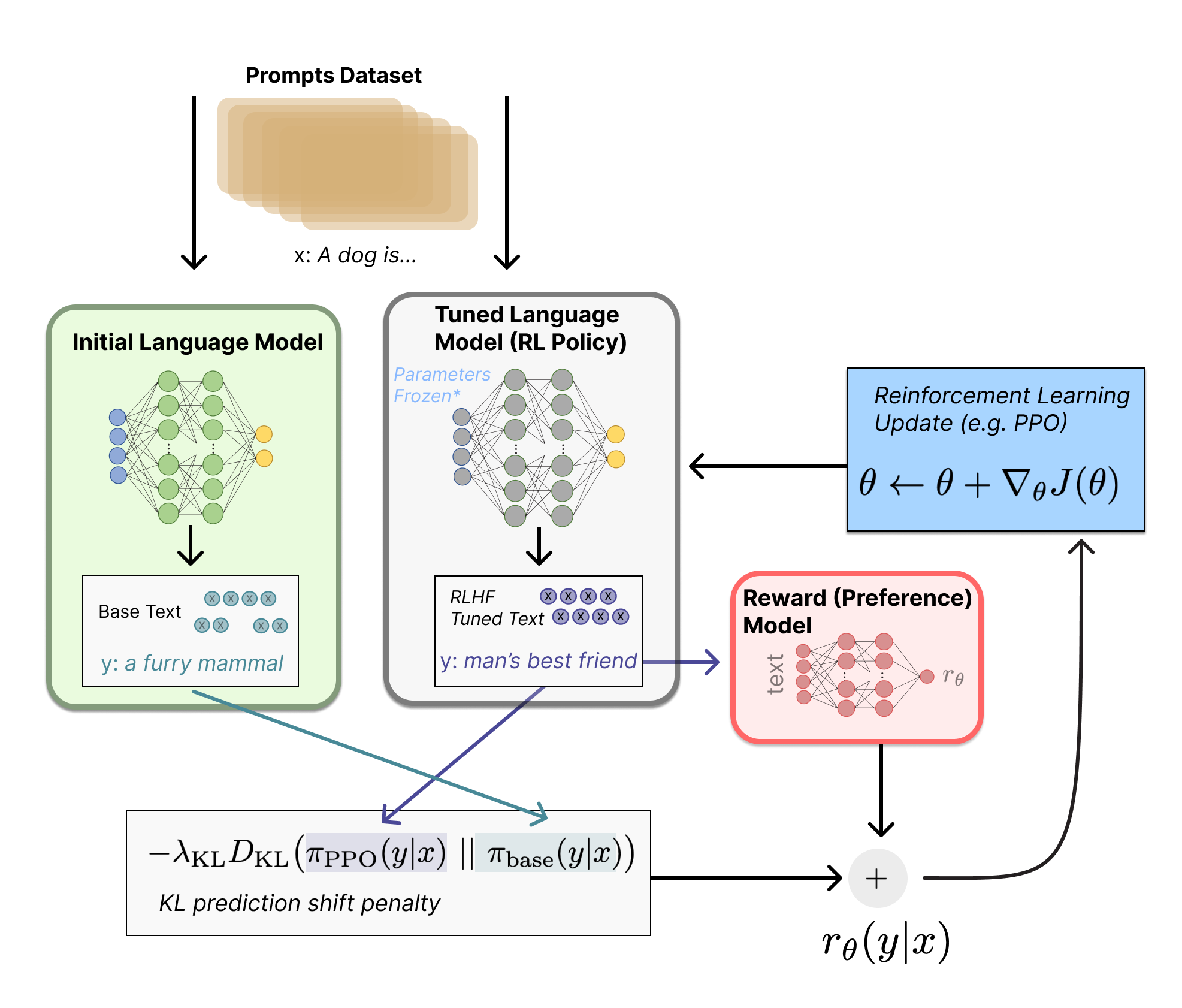

- Illustrating Reinforcement Learning from Human Feedback (RLHF) | N. Lambert, L. Castricato, L. von Werra, and A. Havrilla - Hugging Face

- Introduction to Reinforcement Learning with Human Feedback | Edwin Chen - Surge

- What is Reinforcement Learning with Human Feedback (RLHF)? | Michael Spencer

- Compendium of problems with RLHF | Raphael S - LessWrong

- Reinforcement Learning from Human Feedback(RLHF)-ChatGPT | Sthanikam Santhosh - Medium

- Learning through human feedback | Google DeepMind

- Paper Review: Summarization using Reinforcement Learning From Human Feedback | - Towards AI ... AI Alignment, Reinforcement Learning from Human Feedback, Proximal Policy Optimization (PPO)
