Difference between revisions of "Reinforcement Learning (RL) from Human Feedback (RLHF)"


| Line 41: | Line 41: | ||

Amidst the hype of ChatGPT, it can be easy to assume that the model can reason and think for itself. Here, we try to demystify how the model works, starting with a basic introduction to Transformers, and then showing how we can improve the model's output using Reinforcement Learning from Human Feedback (RLHF). | Amidst the hype of ChatGPT, it can be easy to assume that the model can reason and think for itself. Here, we try to demystify how the model works, starting with a basic introduction to Transformers, and then showing how we can improve the model's output using Reinforcement Learning from Human Feedback (RLHF). | ||

| − | + | [https://github.com/tanchongmin/TensorFlow-Implementations Slides and code here] | |

| − | + | [https://www.youtube.com/watch?v=iBamMr2WEsQ Transformer Introduction here] | |

References: | References: | ||

| − | Original Transformer Paper (Attention is all you need) | + | * [https://arxiv.org/pdf/1706.03762.pdf Original Transformer Paper (Attention is all you need)] |

| − | GPT Paper | + | * [https://arxiv.org/pdf/2005.14165.pdf GPT-3 Paper] |

| − | DialoGPT Paper (conversational AI by Microsoft) | + | * [https://arxiv.org/pdf/1911.00536.pdf DialoGPT Paper (conversational AI by Microsoft)] |

| − | InstructGPT Paper (with RLHF) | + | * [https://arxiv.org/pdf/2203.02155.pdf InstructGPT Paper (with RLHF)] |

| − | + | ||

| − | + | * [https://jalammar.github.io/illustrated-transformer/ Illustrated Transformer] | |

| + | * [https://jalammar.github.io/illustrated-gpt2/ Illustrated GPT-2] | ||

* 0:00 Introduction | * 0:00 Introduction | ||

Revision as of 09:01, 29 January 2023


- Reinforcement Learning (RL)

- ChatGPT

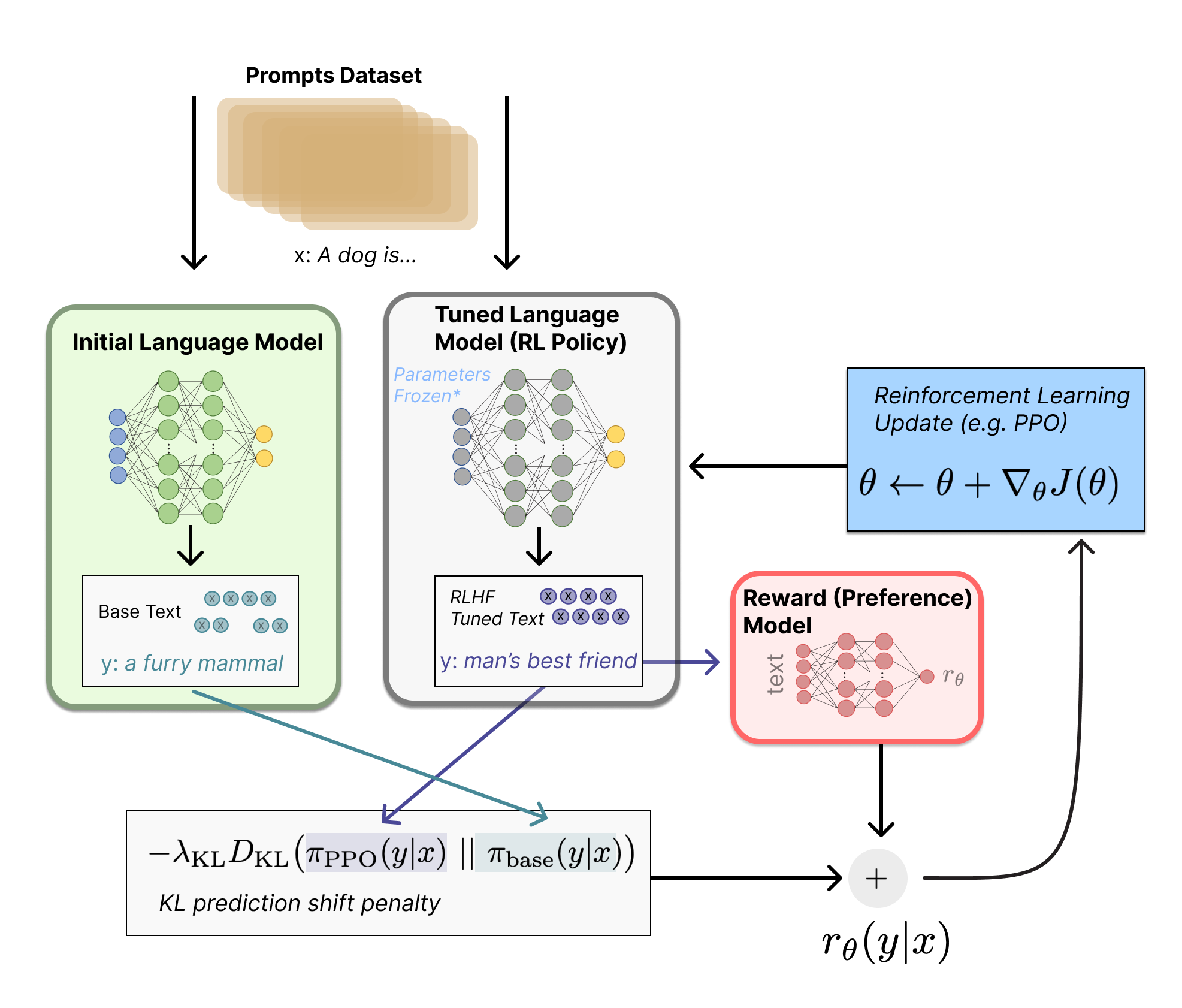

- Illustrating Reinforcement Learning from Human Feedback (RLHF) | N. Lambert, L. Castricato, L. von Werra, and A. Havrilla - Hugging Face
