Generative Pre-trained Transformer (GPT)


Suggested exercises:

- EX1: The n-dimensional tensor mastery challenge: Combine the `Head` and `MultiHeadAttention` into one class that processes all the heads in parallel, treating the heads as another batch dimension (answer is in nanoGPT).

- EX2: Train the GPT on your own dataset of choice! What other data could be fun to blabber on about? (A fun advanced suggestion if you like: train a GPT to do addition of two numbers, i.e. a+b=c. You may find it helpful to predict the digits of c in reverse order, as the typical addition algorithm (that you're hoping it learns) would proceed right to left too. You may want to modify the data loader to simply serve random problems and skip the generation of train.bin, val.bin. You may want to mask out the loss at the input positions of a+b that just specify the problem using y=-1 in the targets (see CrossEntropyLoss ignore_index). Does your Transformer learn to add? Once you have this, swole doge project: build a calculator clone in GPT, for all of +-*/. Not an easy problem. You may need Chain of Thought traces.)

- EX3: Find a dataset that is very large, so large that you can't see a gap between train and val loss. Pretrain the transformer on this data, then initialize with that model and finetune it on tiny shakespeare with a smaller number of steps and lower learning rate. Can you obtain a lower validation loss by the use of pretraining?

- EX4: Read some transformer papers and implement one additional feature or change that people seem to use. Does it improve the performance of your GPT?
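For EX2, the data side of the addition suggestion can be sketched without train.bin/val.bin. This is a minimal sketch and not the nanoGPT code. It assumes a character-level vocabulary of digits plus '+' and '='. It zero-pads a and b to a fixed width so every example has the same length, and writes the digits of c in reverse. Every target that only restates the problem is set to -1. The helper names (`make_addition_example`, `get_batch`) are hypothetical.

```python
import random

# Assumed character-level vocabulary for this sketch: digits plus '+' and '='.
VOCAB = "0123456789+="
STOI = {ch: i for i, ch in enumerate(VOCAB)}
ITOS = {i: ch for ch, i in STOI.items()}


def make_addition_example(ndigit, rng):
    """Build one random problem 'a+b=c' as (inputs, targets) token lists.

    a and b are zero-padded to ndigit digits; c is zero-padded to ndigit + 1
    digits and written in reverse (least significant digit first), matching
    the right-to-left order of the usual addition algorithm.
    """
    a = rng.randrange(10 ** ndigit)
    b = rng.randrange(10 ** ndigit)
    prompt = f"{a:0{ndigit}d}+{b:0{ndigit}d}="
    answer = f"{a + b:0{ndigit + 1}d}"[::-1]
    tokens = [STOI[ch] for ch in prompt + answer]

    # Next-token prediction: y[i] is the token that follows x[i].
    x = tokens[:-1]
    y = tokens[1:]

    # y[i] is tokens[i + 1]; it is part of the answer only when i + 1 >= len(prompt).
    # Earlier targets just restate the problem, so mask them with -1.
    for i in range(len(prompt) - 1):
        y[i] = -1
    return x, y


def get_batch(batch_size, ndigit, rng):
    """Serve a fresh batch of random problems instead of reading train.bin/val.bin."""
    pairs = [make_addition_example(ndigit, rng) for _ in range(batch_size)]
    xs = [x for x, _ in pairs]
    ys = [y for _, y in pairs]
    return xs, ys


if __name__ == "__main__":
    rng = random.Random(0)
    x, y = make_addition_example(3, rng)
    print("".join(ITOS[t] for t in x))
    print(y)
```

To train on these batches, the lists would be turned into `torch.long` tensors. The loss would then be computed with `F.cross_entropy(logits.view(-1, logits.size(-1)), targets.view(-1), ignore_index=-1)`. PyTorch's default `ignore_index` is -100, so -1 has to be passed explicitly. Reversing c lets the model emit the ones digit first, which is the digit it can compute with no pending carry.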

Chapters:

- 00:00:00 intro: ChatGPT, Transformers, nanoGPT, Shakespeare

baseline language modeling, code setup

- 00:07:52 reading and exploring the data

- 00:09:28 tokenization, train/val split

- 00:14:27 data loader: batches of chunks of data

- 00:22:11 simplest baseline: bigram language model, loss, generation

- 00:34:53 training the bigram model

- 00:38:00 port our code to a script

Building the "self-attention"

- 00:42:13 version 1: averaging past context with for loops, the weakest form of aggregation

- 00:47:11 the trick in self-attention: matrix multiply as weighted aggregation

- 00:51:54 version 2: using matrix multiply

- 00:54:42 version 3: adding softmax

- 00:58:26 minor code cleanup

- 01:00:18 positional encoding

- 01:02:00 THE CRUX OF THE VIDEO: version 4: self-attention

- 01:11:38 note 1: attention as communication

- 01:12:46 note 2: attention has no notion of space, operates over sets

- 01:13:40 note 3: there is no communication across batch dimension

- 01:14:14 note 4: encoder blocks vs. decoder blocks

- 01:15:39 note 5: attention vs. self-attention vs. cross-attention

- 01:16:56 note 6: "scaled" self-attention. why divide by sqrt(head_size)

Building the Transformer

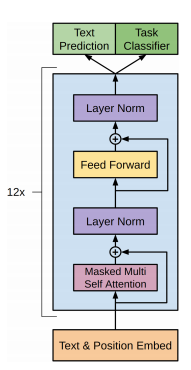

- 01:19:11 inserting a single self-attention block to our network

- 01:21:59 multi-headed self-attention

- 01:24:25 feedforward layers of transformer block

- 01:26:48 residual connections

- 01:32:51 layernorm (and its relationship to our previous batchnorm)

- 01:37:49 scaling up the model! creating a few variables. adding dropout

Notes on Transformer

- 01:42:39 encoder vs. decoder vs. both (?) Transformers

- 01:46:22 super quick walkthrough of nanoGPT, batched multi-headed self-attention

- 01:48:53 back to ChatGPT, GPT-3, pretraining vs. finetuning, RLHF

- 01:54:32 conclusions
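The chapters from version 1 to version 3 build up one idea. Each position's output is an average of itself and earlier positions, and a lower-triangular weight matrix turns that loop into a single matrix multiply. The sketch below checks that the three versions agree on a tiny example. It uses plain Python lists in place of the PyTorch tensors from the video, and all function names are illustrative assumptions.

```python
import math


def average_with_loops(x):
    """Version 1: out[t] is the mean of x[0..t] (a bag of words over the past)."""
    T, C = len(x), len(x[0])
    out = []
    for t in range(T):
        prev = x[: t + 1]
        out.append([sum(row[c] for row in prev) / (t + 1) for c in range(C)])
    return out


def matmul(a, b):
    """Plain matrix product of a (n x k) and b (k x m)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def averaging_weights_tril(T):
    """Version 2: lower-triangular weights whose rows each sum to 1."""
    return [[1.0 / (t + 1) if s <= t else 0.0 for s in range(T)] for t in range(T)]


def averaging_weights_softmax(T):
    """Version 3: all-zero affinities, future masked to -inf, then a row-wise softmax.

    In real self-attention (version 4) the zeros become data-dependent affinities.
    """
    wei = []
    for t in range(T):
        logits = [0.0 if s <= t else -math.inf for s in range(T)]
        m = max(logits)
        exps = [math.exp(v - m) for v in logits]  # exp(-inf) == 0.0
        z = sum(exps)
        wei.append([e / z for e in exps])
    return wei
```

Version 3 produces the same weights as version 2 here. It matters because the softmax form lets the uniform zeros be swapped for learned scores without changing the masking.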

Corrections:

- 00:57:00 Oops "tokens from the future cannot communicate", not "past". Sorry! :)

- 01:20:05 Oops, I should be using the head_size for the normalization, not C
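The two corrections above concern the core of single-head self-attention. The causal mask means a token aggregates only from itself and earlier tokens, so future tokens cannot communicate with the past. The affinities are divided by sqrt(head_size), not sqrt(C). If the query and key entries have roughly unit variance, their dot product has variance of about head_size. Dividing by sqrt(head_size) brings it back near 1, so the softmax does not saturate toward one-hot weights. This is a minimal pure-Python sketch. It assumes the query, key, and value vectors were already produced by the learned linear projections, which are omitted, and the function name is hypothetical.

```python
import math


def causal_self_attention(q, k, v):
    """Single-head scaled, causally masked self-attention over one sequence.

    q, k: lists of T vectors of length head_size; v: list of T value vectors.
    Position t only looks at positions s <= t, which is equivalent to setting
    the future affinities to -inf before the softmax.
    """
    T = len(q)
    head_size = len(q[0])
    scale = 1.0 / math.sqrt(head_size)  # normalize by head_size, not by C
    out = []
    for t in range(T):
        # Scaled affinities between query t and every key at or before t.
        scores = [sum(qi * ki for qi, ki in zip(q[t], k[s])) * scale for s in range(t + 1)]
        # Numerically stable softmax over the allowed positions.
        m = max(scores)
        exps = [math.exp(sc - m) for sc in scores]
        z = sum(exps)
        weights = [e / z for e in exps]
        # Weighted aggregation of the values.
        out.append([sum(w * v[s][j] for s, w in enumerate(weights)) for j in range(len(v[0]))])
    return out
```

Changing the value at the last position leaves all earlier outputs unchanged, which is exactly the "no communication from the future" property.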


GUEST BIO:

Andrej Karpathy is a legendary AI researcher, engineer, and educator. He's the former director of AI at Tesla, a founding member of OpenAI, and an educator at Stanford.

PODCAST INFO:

Revision as of 11:20, 28 January 2023


- Case Studies

- Text Transfer Learning

- Natural Language Generation (NLG)

- Natural Language Tools & Services

- Attention

- Generated Image

- OpenAI Blog | OpenAI

- Language Models are Unsupervised Multitask Learners | Alec Radford, Jeffrey Wu, Rewon Child, David Luan, Dario Amodei, and Ilya Sutskever

- Neural Monkey | Jindřich Libovický, Jindřich Helcl, Tomáš Musil ... Byte Pair Encoding (BPE) enables neural machine translation (NMT) models to handle open-vocabulary translation by encoding rare and unknown words as sequences of subword units.

- Attention Mechanism/Transformer Model

- Bidirectional Encoder Representations from Transformers (BERT)

- ELMo

- SynthPub

- Language Models are Unsupervised Multitask Learners - GitHub

- Microsoft Releases DialogGPT AI Conversation Model | Anthony Alford - InfoQ - trained on over 147M dialogs

- minGPT | Andrej Karpathy - GitHub

- SambaNova Systems ... Dataflow-as-a-Service GPT

- Facebook-owner Meta opens access to AI large language model | Elizabeth Culliford - Reuters ... Meta's 175-billion-parameter language model, Open Pretrained Transformer (OPT-175B)
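The Byte Pair Encoding entry above describes splitting rare and unknown words into subword units. Below is a minimal sketch of the usual merge-learning loop. It starts from characters plus an end-of-word marker (`</w>`, an assumption of this sketch) and repeatedly merges the most frequent adjacent pair. It then applies the learned merges in order to segment a new word. It is illustrative only: the GPT-2 tokenizer uses a byte-level BPE variant with its own rules, and the function names here are hypothetical.

```python
from collections import Counter


def get_pair_counts(vocab):
    """Count adjacent symbol pairs, weighted by how often each word occurs."""
    pairs = Counter()
    for symbols, freq in vocab.items():
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return pairs


def merge_pair(pair, vocab):
    """Replace every occurrence of `pair` in every word with one merged symbol."""
    merged = {}
    for symbols, freq in vocab.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged


def learn_bpe(word_counts, num_merges):
    """Learn up to num_merges merges from a {word: count} dictionary."""
    vocab = {tuple(word) + ("</w>",): freq for word, freq in word_counts.items()}
    merges = []
    for _ in range(num_merges):
        pairs = get_pair_counts(vocab)
        if not pairs:
            break
        # Ties go to the pair seen first (max keeps the first maximal key).
        best = max(pairs, key=pairs.get)
        vocab = merge_pair(best, vocab)
        merges.append(best)
    return merges, vocab


def encode(word, merges):
    """Segment a (possibly unseen) word by replaying the merges in order."""
    symbols = tuple(word) + ("</w>",)
    for pair in merges:
        symbols = next(iter(merge_pair(pair, {symbols: 1})))
    return symbols
```

For example, learning four merges from the counts {low: 5, lower: 2, newest: 6, widest: 3} yields e+s, es+t, est+</w>, and l+o. The word "lowest", which never appears in that data, is then segmented as lo / w / est</w>.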


ChatGPT


- ChatGPT | OpenAI

- Cybersecurity

- OpenAI invites everyone to test ChatGPT, a new AI-powered chatbot—with amusing results | Benj Edwards - Ars Technica ... ChatGPT aims to produce accurate and harmless talk—but it's a work in progress.

- How to use ChatGPT for language generation in Python in 2023? | John Williams - Its ChatGPT ... Examples ... How to earn money

ChatGPT generates human-like text, which lets it perform a wide range of natural language processing (NLP) tasks, including powering chatbots, automated writing, language translation, text summarization, and generating computer programs. OpenAI states that ChatGPT is a significant iterative step toward providing a safe AI model for everyone. ChatGPT interacts in a conversational way: the dialogue format makes it possible for ChatGPT to answer follow-up questions, admit its mistakes, challenge incorrect premises, and reject inappropriate requests. ChatGPT is a sibling model to InstructGPT, which is trained to follow an instruction in a prompt and provide a detailed response.


Let's build GPT: from scratch, in code, spelled out | Andrej Karpathy


Generative Pre-trained Transformer (GPT-3)

- Language Models are Few-Shot Learners | T. Brown, B. Mann, N. Ryder, M. Subbiah, J. Kaplan, P. Dhariwal, A. Neelakantan, P. Shyam, G. Sastry, A. Askell, S. Agarwal, A. Herbert-Voss, G. Krueger, T. Henighan, R. Child, A. Ramesh, D. Ziegler, J. Wu, C. Winter, C. Hesse, M. Chen, E. Sigler, M. Litwin, S. Gray, B. Chess, J. Clark, C. Berner, S. McCandlish, A. Radford, I. Sutskever, and D. Amodei - arXiv.org

- GPT-3: Demos, Use-cases, Implications | Simon O'Regan - Towards Data Science

- OpenAI API ...today the API runs models with weights from the GPT-3 family with many speed and throughput improvements.

- GPT-3 by OpenAI – Outlook and Examples | Praveen Govindaraj | Medium

- GPT-3 Creative Fiction | R. Gwern

Try...

- Sushant Kumar's micro-site - Replace your 'word' in the following URL to see what GPT-3 generates: https://thoughts.sushant-kumar.com/word

- Serendipity ...an AI-powered recommendation engine for anything you want.

- Taglines.ai ... just about every business has a tagline — a short, catchy phrase designed to quickly communicate what it is that they do.

- Simplify.so ...simple, easy-to-understand explanations for everything


GPT Impact on Development

- Development

- Will The Much-Hyped GPT-3 Impact The Coders? | Analytics India Magazine (https://analyticsindiamag.com/will-the-much-hyped-gpt-3-impact-the-coders/)

- With GPT-3, I built a layout generator where you just describe any layout you want, and it generates the JSX code for you. | Sharif Shameem - debuild


Generative Pre-trained Transformer (GPT-2)

- GitHub

- How to Get Started with OpenAI's GPT-2 for Text Generation | Amal Nair - Analytics India Magazine

- GPT-2: It learned on the Internet | Janelle Shane

- Too powerful NLP model (GPT-2): What is Generative Pre-Training | Edward Ma

- GPT-2: A nascent transfer learning method that could eliminate supervised learning in some NLP tasks | Ajit Rajasekharan - Medium

- OpenAI Creates Platform for Generating Fake News. Wonderful | Nick Kolakowski - Dice

- InferKit | Adam D King - completes your text.

Coding Train Late Night 2


r/SubSimulator

Subreddit populated entirely by AI personifications of other subreddits -- all posts and comments are generated automatically using:

results in coherent and realistic simulated content.

GetBadNews

- Get Bad News game - Can you beat my score? Play the fake news game! Drop all pretense of ethics and choose the path that builds your persona as an unscrupulous media magnate. Your task is to get as many followers as you can while