XGBoost; eXtreme Gradient Boosted trees


- AI Solver

- Capabilities

- Boosting

- Gradient Boosting Machine (GBM)

- Random Forest (or) Random Decision Forest

- Boosted Random Forest

- LightGBM ...Microsoft's tree-based gradient boosting framework

- How to Use XGBoost for Time Series Forecasting | Jason Brownlee - Machine Learning Mastery ...time series datasets can be transformed into supervised learning using a sliding-window representation.

- XGBoost's three main forms of gradient boosting:

- Gradient Boosting Machine (GBM) - at each iteration, fits a model that predicts the gradient, that is, the correction that will most improve the prediction function's results. The next iteration treats the initial values plus these corrections as its starting state and looks for the next gradient to improve the results even further. The process stops when the predictions match the real values or the number of iterations reaches a limit.

- Stochastic Gradient Boosting - applies a key insight from bagging ensembles and random forests: trees can be created greedily from random subsamples of the training dataset rather than from the full dataset.

- Regularized Gradient Boosting - regularization via shrinkage improves performance considerably. In combination with shrinkage, stochastic gradient boosting can produce more accurate models by reducing the variance via bagging.
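The three forms above can be illustrated with a minimal pure-Python sketch. It is not XGBoost's implementation: it assumes a squared-error loss, a single numeric feature, and one-split regression stumps as the weak learners. The names `fit_stump`, `boost`, `predict`, `learning_rate`, and `subsample` are hypothetical and chosen only for this illustration. Setting `subsample` below 1.0 gives the stochastic form, and setting `learning_rate` below 1.0 applies shrinkage.

```python
import random


def fit_stump(xs, targets):
    """Fit a one-split regression stump that minimises squared error."""
    mean = sum(targets) / len(targets)
    # Fallback: a constant prediction if no valid split exists.
    best_error, best_stump = float("inf"), (float("inf"), mean, mean)
    for threshold in sorted(set(xs)):
        left = [t for x, t in zip(xs, targets) if x <= threshold]
        right = [t for x, t in zip(xs, targets) if x > threshold]
        if not left or not right:
            continue
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = sum((t - left_mean) ** 2 for t in left)
        error += sum((t - right_mean) ** 2 for t in right)
        if error < best_error:
            best_error, best_stump = error, (threshold, left_mean, right_mean)
    return best_stump


def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value


def boost(xs, ys, n_rounds=50, learning_rate=0.3, subsample=0.8, seed=0):
    """Gradient boosting for squared error (illustrative assumptions only)."""
    rng = random.Random(seed)
    base = sum(ys) / len(ys)  # initial prediction: the mean of the targets
    preds = [base] * len(ys)
    stumps = []
    n_sample = max(2, int(subsample * len(xs)))
    for _ in range(n_rounds):
        # For squared error, the negative gradient is the residual y - f(x).
        residuals = [y - p for y, p in zip(ys, preds)]
        # Stochastic form: fit each stump on a random subsample of the rows.
        idx = rng.sample(range(len(xs)), n_sample)
        stump = fit_stump([xs[i] for i in idx], [residuals[i] for i in idx])
        stumps.append(stump)
        # Shrinkage: add only a fraction of each correction.
        preds = [p + learning_rate * predict_stump(stump, x)
                 for p, x in zip(preds, xs)]
    return {"base": base, "learning_rate": learning_rate, "stumps": stumps}


def predict(model, x):
    total = model["base"]
    for stump in model["stumps"]:
        total += model["learning_rate"] * predict_stump(stump, x)
    return total


def mse(ys, preds):
    return sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys)


# Toy data, made up for this example: y = x^2 on a small grid.
xs = [i / 10 for i in range(40)]
ys = [x * x for x in xs]

model = boost(xs, ys)
baseline_mse = mse(ys, [model["base"]] * len(ys))
final_mse = mse(ys, [predict(model, x) for x in xs])
print(f"baseline MSE: {baseline_mse:.3f}, boosted MSE: {final_mse:.3f}")
```

Calling `boost(xs, ys, learning_rate=1.0, subsample=1.0)` corresponds to the plain form, with neither shrinkage nor row subsampling.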

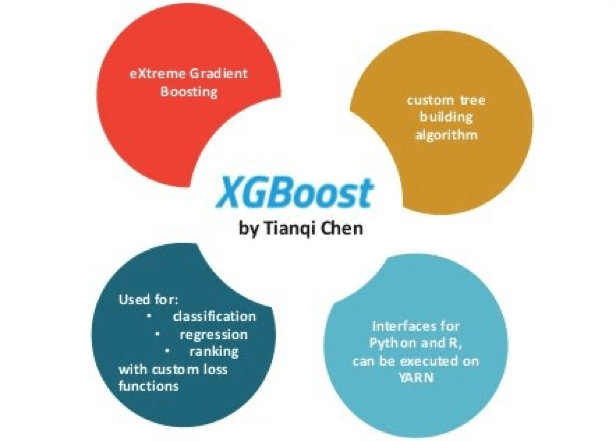

XGBoost stands for eXtreme Gradient Boosting. It was developed by Tianqi Chen and is now part of a wider collection of open-source libraries developed by the Distributed Machine Learning Community (DMLC). XGBoost is a scalable and accurate implementation of gradient boosting machines, built with model performance and computational speed as its main goals. Specifically, it was engineered to make the most of available memory and hardware resources for tree boosting algorithms. The implementation offers several advanced features for model tuning, computing environments and algorithm enhancement. It can perform the three main forms of gradient boosting (Gradient Boosting (GB), Stochastic GB and Regularized GB), and it supports fine-tuning and the addition of regularization parameters. According to Tianqi Chen, this support for regularization is what sets it apart from other libraries. XGBoost, a Top Machine Learning Method on Kaggle, Explained | Ilan Reinstein - KDnuggets
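As a rough illustration of how those three forms map onto the library's tuning parameters, the sketch below builds a parameter dictionary for the XGBoost Python package. The specific values are arbitrary assumptions rather than recommendations, and `PARAMS` and `train_booster` are hypothetical names used only here. The import sits inside the function so that the dictionary can be inspected without the `xgboost` package installed.

```python
# Illustrative values only; tune them for a real dataset.
PARAMS = {
    "objective": "reg:squarederror",  # squared-error regression
    "max_depth": 4,                   # depth limit for each tree
    # Regularized GB: shrinkage plus penalties on leaf weights.
    "eta": 0.1,                       # learning rate (shrinkage)
    "lambda": 1.0,                    # L2 regularization term
    "alpha": 0.0,                     # L1 regularization term
    # Stochastic GB: random subsampling for each tree.
    "subsample": 0.8,                 # fraction of rows
    "colsample_bytree": 0.8,          # fraction of columns
}


def train_booster(X, y, num_rounds=200):
    """Train a booster with PARAMS; requires the xgboost package."""
    import xgboost as xgb

    dtrain = xgb.DMatrix(X, label=y)
    return xgb.train(PARAMS, dtrain, num_boost_round=num_rounds)
```

With `subsample` and `colsample_bytree` set to 1.0 and `eta` set to 1.0, the configuration falls back toward plain gradient boosting, although the default L2 penalty still applies unless `lambda` is set to 0.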