Transformer-XL

Latest revision as of 13:38, 3 May 2023


- Attention Mechanism ...Transformer ...Generative Pre-trained Transformer (GPT) ... GAN ... BERT

- A Light Introduction to Transformer-XL | Elvis - Medium

- Transformer-XL Explained: Combining Transformers and RNNs into a State-of-the-art Language Model | Rani Horev - Towards Data Science

- Transformer-XL: Language Modeling with Longer-Term Dependency | Z. Dai, Z. Yang, Y. Yang, W.W. Cohen, J. Carbonell, Q.V. Le, and R. Salakhutdinov

- Large Language Model (LLM) ... Natural Language Processing (NLP) ...Generation ... Classification ... Understanding ... Translation ... Tools & Services

- Memory Networks

- Autoencoder (AE) / Encoder-Decoder

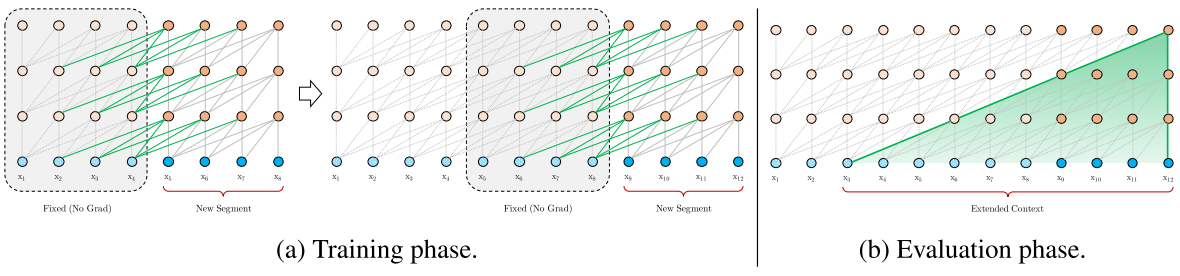

Transformer-XL combines the two leading architectures for language modeling:

- Recurrent Neural Network (RNN), which processes the input tokens (words or characters) one by one to learn the relationships between them

- Attention Mechanism/Transformer Model, which receives a whole segment of tokens and learns the dependencies between them all at once using an attention mechanism.
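Transformer-XL joins these two ideas through segment-level recurrence. The model processes the text one segment at a time with ordinary causal self-attention. The hidden states computed for the previous segment are cached as a "memory", and the keys and values of the current segment also cover that memory. The following is a minimal NumPy sketch of that mechanism and is not the authors' implementation. It assumes a single layer and a single attention head, and it omits the relative positional encodings that Transformer-XL also relies on. The helper names `attend_with_memory` and `run_segments` and the parameters `seg_len` and `mem_len` are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax: subtract the row maximum before exponentiating
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attend_with_memory(segment, memory, w_q, w_k, w_v):
    """Single-head causal self-attention over [memory; segment] (hypothetical helper).

    segment: (L, d) hidden states of the current segment
    memory:  (M, d) cached hidden states of earlier tokens (M may be 0)
    Returns: (L, d) outputs for the current segment only.
    """
    context = np.concatenate([memory, segment], axis=0)  # (M + L, d)
    q = segment @ w_q   # queries come from the current segment only
    k = context @ w_k   # keys and values also cover the cached memory
    v = context @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])  # (L, M + L)

    m, l = memory.shape[0], segment.shape[0]
    # Causal mask: segment token i sits at context index m + i, so it may
    # attend to every memory position and to segment positions up to itself.
    allowed = np.arange(m + l)[None, :] <= (m + np.arange(l))[:, None]
    scores = np.where(allowed, scores, -np.inf)
    return softmax(scores, axis=-1) @ v


def run_segments(x, seg_len, mem_len, w_q, w_k, w_v):
    """Process a long sequence segment by segment, carrying a memory forward (hypothetical helper)."""
    d = x.shape[1]
    memory = np.zeros((0, d))
    outputs = []
    for start in range(0, len(x), seg_len):
        segment = x[start:start + seg_len]
        outputs.append(attend_with_memory(segment, memory, w_q, w_k, w_v))
        # Cache this layer's inputs for the next segment, keeping at most
        # mem_len states. The paper stops gradients through the cache; NumPy
        # has no autograd, so there is nothing to stop here.
        cache = np.concatenate([memory, segment], axis=0)
        memory = cache[max(0, len(cache) - mem_len):]
    return np.concatenate(outputs, axis=0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    d = 8
    x = rng.normal(size=(12, d))  # 12 random vectors stand in for token embeddings
    w_q, w_k, w_v = (rng.normal(size=(d, d)) for _ in range(3))
    out = run_segments(x, seg_len=4, mem_len=4, w_q=w_q, w_k=w_k, w_v=w_v)
    print(out.shape)  # (12, 8)
```

This sketch has only one layer. If the memory is at least as long as all the text seen so far, the segmented output matches full causal attention over the whole sequence. With `mem_len=4`, only the previous segment is kept, which corresponds to the basic caching scheme described above. In the full multi-layer model, each layer keeps its own memory, so the context a token can reach grows with depth.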