Difference between revisions of "Transformer"

Revision as of 21:53, 13 January 2021


- Attention

- Generative Pre-trained Transformer (GPT)

- The Illustrated Transformer | Jay Alammar

- What is a Transformer? | Maxime Allard - Medium

- Sequence to Sequence (Seq2Seq) ----> Recurrent Neural Networks (RNN) ----> Transformer

- Bidirectional Encoder Representations from Transformers (BERT)

- Natural Language Processing (NLP)

- Memory Networks

- Google Transformer-XL ...T5-XXL ...Google trained a trillion-parameter AI language model | Kyle Wiggers - VB

- Transformers provides state-of-the-art general-purpose architectures (Bidirectional Encoder Representations from Transformers (BERT), Generative Pre-trained Transformer 2 (GPT-2), RoBERTa, XLM, DistilBERT, XLNet...) for Natural Language Understanding (NLU) and Natural Language Generation (NLG) with 32+ pretrained models in 100+ languages and deep interoperability between TensorFlow 2.0 and PyTorch. | GitHub

- Generative Modeling

- How do Transformers Work in NLP? A Guide to the Latest State-of-the-Art Models | Prateek Joshi - Analytics Vidhya

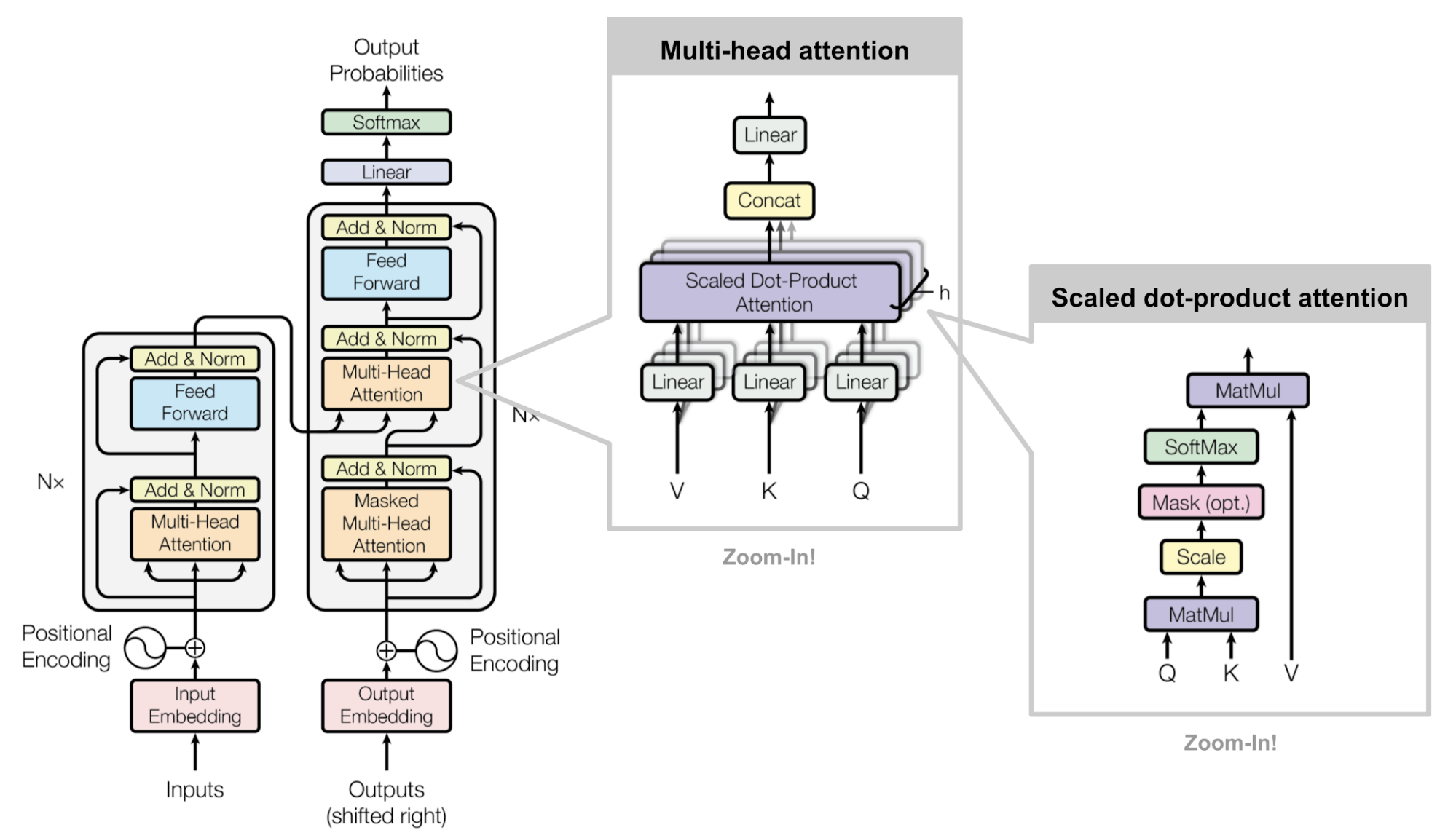

Transformer models uniquely have attention such that every output element is connected to every input element, and the weightings between them are calculated dynamically. | Kyle Wiggers

The dominant sequence transduction models are based on complex recurrent or convolutional neural networks in an Autoencoder (AE) / Encoder-Decoder configuration. The best performing models also connect the encoder and decoder through an attention mechanism. We propose a new simple network architecture, the Transformer, based solely on attention mechanisms, dispensing with recurrence and convolutions entirely. Experiments on two machine translation tasks show these models to be superior in quality while being more parallelizable and requiring significantly less time to train. | Attention Is All You Need | A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A.N. Gomez, L. Kaiser, and I. Polosukhin
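The idea that every output element is connected to every input element, with weightings calculated dynamically, can be illustrated with scaled dot-product attention. The following is a minimal sketch using only the Python standard library; the function names and the toy input values are assumptions made for illustration, and a real model would use learned projections and a tensor library.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights) for lists of vectors.

    weights[i][j] is how strongly output position i attends to input position j.
    """
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        w = softmax(scores)
        # Each output is a weighted average of all value vectors.
        out = [sum(wj * v[c] for wj, v in zip(w, values)) for c in range(len(values[0]))]
        weights.append(w)
        outputs.append(out)
    return outputs, weights

# Toy input: 3 positions with 2-dimensional vectors (made-up values).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = scaled_dot_product_attention(x, x, x)
```

Each row of `weights` sums to 1 and contains a strictly positive entry for every input position, which is the sense in which each output is connected to every input. The weights depend on the input itself rather than being fixed parameters.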

The Transformer is a deep learning model introduced in 2017, used primarily in the field of natural language processing (NLP). Like Recurrent Neural Networks (RNNs), Transformers are designed to handle ordered sequences of data, such as natural language, for tasks such as machine translation and text summarization. However, unlike RNNs, Transformers do not require that the sequence be processed in order. So, if the data in question is natural language, the Transformer does not need to process the beginning of a sentence before it processes the end. Due to this feature, the Transformer allows for much more parallelization than RNNs during training.

Since their introduction, Transformers have become the basic building block of most state-of-the-art architectures in Natural Language Processing (NLP), replacing gated recurrent neural network models such as the Long Short-Term Memory (LSTM) in many cases. Because the Transformer architecture facilitates more parallelization during training, it has enabled training on much more data than was possible before it was introduced. This led to the development of pretrained systems such as Bidirectional Encoder Representations from Transformers (BERT) and Generative Pre-trained Transformer 2 (GPT-2), which are trained on huge amounts of general language data before being released and can then be fine-tuned for specific language tasks. | Wikipedia
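The contrast with RNNs described above can be made concrete with a toy example. The sketch below is an illustration under simplifying assumptions: the inputs are scalars, the RNN weights `w_x` and `w_h` are made-up constants, and `attend` is a hypothetical helper that computes self-attention without learned projections. It is not how either model is implemented in practice.

```python
import math

def rnn_states(xs, w_x=0.5, w_h=0.9):
    """Toy scalar RNN: each state depends on the previous state,
    so the loop has to run from the first position to the last."""
    h, states = 0.0, []
    for x in xs:
        h = math.tanh(w_x * x + w_h * h)
        states.append(h)
    return states

def attend(i, xs):
    """Toy scalar self-attention output for position i.
    It reads only the inputs, never the outputs of other positions."""
    scores = [xs[i] * x for x in xs]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return sum(e / z * x for e, x in zip(exps, xs))

xs = [0.2, -1.0, 0.7, 1.5]

# Compute attention outputs front to back.
in_order = [attend(i, xs) for i in range(len(xs))]

# Compute the last position first; the results are the same because
# no position waits on another position's output.
by_index = {i: attend(i, xs) for i in reversed(range(len(xs)))}
out_of_order = [by_index[i] for i in range(len(xs))]
```

Because the calls to `attend` are independent, they could be dispatched to separate workers or computed as one batched matrix operation, whereas `rnn_states` must carry `h` from one step to the next.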

Tensor2Tensor (T2T) | Google Brain

- Tensor2Tensor Transformers: New Deep Models for NLP | Łukasz Kaiser

- Tensor2Tensor | GitHub

- Tensor2Tensor Library | GitHub

- Welcome to the Tensor2Tensor Colab

Tensor2Tensor, or T2T for short, is a library of deep learning models and datasets designed to make deep learning more accessible and [accelerate ML research](https://research.googleblog.com/2017/06/accelerating-deep-learning-research.html). T2T is actively used and maintained by researchers and engineers within the [Google Brain team](https://research.google.com/teams/brain/) and a community of users. This colab shows you some datasets we have in T2T, how to download and use them, some models we have, how to download pre-trained models and use them, and how to create and train your own models.

Multi-head scaled dot-product attention mechanism. (Image source: Fig 2 in Vaswani, et al., 2017)
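The multi-head mechanism named in the caption can be sketched as several scaled dot-product attention heads run on separately projected copies of the input, with their outputs concatenated and mixed by a final projection. The code below is a rough standard-library sketch: the helper names are hypothetical, the sizes are arbitrary, and the random matrices stand in for parameters that a real model would learn.

```python
import math
import random

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def attention(q, k, v):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]
    scores = matmul(q, k_t)
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)

def random_matrix(rows, cols, rng):
    """Placeholder for a learned weight matrix."""
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

def multi_head_attention(x, heads, w_o):
    """heads is a list of (W_q, W_k, W_v) projection triples;
    w_o mixes the concatenated head outputs."""
    head_outputs = [
        attention(matmul(x, w_q), matmul(x, w_k), matmul(x, w_v))
        for w_q, w_k, w_v in heads
    ]
    # Concatenate the head outputs along the feature dimension, per position.
    concat = [sum((h[i] for h in head_outputs), []) for i in range(len(x))]
    return matmul(concat, w_o)

rng = random.Random(0)
d_model, n_heads = 4, 2          # arbitrary toy sizes
d_head = d_model // n_heads
x = random_matrix(3, d_model, rng)   # 3 positions
heads = [
    tuple(random_matrix(d_model, d_head, rng) for _ in range(3))
    for _ in range(n_heads)
]
w_o = random_matrix(n_heads * d_head, d_model, rng)
y = multi_head_attention(x, heads, w_o)
```

The output `y` has one `d_model`-sized vector per input position, so blocks like this can be stacked. Splitting the model dimension across heads keeps the total cost close to that of a single full-width head while letting each head attend to different positions.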