Multiclassifiers; Ensembles and Hybrids; Bagging, Boosting, and Stacking


- Regularization

- Boosting

- Multiclassifiers; Ensembles and Hybrids; Bagging, Boosting, and Stacking

- Bagging - Bootstrap Aggregating

- Stacked Generalization (blending)

- Random Forest (or) Random Decision Forest

- The BigChaos Solution to the Netflix Grand Prize | Andreas Töscher and Michael Jahrer

- Boosting, Bagging, and Stacking — Ensemble Methods with sklearn and mlens | Robert R.F. DeFilippi - Medium

- Ensemble Averaging | Wikipedia

- AdaNet

_______________________________

The main causes of error in learning are noise, bias and variance. Ensemble methods help to reduce the bias and variance components; the noise component is irreducible. These methods are designed to improve the stability and the accuracy of Machine Learning algorithms. Combining multiple classifiers decreases variance, especially in the case of unstable classifiers, and may produce a more reliable classification than a single classifier. To use Bagging or Boosting you must select a base learner algorithm. For example, if we choose a classification tree, Bagging and Boosting would each consist of a pool of as many trees as we want. | Xristica, Quantdare @ KDnuggets
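A quick simulation makes the variance claim concrete. This is a minimal sketch under an idealised assumption: each model's prediction is the true value plus independent Gaussian noise, which real classifiers trained on the same data never fully satisfy. The function `simulate` and its parameter values are hypothetical.

```python
import random
import statistics

def simulate(n_models, n_trials=2000, sigma=1.0, seed=0):
    """Compare the variance of one noisy predictor with that of an N-model average.

    Idealised (hypothetical) assumption: every model outputs true_value + Gaussian
    noise, and the noise is independent across models. Real ensemble members are
    correlated, so the reduction in practice is smaller.
    """
    rng = random.Random(seed)
    true_value = 0.0
    single, averaged = [], []
    for _ in range(n_trials):
        preds = [true_value + rng.gauss(0.0, sigma) for _ in range(n_models)]
        single.append(preds[0])                 # one model on its own
        averaged.append(sum(preds) / n_models)  # the ensemble's averaged prediction
    return statistics.pvariance(single), statistics.pvariance(averaged)

v_single, v_avg = simulate(n_models=10)
print(f"single model: {v_single:.3f}, 10-model average: {v_avg:.3f}")
```

With fully independent noise the averaged variance comes out close to one tenth of the single-model variance; correlation between ensemble members shrinks that gain.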

- Multiclassifiers - a set of hundreds or thousands of learners with a common objective are fused together to solve the problem. Ensemble and Hybrid methods are subclasses of multiclassifiers.

- Ensemble methods - train multiple models using the same learning algorithm (e.g., a pool of trees). Multiple classifiers try to fit the training set to approximate the target function. Since each classifier produces its own output, a combining mechanism is needed to merge the results: voting (majority wins), weighted voting (some classifiers have more authority than others), averaging the results, etc.

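- Bagging (Bootstrap Aggregation) - each of the N learners is trained on a new data set drawn with replacement (a bootstrap sample) from the training data, so any element has the same probability of appearing in a new data set; the result is obtained by averaging the responses of the N learners (or by majority vote).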

- Boosting - builds new learners sequentially; each classifier is trained on the data while taking into account the previous classifiers' success. Observations are weighted, so some of them take part in the new sets more often, and after each training step the weights are redistributed (misclassified observations gain weight). Boosting also assigns a second set of weights, this time to the N classifiers, in order to take a weighted average of their estimates.

- Hybrid Methods - take a set of different learners and combine them using new learning techniques.
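The combining mechanisms listed under Ensemble methods (majority vote, weighted vote, averaging) fit in a few lines of Python. The function names and the example weights below are hypothetical; ties in `majority_vote` go to the label seen first, which is an arbitrary choice.

```python
from collections import Counter, defaultdict
from typing import Hashable, Sequence

def majority_vote(labels: Sequence[Hashable]) -> Hashable:
    """Label predicted by the most classifiers (ties: first label seen wins)."""
    return Counter(labels).most_common(1)[0][0]

def weighted_vote(labels: Sequence[Hashable], weights: Sequence[float]) -> Hashable:
    """Each classifier's vote counts with its weight, i.e. its 'authority'."""
    scores = defaultdict(float)
    for label, w in zip(labels, weights):
        scores[label] += w
    return max(scores, key=scores.get)

def average(outputs: Sequence[float]) -> float:
    """Mean of numeric outputs, e.g. regression values or class probabilities."""
    return sum(outputs) / len(outputs)

votes = ["spam", "ham", "spam"]
print(majority_vote(votes))                   # spam
print(weighted_vote(votes, [0.2, 0.9, 0.3]))  # ham: 0.9 outweighs 0.2 + 0.3
print(average([0.7, 0.4, 0.9]))
```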
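Bagging can be sketched from scratch, assuming a toy 1-D binary problem and a decision stump (a single-threshold rule) as the base learner; `fit_stump`, `bagging_fit` and `bagging_predict` are hypothetical names, and the data are made up. Each stump is fit on a bootstrap sample of the same size as the training set, drawn with replacement, and the stumps' predictions are combined by majority vote. Library implementations such as scikit-learn's `BaggingClassifier` do the same job with many more options.

```python
import random
from collections import Counter

def fit_stump(xs, ys):
    """Decision stump on 1-D data with 0/1 labels: predict `above` if x > t, else 1 - above.

    Every observed x is tried as the threshold t; the rule with the fewest errors wins.
    """
    best = None
    for t in sorted(set(xs)):
        for above in (0, 1):
            errors = sum((above if x > t else 1 - above) != y for x, y in zip(xs, ys))
            if best is None or errors < best[0]:
                best = (errors, t, above)
    _, t, above = best
    return lambda x: above if x > t else 1 - above

def bagging_fit(xs, ys, n_estimators=25, seed=0):
    """Train n_estimators stumps, each on its own bootstrap sample."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_estimators):
        idx = [rng.randrange(n) for _ in range(n)]  # n uniform draws with replacement
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return models

def bagging_predict(models, x):
    """Majority vote over the stumps' 0/1 predictions."""
    return Counter(m(x) for m in models).most_common(1)[0][0]

# Made-up data: class 1 tends to have larger x; x = 2.5 and x = 3.0 are "noisy".
xs = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0, 4.5, 5.0]
ys = [0, 0, 0, 0, 1, 0, 1, 1, 1, 1]
models = bagging_fit(xs, ys)
print([bagging_predict(models, x) for x in xs])
```

Because each stump sees a different resample, the noisy points pull individual thresholds in different directions, and the vote smooths those differences out.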
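Boosting's two sets of weights are easiest to see in AdaBoost, one specific boosting algorithm used here as a concrete instance (the text above does not name a variant). The sketch assumes labels in {-1, +1}, made-up 1-D data and weighted decision stumps as the base learner; all function names are hypothetical. After every round, misclassified observations gain weight, and each stump receives a weight `alpha` that is used in the final weighted vote.

```python
import math

def fit_weighted_stump(xs, ys, w):
    """Pick the threshold stump with the lowest weighted error (labels are -1/+1)."""
    best = None
    for t in sorted(set(xs)):
        for sign in (1, -1):
            err = sum(wi for x, y, wi in zip(xs, ys, w)
                      if (sign if x > t else -sign) != y)
            if best is None or err < best[0]:
                best = (err, t, sign)
    err, t, sign = best
    return err, (lambda x: sign if x > t else -sign)

def adaboost_fit(xs, ys, n_rounds=3):
    n = len(xs)
    w = [1.0 / n] * n          # first set of weights: one per observation
    ensemble = []              # second set of weights: one alpha per classifier
    for _ in range(n_rounds):
        err, stump = fit_weighted_stump(xs, ys, w)
        err = min(max(err, 1e-10), 1 - 1e-10)     # guard against log(0) / division by zero
        alpha = 0.5 * math.log((1 - err) / err)   # lower error -> more authority
        ensemble.append((alpha, stump))
        # Redistribute: misclassified points (y * h(x) == -1) gain weight, the rest lose it.
        w = [wi * math.exp(-alpha * y * stump(x)) for x, y, wi in zip(xs, ys, w)]
        total = sum(w)
        w = [wi / total for wi in w]
    return ensemble

def adaboost_predict(ensemble, x):
    """Sign of the alpha-weighted sum of the stumps' outputs."""
    score = sum(alpha * stump(x) for alpha, stump in ensemble)
    return 1 if score >= 0 else -1

# Made-up data: no single threshold separates the two classes.
xs = [1, 2, 3, 4, 5, 6]
ys = [1, 1, -1, -1, 1, 1]
for rounds in (1, 3):
    model = adaboost_fit(xs, ys, n_rounds=rounds)
    print(rounds, [adaboost_predict(model, x) for x in xs])
# 1 [1, 1, -1, -1, -1, -1]
# 3 [1, 1, -1, -1, 1, 1]
```

One stump alone misclassifies the last two points; after three rounds the reweighted stumps jointly fit all six.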

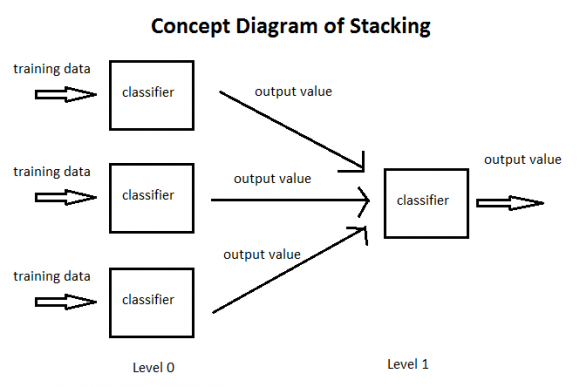

- Stacking (or blending, or Stacked Generalization) - the combining mechanism is that the outputs of the classifiers (Level 0 classifiers) are used as training data for another classifier (Level 1 classifier) that approximates the same target function. Basically, you let the Level 1 classifier figure out the combining mechanism. The Level 0 classifiers are trained using different learning techniques, and a final system is created by integrating the pieces. This method of diversification is one of the most convenient practices: divide the decision among several systems in order to avoid putting all your eggs in one basket. Dream team: combining classifiers | Xristica, Quantdare @ KDnuggets

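Stacking can be sketched with three different Level 0 techniques (a threshold stump, 1-nearest-neighbour and nearest class mean) and a deliberately simple Level 1 classifier: a lookup table that maps each pattern of Level 0 outputs to the label most often seen with it. Everything here is a hypothetical toy on made-up data; logistic regression is a more common Level 1 choice, and scikit-learn offers `StackingClassifier`. The Level 1 training data are out-of-fold predictions, so each Level 0 output comes from a model that never saw that point, which keeps the Level 1 classifier from learning to trust overfit Level 0 outputs.

```python
from collections import Counter, defaultdict

# --- Level 0: three different (toy) learning techniques on 1-D data, labels 0/1 ---
def fit_stump(xs, ys):
    """Threshold rule: predict `above` if x > t else 1 - above; fewest errors wins."""
    best = None
    for t in sorted(set(xs)):
        for above in (0, 1):
            errors = sum((above if x > t else 1 - above) != y for x, y in zip(xs, ys))
            if best is None or errors < best[0]:
                best = (errors, t, above)
    _, t, above = best
    return lambda x: above if x > t else 1 - above

def fit_1nn(xs, ys):
    """1-nearest-neighbour: copy the label of the closest training point."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]

def fit_centroid(xs, ys):
    """Nearest class mean."""
    means = {c: sum(x for x, y in zip(xs, ys) if y == c) / ys.count(c) for c in set(ys)}
    return lambda x: min(means, key=lambda c: abs(means[c] - x))

LEVEL0 = [fit_stump, fit_1nn, fit_centroid]

def out_of_fold_features(xs, ys, k=3):
    """Level 0 outputs for every training point, from models that never saw that point."""
    meta = [None] * len(xs)
    for fold in range(k):
        train = [i for i in range(len(xs)) if i % k != fold]
        models = [fit([xs[i] for i in train], [ys[i] for i in train]) for fit in LEVEL0]
        for i in range(fold, len(xs), k):   # the held-out points of this fold
            meta[i] = tuple(m(xs[i]) for m in models)
    return meta

# --- Level 1: learns how to combine the Level 0 outputs ---
def fit_level1(meta, ys):
    """Lookup table: most frequent true label for each pattern of Level 0 outputs."""
    table = defaultdict(Counter)
    for pattern, y in zip(meta, ys):
        table[pattern][y] += 1
    rules = {p: c.most_common(1)[0][0] for p, c in table.items()}
    # Patterns never seen in training fall back to a plain majority vote.
    return lambda pattern: rules.get(pattern, Counter(pattern).most_common(1)[0][0])

def stacking_fit(xs, ys, k=3):
    level1 = fit_level1(out_of_fold_features(xs, ys, k), ys)
    level0 = [fit(xs, ys) for fit in LEVEL0]   # refit Level 0 models on all the data
    return lambda x: level1(tuple(m(x) for m in level0))

xs = [1, 2, 3, 4, 5, 6, 7, 8, 9]   # made-up toy data
ys = [0, 0, 0, 0, 1, 1, 1, 1, 1]
predict = stacking_fit(xs, ys)
print([predict(x) for x in (0, 10)])  # [0, 1]
```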

Is Bagging meta-learning? And Boosting? And Stacking?

Even simple model ensembling methods such as boosting or stacking can be considered meta-learning methods. There are many ensembling approaches, and these can be combined even further in a hierarchical manner resembling structures in the human brain. The proper topology for a given problem is particularly important when you evaluate machine learning models by multiple criteria, the most important of which is generalization performance. Why Meta-learning is Crucial for Further Advances of Artificial Intelligence? | Pavel Kordik