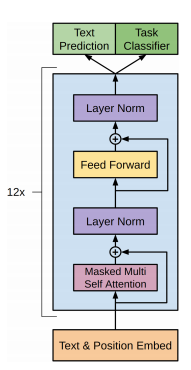

Generative Pre-trained Transformer (GPT-3)

- Language Models are Few-Shot Learners | T. Brown, B. Mann, N. Ryder, M. Subbiah, J. Kaplan, P. Dhariwal, A. Neelakantan, P. Shyam, G. Sastry, A. Askell, S. Agarwal, A. Herbert-Voss, G. Krueger, T. Henighan, R. Child, A. Ramesh, D. Ziegler, J. Wu, C. Winter, C. Hesse, M. Chen, E. Sigler, M. Litwin, S. Gray, B. Chess, J. Clark, C. Berner, S. McCandlish, A. Radford, I. Sutskever, and D. Amodei - arXiv.org

- GPT-3: Demos, Use-cases, Implications | Simon O'Regan - Towards Data Science

- OpenAI API ...today the API runs models with weights from the GPT-3 family with many speed and throughput improvements.

- GPT-3 by OpenAI – Outlook and Examples | Praveen Govindaraj | Medium

- GPT-3 Creative Fiction | Gwern Branwen

Try...

- Serendipity ...an AI-powered recommendation engine for anything you want.

- Taglines.ai ...just about every business has a tagline: a short, catchy phrase designed to quickly communicate what it is that they do.

- Simplify.so ...simple, easy-to-understand explanations for everything

GPT Impact on Development

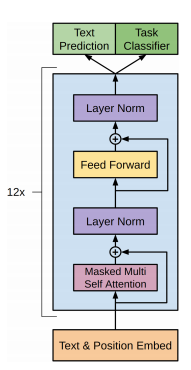

Generative Pre-trained Transformer (GPT-2)

...a text-generating bot based on a model with 1.5 billion parameters. ...Ultimately, OpenAI's researchers kept the full thing to themselves, only releasing a pared-down 117 million parameter version of the model (which we have dubbed "GPT-2 Junior") as a safer demonstration of what the full GPT-2 model could do.

Twenty minutes into the future with OpenAI's Deep Fake Text AI | Sean Gallagher

Coding Train Late Night 2

r/SubSimulator

Subreddit populated entirely by AI personifications of other subreddits; all posts and comments are generated automatically using:

results in coherent and realistic simulated content.

GetBadNews

- Get Bad News game - Can you beat my score? Play the fake news game! Drop all pretense of ethics and choose the path that builds your persona as an unscrupulous media magnate. Your task is to get as many followers as you can while