Continuous Bag-of-Words (CBoW)

[http://www.google.com/search?q=Continuous+Bag+Words+cbow+nlp+natural+language ...Google search]

* [[Bag-of-Words (BoW)]]
* [[Natural Language Processing (NLP)]]
* [[Word2Vec]]

Revision as of 11:03, 18 July 2019

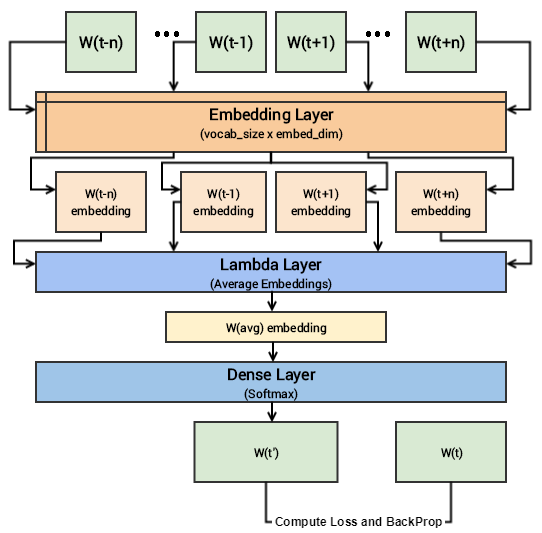

The CBOW model architecture tries to predict the current target word (the center word) from the source context words (the surrounding words). Consider the simple sentence “the quick brown fox jumps over the lazy dog”. With a context window of size 2 (one word on each side of the target), the training data are (context_window, target_word) pairs such as ([quick, fox], brown), ([the, brown], quick), ([the, dog], lazy), and so on. The model thus learns to predict target_word from the words in context_window.

A hands-on intuitive approach to Deep Learning Methods for Text Data — Word2Vec, GloVe and FastText | Dipanjan Sarkar - Towards Data Science
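The pairing and prediction steps described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the cited article's code or any library's API: the helpers `cbow_pairs`, `cbow_forward`, `train_step` and `mean_loss` are hypothetical names, `window` counts words on each side of the target, the context vectors are averaged into one hidden vector, and a full softmax over a tiny vocabulary is used. Production Word2Vec implementations instead rely on negative sampling or hierarchical softmax, plus details such as subsampling that are omitted here.

```python
import math
import random


def cbow_pairs(tokens, window=1):
    """Build (context_words, target_word) pairs for CBOW training.

    Hypothetical helper. `window` counts words on EACH side of the target, so
    window=1 yields the two-word contexts from the example sentence. Targets at
    the sentence edges get a shorter context (one of several possible conventions).
    """
    pairs = []
    for i, target in enumerate(tokens):
        context = tokens[max(0, i - window):i] + tokens[i + 1:i + 1 + window]
        pairs.append((context, target))
    return pairs


def cbow_forward(context, vocab, emb_in, emb_out):
    """Average the context embeddings, score every vocabulary word, apply softmax."""
    dim = len(emb_in[context[0]])
    hidden = [sum(emb_in[w][d] for w in context) / len(context) for d in range(dim)]
    scores = [sum(h * o for h, o in zip(hidden, emb_out[w])) for w in vocab]
    top = max(scores)  # subtract the max for a numerically stable softmax
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    probs = {w: e / total for w, e in zip(vocab, exps)}
    return hidden, probs


def train_step(context, target, vocab, emb_in, emb_out, lr=0.1):
    """One SGD step on the cross-entropy loss -log p(target | context)."""
    hidden, probs = cbow_forward(context, vocab, emb_in, emb_out)
    dim = len(hidden)
    grad_hidden = [0.0] * dim
    for w in vocab:
        err = probs[w] - (1.0 if w == target else 0.0)  # dLoss/dScore for word w
        for d in range(dim):
            grad_hidden[d] += err * emb_out[w][d]  # read emb_out before updating it
            emb_out[w][d] -= lr * err * hidden[d]
    for w in context:  # hidden is a mean, so each context vector gets grad / len
        for d in range(dim):
            emb_in[w][d] -= lr * grad_hidden[d] / len(context)
    return -math.log(probs[target])


def mean_loss(pairs, vocab, emb_in, emb_out):
    """Average cross-entropy over all (context, target) pairs."""
    return sum(-math.log(cbow_forward(c, vocab, emb_in, emb_out)[1][t])
               for c, t in pairs) / len(pairs)


tokens = "the quick brown fox jumps over the lazy dog".split()
pairs = cbow_pairs(tokens, window=1)
print(pairs[2])  # (['quick', 'fox'], 'brown')

vocab = sorted(set(tokens))
rng = random.Random(0)
DIM = 8  # embedding size, chosen arbitrarily for this demo
emb_in = {w: [rng.uniform(-0.5, 0.5) for _ in range(DIM)] for w in vocab}
emb_out = {w: [rng.uniform(-0.5, 0.5) for _ in range(DIM)] for w in vocab}

before = mean_loss(pairs, vocab, emb_in, emb_out)
for _ in range(300):
    for context, target in pairs:
        train_step(context, target, vocab, emb_in, emb_out)
after = mean_loss(pairs, vocab, emb_in, emb_out)
print(f"mean loss before: {before:.3f}, after: {after:.3f}")
```

Training only raises p(target | context) on the nine pairs from the sentence. In Word2Vec-style models, the learned rows of the input matrix (`emb_in` here) are what is typically kept as the word vectors.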