Difference between revisions of "Cross-Entropy Loss"

m (Text replacement - "http://" to "https://")


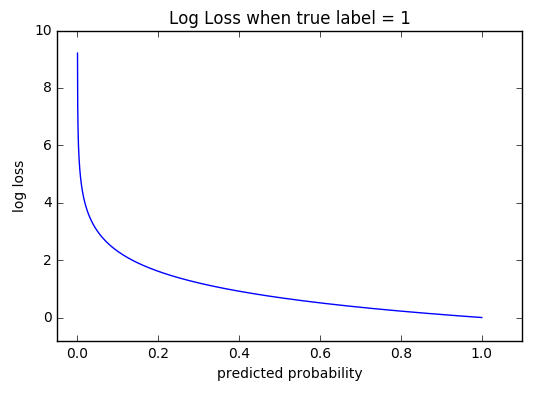


Revision as of 06:48, 28 March 2023


Cross-entropy loss, or log loss, measures the performance of a classification model whose output is a probability value between 0 and 1. The loss increases as the predicted probability diverges from the actual label: a confident prediction for the wrong class is penalized heavily, while a perfect model has a log loss of 0. [https://ml-cheatsheet.readthedocs.io/en/latest/loss_functions.html]
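As a rough illustration of how the loss grows when the predicted probability moves away from the label, the sketch below computes the binary log loss -(y log(p) + (1 - y) log(1 - p)) for a single example. The function name `binary_cross_entropy`, the `eps` clipping value, and the sample probabilities are assumptions chosen for this example, not part of the original article.

```python
import math

def binary_cross_entropy(y_true, p_pred, eps=1e-15):
    """Log loss for one example.

    y_true is the actual label (0 or 1); p_pred is the predicted
    probability that the label is 1.
    """
    # Clip the probability so that log(0) is never evaluated.
    p = min(max(p_pred, eps), 1.0 - eps)
    return -(y_true * math.log(p) + (1 - y_true) * math.log(1.0 - p))

# Actual label is 1 in both cases; only the predicted probability differs.
confident_right = binary_cross_entropy(1, 0.9)    # prediction close to the label
confident_wrong = binary_cross_entropy(1, 0.012)  # prediction far from the label

print(f"p=0.9   -> loss {confident_right:.4f}")
print(f"p=0.012 -> loss {confident_wrong:.4f}")
```

The second call returns a much larger value than the first, which matches the statement that the loss increases as the prediction diverges from the actual label.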

Cross-entropy loss is one of the most widely used loss functions in classification. In face recognition tasks, it is described as an effective method to eliminate outliers. [https://arxiv.org/pdf/1904.09523.pdf Neural Architecture Search for Deep Face Recognition | Ning Zhu]
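For classification with more than two classes, cross-entropy is commonly paired with a softmax over the model's raw scores, and the loss is the negative log of the probability assigned to the true class. The following is a minimal sketch under that assumption; the helper names `softmax` and `categorical_cross_entropy` and the example scores are hypothetical and are not taken from the cited paper.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    # Subtract the maximum score for numerical stability.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def categorical_cross_entropy(logits, true_class):
    """Negative log-probability that softmax assigns to the true class."""
    probs = softmax(logits)
    return -math.log(probs[true_class])

scores = [2.0, 0.5, 0.1]  # hypothetical scores for three classes

loss_correct = categorical_cross_entropy(scores, 0)  # true class has the highest score
loss_wrong = categorical_cross_entropy(scores, 2)    # true class has the lowest score

print(f"true class 0 -> loss {loss_correct:.4f}")
print(f"true class 2 -> loss {loss_wrong:.4f}")
```

The loss is smaller when the true class receives the highest score and larger when it receives the lowest, which is the same behavior as in the two-class case.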