Difference between revisions of "Generative Pre-trained Transformer (GPT)"

Line 14:

* [http://neural-monkey.readthedocs.io/en/latest/machine_translation.html Neural Monkey | Jindřich Libovický, Jindřich Helcl, Tomáš Musil] Byte Pair Encoding (BPE) enables NMT model translation on open-vocabulary by encoding rare and unknown words as sequences of subword units.

* [http://towardsdatascience.com/too-powerful-nlp-model-generative-pre-training-2-4cc6afb6655 Too powerful NLP model (GPT-2): What is Generative Pre-Training | Edward Ma]

+ * [[BERT]]

a text-generating bot based on a model with 1.5 billion parameters. ...Ultimately, OpenAI's researchers kept the full thing to themselves, only releasing a pared-down 117 million parameter version of the model (which we have dubbed "GPT-2 Junior") as a safer demonstration of what the full GPT-2 model could do.[http://arstechnica.com/information-technology/2019/02/researchers-scared-by-their-own-work-hold-back-deepfakes-for-text-ai/ Twenty minutes into the future with OpenAI’s Deep Fake Text AI | Sean Gallagher]

Revision as of 22:26, 27 February 2019

YouTube search... ...Google search

- Natural Language Generation (NLG)

- (117M parameter) version of GPT-2 | GitHub

- GPT-2: It learned on the Internet | Janelle Shane

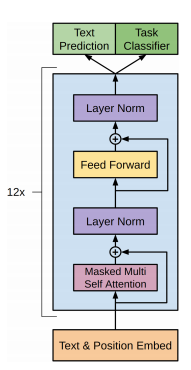

- Attention Mechanism/Model - Transformer Model

- Neural Monkey | Jindřich Libovický, Jindřich Helcl, Tomáš Musil: Byte Pair Encoding (BPE) enables open-vocabulary NMT translation by encoding rare and unknown words as sequences of subword units.

- Too powerful NLP model (GPT-2): What is Generative Pre-Training | Edward Ma

- BERT
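
The Byte Pair Encoding idea mentioned in the Neural Monkey entry above can be illustrated with a small sketch. This is not Neural Monkey's or GPT-2's actual tokenizer; it is a minimal character-level version that assumes a hypothetical toy corpus (`toy_corpus`) and hypothetical helper names (`learn_bpe`, `merge_pair`, `encode`). It repeatedly merges the most frequent adjacent symbol pair, so a word never seen in training can still be split into known subword units.

```python
from collections import Counter


def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of `pair` in `symbols` with one merged symbol."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def learn_bpe(word_freqs, num_merges):
    """Learn an ordered list of merges from a {word: count} dictionary."""
    # Each word starts as its characters plus an end-of-word marker.
    vocab = {tuple(word) + ("</w>",): freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        pairs = Counter()
        for symbols, freq in vocab.items():
            for a, b in zip(symbols, symbols[1:]):
                pairs[(a, b)] += freq
        if not pairs:
            break
        # Most frequent pair; ties are broken by the pair itself so the result is deterministic.
        best = max(pairs, key=lambda p: (pairs[p], p))
        merges.append(best)
        vocab = {merge_pair(symbols, best): freq for symbols, freq in vocab.items()}
    return merges


def encode(word, merges):
    """Split a (possibly unseen) word into subword units by replaying the learned merges."""
    symbols = tuple(word) + ("</w>",)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)


# Hypothetical toy corpus: word -> frequency (an assumption for illustration only).
toy_corpus = {"low": 5, "lower": 2, "newest": 6, "widest": 3}
merges = learn_bpe(toy_corpus, num_merges=5)

# "lowest" never appears in the corpus, yet it is covered by learned subword units.
print(encode("lowest", merges))
```

Real tokenizers learn tens of thousands of merges from large corpora, and GPT-2 applies the idea to bytes rather than characters, but the merge loop has the same shape.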

a text-generating bot based on a model with 1.5 billion parameters. ... Ultimately, OpenAI's researchers kept the full thing to themselves, only releasing a pared-down 117 million parameter version of the model (which we have dubbed "GPT-2 Junior") as a safer demonstration of what the full GPT-2 model could do. Twenty minutes into the future with OpenAI’s Deep Fake Text AI | Sean Gallagher

- Get Bad News game - Can you beat my score? Play the fake news game! Drop all pretense of ethics and choose the path that builds your persona as an unscrupulous media magnate. Your task is to get as many followers as you can while