Difference between revisions of "Gradient Descent Optimization & Challenges"


Revision as of 22:59, 16 February 2019


- Gradient Boosting Algorithms

- Backpropagation

- Objective vs. Cost vs. Loss vs. Error Function

- Topology and Weight Evolving Artificial Neural Network (TWEANN)

- Other Challenges in Artificial Intelligence

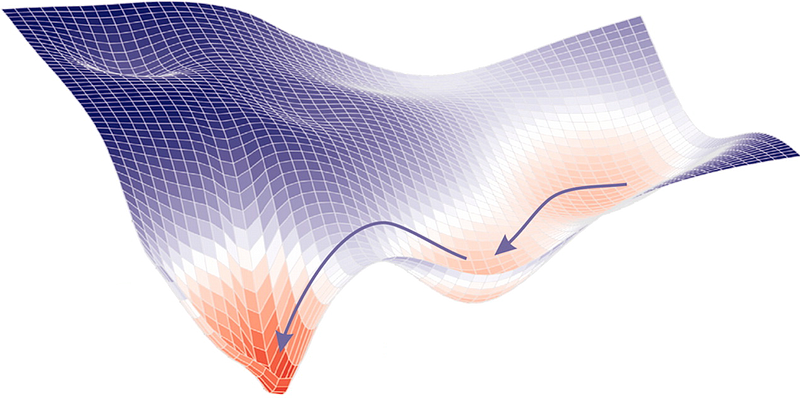

Gradient Descent - Stochastic (SGD), Batch (BGD) & Mini-Batch
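The three variants named in this heading differ only in how many samples feed each weight update. The following is a minimal sketch of that idea, not a reference implementation: the function name `gradient_descent`, the one-parameter model `y ≈ w * x`, the learning rate, and the toy data are all assumptions chosen for illustration.

```python
import random


def gradient_descent(xs, ys, batch_size, lr=0.05, epochs=200, seed=0):
    """Fit y ≈ w * x by minimizing mean squared error (illustrative only).

    batch_size == len(xs)  -> batch gradient descent (BGD)
    batch_size == 1        -> stochastic gradient descent (SGD)
    anything in between    -> mini-batch gradient descent
    """
    rng = random.Random(seed)
    w = 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        # Visit the samples in a fresh random order each epoch.
        rng.shuffle(indices)
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradient of mean((w * x - y)^2) with respect to w over the batch.
            grad = sum(2 * (w * xs[i] - ys[i]) * xs[i] for i in batch) / len(batch)
            w -= lr * grad
    return w


# Hypothetical noiseless toy data generated from y = 3 * x.
xs = [0.0, 0.5, 1.0, 1.5, 2.0]
ys = [3.0 * x for x in xs]

w_batch = gradient_descent(xs, ys, batch_size=len(xs))  # one update per epoch
w_sgd = gradient_descent(xs, ys, batch_size=1)          # one update per sample
w_mini = gradient_descent(xs, ys, batch_size=2)         # one update per small batch
```

On this noiseless data all three settings approach the same weight. On real, noisy data the smaller batch sizes typically give noisier but cheaper updates.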

Vanishing & Exploding Gradients Problems

Vanishing & Exploding Gradients Challenges with Long Short-Term Memory (LSTM) and Recurrent Neural Networks (RNN)
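To make the vanishing and exploding behaviour concrete, here is a deliberately simplified sketch. It assumes a scalar, linear recurrence `h_t = w * h_{t-1}` (no nonlinearity, no inputs, no LSTM gating), so the gradient of the final state with respect to the initial state is just `w` multiplied by itself once per time step. The function name and the chosen weights are hypothetical.

```python
def gradient_through_time(w, steps):
    """Return d h_T / d h_0 for the scalar recurrence h_t = w * h_{t-1}.

    Backpropagation through time multiplies by the recurrent weight
    once per step, so the result equals w ** steps.
    """
    grad = 1.0
    for _ in range(steps):
        grad *= w
    return grad


# Assumed example weights: one below 1 in magnitude, one above.
vanishing = gradient_through_time(0.5, steps=50)  # shrinks toward zero
exploding = gradient_through_time(1.5, steps=50)  # grows very large
```

With a recurrent weight below 1 in magnitude the gradient decays geometrically with sequence length, and with a weight above 1 it grows geometrically. Real RNNs use weight matrices and nonlinear activations, so this captures only the core multiplicative effect.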