Difference between revisions of "Generative Pre-trained Transformer (GPT)"

m (→GPT Impact to Development) |

m |

||

| Line 156: | Line 156: | ||

{| class="wikitable" style="width: 550px;" | {| class="wikitable" style="width: 550px;" | ||

|| | || | ||

| − | <youtube> | + | <youtube>aDFLp4A1EmY</youtube> |

| − | <b> | + | <b>Panel discussion - GPT-3 and Artificial General Intelligence 27 Aug 2020 |

| − | </b><br>In | + | </b><br>Is GPT-3 a step towards creating artificial general intelligence? Chair: Associate Professor Kate Devitt - Chief Scientist, Trusted Autonomous Systems |

| + | |||

| + | Panel: | ||

| + | • Professor David Chalmers (NYU) | ||

| + | • Professor Susan Schneider (NASA and Florida Atlantic University) | ||

| + | • Professor Marcus Hutter (ANU) | ||

| + | |||

| + | A philosophical discussion on the development of artificial intelligence and specifically advances in Generative Pre-trained Transformer-3 (GPT-3). GPT-3 is an auto-complete algorithm created by OpenAI as part of their endeavour to develop artificial general intelligence. GPT-3 is the third in a series of autocomplete tools designed by OpenAI (GPT stands for “generative pre-trained transformer”). GPT-3 is trained on an unimaginably large corpus of human knowledge, including all of Wikipedia, millions of books, websites and other materials, including philosophy texts. In fact, any type of information uploaded to the internet is possible food for GPT-3's artificial mind to dwell on. The result? Eerily coherent, complex and interesting thoughts about almost any topic. The sophisticated, nuanced text produced by GPT-3 seems to pass the Turing Test for many, including philosophers. Some of GPT-3's answers are shedding new light on enduring philosophical questions. Is GPT-3 the beginning of an artificial general intelligence? Does it create ideas like a human mind, or even better than a human mind? Is human cognition similarly some sort of autocomplete program in our brains? Could GPT-3 one day become conscious, or is it already conscious? How could we tell? If an AI passes our tests for consciousness, do we then have an obligation to accord it rights? If so, what sorts of rights might it deserve? Independently of rights, how should humans manage an AI that has access to everything that is posited and known and can trick humans into believing that another rational agent is communicating with them? The panel considers what GPT-3 tells us about the ambition to build an artificial general intelligence, consciousness, human thought and how we should treat AI in an increasingly digital and disembodied world rife with mis- and disinformation. | ||

|} | |} | ||

|}<!-- B --> | |}<!-- B --> | ||

| Line 177: | Line 184: | ||

{| class="wikitable" style="width: 550px;" | {| class="wikitable" style="width: 550px;" | ||

|| | || | ||

| − | <youtube> | + | <youtube>w4JYe-oY4HI</youtube> |

| − | <b> | + | <b>Code 10x Faster With This CRAZY New AI Tool (GPT-3) |

| − | </b><br> | + | </b><br>In this FREE LIVE training, Aaron and Naz will show you the new cutting-edge machine learning AI, [[OpenAI]]'s GPT-3.

|} | |} | ||

|<!-- M --> | |<!-- M --> | ||

Revision as of 21:05, 31 August 2020

YouTube search... ...Google search

- Case Studies

- Text Transfer Learning

- Natural Language Generation (NLG)

- Generated Image

- OpenAI Blog | OpenAI

- Language Models are Unsupervised Multitask Learners | Alec Radford, Jeffrey Wu, Rewon Child, David Luan, Dario Amodei, and Ilya Sutskever

- Neural Monkey | Jindřich Libovický, Jindřich Helcl, Tomáš Musil - Byte Pair Encoding (BPE) lets NMT models translate with an open vocabulary by encoding rare and unknown words as sequences of subword units.

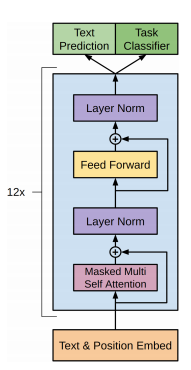
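The Byte Pair Encoding idea in the Neural Monkey entry can be illustrated with a minimal sketch of BPE merge learning in the style of subword NMT: every word starts as a sequence of characters plus an end-of-word marker, and the most frequent adjacent symbol pair is merged, over and over. The toy vocabulary, the `</w>` marker and the function names are illustrative assumptions, not Neural Monkey's API.

```python
import collections
import re


def get_pair_counts(vocab):
    """Count adjacent symbol pairs, weighted by how often each word occurs."""
    pairs = collections.Counter()
    for word, freq in vocab.items():
        symbols = word.split()
        for a, b in zip(symbols, symbols[1:]):
            pairs[a, b] += freq
    return pairs


def merge_pair(pair, vocab):
    """Replace every occurrence of the symbol pair with the merged symbol."""
    # Match the pair only on whole-symbol boundaries (symbols are space-separated).
    pattern = re.compile(r"(?<!\S)" + re.escape(" ".join(pair)) + r"(?!\S)")
    merged = "".join(pair)
    return {pattern.sub(merged, word): freq for word, freq in vocab.items()}


def learn_bpe(vocab, num_merges):
    """Learn up to num_merges merge operations from a {spaced word: count} vocabulary."""
    merges = []
    for _ in range(num_merges):
        pairs = get_pair_counts(vocab)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # ties go to the pair seen first
        vocab = merge_pair(best, vocab)
        merges.append(best)
    return merges, vocab


# Hypothetical toy corpus: words split into characters plus an end-of-word marker.
vocab = {"l o w </w>": 5, "l o w e r </w>": 2, "n e w e s t </w>": 6, "w i d e s t </w>": 3}
merges, vocab = learn_bpe(vocab, 10)
print(merges[:3])  # → [('e', 's'), ('es', 't'), ('est', '</w>')]
```

The learned merges are applied in order to segment new text, so a rare word such as "lowest" can still be represented as known subword units ("low", "est</w>") instead of an unknown token.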

- Attention Mechanism/Transformer Model

- Bidirectional Encoder Representations from Transformers (BERT)

- ELMo

- Language Models are Unsupervised Multitask Learners - GitHub

- Microsoft Releases DialogGPT AI Conversation Model | Anthony Alford - InfoQ - trained on over 147M dialogs

- minGPT | Andrej Karpathy - GitHub


Generative Pre-trained Transformer (GPT-3)

- Language Models are Few-Shot Learners | T. Brown, B. Mann, N. Ryder, M. Subbiah, J. Kaplan, P. Dhariwal, A. Neelakantan, P. Shyam, G. Sastry, A. Askell, S. Agarwal, A. Herbert-Voss, G. Krueger, T. Henighan, R. Child, A. Ramesh, D. Ziegler, J. Wu, C. Winter, C. Hesse, M. Chen, E. Sigler, M. Litwin, S. Gray, B. Chess, J. Clark, C. Berner, S. McCandlish, A. Radford, I. Sutskever, and D. Amodei - arXiv.org

- GPT-3: Demos, Use-cases, Implications | Simon O'Regan - Towards Data Science

- OpenAI API ...today the API runs models with weights from the GPT-3 family with many speed and throughput improvements.

- GPT-3 by OpenAI – Outlook and Examples | Praveen Govindaraj | Medium

- GPT-3 Creative Fiction | R. Gwern

Try...

- Sushant Kumar's micro-site - Replace 'word' in the following URL with a word of your choice to see what GPT-3 generates: http://thoughts.sushant-kumar.com/word

- Serendipity ...an AI powered recommendation engine for anything you want.

- Taglines.ai ... just about every business has a tagline — a short, catchy phrase designed to quickly communicate what it is that they do.

- Simplify.so ...simple, easy-to-understand explanations for everything
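As a small how-to for the Sushant Kumar entry above, this sketch builds the URL for a chosen word, percent-encoding characters such as spaces. Whether the site accepts multi-word or encoded input, and whether it is still online, are assumptions; the function name `thoughts_url` is hypothetical, and nothing is fetched.

```python
from urllib.parse import quote

# URL pattern taken from the entry above; the trailing 'word' is what gets replaced.
BASE_URL = "http://thoughts.sushant-kumar.com/"


def thoughts_url(word: str) -> str:
    """Return the micro-site URL for `word`, percent-encoding unsafe characters."""
    return BASE_URL + quote(word.strip(), safe="")


print(thoughts_url("creativity"))  # → http://thoughts.sushant-kumar.com/creativity
```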


GPT Impact to Development

- Development

- Will The Much-Hyped GPT-3 Impact The Coders? | Analytics India Magazine - http://analyticsindiamag.com/will-the-much-hyped-gpt-3-impact-the-coders/

- With GPT-3, I built a layout generator where you just describe any layout you want, and it generates the JSX code for you. | Sharif Shameem - debuild
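Tools like the layout generator above are commonly built with few-shot prompting, the setting studied in "Language Models are Few-Shot Learners": the model is shown a handful of description/code pairs followed by a new description, and its continuation is taken as the answer. A minimal sketch of assembling such a prompt is below. The example pairs, the `description:`/`code:` labels and the function name are hypothetical; this is not debuild's actual prompt, and no call to the OpenAI API is made.

```python
from typing import List, Tuple


def build_few_shot_prompt(examples: List[Tuple[str, str]], query: str) -> str:
    """Concatenate (description, code) demonstrations, then leave the query's code open."""
    blocks = [f"description: {desc}\ncode: {code}" for desc, code in examples]
    # The last block ends right after "code:", so the model's continuation is the answer.
    blocks.append(f"description: {query}\ncode:")
    return "\n\n".join(blocks)


# Hypothetical demonstrations mapping a plain-English description to JSX.
examples = [
    ("a red button that says Stop", '<button style={{color: "red"}}>Stop</button>'),
    ("a heading that says Welcome", "<h1>Welcome</h1>"),
]
prompt = build_few_shot_prompt(examples, "a link to example.com that says Home")
print(prompt)
```

Keeping the demonstrations in one consistent format matters more than their number: the model continues whatever pattern the prompt establishes.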


Generative Pre-trained Transformer (GPT-2)

- GitHub

- How to Get Started with OpenAI's GPT-2 for Text Generation | Amal Nair - Analytics India Magazine

- GPT-2: It learned on the Internet | Janelle Shane

- Too powerful NLP model (GPT-2): What is Generative Pre-Training | Edward Ma

- GPT-2: A nascent transfer learning method that could eliminate supervised learning in some NLP tasks | Ajit Rajasekharan - Medium

- OpenAI Creates Platform for Generating Fake News. Wonderful | Nick Kolakowski - Dice

- InferKit | Adam D King - completes your text.
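Text-completion tools such as InferKit rely on the generative pre-training objective behind GPT-2: predict the next token, append it to the text, and repeat. That autoregressive loop can be sketched with a deliberately tiny stand-in. Here a word-bigram count table replaces GPT-2's transformer, and decoding is greedy. The corpus, the function names and the greedy choice are illustrative assumptions. Real GPT-2 works on BPE tokens and, in practice, often samples from its predicted distribution rather than always taking the single most likely token.

```python
from collections import Counter, defaultdict
from typing import Dict, List


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Toy stand-in for pre-training: count which word follows each word."""
    words = text.split()
    table: Dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        table[prev][nxt] += 1
    return table


def complete(table: Dict[str, Counter], prompt: str, max_new_words: int = 5) -> str:
    """Extend the prompt one most-likely word at a time (greedy autoregressive decoding)."""
    words: List[str] = prompt.split()
    for _ in range(max_new_words):
        followers = table.get(words[-1])  # .get does not insert a default entry
        if not followers:  # no continuation was ever seen for this word, so stop early
            break
        # Ties are broken by first occurrence in the corpus.
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)


corpus = "the model reads the text and the model predicts the next word"
table = train_bigrams(corpus)
print(complete(table, "the", 3))  # → the model reads the
```

Each generated word is fed back in as context for the next prediction; a GPT model does the same, but conditions on the whole preceding sequence through attention instead of only the last word.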

Coding Train Late Night 2

r/SubSimulator

Subreddit populated entirely by AI personifications of other subreddits -- all posts and comments are generated automatically using:

results in coherent and realistic simulated content.

GetBadNews

- Get Bad News game - Can you beat my score? Play the fake news game! Drop all pretense of ethics and choose the path that builds your persona as an unscrupulous media magnate. Your task is to get as many followers as you can while