Difference between revisions of "Generative Pre-trained Transformer (GPT)"


* [[Natural Language Generation (NLG)]]

* [[Generated Image]]

* [[Attention]] Mechanism/[[Transformer]] Model

* [http://openai.com/blog/gpt-2-6-month-follow-up/ OpenAI Blog] | [http://openai.com/ OpenAI]

* [http://d4mucfpksywv.cloudfront.net/better-language-models/language_models_are_unsupervised_multitask_learners.pdf Language Models are Unsupervised Multitask Learners | Alec Radford, Jeffrey Wu, Rewon Child, David Luan, Dario Amodei, and Ilya Sutskever]

| − | |||

| − | |||

| − | |||

* [http://neural-monkey.readthedocs.io/en/latest/machine_translation.html Neural Monkey | Jindřich Libovický, Jindřich Helcl, Tomáš Musil] Byte Pair Encoding (BPE) enables open-vocabulary translation in neural machine translation (NMT) models by encoding rare and unknown words as sequences of subword units.


* [[Bidirectional Encoder Representations from Transformers (BERT)]]

* [[ELMo]]

* [http://github.com/openai/gpt-2 Language Models are Unsupervised Multitask Learners - GitHub]

| − | |||

| − | |||

| − | |||

* [http://www.infoq.com/news/2019/11/microsoft-ai-conversation/ Microsoft Releases DialogGPT AI Conversation Model | Anthony Alford - InfoQ] - trained on over 147M dialogs
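The Neural Monkey entry above summarizes what Byte Pair Encoding does. As a rough illustration, here is a minimal sketch of BPE merge learning in the style of Sennrich et al.: start from single characters and repeatedly merge the most frequent adjacent pair of symbols. The toy word counts, the `</w>` end-of-word marker and the function names are illustrative assumptions, not taken from any of the linked projects; GPT-2 itself uses a byte-level variant of BPE rather than this character-level version.

```python
from collections import Counter


def get_pair_counts(vocab):
    """Count adjacent symbol pairs, weighted by how often each word occurs."""
    pairs = Counter()
    for word, freq in vocab.items():
        symbols = word.split()
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return pairs


def merge_pair(pair, vocab):
    """Replace every adjacent occurrence of `pair` with a single merged symbol."""
    a, b = pair
    merged = {}
    for word, freq in vocab.items():
        symbols = word.split()
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and symbols[i] == a and symbols[i + 1] == b:
                out.append(a + b)
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        merged[" ".join(out)] = freq
    return merged


def learn_bpe(word_freqs, num_merges):
    """Learn up to `num_merges` merge rules from a word -> frequency table."""
    # Split each word into characters; the hypothetical "</w>" marker keeps
    # merges from crossing word boundaries.
    vocab = {" ".join(word) + " </w>": f for word, f in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        pairs = get_pair_counts(vocab)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # most frequent pair (first one on ties)
        vocab = merge_pair(best, vocab)
        merges.append(best)
    return merges, vocab


merges, vocab = learn_bpe({"low": 5, "lower": 2, "newest": 6, "widest": 3}, num_merges=3)
print(merges)  # [('e', 's'), ('es', 't'), ('est', '</w>')]
```

Each learned merge becomes a vocabulary entry, so a rare word such as `newest` ends up as a handful of subword units (`n e w est</w>` after three merges) instead of a single unknown token.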

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

http://cdn-images-1.medium.com/max/800/1*jbcwhhB8PEpJRk781rML_g.png
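The list above links to the attention mechanism and Transformer model pages. GPT models are built from Transformer decoder blocks, whose key ingredient is masked (causal) self-attention: each position may attend only to itself and to earlier positions, so the model cannot peek at the tokens it is trying to predict. The sketch below is a deliberately simplified, single-head version in pure Python that assumes the query, key and value vectors are already given; a real GPT layer computes them with learned projections, uses several heads, and runs on tensors.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(q, k, v):
    """Single-head scaled dot-product attention with a causal mask.

    q, k, v: lists of T vectors (lists of floats); q and k share width d.
    Output row t is a weighted average of v[0..t] only.
    """
    d = len(q[0])
    out = []
    for t in range(len(q)):
        # Scores are computed only for positions s <= t: this is the mask.
        scores = [sum(qi * ki for qi, ki in zip(q[t], k[s])) / math.sqrt(d)
                  for s in range(t + 1)]
        weights = softmax(scores)
        out.append([sum(w * v[s][j] for s, w in enumerate(weights))
                    for j in range(len(v[0]))])
    return out


q = k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
v = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]
# The first position can only see itself, so its output equals v[0].
print(causal_self_attention(q, k, v)[0])  # [1.0, 0.0]
```

Because of the mask, changing a later value vector (for example `v[2]`) leaves the outputs for earlier positions unchanged, which is what lets a GPT model be trained to predict every next token of a sequence in parallel.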

= GPT-3 =

* [http://medium.com/@praveengovi.analytics/gpt-3-by-openai-outlook-and-examples-f234f9c62c41 GPT-3 by OpenAI – Outlook and Examples | Praveen Govindaraj | Medium]

<youtube>pXOlc5CBKT8</youtube>

<youtube>SY5PvZrJhLE</youtube>


= GPT-2 =

* [http://github.com/openai/gpt-2/blob/master/README.md (117M parameter) version of GPT-2 | GitHub]

* [http://analyticsindiamag.com/how-to-get-started-with-openais-gpt-2-for-text-generation/ How to Get Started with OpenAI's GPT-2 for Text Generation | Amal Nair - Analytics India Magazine]

* [http://aiweirdness.com/post/182824715257/gpt-2-it-learned-on-the-internet GPT-2: It learned on the Internet | Janelle Shane]

* [http://towardsdatascience.com/too-powerful-nlp-model-generative-pre-training-2-4cc6afb6655 Too powerful NLP model (GPT-2): What is Generative Pre-Training | Edward Ma]

* [http://medium.com/@ajitrajasekharan/gpt-2-a-promising-but-nascent-transfer-learning-method-that-could-reduce-or-even-eliminate-in-some-48ea3370cc21 GPT-2: A nascent transfer learning method that could eliminate supervised learning in some NLP tasks | Ajit Rajasekharan - Medium]

* [http://insights.dice.com/2019/02/19/openai-platform-generating-fake-news-wonderful OpenAI Creates Platform for Generating Fake News. Wonderful | Nick Kolakowski - Dice]

a text-generating bot based on a model with 1.5 billion parameters. ...Ultimately, OpenAI's researchers kept the full thing to themselves, only releasing a pared-down 117 million parameter version of the model (which we have dubbed "GPT-2 Junior") as a safer demonstration of what the full GPT-2 model could do. [http://arstechnica.com/information-technology/2019/02/researchers-scared-by-their-own-work-hold-back-deepfakes-for-text-ai/ Twenty minutes into the future with OpenAI’s Deep Fake Text AI | Sean Gallagher]
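The excerpt contrasts the full 1.5-billion-parameter model with the 117-million-parameter release. Those sizes follow from the model's shape, so a back-of-the-envelope count is a useful sanity check. The sketch below counts the weights of a GPT-2-style decoder from its depth and width, using the hyperparameters given in the GPT-2 paper (12 layers of width 768 for the smallest model, 48 layers of width 1600 for the largest, a 50,257-token vocabulary and a 1,024-token context). The function name is ours, and it assumes tied input/output embeddings, a bias on every linear layer, LayerNorm before each sub-block plus a final LayerNorm, and an MLP hidden width of 4 × `d_model`, which is how we understand OpenAI's released code to be structured.

```python
def gpt2_param_count(n_layer, d_model, vocab_size=50257, n_ctx=1024):
    """Approximate parameter count of a GPT-2-style decoder-only Transformer.

    Assumptions (illustrative): output projection tied to the token
    embedding, biases on all linear layers, MLP hidden width 4 * d_model.
    """
    token_emb = vocab_size * d_model  # token embedding (also reused as output layer)
    pos_emb = n_ctx * d_model         # learned position embedding
    per_layer = (
        2 * d_model                             # LayerNorm before attention (gain + bias)
        + 3 * d_model * d_model + 3 * d_model   # fused query/key/value projection
        + d_model * d_model + d_model           # attention output projection
        + 2 * d_model                           # LayerNorm before MLP
        + d_model * 4 * d_model + 4 * d_model   # MLP expansion
        + 4 * d_model * d_model + d_model       # MLP contraction
    )
    final_ln = 2 * d_model                      # final LayerNorm
    return token_emb + pos_emb + n_layer * per_layer + final_ln


print(f"{gpt2_param_count(n_layer=12, d_model=768):,}")   # 124,439,808
print(f"{gpt2_param_count(n_layer=48, d_model=1600):,}")  # 1,557,611,200
```

Under these assumptions the largest model lands at about 1.56 billion parameters, matching the "1.5 billion" above, while the smallest comes out near 124M rather than 117M. OpenAI's GPT-2 repository later noted that its originally published parameter counts were wrong, which is why the released small model is also listed as 124M.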


<hr>

* [http://talktotransformer.com/ Try GPT-2...Talk to Transformer] - completes your text. | [http://adamdking.com/ Adam D King], [http://huggingface.co/ Hugging Face] and [http://openai.com/ OpenAI]

<hr>
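Talk to Transformer "completes your text" the same way every GPT model generates: predict a probability distribution over the next token, append a chosen token, and repeat with the longer sequence as the new input. The sketch below shows only that loop. `TOY_MODEL` is a hypothetical hand-written lookup table standing in for a trained network (a real GPT conditions on the whole prefix, not just the last word), and the loop uses greedy decoding for a deterministic result; interactive demos generally sample from the distribution instead (for example with top-k sampling) to get more varied text.

```python
# Hypothetical stand-in for a trained model: last token -> next-token probabilities.
TOY_MODEL = {
    "the": {"cat": 0.6, "dog": 0.4},
    "cat": {"sat": 0.7, "ran": 0.3},
    "sat": {"down": 0.9, "<eos>": 0.1},
    "down": {"<eos>": 1.0},
}


def next_token_probs(tokens):
    """Return the toy model's next-token distribution; unknown words end the text."""
    return TOY_MODEL.get(tokens[-1], {"<eos>": 1.0})


def complete(prompt, max_new_tokens=10):
    """Autoregressively extend `prompt` one token at a time (greedy decoding)."""
    tokens = prompt.split()
    for _ in range(max_new_tokens):
        probs = next_token_probs(tokens)
        token = max(probs, key=probs.get)  # greedy: take the most likely token
        if token == "<eos>":
            break
        tokens.append(token)  # the prediction becomes part of the next input
    return " ".join(tokens)


print(complete("the"))  # the cat sat down
```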


<youtube>UULqu7LQoHs</youtube>

<youtube>OJGDmXDt5QA</youtube>

Revision as of 10:40, 19 July 2020


- Case Studies

- Text Transfer Learning

- Natural Language Generation (NLG)

- Generated Image

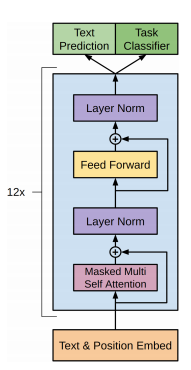

- Attention Mechanism/Transformer Model

- OpenAI Blog | OpenAI

- Language Models are Unsupervised Multitask Learners | Alec Radford, Jeffrey Wu, Rewon Child, David Luan, Dario Amodei, and Ilya Sutskever

- Neural Monkey | Jindřich Libovický, Jindřich Helcl, Tomáš Musil Byte Pair Encoding (BPE) enables NMT model translation on open-vocabulary by encoding rare and unknown words as sequences of subword units.

- Bidirectional Encoder Representations from Transformers (BERT)

- ELMo

- Language Models are Unsupervised Multitask Learners - GitHub

- Microsoft Releases DialogGPT AI Conversation Model | Anthony Alford - InfoQ - trained on over 147M dialogs

Contents

GPT-3

GPT-2

- (117M parameter) version of GPT-2 | GitHub

- How to Get Started with OpenAIs GPT-2 for Text Generation | Amal Nair - Analytics India Magazine

- GPT-2: It learned on the Internet | Janelle Shane

- Too powerful NLP model (GPT-2): What is Generative Pre-Training | Edward Ma

- GPT-2 A nascent transfer learning method that could eliminate supervised learning some NLP tasks | Ajit Rajasekharan - Medium

- OpenAI Creates Platform for Generating Fake News. Wonderful | Nick Kolakowski - Dice

a text-generating bot based on a model with 1.5 billion parameters. ...Ultimately, OpenAI's researchers kept the full thing to themselves, only releasing a pared-down 117 million parameter version of the model (which we have dubbed "GPT-2 Junior") as a safer demonstration of what the full GPT-2 model could do.Twenty minutes into the future with OpenAI’s Deep Fake Text AI | Sean Gallagher

- Try GPT-2...Talk to Transformer - completes your text. | Adam D King, Hugging Face and OpenAI

r/SubSimulator

A subreddit populated entirely by AI personifications of other subreddits; all posts and comments are generated automatically using:

results in coherent and realistic simulated content.

GetBadNews

- Get Bad News game - Can you beat my score? Play the fake news game! Drop all pretense of ethics and choose the path that builds your persona as an unscrupulous media magnate. Your task is to get as many followers as you can while