Image-to-Image Translation

Revision as of 08:12, 19 July 2020

Approaches:

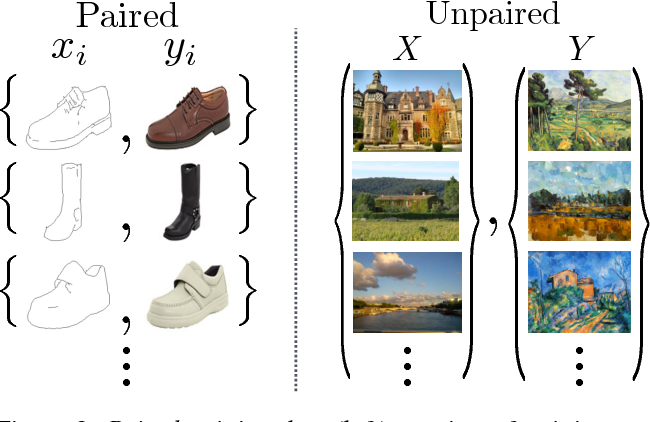

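- Paired (e.g. pix2pix): every training input image comes with an aligned target image

- Unpaired (e.g. CycleGAN): training uses two independent image collections, one per domain, with no correspondence between individual images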
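The difference between the two approaches is in how training examples are drawn. The sketch below is a minimal illustration, assuming toy strings stand in for image files; the dataset names and the `sample_paired` / `sample_unpaired` helpers are hypothetical. In the paired case an input fixes its target, while in the unpaired case an image from each domain is drawn independently.

```python
import random
from typing import List, Tuple

# Hypothetical toy "images": strings standing in for image files.
# Paired setting: each input x_i has an aligned target y_i.
paired_data: List[Tuple[str, str]] = [
    ("sketch_0", "photo_0"),
    ("sketch_1", "photo_1"),
    ("sketch_2", "photo_2"),
]

# Unpaired setting: two independent collections, possibly of different sizes,
# with no statement of which y is the "correct" translation of which x.
domain_x: List[str] = ["horse_a", "horse_b", "horse_c"]
domain_y: List[str] = ["zebra_p", "zebra_q"]


def sample_paired(data: List[Tuple[str, str]], rng: random.Random) -> Tuple[str, str]:
    """Return one aligned (input, target) pair; the target is fixed by the input."""
    return rng.choice(data)


def sample_unpaired(xs: List[str], ys: List[str], rng: random.Random) -> Tuple[str, str]:
    """Draw one image from each domain independently of the other."""
    return rng.choice(xs), rng.choice(ys)


rng = random.Random(0)
print(sample_paired(paired_data, rng))
print(sample_unpaired(domain_x, domain_y, rng))
```

Because the unpaired sampler never ties an x to a y, a model trained this way needs some other constraint (such as CycleGAN's cycle consistency) to keep the translation faithful to the input.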

StarGAN

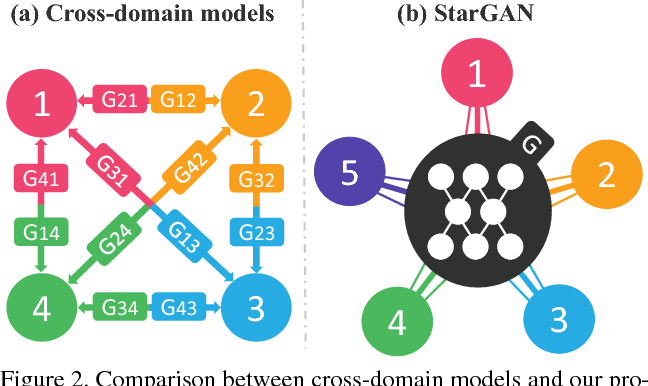

Existing image-to-image translation approaches have limited scalability and robustness when handling more than two domains, because a separate model must be built independently for every pair of image domains. StarGAN is a novel, scalable approach that performs image-to-image translation across multiple domains using only a single model. (Image-to-Image Translation | Yongfu Hao)
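A single StarGAN generator covers many domains by taking the target domain as an extra input: the one-hot domain label is replicated over the image's spatial size and concatenated to the image channels, so the generator computes G(x, c). The pure-Python sketch below imitates only that input construction, assuming images are nested lists shaped channels × height × width; the helper names are hypothetical and no network is trained. It also counts the k(k−1) directed generators a pairwise approach would need for k domains.

```python
from typing import List

Image = List[List[List[float]]]  # channels x height x width


def one_hot(domain: int, num_domains: int) -> List[float]:
    """Encode the target domain index as a one-hot label vector."""
    return [1.0 if i == domain else 0.0 for i in range(num_domains)]


def condition_on_domain(image: Image, label: List[float]) -> Image:
    """Replicate each label entry into a constant H x W plane and append the
    planes as extra channels, giving C + n input channels for n domains."""
    height = len(image[0])
    width = len(image[0][0])
    label_planes = [[[value] * width for _ in range(height)] for value in label]
    image_copy = [[row[:] for row in channel] for channel in image]
    return image_copy + label_planes


def pairwise_generators_needed(k: int) -> int:
    """One generator per ordered pair of distinct domains: k * (k - 1)."""
    return k * (k - 1)


rgb = [[[0.5] * 4 for _ in range(4)] for _ in range(3)]  # toy 3x4x4 image
x_in = condition_on_domain(rgb, one_hot(2, num_domains=5))
print(len(x_in))  # 8 channels: 3 image + 5 label
print(pairwise_generators_needed(5))  # 20 pairwise generators vs 1 conditioned generator
```

Swapping the label while keeping the same image asks the same generator for a different target domain, which is where the single-model scalability comes from.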

CycleGAN

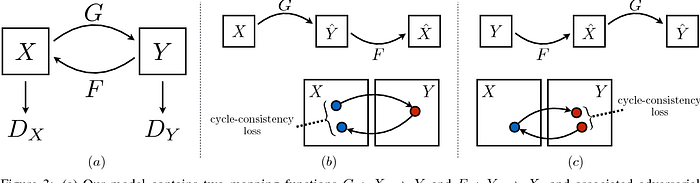

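CycleGAN is an approach for learning to translate an image from a source domain X to a target domain Y in the absence of paired examples. It learns a mapping G: X → Y together with an inverse mapping F: Y → X and enforces cycle consistency, so that F(G(x)) ≈ x and G(F(y)) ≈ y. (Image-to-Image Translation | Yongfu Hao)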
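Without paired examples, CycleGAN constrains a forward generator G: X → Y with an inverse generator F: Y → X through a cycle-consistency term, the mean L1 distance between x and F(G(x)) plus that between y and G(F(y)). The sketch below computes only that term in plain Python, assuming images are flattened lists of floats; `G`, `F_inv` and `F_bad` are hypothetical toy functions standing in for learned networks.

```python
from typing import Callable, List

Vector = List[float]


def l1(a: Vector, b: Vector) -> float:
    """Mean absolute difference between two equally sized vectors."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


def cycle_consistency_loss(
    G: Callable[[Vector], Vector],
    F: Callable[[Vector], Vector],
    xs: List[Vector],
    ys: List[Vector],
) -> float:
    """Forward cycle x -> G(x) -> F(G(x)) plus backward cycle y -> F(y) -> G(F(y)),
    each averaged over its own (unpaired) sample set."""
    forward = sum(l1(F(G(x)), x) for x in xs) / len(xs)
    backward = sum(l1(G(F(y)), y) for y in ys) / len(ys)
    return forward + backward


def G(v: Vector) -> Vector:
    """Toy X -> Y mapping (hypothetical)."""
    return [2.0 * t + 1.0 for t in v]


def F_inv(v: Vector) -> Vector:
    """Toy Y -> X mapping that exactly inverts G."""
    return [(t - 1.0) / 2.0 for t in v]


def F_bad(v: Vector) -> Vector:
    """Toy Y -> X mapping that does not invert G."""
    return [t / 2.0 for t in v]


xs = [[0.0, 1.0], [2.0, -1.0]]
ys = [[3.0, 5.0]]  # unpaired: no y is tied to a particular x
print(cycle_consistency_loss(G, F_inv, xs, ys))  # 0.0
print(cycle_consistency_loss(G, F_bad, xs, ys))  # 1.5
```

In the CycleGAN paper this term is weighted and added to the adversarial losses of the two generators; the sketch isolates the cycle term to show why an F that fails to undo G is penalized.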

pix2pix

UNIT