Difference between revisions of "Word2Vec"

Latest revision as of 09:39, 28 May 2025

- Embedding ... Fine-tuning ... RAG ... Search ... Clustering ... Recommendation ... Anomaly Detection ... Classification ... Dimensional Reduction. ...find outliers

- Large Language Model (LLM) ... Multimodal ... Foundation Models (FM) ... Generative Pre-trained ... Transformer ... Attention ... GAN ... BERT

- Doc2Vec

- Node2Vec

- Skip-Gram

- Global Vectors for Word Representation (GloVe)

- Bag-of-Words (BoW)

- Continuous Bag-of-Words (CBoW)

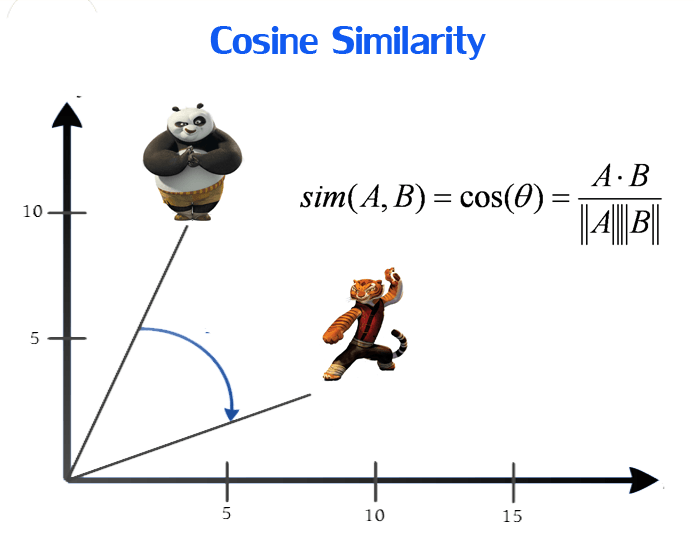

- Similarity

- TensorFlow
  - [Embedding Projector](http://projector.tensorflow.org/)

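- [A Beginner's Guide to Word2Vec and Neural Word Embeddings | Chris Nicholson - A.I. Wiki pathmind](http://pathmind.com/wiki/word2vec)

- [Introduction to Word Embedding and Word2Vec | Dhruvil Karani - Towards Data Science - Medium](http://towardsdatascience.com/introduction-to-word-embedding-and-word2vec-652d0c2060fa)

- [Distributed Representations of Words and Phrases and their Compositionality | Tomas Mikolov](http://arxiv.org/pdf/1310.4546.pdf) - Google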

Word2Vec is a shallow, two-layer neural network trained to reconstruct the linguistic contexts of words. It takes a large text corpus as input and produces a vector space, typically of several hundred dimensions, in which each unique word in the corpus is assigned a corresponding vector.
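
To make the "two layers, one vector per unique word" idea concrete, here is a minimal sketch of skip-gram training in plain Python. It is an illustration under stated assumptions, not the reference implementation: the function names (`train_skipgram`, `cosine`), the toy corpus, and the hyperparameters are all hypothetical, and it uses a full softmax over the vocabulary rather than the negative sampling or hierarchical softmax that practical implementations typically rely on for large corpora.

```python
import math
import random


def train_skipgram(sentences, dim=8, window=2, epochs=50, lr=0.05, seed=0):
    """Toy skip-gram with a full softmax; returns {word: vector}.

    Illustrative only: real corpora need negative sampling or
    hierarchical softmax, subsampling, and far more data.
    """
    rng = random.Random(seed)
    vocab = sorted({w for s in sentences for w in s})
    index = {w: i for i, w in enumerate(vocab)}
    size = len(vocab)

    # Layer 1: input (word) embeddings. Layer 2: output (context) weights.
    w_in = [[rng.uniform(-0.5, 0.5) / dim for _ in range(dim)] for _ in range(size)]
    w_out = [[0.0] * dim for _ in range(size)]

    # Build (center, context) pairs from a sliding window over each sentence.
    pairs = []
    for s in sentences:
        ids = [index[w] for w in s]
        for pos, center in enumerate(ids):
            lo, hi = max(0, pos - window), min(len(ids), pos + window + 1)
            pairs.extend((center, ids[j]) for j in range(lo, hi) if j != pos)

    for _ in range(epochs):
        rng.shuffle(pairs)
        for center, context in pairs:
            h = w_in[center]
            # Softmax over the vocabulary: predicted context distribution.
            scores = [sum(a * b for a, b in zip(h, w_out[k])) for k in range(size)]
            top = max(scores)
            exps = [math.exp(s - top) for s in scores]
            total = sum(exps)
            grad_h = [0.0] * dim
            for k in range(size):
                err = exps[k] / total - (1.0 if k == context else 0.0)
                for d in range(dim):
                    grad_h[d] += err * w_out[k][d]  # uses the pre-update weight
                    w_out[k][d] -= lr * err * h[d]
            for d in range(dim):
                h[d] -= lr * grad_h[d]

    return {w: w_in[index[w]] for w in vocab}


def cosine(u, v):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


corpus = [
    ["the", "cat", "sat", "on", "the", "mat"],
    ["the", "dog", "sat", "on", "the", "rug"],
]
vectors = train_skipgram(corpus)
print(len(vectors), "words,", len(vectors["cat"]), "dimensions each")
print("cat~dog similarity:", round(cosine(vectors["cat"], vectors["dog"]), 3))
```

The returned dictionary is the "vector space" described above: one entry per unique word, each of length `dim`. On a corpus this small the similarities are not meaningful; the point is only the shape of the computation.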