Topology and Weight Evolving Artificial Neural Network (TWEANN)

Latest revision as of 22:51, 2 February 2019

Youtube search... ...Google search

- Architectures

- NeuroEvolution | Wikipedia

- Evolutionary Computation / Genetic Algorithms

- Gradient Descent Optimization & Challenges

- NeuroEvolution of Augmenting Topologies (NEAT)

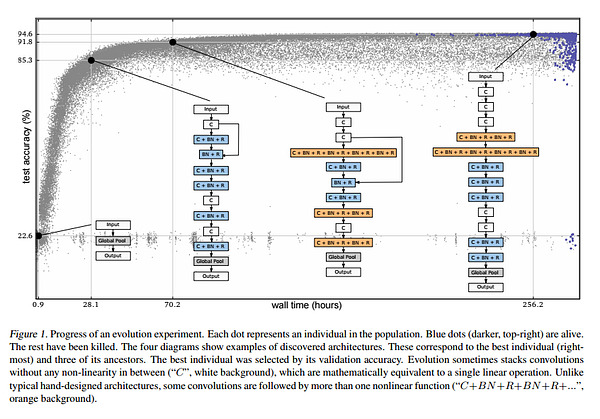

Many neuroevolution algorithms have been defined. One common distinction is between algorithms that evolve only the connection weights of a fixed network topology (sometimes called conventional neuroevolution) and algorithms that evolve both the network's topology and its weights (TWEANNs).
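The first category, conventional neuroevolution, can be illustrated with a minimal sketch. It assumes a toy XOR task and a hand-picked, fixed 2-2-1 network. Only the nine weights (including biases) are evolved, using Gaussian mutation and truncation selection with elitism. The task, the network shape, and all function and parameter names (`forward`, `mse`, `evolve_weights`, `sigma`, `elite`) are illustrative assumptions, not a specific published algorithm.

```python
import math
import random

# Toy task (an assumption for illustration): the XOR truth table.
XOR_DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]

# Fixed topology: 2 inputs -> 2 tanh hidden units -> 1 sigmoid output, with biases.
# The genome is a flat list of 9 numbers; evolution changes the values, never the wiring.
N_WEIGHTS = 9


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def forward(w, x):
    h0 = math.tanh(w[0] * x[0] + w[1] * x[1] + w[2])
    h1 = math.tanh(w[3] * x[0] + w[4] * x[1] + w[5])
    return sigmoid(w[6] * h0 + w[7] * h1 + w[8])


def mse(w):
    # Mean squared error over the four XOR cases (lower is fitter).
    return sum((forward(w, x) - y) ** 2 for x, y in XOR_DATA) / len(XOR_DATA)


def evolve_weights(pop_size=50, generations=200, sigma=0.5, elite=10, seed=0):
    rng = random.Random(seed)
    pop = [[rng.gauss(0.0, 1.0) for _ in range(N_WEIGHTS)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        pop.sort(key=mse)      # best (lowest error) first
        history.append(mse(pop[0]))
        parents = pop[:elite]  # truncation selection; elites survive unchanged
        children = [
            [wi + rng.gauss(0.0, sigma) for wi in rng.choice(parents)]  # Gaussian mutation
            for _ in range(pop_size - elite)
        ]
        pop = parents + children
    best = min(pop, key=mse)
    history.append(mse(best))
    return best, history
```

For example, `best, history = evolve_weights()` returns the best weight vector and its error for each generation. Because the elite survive unchanged, `history` never increases. The wiring inside `forward` stays fixed throughout. If a task needed a different topology, this kind of algorithm could not discover it.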

Comparison with gradient descent

Most neural networks are trained with gradient descent rather than neuroevolution. However, around 2017, researchers at Uber reported that simple neuroevolution algorithms were competitive with sophisticated, industry-standard gradient-descent deep learning algorithms. Part of the reason was that neuroevolution was found to be less likely to get stuck in local minima.
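A toy example can illustrate the local-minima point, though it does not prove it. The sketch below is not the Uber experiment. It assumes a hypothetical one-dimensional loss f(x) = x^4 - 3x^2 + x, which has a local minimum near x ≈ 1.13 and a lower, global minimum near x ≈ -1.30. Plain gradient descent started at x = 2.0 slides into the nearer local minimum. A simple population-based evolutionary search instead samples both basins and keeps the better one; it uses uniform random initialization, Gaussian mutation, and elitist truncation selection. All names and settings are illustrative assumptions.

```python
import random


def f(x):
    # Hypothetical toy loss: local minimum near x ~ 1.13, global minimum near x ~ -1.30.
    return x ** 4 - 3 * x ** 2 + x


def df(x):
    # Analytic derivative of f, used only by gradient descent.
    return 4 * x ** 3 - 6 * x + 1


def gradient_descent(x0=2.0, lr=0.01, steps=1000):
    # Follows the local slope from a single starting point.
    x = x0
    for _ in range(steps):
        x -= lr * df(x)
    return x


def evolve(pop_size=50, generations=100, sigma=0.3, elite=5, seed=0):
    # Population-based search: no gradients, many candidate points.
    rng = random.Random(seed)
    pop = [rng.uniform(-3.0, 3.0) for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=f)                 # lowest loss first
        parents = pop[:elite]           # elitist truncation selection
        children = [rng.choice(parents) + rng.gauss(0.0, sigma)
                    for _ in range(pop_size - elite)]
        pop = parents + children
    return min(pop, key=f)
```

With the default settings, `gradient_descent()` ends near 1.13. `evolve()` should return a point in the left basin with a lower loss, although the exact value depends on the seed. In this toy case, the advantage comes from sampling many points at once, which costs far more function evaluations than a single gradient-descent run.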

Topology

But there's an issue we haven't yet addressed: how do you determine what connects to what in the first place? In other words, the behavior of your brain is not only determined by the weights of its connections, but by the overall architecture of the brain itself. Stochastic gradient descent does not even attempt to address this question. Rather, it simply does its best with the connections it is provided. (Neuroevolution: A different kind of deep learning | Kenneth O. Stanley)
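Evolving topology requires a genome that can grow. The sketch below is a minimal, NEAT-inspired illustration of the two structural mutations a TWEANN typically adds on top of weight mutation:

- "add connection" wires two previously unconnected nodes.
- "add node" splits an existing connection. It disables that connection and inserts a new node, giving the new node an incoming weight of 1.0 and an outgoing weight equal to the old one.

The sketch assumes a feed-forward network. It omits innovation numbers, crossover, and speciation. The class and method names (`Genome`, `ConnGene`, `add_node`, `add_connection`, `activate`) are hypothetical.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class ConnGene:
    src: int
    dst: int
    weight: float
    enabled: bool = True


class Genome:
    """Hypothetical NEAT-style genome: node ids plus a list of connection genes."""

    def __init__(self, n_inputs, n_outputs, rng):
        self.rng = rng
        self.inputs = list(range(n_inputs))
        self.outputs = list(range(n_inputs, n_inputs + n_outputs))
        self.hidden = []  # kept in a valid feed-forward evaluation order
        self.next_id = n_inputs + n_outputs
        # Start minimal: every input wired directly to every output.
        self.conns = [ConnGene(i, o, rng.gauss(0.0, 1.0))
                      for i in self.inputs for o in self.outputs]

    def order(self):
        return self.inputs + self.hidden + self.outputs

    def mutate_weights(self, sigma=0.5):
        # Ordinary weight mutation, as in fixed-topology neuroevolution.
        for c in self.conns:
            c.weight += self.rng.gauss(0.0, sigma)

    def add_connection(self):
        # Structural mutation 1: connect an earlier node to a later, unconnected one.
        pos = {n: k for k, n in enumerate(self.order())}
        existing = {(c.src, c.dst) for c in self.conns}
        candidates = [(a, b)
                      for a in self.inputs + self.hidden
                      for b in self.hidden + self.outputs
                      if pos[a] < pos[b] and (a, b) not in existing]
        if not candidates:
            return False
        a, b = self.rng.choice(candidates)
        self.conns.append(ConnGene(a, b, self.rng.gauss(0.0, 1.0)))
        return True

    def add_node(self):
        # Structural mutation 2: split an enabled connection src -> dst
        # into src -> new (weight 1.0) and new -> dst (old weight).
        enabled = [c for c in self.conns if c.enabled]
        if not enabled:
            return False
        old = self.rng.choice(enabled)
        old.enabled = False
        new = self.next_id
        self.next_id += 1
        # Place the new node after src and before dst in evaluation order.
        if old.dst in self.hidden:
            self.hidden.insert(self.hidden.index(old.dst), new)
        else:
            self.hidden.append(new)
        self.conns.append(ConnGene(old.src, new, 1.0))
        self.conns.append(ConnGene(new, old.dst, old.weight))
        return True

    def activate(self, xs):
        # Single forward pass; every enabled connection points forward in order().
        values = dict(zip(self.inputs, xs))
        for node in self.hidden + self.outputs:
            total = sum(c.weight * values[c.src]
                        for c in self.conns if c.enabled and c.dst == node)
            values[node] = math.tanh(total)
        return [values[o] for o in self.outputs]
```

A typical loop would start from `Genome(2, 1, random.Random(0))`. It would apply `mutate_weights()` every generation and call `add_connection()` or `add_node()` occasionally. Genomes would then be selected by a task fitness computed from `activate()`. Keeping hidden nodes in an explicit order is a simplifying design choice: it guarantees that every connection points forward, so `activate` can evaluate the network in a single pass.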