Capsule Networks (CapNets)

Latest revision as of 08:44, 6 August 2023

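[YouTube search...](https://www.youtube.com/results?search_query=Capsule+Network+capNet) [...Google search](https://www.google.com/search?q=Capsule+Network+capNet+deep+machine+learning+ML)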

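- [Sekwiatkowski/Awesome Capsule Networks | GitHub](https://github.com/sekwiatkowski/awesome-capsule-networks) - A curated list of awesome resources related to Capsule Networks

- [Understanding Hinton’s Capsule Networks | Max Pechyonkin](https://medium.com/ai%C2%B3-theory-practice-business/understanding-hintons-capsule-networks-part-i-intuition-b4b559d1159b)

- [Capsule Networks: The New Deep Learning Network | Aryan Misra](https://towardsdatascience.com/capsule-networks-the-new-deep-learning-network-bd917e6818e8)

- [Matrix Capsules with EM Routing | Geoffrey Hinton, Sara Sabour, Nicholas Frosst - Google Brain](https://openreview.net/pdf?id=HJWLfGWRb)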

- Architectures

- Graph Convolutional Network (GCN), Graph Neural Networks (Graph Nets), Geometric Deep Learning

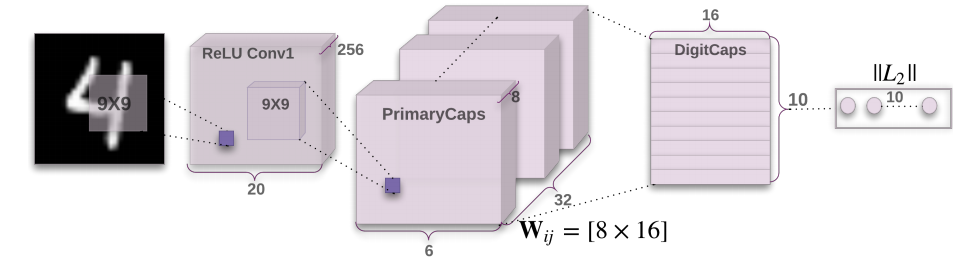

A capsule is a nested set of neural layers. In a regular neural network you keep adding more layers; in a CapsNet you add layers inside a single layer, in other words you nest one neural layer inside another. The state of the neurons inside a capsule captures the properties of one entity in an image. A capsule outputs a vector: its length represents the existence of the entity and its orientation represents the entity's properties. The vector is sent to all possible parents in the network. For each possible parent, the capsule computes a prediction vector by multiplying its own output by a weight matrix. The parent whose output has the largest scalar product with the prediction vector strengthens its bond with the capsule, and the remaining parents weaken theirs. The author presents this routing-by-agreement method as superior to current mechanisms such as max-pooling (Pooling / Sub-sampling: Max, Mean). [What is a CapsNet or Capsule Network? | Debarko De](https://hackernoon.com/what-is-a-capsnet-or-capsule-network-2bfbe48769cc)
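
The routing step described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the reference implementation: the function names (`squash`, `route`), the toy weights, and the choice of three iterations are assumptions made for the example, and the `squash` nonlinearity is taken from the Dynamic Routing Between Capsules paper cited on this page.

```python
import math

def squash(s):
    """Rescale s so its length lies in [0, 1) while keeping its direction."""
    norm_sq = sum(x * x for x in s)
    if norm_sq == 0.0:
        return [0.0 for _ in s]
    scale = norm_sq / (1.0 + norm_sq) / math.sqrt(norm_sq)
    return [scale * x for x in s]

def matvec(W, u):
    """Multiply matrix W (list of rows) by vector u."""
    return [sum(w * x for w, x in zip(row, u)) for row in W]

def softmax(logits):
    m = max(logits)
    exps = [math.exp(b - m) for b in logits]
    total = sum(exps)
    return [e / total for e in exps]

def route(u, W, iterations=3):
    """u[i] is the output of child capsule i; W[i][j] maps child i to parent j.

    Returns the parent outputs v and the coupling coefficients c used to
    compute them in the final iteration.
    """
    n_child, n_parent = len(W), len(W[0])
    # Prediction vectors: child i's guess at the output of parent j.
    u_hat = [[matvec(W[i][j], u[i]) for j in range(n_parent)]
             for i in range(n_child)]
    # Routing logits start at zero, so every parent is equally likely at first.
    b = [[0.0] * n_parent for _ in range(n_child)]
    for _ in range(iterations):
        c = [softmax(row) for row in b]
        v = []
        for j in range(n_parent):
            dim = len(u_hat[0][j])
            s = [sum(c[i][j] * u_hat[i][j][d] for i in range(n_child))
                 for d in range(dim)]
            v.append(squash(s))
        # Agreement: scalar product of each prediction with the parent output.
        for i in range(n_child):
            for j in range(n_parent):
                b[i][j] += sum(p * q for p, q in zip(u_hat[i][j], v[j]))
    return v, c

# Toy setup (hypothetical weights): both children predict the same vector
# for parent 0 and opposite vectors for parent 1.
u = [[1.0, 0.0], [0.0, 1.0]]
W = [
    [[[1.0, 0.0], [1.0, 0.0]], [[1.0, 0.0], [0.0, 1.0]]],   # child 0
    [[[0.0, 1.0], [0.0, 1.0]], [[0.0, -1.0], [0.0, 0.0]]],  # child 1
]
v, c = route(u, W)
```

In this toy setup the predictions for parent 0 agree, so each child's coupling to parent 0 grows above one half, while the conflicting predictions for parent 1 cancel and leave that parent inactive.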

A capsule is a group of neurons whose activity vector represents the instantiation parameters of a specific type of entity such as an object or an object part. We use the length of the activity vector to represent the probability that the entity exists and its orientation to represent the instantiation parameters. Active capsules at one level make predictions, via transformation matrices, for the instantiation parameters of higher-level capsules. When multiple predictions agree, a higher-level capsule becomes active. We show that a discriminatively trained, multi-layer capsule system achieves state-of-the-art performance on MNIST and is considerably better than a convolutional net at recognizing highly overlapping digits. To achieve these results we use an iterative routing-by-agreement mechanism: a lower-level capsule prefers to send its output to higher-level capsules whose activity vectors have a big scalar product with the prediction coming from the lower-level capsule. [Dynamic Routing Between Capsules | Sara Sabour, Nicholas Frosst, Geoffrey E Hinton - Google Brain](https://arxiv.org/abs/1710.09829)
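
To make "length as probability" concrete, here is the squashing nonlinearity from the cited paper with a short worked example. The symbols $s$ (a capsule's total input) and $v$ (its output) follow the paper's notation and do not appear in the text above.

$$
v = \frac{\lVert s \rVert^{2}}{1 + \lVert s \rVert^{2}} \, \frac{s}{\lVert s \rVert},
\qquad
\lVert v \rVert = \frac{\lVert s \rVert^{2}}{1 + \lVert s \rVert^{2}}
$$

$$
\lVert s \rVert = 1 \;\Rightarrow\; \lVert v \rVert = \tfrac{1}{2},
\qquad
\lVert s \rVert = 3 \;\Rightarrow\; \lVert v \rVert = \tfrac{9}{10}
$$

The direction of $s$ is unchanged, so the orientation still carries the instantiation parameters, while short inputs shrink toward length 0 and long inputs approach length 1.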