Difference between revisions of "Gradient Descent Optimization & Challenges"

m |

m (Text replacement - "http:" to "https:") |

||

| Line 6: | Line 6: | ||

}} | }} | ||

| − | [ | + | [https://www.youtube.com/results?search_query=Gradient+Descent+Optimization+Challenges YouTube search...] |

| − | [ | + | [https://www.google.com/search?q=Gradient+Descent+Optimization+Challenges+deep+machine+learning+ML+artificial+intelligence ...Google search] |

* Gradient [[Boosting]] Algorithms | * Gradient [[Boosting]] Algorithms | ||

| Line 13: | Line 13: | ||

* [[Objective vs. Cost vs. Loss vs. Error Function]] | * [[Objective vs. Cost vs. Loss vs. Error Function]] | ||

* [[Topology and Weight Evolving Artificial Neural Network (TWEANN)]] | * [[Topology and Weight Evolving Artificial Neural Network (TWEANN)]] | ||

| − | * [ | + | * [https://www.unite.ai/what-is-gradient-descent/ What is Gradient Descent? | Daniel Nelson - Unite.ai] |

* [[Other Challenges]] in Artificial Intelligence | * [[Other Challenges]] in Artificial Intelligence | ||

* [[Average-Stochastic Gradient Descent (SGD) Weight-Dropped LSTM (AWD-LSTM)]] | * [[Average-Stochastic Gradient Descent (SGD) Weight-Dropped LSTM (AWD-LSTM)]] | ||

| − | + | https://cdn-images-1.medium.com/max/800/1*NRCWfdXa7b-ak2nBtmwRvw.png | |

| − | Gradient descent is an optimization algorithm used to minimize some function by iteratively moving in the direction of steepest descent as defined by the negative of the gradient. In machine learning, we use gradient descent to update the parameters of our model. Parameters refer to coefficients in Linear Regression and weights in neural networks. [ | + | Gradient descent is an optimization algorithm used to minimize some function by iteratively moving in the direction of steepest descent as defined by the negative of the gradient. In machine learning, we use gradient descent to update the parameters of our model. Parameters refer to coefficients in Linear Regression and weights in neural networks. [https://ml-cheatsheet.readthedocs.io/en/latest/gradient_descent.html Gradient Descent | ML Cheatsheet] |

Gradient Descent is the most common optimization algorithm in machine learning and deep learning. It is a first-order optimization algorithm. This means it only takes into account the first <b>derivative</b> when performing the updates on the parameters. On each iteration, we update the parameters in the opposite direction of the gradient of the objective function J(w) w.r.t the parameters where the gradient gives the direction of the steepest ascent. [https://towardsdatascience.com/gradient-descent-algorithm-and-its-variants-10f652806a3 Gradient Descent Algorithm and Its Variants | Imad Dabbura - Towards Data Science] | Gradient Descent is the most common optimization algorithm in machine learning and deep learning. It is a first-order optimization algorithm. This means it only takes into account the first <b>derivative</b> when performing the updates on the parameters. On each iteration, we update the parameters in the opposite direction of the gradient of the objective function J(w) w.r.t the parameters where the gradient gives the direction of the steepest ascent. [https://towardsdatascience.com/gradient-descent-algorithm-and-its-variants-10f652806a3 Gradient Descent Algorithm and Its Variants | Imad Dabbura - Towards Data Science] | ||

| − | Nonlinear [[Regression]] algorithms, which fit curves that are not linear in their parameters to data, are a little more complicated, because, unlike linear [[Regression]] problems, they can’t be solved with a deterministic method. Instead, the nonlinear [[Regression]] algorithms implement some kind of iterative minimization process, often some variation on the method of steepest descent. Steepest descent basically computes the squared error and its gradient at the current parameter values, picks a step size (aka learning rate), follows the direction of the gradient “down the hill,” and then recomputes the squared error and its gradient at the new parameter values. Eventually, with luck, the process converges. The variants on steepest descent try to improve the convergence properties. Machine learning algorithms are even less straightforward than nonlinear [[Regression]], partly because machine learning dispenses with the constraint of fitting to a specific mathematical function, such as a polynomial. There are two major categories of problems that are often solved by machine learning: [[Regression]] and classification. [[Regression]] is for numeric data (e.g. What is the likely income for someone with a given address and profession?) and classification is for non-numeric data (e.g. Will the applicant default on this loan?). [ | + | Nonlinear [[Regression]] algorithms, which fit curves that are not linear in their parameters to data, are a little more complicated, because, unlike linear [[Regression]] problems, they can’t be solved with a deterministic method. Instead, the nonlinear [[Regression]] algorithms implement some kind of iterative minimization process, often some variation on the method of steepest descent. 
Steepest descent basically computes the squared error and its gradient at the current parameter values, picks a step size (aka learning rate), follows the direction of the gradient “down the hill,” and then recomputes the squared error and its gradient at the new parameter values. Eventually, with luck, the process converges. The variants on steepest descent try to improve the convergence properties. Machine learning algorithms are even less straightforward than nonlinear [[Regression]], partly because machine learning dispenses with the constraint of fitting to a specific mathematical function, such as a polynomial. There are two major categories of problems that are often solved by machine learning: [[Regression]] and classification. [[Regression]] is for numeric data (e.g. What is the likely income for someone with a given address and profession?) and classification is for non-numeric data (e.g. Will the applicant default on this loan?). [https://www.infoworld.com/article/3394399/machine-learning-algorithms-explained.html Machine learning algorithms explained | Martin Heller - InfoWorld] |

= Gradient Descent - Stochastic (SGD), Batch (BGD) & Mini-Batch = | = Gradient Descent - Stochastic (SGD), Batch (BGD) & Mini-Batch = | ||

| − | [ | + | [https://www.youtube.com/results?search_query=gradient+descent+sgd+bgd+mini+batch+Optimization+neural+network+deep+machine+learning Youtube search...] |

| − | [ | + | [https://www.google.com/search?q=gradient+descent+sgd+bgd+mini+batch+Optimization+neural+network+deep+machine+learning ...Google search] |

<youtube>W9iWNJNFzQI</youtube> | <youtube>W9iWNJNFzQI</youtube> | ||

| Line 37: | Line 37: | ||

== <span id="Learning Rate Decay"></span>Learning Rate Decay == | == <span id="Learning Rate Decay"></span>Learning Rate Decay == | ||

| − | [ | + | [https://www.youtube.com/results?search_query=Learning+Rate+DecayGradient+Descent+deep+machine+learning YouTube search...] |

| − | [ | + | [https://www.google.com/search?q=Learning+Rate+DecayGradient+Descent+deep+machine+learning ...Google search] |

| − | Adapting the learning rate for your stochastic gradient descent optimization procedure can increase performance and reduce training time. Sometimes this is called learning rate annealing or adaptive learning rates. The simplest and perhaps most used adaptation of learning rate during training are techniques that reduce the learning rate over time. These have the benefit of making large changes at the beginning of the training procedure when larger learning rate values are used, and decreasing the learning rate such that a smaller rate and therefore smaller training updates are made to weights later in the training procedure. This has the effect of quickly learning good weights early and fine tuning them later. [ | + | Adapting the learning rate for your stochastic gradient descent optimization procedure can increase performance and reduce training time. Sometimes this is called learning rate annealing or adaptive learning rates. The simplest and perhaps most used adaptation of learning rate during training are techniques that reduce the learning rate over time. These have the benefit of making large changes at the beginning of the training procedure when larger learning rate values are used, and decreasing the learning rate such that a smaller rate and therefore smaller training updates are made to weights later in the training procedure. This has the effect of quickly learning good weights early and fine tuning them later. [https://medium.com/cracking-the-data-science-interview/the-10-deep-learning-methods-ai-practitioners-need-to-apply-885259f402c1 The 10 Deep Learning Methods AI Practitioners Need to Apply | James Le] |

Two popular and easy to use learning rate decay are as follows: | Two popular and easy to use learning rate decay are as follows: | ||

| Line 46: | Line 46: | ||

* Decrease the learning rate using punctuated large drops at specific epochs. | * Decrease the learning rate using punctuated large drops at specific epochs. | ||

| − | + | https://miro.medium.com/max/500/1*jzIVDhOzzrHV9m8cEsTiSA.jpeg | |

| Line 55: | Line 55: | ||

<youtube>QzulmoOg2JE</youtube> | <youtube>QzulmoOg2JE</youtube> | ||

<b>Learning Rate Decay (C2W2L09) | <b>Learning Rate Decay (C2W2L09) | ||

| − | </b><br>Deeplearning.ai [[Creatives#Andrew Ng|Andrew Ng]] Take the Deep Learning Specialization: | + | </b><br>Deeplearning.ai [[Creatives#Andrew Ng|Andrew Ng]] Take the Deep Learning Specialization: https://bit.ly/2Tx5XGn Check out all our courses: https://www.deeplearning.ai Subscribe to The Batch, our weekly newsletter: https://www.deeplearning.ai/thebatch |

|} | |} | ||

|<!-- M --> | |<!-- M --> | ||

| Line 63: | Line 63: | ||

<youtube>jWT-AX9677k</youtube> | <youtube>jWT-AX9677k</youtube> | ||

<b>Learning Rate in a Neural Network explained | <b>Learning Rate in a Neural Network explained | ||

| − | </b><br>In this video, we explain the concept of the learning rate used during training of an artificial neural network and also show how to specify the learning rate in code with [[Keras]]. Hey, we're Chris and Mandy, the creators of deeplizard! | + | </b><br>In this video, we explain the concept of the learning rate used during training of an artificial neural network and also show how to specify the learning rate in code with [[Keras]]. Hey, we're Chris and Mandy, the creators of deeplizard! https://youtube.com/deeplizardvlog |

|} | |} | ||

|}<!-- B --> | |}<!-- B --> | ||

== <span id="Gradient Descent With Momentum"></span>Gradient Descent With Momentum == | == <span id="Gradient Descent With Momentum"></span>Gradient Descent With Momentum == | ||

| − | [ | + | [https://www.youtube.com/results?search_query=Momentum+Gradient+Descent+deep+machine YouTube search...] |

| − | [ | + | [https://www.google.com/search?q=Momentum+Gradient+Descent+deep+machine ...Google search] |

| − | helps accelerate gradients vectors in the right directions, thus leading to faster converging. It is one of the most popular optimization algorithms and many state-of-the-art models are trained using it... With Stochastic Gradient Descent we don’t compute the exact derivate of our loss function. Instead, we’re estimating it on a small batch. Which means we’re not always going in the optimal direction, because our derivatives are ‘noisy’. Just like in my graphs above. So, exponentially weighed averages can provide us a better estimate which is closer to the actual derivate than our noisy calculations. This is one reason why momentum might work better than classic SGD. The other reason lies in ravines. Ravine is an area, where the surface curves much more steeply in one dimension than in another. Ravines are common near local minimas in deep learning and SGD has troubles navigating them. SGD will tend to oscillate across the narrow ravine since the negative gradient will point down one of the steep sides rather than along the ravine towards the optimum. Momentum helps accelerate gradients in the right direction. This is expressed in the following pictures: [ | + | helps accelerate gradients vectors in the right directions, thus leading to faster converging. It is one of the most popular optimization algorithms and many state-of-the-art models are trained using it... With Stochastic Gradient Descent we don’t compute the exact derivate of our loss function. Instead, we’re estimating it on a small batch. Which means we’re not always going in the optimal direction, because our derivatives are ‘noisy’. Just like in my graphs above. So, exponentially weighed averages can provide us a better estimate which is closer to the actual derivate than our noisy calculations. This is one reason why momentum might work better than classic SGD. The other reason lies in ravines. Ravine is an area, where the surface curves much more steeply in one dimension than in another. 
Ravines are common near local minimas in deep learning and SGD has troubles navigating them. SGD will tend to oscillate across the narrow ravine since the negative gradient will point down one of the steep sides rather than along the ravine towards the optimum. Momentum helps accelerate gradients in the right direction. This is expressed in the following pictures: [https://towardsdatascience.com/stochastic-gradient-descent-with-momentum-a84097641a5d Stochastic Gradient Descent with momentum | Vitaly Bushaev - Towards Data Science] |

| − | + | https://miro.medium.com/max/410/1*JHYIDkzf1ImuZK487q_kiw.gif https://miro.medium.com/max/410/1*uTiP1uRl2CaHaA-dFu3NKw.gif | |

{|<!-- T --> | {|<!-- T --> | ||

| Line 81: | Line 81: | ||

<youtube>k8fTYJPd3_I</youtube> | <youtube>k8fTYJPd3_I</youtube> | ||

<b>Gradient Descent With Momentum (C2W2L06) | <b>Gradient Descent With Momentum (C2W2L06) | ||

| − | </b><br>Deeplearning.ai [[Creatives#Andrew Ng|Andrew Ng]] Take the Deep Learning Specialization: | + | </b><br>Deeplearning.ai [[Creatives#Andrew Ng|Andrew Ng]] Take the Deep Learning Specialization: https://bit.ly/2Tx5XGn Check out all our courses: https://www.deeplearning.ai Subscribe to The Batch, our weekly newsletter: https://www.deeplearning.ai/thebatch |

|} | |} | ||

|<!-- M --> | |<!-- M --> | ||

| Line 89: | Line 89: | ||

<youtube>wrEcHhoJxjM</youtube> | <youtube>wrEcHhoJxjM</youtube> | ||

<b>23. Accelerating Gradient Descent (Use Momentum) | <b>23. Accelerating Gradient Descent (Use Momentum) | ||

| − | </b><br>MIT 18.065 Matrix Methods in Data Analysis, Signal Processing, and Machine Learning, Spring 2018 Instructor: Gilbert Strang In this lecture, Professor Strang explains both momentum-based gradient descent and Nesterov's accelerated gradient descent. View the complete course: | + | </b><br>MIT 18.065 Matrix Methods in Data Analysis, Signal Processing, and Machine Learning, Spring 2018 Instructor: Gilbert Strang In this lecture, Professor Strang explains both momentum-based gradient descent and Nesterov's accelerated gradient descent. View the complete course: https://ocw.mit.edu/18-065S18 |

|} | |} | ||

|}<!-- B --> | |}<!-- B --> | ||

= Vanishing & Exploding Gradients Problems = | = Vanishing & Exploding Gradients Problems = | ||

| − | [ | + | [https://www.youtube.com/results?search_query=vanishing+Exploding+gradient+problem+in+neural+networks+deep+machine+learning YouTube Search] |

| − | [ | + | [https://www.google.com/search?q=vanishing+Exploding+gradient+problem+in+neural+networks+deep+machine+learning ...Google search] |

| − | * [ | + | * [https://en.wikipedia.org/wiki/Vanishing_gradient_problem Vanishing gradient problem] |

<youtube>qhXZsFVxGKo</youtube> | <youtube>qhXZsFVxGKo</youtube> | ||

| Line 105: | Line 105: | ||

== Vanishing & Exploding Gradients Challenges with Long Short-Term Memory (LSTM) and Recurrent Neural Networks (RNN) == | == Vanishing & Exploding Gradients Challenges with Long Short-Term Memory (LSTM) and Recurrent Neural Networks (RNN) == | ||

| − | [ | + | [https://www.youtube.com/results?search_query=Difficult+to+Train+an+RNN+GRU+LSTM+vanishing+deep+machine+learning YouTube Search] |

| − | [ | + | [https://www.google.com/search?q=Difficult+to+Train+an+RNN+GRU+LSTM+vanishing+deep+machine+learning ...Google search] |

* [[Recurrent Neural Network (RNN)]] | * [[Recurrent Neural Network (RNN)]] | ||

<youtube>2GNbIKTKCfE</youtube> | <youtube>2GNbIKTKCfE</youtube> | ||

Revision as of 17:05, 28 March 2023

YouTube search... ...Google search

- Gradient Boosting Algorithms

- Backpropagation ...Gradient Descent Optimization & Challenges ...Feed Forward Neural Network (FF or FFNN) ...Forward-Forward

- Objective vs. Cost vs. Loss vs. Error Function

- Topology and Weight Evolving Artificial Neural Network (TWEANN)

- What is Gradient Descent? | Daniel Nelson - Unite.ai

- Other Challenges in Artificial Intelligence

- Average-Stochastic Gradient Descent (SGD) Weight-Dropped LSTM (AWD-LSTM)

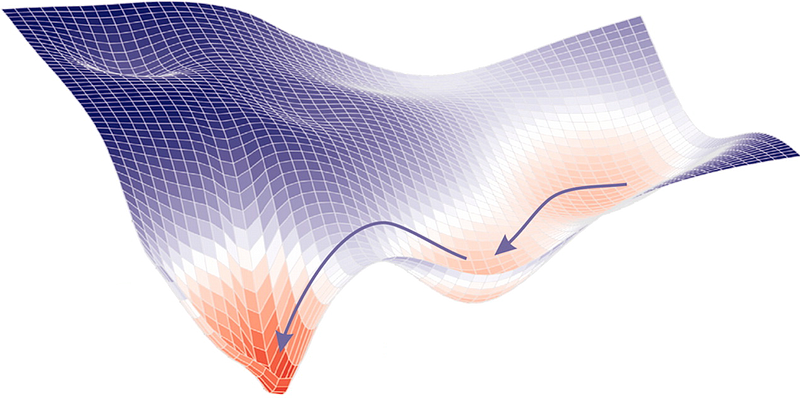

Gradient descent is an optimization algorithm used to minimize some function by iteratively moving in the direction of steepest descent as defined by the negative of the gradient. In machine learning, we use gradient descent to update the parameters of our model. Parameters refer to coefficients in Linear Regression and weights in neural networks. Gradient Descent | ML Cheatsheet

Gradient descent is the most common optimization algorithm in machine learning and deep learning. It is a first-order optimization algorithm, meaning it uses only the first derivative when updating the parameters. On each iteration, we update the parameters in the direction opposite to the gradient of the objective function J(w) with respect to the parameters, because the gradient points in the direction of steepest ascent. Gradient Descent Algorithm and Its Variants | Imad Dabbura - Towards Data Science
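The update rule described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the objective J(w) = (w - 3)^2, the function name `gradient_descent`, and the learning rate of 0.1 are all assumptions chosen for the example.

```python
def gradient_descent(grad, w0, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient, starting from w0."""
    w = w0
    for _ in range(steps):
        # The gradient points uphill, so subtract it to move downhill.
        w = w - learning_rate * grad(w)
    return w


# Hypothetical objective J(w) = (w - 3)^2, whose gradient is 2 * (w - 3).
w_min = gradient_descent(lambda w: 2.0 * (w - 3.0), w0=0.0)
print(w_min)  # ends up very close to 3.0, the minimizer of J
```

With this learning rate each step shrinks the distance to the minimum by a constant factor; a rate that is too large for the curvature of J would make the iterates diverge instead.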

Nonlinear Regression algorithms, which fit curves that are not linear in their parameters to data, are a little more complicated because, unlike linear Regression problems, they generally cannot be solved in closed form. Instead, nonlinear Regression algorithms implement some kind of iterative minimization process, often a variation on the method of steepest descent.

Steepest descent computes the squared error and its gradient at the current parameter values, picks a step size (aka learning rate), moves against the gradient (“down the hill”), and then recomputes the squared error and its gradient at the new parameter values. Eventually, with luck, the process converges. The variants of steepest descent try to improve its convergence properties.

Machine learning algorithms are even less straightforward than nonlinear Regression, partly because machine learning dispenses with the constraint of fitting to a specific mathematical function, such as a polynomial. Two major categories of problems are often solved by machine learning: Regression and classification. Regression predicts numeric values (e.g. What is the likely income for someone with a given address and profession?), and classification predicts non-numeric categories (e.g. Will the applicant default on this loan?). Machine learning algorithms explained | Martin Heller - InfoWorld
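As a rough sketch of the steepest-descent loop for a nonlinear fit, the following Python fits the single parameter b of the curve y = exp(b * x) by minimizing the summed squared error. The model, the synthetic data, the function name `fit_exponential_rate`, and the step size are assumptions made for illustration; they do not come from the cited article.

```python
import math


def fit_exponential_rate(xs, ys, b=0.0, learning_rate=0.01, steps=500):
    """Fit y = exp(b * x) by steepest descent on the summed squared error."""
    for _ in range(steps):
        grad = 0.0
        for x, y in zip(xs, ys):
            pred = math.exp(b * x)
            # d/db (pred - y)^2 = 2 * (pred - y) * x * pred
            grad += 2.0 * (pred - y) * x * pred
        # Step against the gradient, scaled by the learning rate.
        b -= learning_rate * grad
    return b


# Synthetic, noise-free data generated with a true rate of 0.5.
xs = [0.0, 0.5, 1.0, 1.5, 2.0]
ys = [math.exp(0.5 * x) for x in xs]
b_hat = fit_exponential_rate(xs, ys)
print(b_hat)  # approaches the true rate used to generate the data
```

The fixed step size works here only because the data are clean and the starting point is reasonable; this is the "with luck" part of the description, and it is what the variants of steepest descent aim to make more robust.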

Gradient Descent - Stochastic (SGD), Batch (BGD) & Mini-Batch

YouTube search... ...Google search

Learning Rate Decay

YouTube search... ...Google search

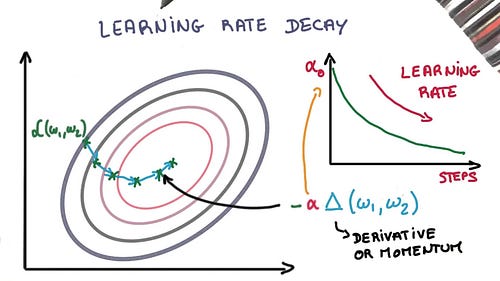

Adapting the learning rate for your stochastic gradient descent optimization procedure can increase performance and reduce training time. This is sometimes called learning rate annealing or adaptive learning rates. The simplest and perhaps most widely used adaptations are techniques that reduce the learning rate over time. They make large updates at the beginning of training, when the learning rate is high, and smaller updates to the weights later in training, as the rate decreases. The effect is to learn good weights quickly early on and fine-tune them later. The 10 Deep Learning Methods AI Practitioners Need to Apply | James Le

Two popular and easy-to-use learning rate decay schedules are as follows:

- Decrease the learning rate gradually based on the epoch.

- Decrease the learning rate using punctuated large drops at specific epochs.
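The two schedules listed above can be sketched as plain Python functions. The formulas shown (a time-based decay of the form lr0 / (1 + decay * epoch), and a step decay that multiplies the rate by a fixed factor every few epochs) are common choices assumed for this example; the function names and parameter values are hypothetical.

```python
def time_based_decay(initial_lr, decay, epoch):
    """Gradual decay: the rate shrinks a little with every epoch."""
    return initial_lr / (1.0 + decay * epoch)


def step_decay(initial_lr, drop, epochs_per_drop, epoch):
    """Punctuated decay: the rate is multiplied by `drop` every `epochs_per_drop` epochs."""
    return initial_lr * drop ** (epoch // epochs_per_drop)


for epoch in (0, 10, 20, 30):
    gradual = time_based_decay(0.1, decay=0.01, epoch=epoch)
    stepped = step_decay(0.1, drop=0.5, epochs_per_drop=10, epoch=epoch)
    print(epoch, gradual, stepped)
```

The gradual schedule changes the rate smoothly, while the step schedule holds it constant between drops, which can make it easier to see which phase of training a given result came from.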


Gradient Descent With Momentum

YouTube search... ...Google search

Momentum helps accelerate gradient vectors in the right directions, thus leading to faster convergence. It is one of the most popular optimization algorithms, and many state-of-the-art models are trained using it... With Stochastic Gradient Descent we don’t compute the exact derivative of our loss function. Instead, we estimate it on a small batch, which means we are not always going in the optimal direction, because our derivatives are ‘noisy’. Exponentially weighted averages can therefore give us a better estimate, one closer to the actual derivative than our noisy calculations. This is one reason why momentum might work better than classic SGD. The other reason lies in ravines. A ravine is an area where the surface curves much more steeply in one dimension than in another. Ravines are common near local minima in deep learning, and SGD has trouble navigating them. SGD will tend to oscillate across the narrow ravine, since the negative gradient will point down one of the steep sides rather than along the ravine towards the optimum. Momentum helps accelerate gradients in the right direction. This is expressed in the following pictures: Stochastic Gradient Descent with momentum | Vitaly Bushaev - Towards Data Science
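The ravine behaviour can be reproduced on a toy problem. The sketch below compares plain gradient descent with momentum on the hypothetical loss 0.5 * (x^2 + 50 * y^2), which is fifty times steeper in y than in x. It uses the classic velocity form v = momentum * v + gradient, which differs from the exponentially weighted average described above only by a (1 - momentum) scaling of the step; the loss, starting point, and hyperparameters are assumptions for illustration, and exact gradients are used rather than noisy mini-batch estimates.

```python
def loss(x, y):
    """A ravine: the surface is 50 times steeper along y than along x."""
    return 0.5 * (x * x + 50.0 * y * y)


def grad(x, y):
    return x, 50.0 * y


def descend(momentum, learning_rate=0.035, steps=100):
    """Run gradient descent with the given momentum and return the final loss."""
    x, y = 10.0, 1.0
    vx, vy = 0.0, 0.0
    for _ in range(steps):
        gx, gy = grad(x, y)
        # Velocity accumulates past gradients; momentum=0.0 gives plain descent.
        vx = momentum * vx + gx
        vy = momentum * vy + gy
        x -= learning_rate * vx
        y -= learning_rate * vy
    return loss(x, y)


plain_loss = descend(momentum=0.0)
momentum_loss = descend(momentum=0.9)
print(plain_loss, momentum_loss)  # momentum reaches a lower loss in the same number of steps
```

Plain descent is limited by the steep y direction: the learning rate must stay small enough to avoid diverging there, so progress along the shallow x direction is slow. The accumulated velocity lets the momentum run cover more ground along x with the same learning rate.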


Vanishing & Exploding Gradients Problems

YouTube Search ...Google search

Vanishing & Exploding Gradients Challenges with Long Short-Term Memory (LSTM) and Recurrent Neural Networks (RNN)

YouTube Search ...Google search