Difference between revisions of "(Boosted) Decision Tree"

| Line 6: | Line 6: | ||

* [[Boosted Decision Tree Regression]] | * [[Boosted Decision Tree Regression]] | ||

* [http://xgboost.readthedocs.io/en/latest/model.html Introduction to Boosted Trees | XGBoost] | * [http://xgboost.readthedocs.io/en/latest/model.html Introduction to Boosted Trees | XGBoost] | ||

| − | |||

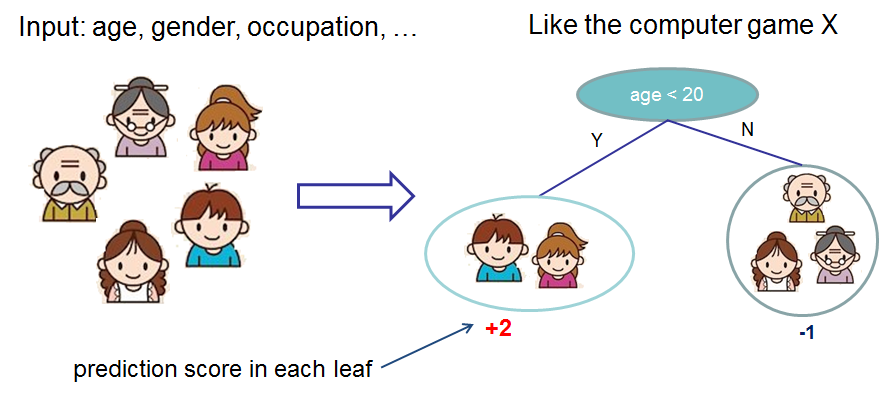

A boosted decision tree is an ensemble learning method in which the second tree corrects for the errors of the first tree, the third tree corrects for the errors of the first and second trees, and so forth. Predictions are made by the entire ensemble of trees together rather than by any single tree. For further technical details, see the Research section of this article. Generally, when properly configured, boosted decision trees are among the easiest methods with which to get top performance on a wide variety of machine learning tasks. However, they are also among the more memory-intensive learners, and the current implementation holds everything in memory. Therefore, a boosted decision tree model might not be able to process the very large datasets that some linear learners can handle. | A boosted decision tree is an ensemble learning method in which the second tree corrects for the errors of the first tree, the third tree corrects for the errors of the first and second trees, and so forth. Predictions are made by the entire ensemble of trees together rather than by any single tree. For further technical details, see the Research section of this article. Generally, when properly configured, boosted decision trees are among the easiest methods with which to get top performance on a wide variety of machine learning tasks. However, they are also among the more memory-intensive learners, and the current implementation holds everything in memory. Therefore, a boosted decision tree model might not be able to process the very large datasets that some linear learners can handle. | ||

| Line 16: | Line 15: | ||

<youtube>BXrrBWnuKlc</youtube> | <youtube>BXrrBWnuKlc</youtube> | ||

<youtube>gehNcYRXs4M</youtube> | <youtube>gehNcYRXs4M</youtube> | ||

| + | |||

| + | == Two-Class Boosted Decision Tree == | ||

| + | [http://www.youtube.com/results?search_query=Two-Class+boosted+decision+tree+artificial+intelligence YouTube search...] | ||

| + | |||

| + | * [http://docs.microsoft.com/en-us/azure/machine-learning/studio-module-reference/two-class-boosted-decision-tree Two-Class Boosted Decision Tree | Microsoft] | ||

| + | |||

| + | <youtube>Q1N3ZVe1jvY</youtube> | ||

| + | <youtube>8i1ZqVC-SHQ</youtube> | ||

| + | <youtube>6hJ2Xa5R1XE</youtube> | ||

| + | <youtube>LX9oYUDX1kM</youtube> | ||

Revision as of 19:40, 3 June 2018

A boosted decision tree is an ensemble learning method in which the second tree corrects for the errors of the first tree, the third tree corrects for the errors of the first and second trees, and so forth. Predictions are made by the entire ensemble of trees together rather than by any single tree. For further technical details, see the Research section of this article. Generally, when properly configured, boosted decision trees are among the easiest methods with which to get top performance on a wide variety of machine learning tasks. However, they are also among the more memory-intensive learners, and the current implementation holds everything in memory. Therefore, a boosted decision tree model might not be able to process the very large datasets that some linear learners can handle.
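
The idea that each new tree corrects the errors of the trees before it can be illustrated with a small sketch. This is not the implementation of any product or library mentioned on this page. It assumes a regression task with squared-error loss, a single input feature, and one-split trees ("stumps"). The names `fit_stump`, `fit_boosted`, `predict`, and the `learning_rate` parameter are hypothetical and chosen only for illustration.

```python
from statistics import mean

def fit_stump(xs, residuals):
    """Fit a one-split tree: pick the threshold that best predicts the residuals."""
    best = None
    # The largest x is skipped as a threshold because it would leave the right side empty.
    for t in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        lv, rv = mean(left), mean(right)
        err = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
        if best is None or err < best[0]:
            best = (err, t, lv, rv)
    _, t, lv, rv = best
    return t, lv, rv

def stump_predict(stump, x):
    t, lv, rv = stump
    return lv if x <= t else rv

def fit_boosted(xs, ys, n_trees=20, learning_rate=0.5):
    """Each new stump is trained on the errors left by all previous stumps."""
    base = mean(ys)                      # start from a constant prediction
    preds = [base] * len(xs)
    stumps = []
    for _ in range(n_trees):
        residuals = [y - p for y, p in zip(ys, preds)]   # current errors
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * stump_predict(stump, x)
                 for p, x in zip(preds, xs)]
    return base, learning_rate, stumps

def predict(model, x):
    """The prediction comes from the whole ensemble, not from a single tree."""
    base, lr, stumps = model
    return base + lr * sum(stump_predict(s, x) for s in stumps)

def mse(model, xs, ys):
    return mean((y - predict(model, x)) ** 2 for x, y in zip(xs, ys))

# Made-up toy data, for illustration only.
xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
ys = [1.0, 1.2, 0.9, 3.1, 3.0, 2.9, 5.2, 5.0]

for n in (0, 1, 20):
    print(n, "trees -> training MSE", round(mse(fit_boosted(xs, ys, n_trees=n), xs, ys), 4))
```

On this toy data the training error shrinks as trees are added, which is the "correcting earlier errors" behavior described above. The sketch also keeps the full dataset and every tree in memory, in line with the memory caveat in the paragraph.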

Two-Class Boosted Decision Tree