Word2Vec


<youtube>ERibwqs9p38</youtube>

Revision as of 23:14, 3 May 2020


- Natural Language Processing (NLP)

- Doc2Vec

- Node2Vec

- Skip-Gram

- Global Vectors for Word Representation (GloVe)

- Bag-of-Words (BoW)

- Continuous Bag-of-Words (CBoW)

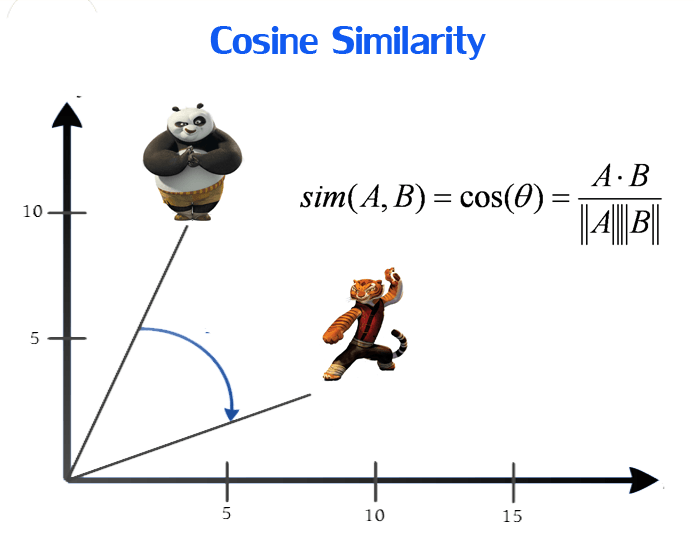

- Similarity

- Embedding Projector

- A Beginner's Guide to Word2Vec and Neural Word Embeddings | Chris Nicholson - A.I. Wiki pathmind

- Introduction to Word Embedding and Word2Vec | Dhruvil Karani - Towards Data Science - Medium

- [Distributed Representations of Words and Phrases and their Compositionality | Tomas Mikolov - Google](http://arxiv.org/pdf/1310.4546.pdf)

Word2Vec is a shallow, two-layer neural network that is trained to reconstruct the linguistic contexts of words. It takes a large corpus of text as input and produces a vector space, typically of several hundred dimensions, in which each unique word in the corpus is assigned a corresponding vector.
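The sketch below illustrates the idea in plain Python. It assumes the skip-gram variant with negative sampling described in the Mikolov et al. paper listed above: each word's input vector is trained to predict the words in a small window around it. The function names (`skipgram_pairs`, `train_sgns`, `cosine`) and the toy corpus are hypothetical. Several simplifications are assumptions on our part: negatives are drawn uniformly rather than from the paper's unigram^(3/4) distribution, and there is no frequent-word subsampling and no learning-rate decay. It shows the mechanics, not a production implementation.

```python
import math
import random


def skipgram_pairs(tokens, window=2):
    """Return (center, context) pairs: each word is paired with its neighbours within `window`."""
    pairs = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs


def sigmoid(x):
    # Clamp to avoid overflow in math.exp for large |x|.
    x = max(-30.0, min(30.0, x))
    return 1.0 / (1.0 + math.exp(-x))


def train_sgns(tokens, dim=20, window=2, negatives=3, epochs=100, lr=0.05, seed=0):
    """Hypothetical minimal skip-gram with negative sampling; returns {word: input vector}."""
    rng = random.Random(seed)
    vocab = sorted(set(tokens))
    # Input ("word") vectors start small and random; output ("context") vectors start at zero.
    w_in = {w: [rng.uniform(-0.5, 0.5) / dim for _ in range(dim)] for w in vocab}
    w_out = {w: [0.0] * dim for w in vocab}
    pairs = skipgram_pairs(tokens, window)
    for _ in range(epochs):
        rng.shuffle(pairs)
        for center, context in pairs:
            v = w_in[center]
            grad_v = [0.0] * dim
            # One positive example (label 1) plus uniformly sampled negatives (label 0).
            # Assumption: uniform sampling stands in for the paper's unigram^(3/4) distribution.
            negs = [rng.choice(vocab) for _ in range(negatives)]
            samples = [(context, 1.0)] + [(w, 0.0) for w in negs if w != context]
            for word, label in samples:
                u = w_out[word]
                score = sigmoid(sum(a * b for a, b in zip(v, u)))
                g = lr * (label - score)  # step along the gradient of the log-likelihood
                for k in range(dim):
                    grad_v[k] += g * u[k]  # accumulate the update for the input vector
                    u[k] += g * v[k]       # update the output vector immediately
            for k in range(dim):
                v[k] += grad_v[k]
    return w_in


def cosine(a, b):
    """Cosine similarity between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


# Toy corpus (hypothetical); real training uses a large corpus and a few hundred dimensions.
corpus = ("the king rules the kingdom . the queen rules the kingdom . "
          "the dog chases the cat . the cat chases the mouse .").split()
vectors = train_sgns(corpus, dim=20)
print(len(vectors), len(vectors["king"]))  # 10 20
print(round(cosine(vectors["king"], vectors["queen"]), 3))
```

Each of the 10 unique tokens gets its own 20-dimensional vector, matching the description above of one vector per unique word. Words that appear in similar contexts, such as "king" and "queen" here, tend to end up with higher cosine similarity. On a corpus this small the exact values are noisy.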